Streaming Aggregation

Transform incoming telemetry data into metrics to improve query performance, optimize storage costs, and manage high cardinality in Last9.

Streaming Aggregation transforms incoming telemetry data into metrics to improve query performance and optimize storage costs. It processes data before storage, making it particularly effective for managing high cardinality metrics in time series data while preserving valuable information.

For an in-depth explanation of high cardinality challenges and the underlying concepts, see our guide on Streaming Aggregation.

Common Use Cases

Reducing cardinality while preserving information

Problem: You have a metric with high-cardinality labels (pod, pod_name) alongside lower-cardinality labels (instance, country, service).

Solution: Create two streaming aggregations:

- One that drops high-cardinality labels but keeps lower-cardinality ones:

      - promql: "sum by (instance, country, service) (my_metric{}[2m])"
        as: my_metric_by_location_2m

- Another that preserves only essential high-cardinality information:

      - promql: "sum by (pod, pod_name, service) (my_metric{}[2m])"
        as: my_metric_by_pod_2m

This approach reduces cardinality while allowing you to correlate information between the two aggregated metrics.
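To make the cardinality effect concrete, here is a small Python sketch that counts distinct label combinations before and after each `sum by`. The label values and series counts are invented for illustration, and the counting is a conceptual model, not how Last9 computes cardinality.

```python
from itertools import product


def count_series(series, keep_labels):
    """Number of distinct label combinations left after `sum by (keep_labels)`."""
    return len({tuple(s[label] for label in keep_labels) for s in series})


# Hypothetical label values: 2 services, 10 pods per service,
# 5 countries and 3 instances observed for every pod.
services = ["api", "web"]
countries = ["in", "us", "de", "sg", "br"]
instances = ["i-1", "i-2", "i-3"]

raw = [
    {
        "service": svc,
        "pod": f"{svc}-pod-{n}",
        "pod_name": f"{svc}-pod-{n}",
        "country": country,
        "instance": inst,
    }
    for svc, n, country, inst in product(services, range(10), countries, instances)
]

all_labels = ["service", "pod", "pod_name", "country", "instance"]
raw_count = count_series(raw, all_labels)
by_location = count_series(raw, ["instance", "country", "service"])
by_pod = count_series(raw, ["pod", "pod_name", "service"])

print(f"raw={raw_count} by_location={by_location} by_pod={by_pod}")
```

In this made-up dataset the two aggregated metrics together hold far fewer series than the raw metric, and the shared `service` label is what lets you correlate them.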

Transforming Logs to Metrics (Last9 LogMetrics)

Last9 LogMetrics lets you create metrics from your log data, helping you extract key insights from log-based sources.

- Monitoring Service Error Rates: This creates a metric that shows how many errors each service generates every 5 minutes, allowing you to quickly identify problematic services.

  - Filter: level=error
  - Aggregate: count as _count
  - Group by: service
  - Timeslice: 5 minutes

- Tracking API Response Times: This creates metrics showing average response times per endpoint and status code, helping to identify slow endpoints or failed requests.

  - Filter: component=api
  - Aggregate: avg(response_time) as avg_response_time
  - Group by: endpoint, status_code
  - Timeslice: 1 minute

- Detecting Service Unavailability: By tracking the volume of logs from critical services, you can detect when a service may have gone down because its log volume drops significantly.

  - Filter: service in ("payment", "authentication", "database")
  - Aggregate: count as _count
  - Group by: service
  - Timeslice: 10 minutes
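The LogMetrics examples above share one shape: filter the records, group them, and aggregate per timeslice. The Python sketch below models that shape for the error-count case. The record fields (`timestamp`, `level`, `service`) and the helper names are hypothetical, and this is a conceptual illustration, not Last9's implementation.

```python
from collections import Counter
from datetime import datetime, timezone


def count_by_timeslice(logs, group_key, slice_seconds, predicate):
    """Count matching log records per (timeslice start, group value)."""
    counts = Counter()
    for record in logs:
        if not predicate(record):
            continue
        epoch = int(record["timestamp"].timestamp())
        # Align the record to the start of its timeslice.
        slice_start = epoch - epoch % slice_seconds
        counts[(slice_start, record[group_key])] += 1
    return counts


def ts(minute, second=0):
    """Helper for building sample timestamps on one invented day."""
    return datetime(2024, 1, 1, 12, minute, second, tzinfo=timezone.utc)


logs = [
    {"timestamp": ts(0, 10), "level": "error", "service": "payment"},
    {"timestamp": ts(1, 30), "level": "info", "service": "payment"},
    {"timestamp": ts(4, 59), "level": "error", "service": "payment"},
    {"timestamp": ts(5, 0), "level": "error", "service": "payment"},
    {"timestamp": ts(6, 0), "level": "error", "service": "authentication"},
]

# Filter: level=error, Aggregate: count, Group by: service, Timeslice: 5 minutes
error_counts = count_by_timeslice(
    logs, "service", 300, lambda r: r["level"] == "error"
)
for (slice_start, service), count in sorted(error_counts.items()):
    print(slice_start, service, count)
```

Each `(timeslice, service)` pair becomes one data point of the output metric, which is why a narrower filter or fewer group-by fields directly reduces the number of series produced.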

Rolling up data over time

Use streaming aggregation to roll up data over longer time windows:

      - promql: "sum by (service, endpoint) (api_calls_total{}[5m])"
        as: api_calls_total_5m

This creates a 5-minute rolled-up version of your metric, which is useful for longer-term trend analysis while reducing storage requirements.

Getting Started

Last9 offers two main approaches to set up streaming aggregations:

Option 1: Using the UI

- Navigate to Control Plane → Streaming Aggregation

- Click + NEW RULE to open the rule creation form

- Choose your Telemetry source:

  - Metrics: For metric-based aggregation
  - Events: For event-based aggregation
  - Logs: For creating metrics from logs (Last9 LogMetrics)

- For Metrics & Events:

  - Enter the metric name, resolution, and aggregation function
  - Choose labels to include (With) or exclude (Without)
  - Set the output metric name and rule name

- For Logs (Last9 LogMetrics):

  - Use the Builder or Editor interface to create your query
  - Set filter conditions, aggregate functions, and group by dimensions
  - Define your evaluation frequency (timeslice), which is inherited automatically from the query in Editor mode
  - Set the output metric name and rule name

  You can also create LogMetrics directly from Logs Explorer:

  - Create and run a query in Logs Explorer
  - Click the Create Metric button next to your visualization
  - This opens a pre-filled streaming aggregation form with your query
  - Verify the preview looks correct
  - Set the output metric name and rule name

- Click SAVE to activate your streaming aggregation rule

Option 2: GitOps Workflow

For teams who prefer infrastructure-as-code approaches:

-

Request enabling GitOps workflow

Please reach out to cs@last9.io, or on our shared Slack/Teams channel, to switch you over to the GitOps workflow for Streaming Aggregation.

-

Define Your Aggregation PromQL

Identify the specific metrics you want to aggregate. Using the Explore tab in embedded Grafana, create a PromQL query that defines how you want to aggregate your data.

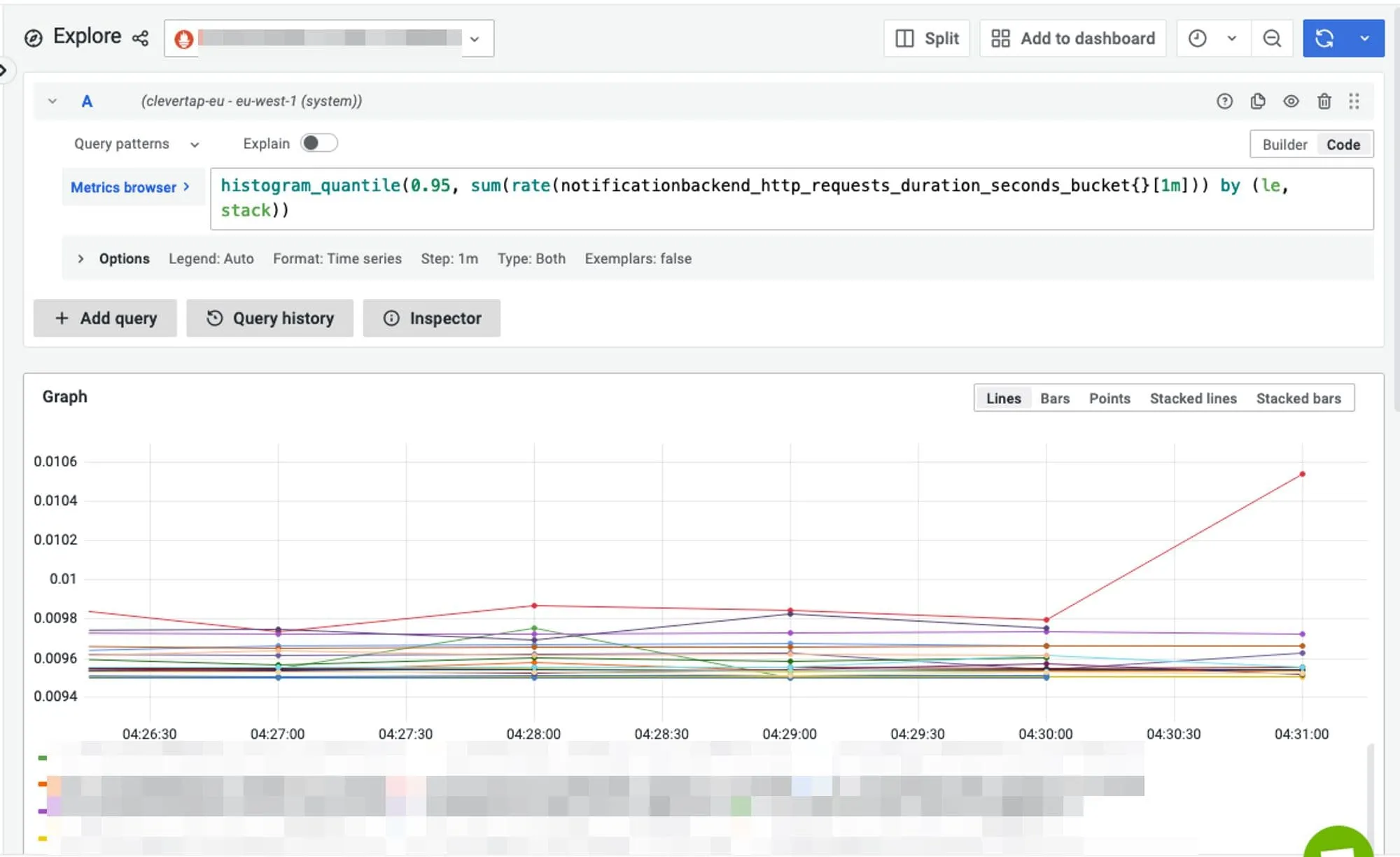

For example, to aggregate HTTP request durations by stack:

sum by (stack) (http_requests_duration_seconds_count{service="pushnotifs"}[1m])This query reduces cardinality by grouping data by the

stacklabel, making it more manageable and queryable. -

Configure the Aggregation Rule

Add your aggregation rule to the YAML file for your Last9 cluster. The basic syntax is:

- promql: 'sum by (stack, le) (http_requests_duration_seconds_bucket{service="pushnotifs"}[2m])'as: pushnotifs_http_requests_duration:2mThis configuration:

- Takes the metric

http_requests_duration_seconds_bucketfiltered for thepushnotifsservice - Aggregates it over a 2-minute window

- Groups by

stackandle(latency buckets) - Creates a new metric named

pushnotifs_http_requests_duration:2m

- Takes the metric

-

Deploy Using GitOps Workflow

- Create a Pull Request with your updated rules to the GitHub repository

- Wait for CI Tests to validate your streaming aggregation syntax

- Merge the Pull Request to activate the pipeline in Last9

- Query the New Metric in your Last9 cluster
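Before opening a Pull Request, it can help to run a quick local sanity check on your rules. The Python sketch below is a hypothetical pre-check, not the CI validation that Last9 runs. It assumes the rules have already been parsed into a list of dictionaries with `promql` and `as` keys, and the three checks it performs (both keys present, a range window such as `[2m]` in the query, unique output names) are assumptions drawn from the examples in this guide.

```python
import re

# Matches a range window such as [2m] or [30s]; an assumed convention
# based on the rule examples in this guide.
RANGE_WINDOW = re.compile(r"\[\d+[smhd]\]")


def check_rules(rules):
    """Return a list of human-readable problems found in parsed rule entries."""
    problems = []
    seen_names = set()
    for index, rule in enumerate(rules):
        promql = rule.get("promql")
        name = rule.get("as")
        if not promql:
            problems.append(f"rule {index}: missing 'promql'")
        elif not RANGE_WINDOW.search(promql):
            problems.append(f"rule {index}: no range window such as [2m]")
        if not name:
            problems.append(f"rule {index}: missing 'as'")
        elif name in seen_names:
            problems.append(f"rule {index}: duplicate output name '{name}'")
        else:
            seen_names.add(name)
    return problems


rules = [
    {
        "promql": 'sum by (stack, le) (http_requests_duration_seconds_bucket{service="pushnotifs"}[2m])',
        "as": "pushnotifs_http_requests_duration:2m",
    },
    {
        # Deliberately broken: no range window and a reused output name.
        "promql": "sum by (stack) (http_requests_duration_seconds_count)",
        "as": "pushnotifs_http_requests_duration:2m",
    },
]

problems = check_rules(rules)
for problem in problems:
    print(problem)
```

A check like this only catches obvious slips early; the CI tests on the repository remain the authority on whether a rule is valid.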

Histogram Aggregation for Percentiles

Histograms are essential for calculating accurate percentiles (like p95 latencies). When aggregating histogram metrics, you need to maintain their statistical integrity by properly handling all three components.

Histograms require three related metrics to function properly: <metric_name>_bucket, <metric_name>_sum, and <metric_name>_count.

To create a proper histogram using streaming aggregation, define all three components:

      - promql: 'sum2 by (stack, le) (http_requests_duration_seconds_bucket{service="pushnotifs"}[2m])'
        as: pushnotifs_http_requests_duration_seconds_bucket
      - promql: 'sum2 by (stack) (http_requests_duration_seconds_sum{service="pushnotifs"}[2m])'
        as: pushnotifs_http_requests_duration_seconds_sum
      - promql: 'sum2 by (stack) (http_requests_duration_seconds_count{service="pushnotifs"}[2m])'
        as: pushnotifs_http_requests_duration_seconds_count

You can then use histogram_quantile functions on the aggregated metrics.
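To show why the `le` label must survive aggregation, here is a simplified Python sketch of how a quantile is estimated from cumulative histogram buckets using linear interpolation inside the matching bucket. It follows the general approach of Prometheus-style `histogram_quantile`, but it is a reduced illustration with invented bucket counts, not the exact function Last9 or Prometheus runs.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets."""
    if not 0 <= q <= 1:
        raise ValueError("q must be between 0 and 1")
    buckets = sorted(buckets)
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0.0
    for upper, count in buckets:
        if count >= rank:
            if math.isinf(upper) or count == prev_count:
                # No finite upper bound (or an empty bucket) to interpolate in.
                return prev_bound
            fraction = (rank - prev_count) / (count - prev_count)
            return prev_bound + (upper - prev_bound) * fraction
        prev_bound, prev_count = upper, count
    return prev_bound


# Invented cumulative counts for one stack: (le, count)
buckets = [(0.1, 50), (0.5, 90), (1.0, 98), (math.inf, 100)]

p50 = histogram_quantile(0.50, buckets)
p95 = histogram_quantile(0.95, buckets)
p99 = histogram_quantile(0.99, buckets)
print(p50, p95, p99)
```

Without the per-`le` bucket counts there is nothing to interpolate between, which is why the `_bucket` rule groups by `le` while the `_sum` and `_count` rules do not.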

Supported Functions

For Metrics

The following aggregation functions are available for metric-based Streaming Aggregation:

- sum: The total of the sample values; use it for metric types other than counters

- max: The maximum value of the samples

- sum2: Like sum, but intended for counters and aware of counter resets
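The difference between a plain sum and a reset-aware one is easiest to see on a counter that restarts. The Python sketch below illustrates the general idea of reset awareness with invented sample values. It is not Last9's implementation of `sum2`, and the function names are hypothetical.

```python
def naive_delta(samples):
    """Last value minus first value; misleading when the counter resets."""
    return samples[-1] - samples[0]


def reset_aware_increase(samples):
    """Add up the growth between samples, treating any drop as a restart from zero."""
    increase = 0.0
    for prev, curr in zip(samples, samples[1:]):
        if curr >= prev:
            increase += curr - prev
        else:
            # The counter dropped, so assume the process restarted and
            # counted up from zero to the current value.
            increase += curr
    return increase


# Invented counter samples; the process restarted before the fourth sample.
samples = [100, 130, 160, 10, 40]

print(naive_delta(samples))
print(reset_aware_increase(samples))
```

The naive calculation reports a negative change across the restart, while the reset-aware version recovers the real growth, which is the behavior you want when aggregating counters.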

For Logs

For log-based aggregations, see the supported functions in the Logs Query Builder Aggregate Stage documentation. Note that only one aggregate function is allowed per query.

Troubleshooting

- Streaming Aggregation Not Appearing

  - Check that your rule was properly saved or that your PR was successfully merged
  - Verify the syntax of your PromQL or log query
  - Check Cardinality Explorer to ensure the cardinality is below the limit of 3M time series per hour
  - For LogMetrics, ensure your query is returning numerical data

- Incorrect Aggregation Results

  - For counters, ensure you’re using sum2 instead of sum
  - Check that the time window [Nm] is appropriate for your data frequency
  - Verify that your by clause or group by includes all necessary labels
  - For logs, make sure your filter conditions are correctly specified

- Performance Issues

  - Start with longer time windows like [5m] to reduce processing load
  - Limit the number of labels in your by clause
  - Consider creating multiple targeted aggregations instead of one large one
  - For log queries, add specific filters to narrow down the data being processed

Please get in touch with us on Discord or Email if you have any questions.