Remapping

Transform and standardize your logs and traces data by extracting and mapping fields for better searchability and analysis.

Remapping standardizes your logs and traces data by extracting fields and mapping them to consistent formats. Use it to normalize data across different services and sources so that your telemetry is easier to search and analyze.

Remapping consists of two primary functions:

- Extract: Pull specific fields or patterns from your log lines

- Map: Transform extracted fields into standardized formats

This capability is valuable for scenarios like:

- Normalizing different service names across your infrastructure

- Standardizing severity levels from various sources (ERROR, err, Fatal, 500)

- Creating consistent environment labels (prod, production, prd)

- Extracting structured data from JSON or pattern-based logs

- Maintaining consistent field naming conventions

Attribute Priority Order

When mapping multiple source attributes to a single target attribute, the order of preference is left to right. The system evaluates attributes in sequence and uses the first non-empty value found.

Example: Suppose you configure the Service mapping with three source attributes:

| Position | Source Attribute | Value |
|---|---|---|
| 1st (leftmost) | `attributes["service_name"]` | "" (empty) |
| 2nd | `attributes["app_name"]` | "checkout-api" |
| 3rd | `resource["service.name"]` | "checkout" |

Result: Service is set to "checkout-api" because it’s the first non-empty value when evaluated left to right.
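
The left-to-right evaluation can be sketched in a few lines of Python. This is an illustration only, not the product's implementation: it assumes a record is a dict with hypothetical `attributes` and `resource` sub-dicts, and it treats a missing key or an empty string as empty.

```python
from typing import Optional

# Each source is (scope, key), where scope is "attributes" or "resource".
# This record layout is a hypothetical stand-in for a real log record.
Source = tuple[str, str]


def resolve(record: dict, sources: list[Source]) -> Optional[str]:
    """Return the first non-empty value, evaluating sources left to right."""
    for scope, key in sources:
        value = record.get(scope, {}).get(key)
        if value not in (None, ""):  # skip missing and empty values
            return value
    return None  # no source had a value


record = {
    "attributes": {"service_name": "", "app_name": "checkout-api"},
    "resource": {"service.name": "checkout"},
}
service_sources = [
    ("attributes", "service_name"),
    ("attributes", "app_name"),
    ("resource", "service.name"),
]
print(resolve(record, service_sources))  # checkout-api
```

Moving `("resource", "service.name")` to the front of the list would make the result "checkout" instead, which is why the most reliable source belongs in the leftmost position.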

Working with Remapping

Extract

- Navigate to Control Plane > Remapping
- Select the “Extract” tab
- View existing extraction rules in the table, which shows:
  - Name: Descriptive name of the extraction rule
  - Method: JSON or Pattern Match extraction method
  - Scope: Which lines the extraction applies to
  - Fields/Pattern: Which fields or patterns to extract
  - Action: How the extracted data is handled (Upsert/Insert)
  - Active Since: When the rule was activated
- Click “+ NEW RULE” to create a new extraction rule

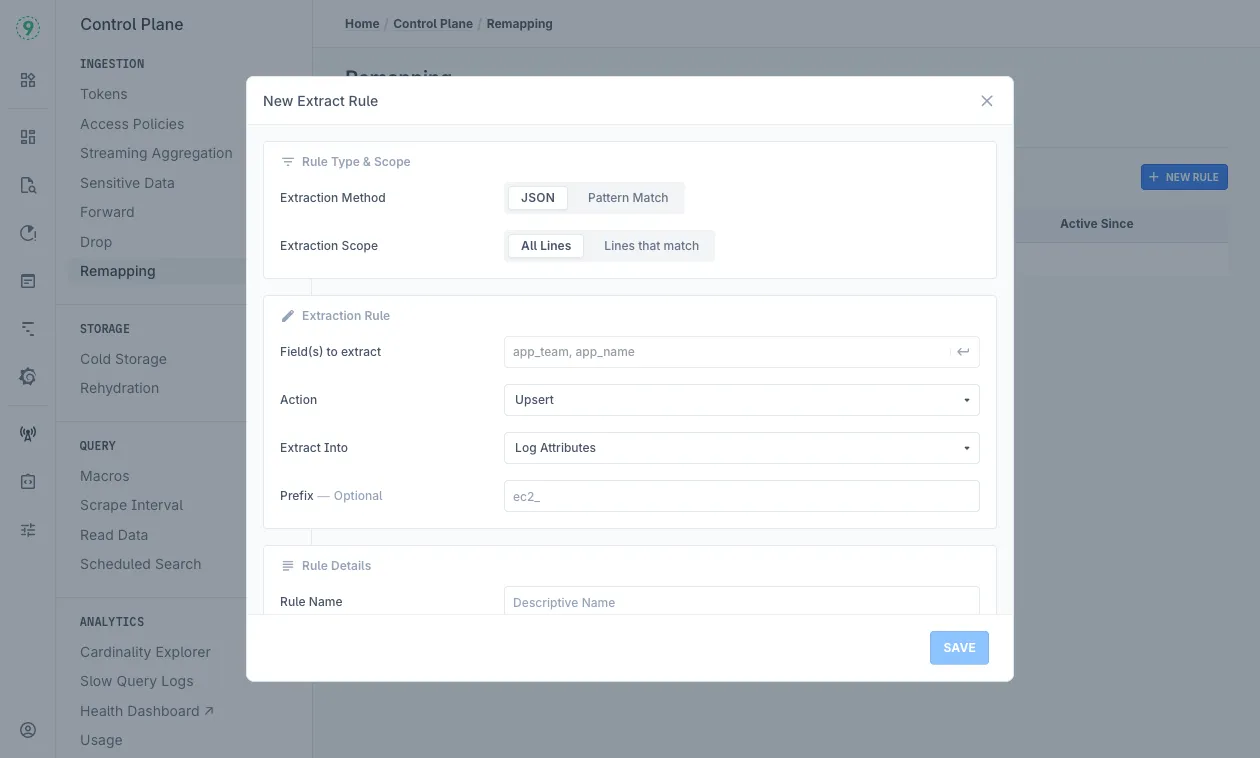

Creating a New Extraction Rule

- Select “Extraction Method”:
  - JSON: Extract fields from structured JSON logs
  - Pattern Match: Use regex patterns to extract fields from unstructured logs
- Choose “Extraction Scope”:
  - All Lines: Apply extraction to every log line
  - Lines that match: Apply only to lines matching specific criteria
- Field(s) to Extract:
  - For the JSON method:
    - Select the field(s) to extract
    - Example fields: requestId, thread_id, logger_name, etc.
  - For the Pattern Match method:
    - Enter the regex pattern in the “Pattern to Extract” field
    - Example: `timeseries:\s*(?P<timeseries>\d+)`
- Set “Action” to “Upsert” (update if exists, insert if not) or “Insert”
- Choose an “Extract Into” option:
  - Log Attributes: Adds fields to the log’s searchable attributes
  - Resource Attributes: Adds fields to the resource’s metadata
- Optionally add a “Prefix” to extracted field names
  - Example: “ec2_” would transform “id” to “ec2_id”
- Enter a descriptive “Rule Name”
- Click “SAVE” to activate the rule
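
The JSON and Pattern Match methods, the prefix, and the two actions can be approximated in Python as below. This is a hedged sketch: the function names are hypothetical, the record is a plain dict, and the behavior of “Insert” (add a field only when the key is not already present) is an assumption inferred from the “Upsert” description. The regex uses Python's `(?P<name>...)` named-group syntax, matching the example above; the product's regex dialect may differ in other details.

```python
import json
import re


def extract_json(line: str, fields: list[str]) -> dict:
    """JSON method: pull the selected top-level fields from a JSON log line."""
    try:
        doc = json.loads(line)
    except json.JSONDecodeError:
        return {}  # not JSON: nothing to extract
    if not isinstance(doc, dict):
        return {}  # valid JSON, but not an object with fields
    return {f: doc[f] for f in fields if f in doc}


def extract_pattern(line: str, pattern: str) -> dict:
    """Pattern Match method: each named group becomes a field name."""
    match = re.search(pattern, line)
    return match.groupdict() if match else {}


def apply_rule(target: dict, extracted: dict, action: str, prefix: str = "") -> dict:
    """Write extracted fields into a copy of target (e.g. log attributes).

    Assumption: "upsert" overwrites existing keys; "insert" only adds new keys.
    """
    out = dict(target)
    for key, value in extracted.items():
        name = prefix + key  # e.g. prefix "ec2_" turns "id" into "ec2_id"
        if action == "upsert" or name not in out:
            out[name] = value
    return out


line = "flush complete timeseries: 42"
fields = extract_pattern(line, r"timeseries:\s*(?P<timeseries>\d+)")
print(fields)  # {'timeseries': '42'}
print(apply_rule({"timeseries": "7"}, fields, action="insert"))  # {'timeseries': '7'}
print(apply_rule({}, extract_json('{"id": "i-123"}', ["id"]), action="upsert", prefix="ec2_"))
# {'ec2_id': 'i-123'}
```

Because the named group in the example pattern is called `timeseries`, the extracted field is called `timeseries` too; renaming the group renames the field.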

Map

- Navigate to Control Plane > Remapping
- Select the “Map” tab
- View the “Remap Fields” section with existing mappings
- Map common fields to standardized formats:
  - Service: Map various service names to consistent values
    - Example: `attributes["service_name"]`
  - Severity: Map different log levels to a standard severity
    - Example: `attributes["level"]` and `attributes["levelname"]`
  - Deployment Environment: Map environment indicators
    - Select from available attributes
- Preview the mapping results in the “Preview (Last 2 mins)” section below:
  - SERVICE: How service names appear after mapping
  - SEVERITY: Standardized severity levels
  - DEPLOYMENT ENV: Normalized environment names
  - LOG ATTRIBUTES: Other log details
  - RESOURCE ATTR: Resource-related information
- After configuring mappings, click “SAVE”
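
Conceptually, each standard field in the Map tab resolves from its own ordered list of source attributes, and the preview shows the result for each log line. The sketch below assumes a hypothetical `MAPPINGS` configuration and dict-shaped records; the source keys are examples taken from this page, not product defaults.

```python
from typing import Optional

# Hypothetical mapping config: each standard field lists its source
# attributes in priority order (leftmost first).
MAPPINGS = {
    "service": [("attributes", "service_name"), ("resource", "service.name")],
    "severity": [("attributes", "level"), ("attributes", "levelname")],
    "deployment_env": [("resource", "deployment.environment"), ("attributes", "env")],
}


def first_non_empty(record: dict, sources: list[tuple[str, str]]) -> Optional[str]:
    """Return the first non-empty value among the sources, left to right."""
    for scope, key in sources:
        value = record.get(scope, {}).get(key)
        if value not in (None, ""):
            return value
    return None


def preview_row(record: dict) -> dict:
    """Build one preview row: each standard field resolved from its sources."""
    return {field: first_non_empty(record, sources) for field, sources in MAPPINGS.items()}


record = {
    "attributes": {"levelname": "WARNING", "env": "prd"},
    "resource": {"service.name": "checkout"},
}
print(preview_row(record))
# {'service': 'checkout', 'severity': 'WARNING', 'deployment_env': 'prd'}
```

A `None` in the row corresponds to a field for which none of the configured sources had a value on that line, which is worth checking for in the preview.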

Example Use Cases

Logs

- Standardizing Service Names: Map various service identifiers to consistent names
  - Raw values: “auth-svc”, “auth_service”, “authentication”
  - Mapped to: “authentication-service”
- Normalizing Severity Levels: Create consistent severity levels across sources
  - Raw values: “ERROR”, “err”, “Fatal”, “500”
  - Mapped to: “ERROR”
- Extracting Thread Information: Pull thread details from logs for better filtering
  - Extract fields: thread_id, thread_name, thread_priority
  - Makes thread-based troubleshooting more efficient
- Environment Consistency: Standardize environment naming
  - Raw values: “dev”, “development”, “preprod”, “staging”
  - Mapped to consistent environment names
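
The value normalization in these examples amounts to a lookup table from raw values to one standard value. A minimal Python sketch follows; the table contents come from the examples above, while the case-insensitive matching and the pass-through for unknown values are assumptions about how you might want it to behave, not documented product behavior.

```python
# Hypothetical lookup tables built from the examples above. Keys are
# lowercased so that "ERROR", "Error", and "error" all match.
SEVERITY_MAP = {"error": "ERROR", "err": "ERROR", "fatal": "ERROR", "500": "ERROR"}
SERVICE_MAP = {
    "auth-svc": "authentication-service",
    "auth_service": "authentication-service",
    "authentication": "authentication-service",
}


def normalize(value: str, table: dict[str, str]) -> str:
    """Return the standardized value, or the original value if no entry matches."""
    return table.get(value.strip().lower(), value)


for raw in ["ERROR", "err", "Fatal", "500", "INFO"]:
    print(raw, "->", normalize(raw, SEVERITY_MAP))
print(normalize("auth_service", SERVICE_MAP))  # authentication-service
```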

Traces

- Service Name Standardization: Ensure consistent service names across spans
  - Source attributes: `resource["service.name"]`, `attributes["service"]`
  - Map to standardized service names for cleaner service maps
- Deployment Environment: Tag traces with environment information
  - Source attributes: `resource["deployment.environment"]`, `attributes["env"]`
  - Standardize to: “production”, “staging”, “development”
- Span Operation Normalization: Consistent operation naming across services
  - Different frameworks may use varying conventions for span names
  - Map to consistent operation names for easier filtering in Traces Explorer
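
For traces, the two steps combine: pick the environment from the first non-empty source attribute on the span, then standardize the value. A minimal sketch, assuming dict-shaped spans and a hypothetical `ENV_MAP` that maps variants mentioned on this page (such as “prod”, “prd”, “dev”) to the three standard names; unknown values pass through unchanged.

```python
from typing import Optional

# Hypothetical span layout: a dict with "resource" and "attributes" sub-dicts.
ENV_SOURCES = [("resource", "deployment.environment"), ("attributes", "env")]
ENV_MAP = {
    "prod": "production", "production": "production", "prd": "production",
    "staging": "staging",
    "dev": "development", "development": "development",
}


def span_environment(span: dict) -> Optional[str]:
    """Resolve the span's environment (leftmost non-empty source) and standardize it."""
    for scope, key in ENV_SOURCES:
        raw = span.get(scope, {}).get(key)
        if raw:  # first non-empty value wins
            return ENV_MAP.get(raw.strip().lower(), raw)
    return None  # no environment attribute on this span


print(span_environment({"resource": {}, "attributes": {"env": "prd"}}))  # production
```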

Tips for Effective Remapping

- Start Simple: Begin with the most common fields you search by

- Use Consistent Naming: Follow a naming convention for all mapped fields

- Check Preview Results: Use the preview section to verify your mappings work as expected

- Mind the Order: Attributes are evaluated left to right—place your most reliable source first

- Use JSON When Possible: JSON extraction is more reliable for structured logs

- Test Pattern Matches: Validate regex patterns before implementing them

- Apply to Both Signals: The same remapping rules work for both logs and traces
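
One way to validate a pattern before saving it is to run it against a few real log lines locally. The sketch below uses Python's `re` module, which supports the `(?P<name>...)` syntax used in the example pattern; the sample lines are hypothetical, and the product's regex engine may not accept every Python construct (or vice versa), so treat a local pass as a first check only.

```python
import re

# Hypothetical sample lines; replace them with real lines copied from your logs.
PATTERN = r"timeseries:\s*(?P<timeseries>\d+)"
SAMPLES = [
    "flushed batch timeseries: 128",
    "flushed batch timeseries:128",
    "flushed batch (no count)",
]


def check_pattern(pattern: str, samples: list[str]) -> list[tuple[str, dict]]:
    """Compile the pattern and report what it extracts from each sample line."""
    compiled = re.compile(pattern)  # raises re.error if the syntax is invalid
    results = []
    for line in samples:
        match = compiled.search(line)
        results.append((line, match.groupdict() if match else {}))
    return results


for line, fields in check_pattern(PATTERN, SAMPLES):
    print(f"{line!r} -> {fields}")
```

An empty result for a line you expected to match usually points to a spacing or casing difference between the pattern and the actual log text.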

Troubleshooting

If your remapping rules aren’t working as expected:

- Check the extraction pattern syntax for errors

- Verify field names match exactly what appears in your logs

- Ensure your extraction scope is appropriate

- Look at the preview to confirm data is flowing as expected

- Try simplifying complex regex patterns
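
To check that configured field names match your logs exactly, you can compare them against the keys of a sample record and flag near misses such as a difference in letter case. This is a local diagnostic sketch with a hypothetical `missing_fields` helper, not a product feature; `difflib.get_close_matches` from the Python standard library supplies the suggestions.

```python
import difflib


def missing_fields(configured: list[str], record: dict) -> dict[str, list[str]]:
    """For each configured field name absent from the record, suggest close matches."""
    report = {}
    for name in configured:
        if name not in record:  # exact, case-sensitive comparison
            report[name] = difflib.get_close_matches(name, list(record), n=3, cutoff=0.6)
    return report


# Hypothetical parsed log record and configured field names.
record = {"requestId": "r1", "thread_id": 7, "msg": "hi"}
print(missing_fields(["requestID", "thread_id"], record))
```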

Please get in touch with us on Discord or by email if you have any questions.