Jul 17th, ‘23/7 min read

What is OpenTelemetry Collector

What is OpenTelemetry Collector, Architecture, Deployment and Getting started

OpenTelemetry has emerged as a critical player in observability, monitoring, and debugging use cases, providing standardized APIs and instrumentation to collect telemetry data such as metrics, logs, and traces from your applications. A vital component of the OpenTelemetry ecosystem is the OpenTelemetry Collector. In this article, we'll take a closer look at what the OpenTelemetry Collector is, how it works, its architecture, and the advantages it offers.

Read more about what is OpenTelemetry.

What is the OpenTelemetry Collector?

OpenTelemetry Collector, or OTel Collector, is a crucial part of the OpenTelemetry ecosystem, an observability framework for cloud-native software. It's an open-source service that can ingest, process, and export telemetry data. This telemetry data can include traces, metrics, and logs from different sources.

The primary function of the OpenTelemetry Collector is to receive telemetry data from applications or services, process it, and send it to the backend specified by the user. It's vendor-agnostic, meaning it can receive data from various sources and export it to multiple destinations, including open-source and commercial backends.

The OpenTelemetry Collector is highly configurable and can be used to create robust telemetry data pipelines. Depending on the use case, it can be deployed as an agent on a host or as a standalone service. It is built with a modular architecture, allowing users to pick and choose between different receivers, processors, exporters, and extensions to meet their specific needs.

The OTel Collector has extensive documentation available here.

How Does the OpenTelemetry Collector Work?

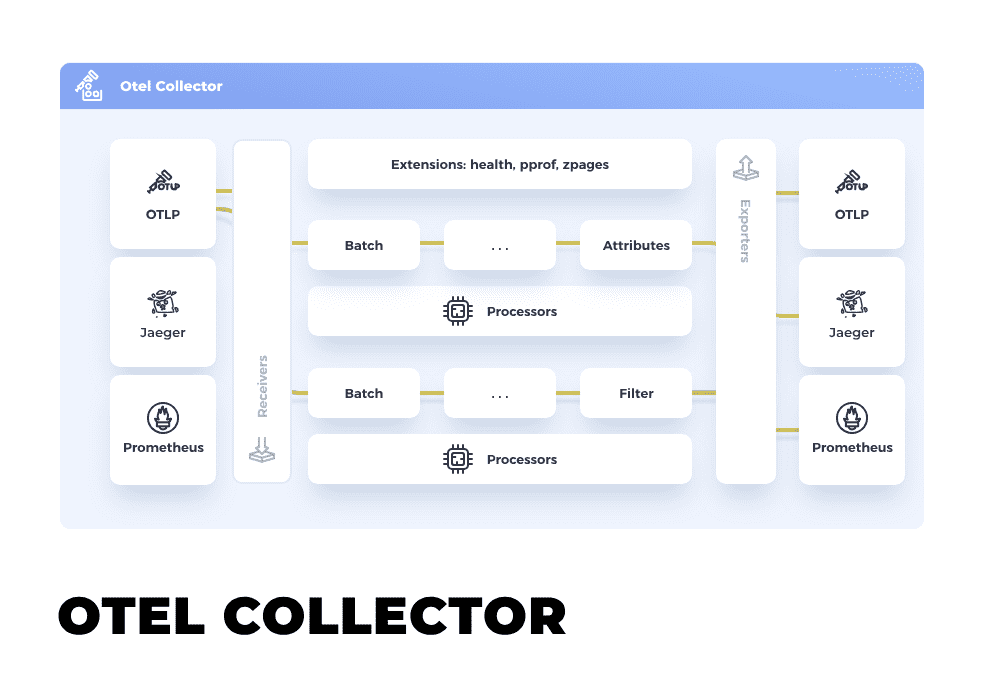

The Collector works in three main stages: it receives data, processes it, and then exports it.

- Receivers: The Collector's receivers accept telemetry data from various sources. These sources can be instrumentation libraries (like OpenTelemetry SDKs), other collectors, or other systems such as FluentBit. The data can be in multiple formats, including but not limited to, OpenTelemetry protocol (OTLP), Jaeger, Zipkin, and Prometheus.

- Processors: Once the data is received, it is processed. The processing stage can involve several operations, such as batching, filtering, or enhancing the data. You can control the processing operation by setting up pipeline configs.

- Exporters: After the data has been processed, it can be exported. The OpenTelemetry Collector can export data to multiple backends such as Jaeger, Zipkin, and Levitate. The list of supported backends is continuously growing as the community develops more exporters.
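The three stages map directly onto the sections of a Collector configuration file. The following is a minimal sketch, not a production setup: it assumes the `logging` exporter that ships with the 0.81.0 release used later in this article (newer Collector releases replace it with `debug`), and it wires a single traces pipeline.

```yaml
# Receive: accept OTLP over gRPC and HTTP on the default ports.
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

# Process: group telemetry into batches before export.
processors:
  batch:

# Export: print telemetry to the Collector's own log output.
# Assumption: `logging` is available (true for 0.81.0; later releases use `debug`).
exporters:
  logging:
    verbosity: detailed

# A pipeline ties the stages together for one signal type.
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [logging]
```

A component that is defined but not listed in a pipeline under `service` is not enabled, so the `service` section is what actually determines the data flow.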

OpenTelemetry Collector Architecture

The OpenTelemetry Collector (OTel Collector) doesn't have a formal specification document like the OpenTelemetry API and SDKs. However, some fundamental principles and architectural elements define the OTel Collector and how it operates.

Unified Format: The OTel Collector converts incoming telemetry into a single internal representation that is closely modeled on the OpenTelemetry Protocol (OTLP) and covers metrics, traces, and logs.

Modular and Configurable Architecture: The OTel Collector consists of multiple configurable components, which allows users to tailor its functionality to their specific needs. The primary components are Receivers, Processors, Exporters, and Extensions. Let's delve into each component.

Receivers

Receivers are the entry point of the telemetry data into the OTel Collector. They ingest data in various formats from different sources. Each receiver is designed to understand and interpret a specific data format. They convert this data into a format the OTel Collector can understand and further process.

Examples of receivers include:

- otlp: to receive data from applications instrumented with OpenTelemetry libraries

- jaeger: to receive Jaeger traces

- zipkin: to receive Zipkin traces

- opencensus: to receive data from applications instrumented with OpenCensus libraries

- prometheus: to scrape Prometheus metrics from a specified target
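As a rough illustration, several of these receivers can be declared side by side in the `receivers` section. The job name and scrape target below are hypothetical placeholders, and the `jaeger`, `zipkin`, and `prometheus` receivers are assumed to come from the contrib distribution.

```yaml
receivers:
  # OTLP from OpenTelemetry SDKs or other Collectors.
  otlp:
    protocols:
      grpc:
      http:

  # Jaeger traces over gRPC and Thrift-over-HTTP.
  jaeger:
    protocols:
      grpc:
      thrift_http:

  # Zipkin traces on the receiver's default endpoint.
  zipkin:

  # Scrape a Prometheus target; `config` uses Prometheus scrape syntax.
  prometheus:
    config:
      scrape_configs:
        - job_name: example-app            # hypothetical job name
          scrape_interval: 15s
          static_configs:
            - targets: ["localhost:9090"]  # hypothetical target
```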

Processors

Once the data is received, it is sent through a pipeline of Processors. These Processors can manipulate the data before it is sent to an Exporter. For instance, they can aggregate data, enrich it, filter it, or perform other transformations. Processors are an optional part of the pipeline and can be configured as needed.

Examples of processors include:

- batch: This processor batches telemetry data, which reduces the number of outgoing connections required

- queued_retry: This processor ensures data is not lost during transmission. If the data cannot be transmitted on the first try, it is queued for retry (in recent Collector releases, queuing and retry are configured on the exporters rather than as a separate processor)

- memory_limiter: This processor prevents the Collector from exceeding a defined memory limit

- attributes: This processor can modify data attributes, like adding, updating, or deleting attributes
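A sketch of how these processors might be configured is shown below. The limits, batch sizes, and attribute keys are illustrative assumptions, not recommendations. Processors run in the order they are listed in a pipeline, and the memory limiter is usually placed first.

```yaml
processors:
  # Refuse data when memory use approaches the limit (values are illustrative).
  memory_limiter:
    check_interval: 1s
    limit_mib: 512
    spike_limit_mib: 128

  # Send batches of up to 1024 items, or whatever has arrived after 5s.
  batch:
    send_batch_size: 1024
    timeout: 5s

  # Add, hash, or delete attributes (keys below are hypothetical).
  attributes:
    actions:
      - key: deployment.environment
        value: staging
        action: insert
      - key: user.email
        action: hash
      - key: http.request.header.authorization
        action: delete

# In a pipeline (under service.pipelines), the order would be:
#   processors: [memory_limiter, attributes, batch]
```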

Exporters

Exporters are the final stop in the OTel Collector pipeline. They take the processed data and send it to the backend(s) defined in the Collector's configs. Like Receivers, different Exporters are designed to send data to different backends.

Examples of exporters include:

- otlp: to send data to any backend supporting OTLP (OpenTelemetry Protocol)

- jaeger: to send Jaeger traces to a Jaeger backend

- zipkin: to send Zipkin trace data to a Zipkin backend

- prometheus: to expose the data as Prometheus metrics

- awsxray: to send trace data to AWS X-Ray
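For illustration, two of these exporters could be declared as follows. The backend hostname is a hypothetical placeholder. The `sending_queue` and `retry_on_failure` settings are the exporter-level options for buffering and retrying failed sends.

```yaml
exporters:
  # Push telemetry to an OTLP-capable backend over gRPC.
  otlp:
    endpoint: backend.example.com:4317   # hypothetical backend address
    sending_queue:
      enabled: true
    retry_on_failure:
      enabled: true

  # Expose metrics on an HTTP endpoint for a Prometheus server to scrape.
  prometheus:
    endpoint: 0.0.0.0:8889
```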

Extensions

Extensions are components that aren't directly involved in the data pipeline but provide additional capabilities or interfaces. For example, they can provide health checks, do performance profiling, or allow for dynamic configuration updates.

Examples of extensions include:

- health_check: This extension exposes a health check endpoint on the Collector, making it easier to monitor its status

- pprof: This extension enables the Go net/http/pprof profiler in the Collector, which can be helpful for performance analysis and troubleshooting

- zpages: This extension enables a collection of web pages that display the running status of the Collector
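Extensions are declared in their own section and switched on under `service.extensions` rather than in a pipeline. A minimal sketch, with endpoints chosen here only as examples:

```yaml
extensions:
  health_check:
    endpoint: 0.0.0.0:13133
  pprof:
    endpoint: 0.0.0.0:1888
  zpages:
    endpoint: 0.0.0.0:55679

service:
  # Extensions listed here are started alongside the pipelines.
  extensions: [health_check, pprof, zpages]
```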

Each of these components — Receivers, Processors, Exporters, and Extensions — plays a significant role in the functioning of the OpenTelemetry Collector, making it a flexible and powerful tool for observability in your application infrastructure.

Advantages of Using the OpenTelemetry Collector

- Vendor Neutrality: The Collector is vendor-agnostic, meaning it can receive data in multiple formats and export to multiple backends. This makes it highly adaptable and flexible, avoiding vendor lock-in.

- Reduced Complexity: By removing the need to send data directly from each service to your backend, the Collector reduces the complexity of your observability pipeline.

- Resource Efficiency: The Collector provides features like batching and compression out of the box, which can reduce the resource usage of your services and the network bandwidth required to send data to the backend.

- Unified Data Processing: Since all telemetry data passes through the Collector, you can uniformly apply processing such as filtering or augmentation across all data, ensuring consistency in your observability data.

Integration with Kubernetes

The OpenTelemetry Collector (OTel Collector) is designed to work seamlessly with Kubernetes and is often used to monitor the performance of Kubernetes clusters. Here's a step-by-step explanation of how the OTel Collector operates within a Kubernetes environment:

Deployment

- Deploying as a DaemonSet: You typically deploy the OTel Collector instance as a DaemonSet within a Kubernetes cluster. This deployment ensures that a single instance of the Collector runs on each node in the cluster, allowing it to gather metrics, traces, and logs from all applications running on that node.

- Deploying as a Sidecar: In some cases, you might also deploy the OTel Collector as a sidecar within specific Kubernetes pods. This deployment allows the Collector to only gather telemetry data from that specific pod or service.
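A DaemonSet deployment could look roughly like the manifest below. This is a sketch under assumptions: the resource names, namespace, and ConfigMap are hypothetical, and the ConfigMap is expected to contain a `config.yaml` key holding the Collector configuration.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: otel-collector-agent      # hypothetical name
  namespace: observability        # hypothetical namespace
spec:
  selector:
    matchLabels:
      app: otel-collector-agent
  template:
    metadata:
      labels:
        app: otel-collector-agent
    spec:
      containers:
        - name: otel-collector
          image: otel/opentelemetry-collector-contrib:0.81.0
          args: ["--config=/etc/otelcol-contrib/config.yaml"]
          env:
            # Expose the node name so the config can reference it.
            - name: K8S_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          ports:
            # hostPort lets pods on the same node reach the agent via the node IP.
            - containerPort: 4317
              hostPort: 4317
            - containerPort: 4318
              hostPort: 4318
          volumeMounts:
            - name: config
              mountPath: /etc/otelcol-contrib
      volumes:
        - name: config
          configMap:
            name: otel-collector-config   # hypothetical ConfigMap with a config.yaml key
```

For the sidecar pattern, the same container definition would instead be added to the `containers` list of the application's own pod spec.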

Data Collection

- Receiving Data: When deployed on Kubernetes, the OTel Collector can receive telemetry data from your applications via receivers. You can instrument your applications with OpenTelemetry SDKs to send this data to the Collector.

- Scraping Metrics: The Collector can scrape metrics directly from Kubernetes via the Kubelet's and Prometheus metrics endpoints. With the Prometheus receiver, the Collector can scrape metrics from any target that uses the Prometheus exposition format.

- Collecting Logs: With the appropriate receiver, such as the Fluent Forward receiver, which accepts logs forwarded by FluentBit or Fluentd, the Collector can collect logs generated by your applications.
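The collection paths above might be configured as in the following sketch. It assumes the contrib distribution's `kubeletstats` and `fluentforward` receivers, a `K8S_NODE_NAME` environment variable injected into the Collector pod, and a service account that is permitted to read Kubelet stats.

```yaml
receivers:
  # Telemetry pushed by applications instrumented with OpenTelemetry SDKs.
  otlp:
    protocols:
      grpc:
      http:

  # Node, pod, and container metrics pulled from the local Kubelet.
  kubeletstats:
    collection_interval: 20s
    auth_type: serviceAccount
    endpoint: "https://${env:K8S_NODE_NAME}:10250"   # assumes K8S_NODE_NAME is set
    insecure_skip_verify: true

  # Logs forwarded by FluentBit or Fluentd using the Fluent Forward protocol.
  fluentforward:
    endpoint: 0.0.0.0:8006
```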

Data Processing and Exporting

- Processing Data: The OTel Collector can process the collected telemetry data as per your configured pipeline. You can use processors to filter and enhance data or to remove sensitive information.

- Exporting Data: The processed data is exported to one or multiple backends using exporters. These backends could be a monitoring solution like Grafana, a tracing tool like Jaeger, a logging solution like Elasticsearch, or a telemetry data platform like Google Cloud Monitoring.
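Exporting to more than one backend is a matter of listing several exporters in a pipeline. The sketch below uses the `type/name` convention to define two instances of the same exporter type. Both hostnames are hypothetical.

```yaml
receivers:
  otlp:
    protocols:
      grpc:

processors:
  batch:

exporters:
  # Two instances of the otlp exporter, distinguished by the name after "/".
  otlp/primary:
    endpoint: traces-primary.example.com:4317     # hypothetical
  otlp/secondary:
    endpoint: traces-secondary.example.com:4317   # hypothetical

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      # Every exporter listed here receives a copy of the processed data.
      exporters: [otlp/primary, otlp/secondary]
```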

Integration with Kubernetes Services

- Service Discovery: The OTel Collector can perform service discovery in a Kubernetes environment. For instance, when configured with the Prometheus receiver, it can use Kubernetes service discovery to locate services exposing Prometheus metrics.

- Kubernetes Metadata Enrichment: The Collector can also enrich telemetry data with metadata from the Kubernetes API server. This enrichment can help provide valuable context to your metrics, traces, and logs.
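Both capabilities can be sketched in configuration. The example assumes the contrib distribution's `prometheus` receiver and `k8sattributes` processor, pods that opt in through a `prometheus.io/scrape: "true"` annotation (a common convention, not something the Collector requires), and RBAC permissions that let the Collector read pod metadata.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: kubernetes-pods
          # Discover scrape targets from the Kubernetes API.
          kubernetes_sd_configs:
            - role: pod
          relabel_configs:
            # Keep only pods annotated with prometheus.io/scrape: "true".
            - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
              action: keep
              regex: "true"

processors:
  # Attach Kubernetes metadata to telemetry passing through the pipeline.
  k8sattributes:
    extract:
      metadata:
        - k8s.namespace.name
        - k8s.pod.name
        - k8s.node.name
        - k8s.deployment.name
```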

By implementing the OpenTelemetry Collector in your Kubernetes environment, you can standardize your telemetry data collection, processing, and export across your entire infrastructure. This helps you maintain a clear view of your application's performance and reduces the complexity of your observability setup.

Additional capabilities

The OpenTelemetry Collector is a powerful, scalable tool for observability, providing a unified way to receive, process, and export telemetry data such as traces, metrics, and logs. However, while the core Collector is flexible, some use cases, such as tail-based sampling, require more specialized components or support for specific backends. That's where opentelemetry-collector-contrib comes in.

opentelemetry-collector-contrib is an open-source project available on GitHub that extends the functionality of the OpenTelemetry Collector with additional components. It includes receivers, processors, exporters, and extensions that are not part of the core distribution due to their experimental nature, limited usage, or maintenance status. These components can be contributed by the community and are provided to support more specialized or less common use cases.
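As one illustration of a contrib component, the `tail_sampling` processor ships in the contrib distribution. The sketch below keeps every trace that contains an error and a fraction of the rest. The policy names, wait time, and percentage are arbitrary example values.

```yaml
processors:
  tail_sampling:
    # Wait this long after a trace's first span before deciding.
    decision_wait: 10s
    policies:
      # Keep all traces that contain a span with an error status.
      - name: keep-errors
        type: status_code
        status_code:
          status_codes: [ERROR]
      # Keep roughly 10% of all traces.
      - name: sample-ten-percent
        type: probabilistic
        probabilistic:
          sampling_percentage: 10
```

A trace is kept if any policy decides to sample it, so the two policies above combine as "all errors plus about 10% of everything".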

Getting Started locally

You can use Docker to set up the OTel Collector locally. Pull and run the contrib image:

    docker pull otel/opentelemetry-collector-contrib:0.81.0

    docker run otel/opentelemetry-collector-contrib:0.81.0

Alternatively, define the Collector as a Docker Compose service that mounts your Collector configuration file and publishes the relevant ports:

    otel-collector:
      image: otel/opentelemetry-collector-contrib
      volumes:
        - ./otel-collector-config.yaml:/etc/otelcol-contrib/config.yaml
      ports:
        - 1888:1888 # pprof extension
        - 8888:8888 # Prometheus metrics exposed by the collector
        - 8889:8889 # Prometheus exporter metrics
        - 13133:13133 # health_check extension
        - 4317:4317 # OTLP gRPC receiver
        - 4318:4318 # OTLP http receiver
        - 55679:55679 # zpages extension

You can find examples of sending metrics via OpenTelemetry Collector using Prometheus Remote Write protocol here.
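The volume mount above references `./otel-collector-config.yaml`, which is not shown in this article. A minimal sketch of a file that would match the published ports is given below. It is an assumption about what such a file could contain, written against the 0.81.0 release: the `logging` exporter and the `service.telemetry.metrics.address` setting have changed in later releases.

```yaml
extensions:
  health_check:
    endpoint: 0.0.0.0:13133
  pprof:
    endpoint: 0.0.0.0:1888
  zpages:
    endpoint: 0.0.0.0:55679

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:

exporters:
  # Application metrics re-exposed for scraping on port 8889.
  prometheus:
    endpoint: 0.0.0.0:8889
  # Traces printed to the Collector log for local inspection.
  logging:

service:
  extensions: [health_check, pprof, zpages]
  telemetry:
    metrics:
      # The Collector's own metrics on port 8888.
      address: 0.0.0.0:8888
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [logging]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [prometheus]
```

Once the container is running, the health check endpoint on port 13133 is a quick way to confirm that the Collector started with this configuration.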

In conclusion, the OpenTelemetry Collector offers a versatile and efficient solution for managing telemetry data in distributed systems. It is a powerful tool for developers and operators seeking to streamline their observability pipelines and maximize their system's efficiency and effectiveness.
