Large language models (LLMs) are rapidly becoming a foundational technology in various sectors, from conversational agents to content creation.

While these models offer powerful capabilities, they also present unique challenges, primarily due to their complexity and unpredictability. To manage and maintain LLMs effectively, it is crucial to implement comprehensive observability practices.

In this blog, we'll talk about what LLM observability is, why it's essential, and some common challenges to look for.

What is LLM Observability?

LLM Observability is the process of monitoring and understanding the behavior of Large Language Models (LLMs) in real time.

It's a way to track how well these models are performing and how they’re interacting with users in applications, from response accuracy to system performance.

LLM observability is essential for ensuring the reliability and efficiency of LLM applications, especially as they’re integrated into real-world systems such as Generative AI tools, RAG (retrieval-augmented generation) systems, and LangChain-powered platforms.

How LLM Observability Works

At its core, LLM observability works by embedding monitoring and tracking mechanisms within applications that use these models.

Using an observability solution, developers can gain insights into both the model's behavior and the systems around it. This helps teams quickly identify issues, such as errors in generated documents or unexpected answers drawn from embedded knowledge, and address the root causes of those problems.

Here’s how LLM observability typically works:

Monitoring and Visualization

The observability system collects data in real time, including metrics like response time, accuracy, and resource usage. Through advanced visualization tools, developers can track these metrics in dashboards, helping them quickly pinpoint issues across different applications.

Data Collection

Collecting logs and metrics on model performance allows these tools to track how LLMs process inputs, such as user queries in chatbots or document searches. For apps using open-source libraries, the collected data helps monitor any anomalies in the system’s behavior, ensuring smooth execution.
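
As a rough illustration of this kind of data collection, the sketch below wraps a model call and emits one structured log record per request. It uses only the Python standard library; `observe_call` and `fake_model` are hypothetical names, and `fake_model` simply stands in for whatever LLM client your application actually uses.

```python
import json
import logging
import time
from typing import Callable

logger = logging.getLogger("llm.observability")


def observe_call(model_fn: Callable[[str], str], prompt: str) -> dict:
    """Call model_fn(prompt) and return a structured record of the call."""
    start = time.perf_counter()
    record = {"prompt_chars": len(prompt), "status": "ok"}
    try:
        response = model_fn(prompt)
        record["response_chars"] = len(response)
    except Exception as exc:  # record the failure instead of hiding it
        record["status"] = "error"
        record["error_type"] = type(exc).__name__
    record["latency_ms"] = round((time.perf_counter() - start) * 1000, 3)
    logger.info(json.dumps(record))  # one JSON line per request
    return record


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM client (hypothetical)."""
    return prompt.upper()


ok_record = observe_call(fake_model, "where is my order?")
```

Logging sizes rather than raw text keeps the example small; whether you also store full prompts and responses depends on your privacy requirements.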

Embedding Insights

For better understanding, embedding techniques are often used in observability solutions to represent complex data and outputs from LLMs in a more understandable way. These embeddings help visualize relationships between inputs and outputs and assist in diagnosing issues more effectively.
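
One simple way embeddings can support diagnosis is by scoring how close a response is to the query that produced it. The sketch below computes cosine similarity in plain Python. The three-dimensional vectors are made-up stand-ins; in practice they would come from an embedding model, and this is only one of several ways an observability tool might use them.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real embeddings (hypothetical values)
query_vec = [1.0, 0.0, 1.0]
on_topic_response = [0.9, 0.1, 0.8]
off_topic_response = [0.0, 1.0, 0.0]

on_topic_score = cosine_similarity(query_vec, on_topic_response)
off_topic_score = cosine_similarity(query_vec, off_topic_response)
```

A response whose score falls well below what is typical for your application may be worth flagging for review.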

User Feedback

An important aspect of observability is integrating user feedback to understand how the LLM is performing from an end-user perspective. Analyzing this feedback as it arrives allows developers to adjust prompts, configuration, or the model itself to improve performance and usability.

Root Cause Analysis

When an issue is identified, the observability system helps trace back to the root cause, whether it’s a model misconfiguration, lack of proper training, or an issue with the app’s integration with the LLM. This fast diagnosis is key to reducing downtime and improving the overall user experience.

LLM Monitoring Stack and Architecture

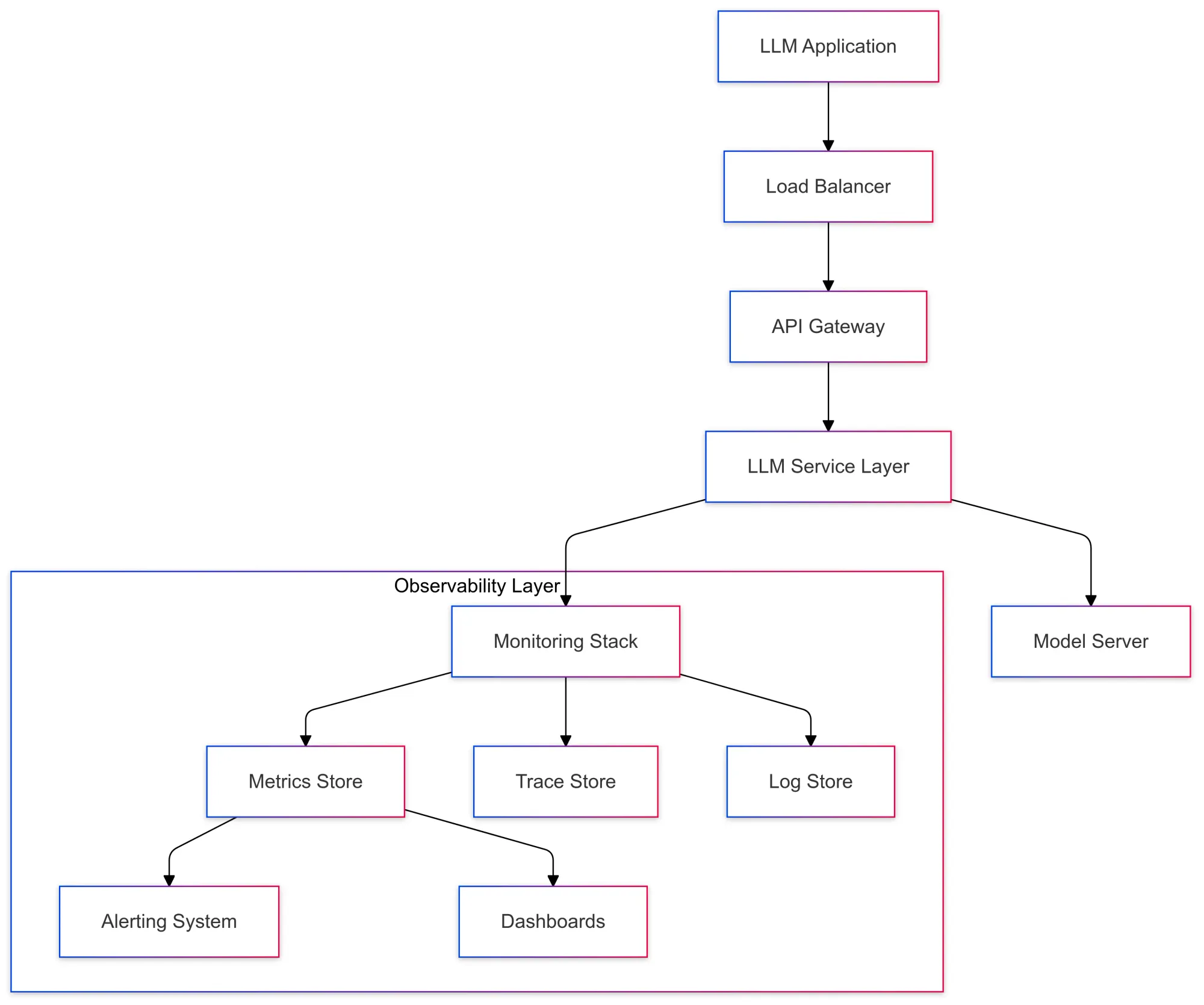

At the heart of the LLM architecture is the Monitoring Stack, which collects and processes data from various components like model servers, API gateways, and other critical system parts.

This allows you to keep track of the entire system's performance and catch issues quickly.

Below are the key layers that make up the monitoring architecture, along with a simple explanation of each one:

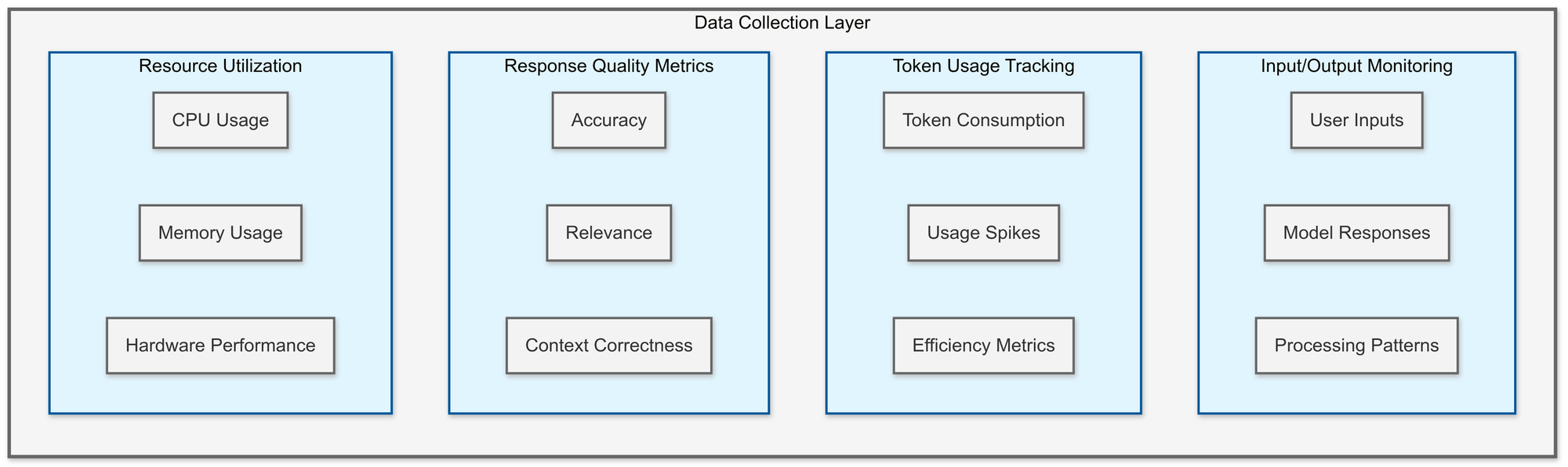

Data Collection Layer:

Input/Output Monitoring: This tracks the data coming into and out of your LLM models, such as the inputs from users and the model's generated responses. By monitoring this, you get insights into how the model is processing inputs and whether it’s producing the expected results.

Token Usage Tracking: Large language models, like OpenAI's GPT models, operate on tokens, which are small chunks of text such as words or word fragments. This tracking measures how many tokens each request consumes, which directly affects both cost and latency.

Response Quality Metrics: This is about assessing how good the model’s responses are. Are they accurate? Relevant? Contextually correct? Monitoring response quality ensures that the model is providing answers that meet expectations, which is crucial for applications relying on AI observability and interpretability.

Resource Utilization: This component tracks system resources like CPU, GPU, memory, and other hardware usage. Monitoring these helps you identify performance bottlenecks, such as the system running out of memory or compute capacity. This is key to maintaining application performance at scale.
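
To make the data collection layer more concrete, here is a minimal sketch of what a single collected record might look like, with one group of fields per signal described above. The class and field names are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class LLMCallRecord:
    """One record from the data collection layer; all field names are illustrative."""
    prompt: str                      # input monitoring
    response: str                    # output monitoring
    prompt_tokens: int               # token usage tracking
    completion_tokens: int
    quality_score: Optional[float]   # response quality; None if not yet scored
    cpu_seconds: float               # resource utilization
    peak_memory_mb: float

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


record = LLMCallRecord(
    prompt="Summarize the return policy.",
    response="Items can be returned within 30 days.",
    prompt_tokens=6,
    completion_tokens=9,
    quality_score=0.92,
    cpu_seconds=0.04,
    peak_memory_mb=512.0,
)
row = asdict(record)  # plain dict, ready to send to a log or metrics pipeline
```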

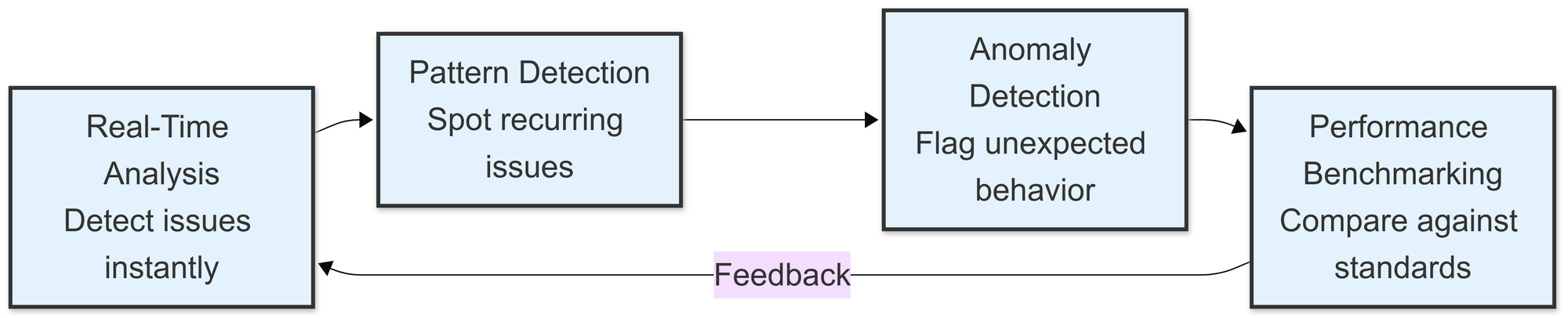

Processing Layer:

Real-Time Analysis: Real-time analysis allows you to detect issues as they occur. For instance, if there’s a sudden spike in response time or an error in model outputs, this layer will alert you immediately so you can take quick action to resolve it.

Pattern Detection: Analyzing past data helps the system spot recurring issues in how the model behaves. For example, if the model consistently has problems during certain times of the day or with specific types of queries, this information can be used to predict and avoid future problems through predictive maintenance.

Anomaly Identification: This component automatically flags anything that deviates from expected performance. So, if your GenAI model starts giving inconsistent answers or experiences a slowdown, it will be flagged for further investigation.

Performance Benchmarking: Benchmarking sets standards for how fast the model should respond, how accurate its results should be, and how much throughput it should be able to handle. Comparing real-time performance against these benchmarks helps you ensure everything is working as expected and can point out areas that need improvement.
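
Anomaly identification can start out very simply. The sketch below flags a latency sample when it sits more than a few standard deviations away from a rolling window of recent samples (a z-score check). The class name, window size, and threshold are assumptions chosen for illustration; production systems often use more robust methods.

```python
from collections import deque
from statistics import mean, stdev


class LatencyAnomalyDetector:
    """Flags samples that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_samples: int = 10):
        self.samples = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, latency_ms: float) -> bool:
        """Return True if latency_ms is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            # abs() means unusually fast responses are flagged as well as slow ones
            if sigma > 0 and abs(latency_ms - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            self.samples.append(latency_ms)  # keep outliers out of the baseline
        return is_anomaly


detector = LatencyAnomalyDetector()
normal = [100, 104, 98, 101, 97, 103, 99, 102, 100, 96, 105, 101]
flags = [detector.observe(x) for x in normal]
spike_flag = detector.observe(450)
```

Here every value in `normal` passes, while the 450 ms sample is flagged because it sits far outside the recent baseline.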

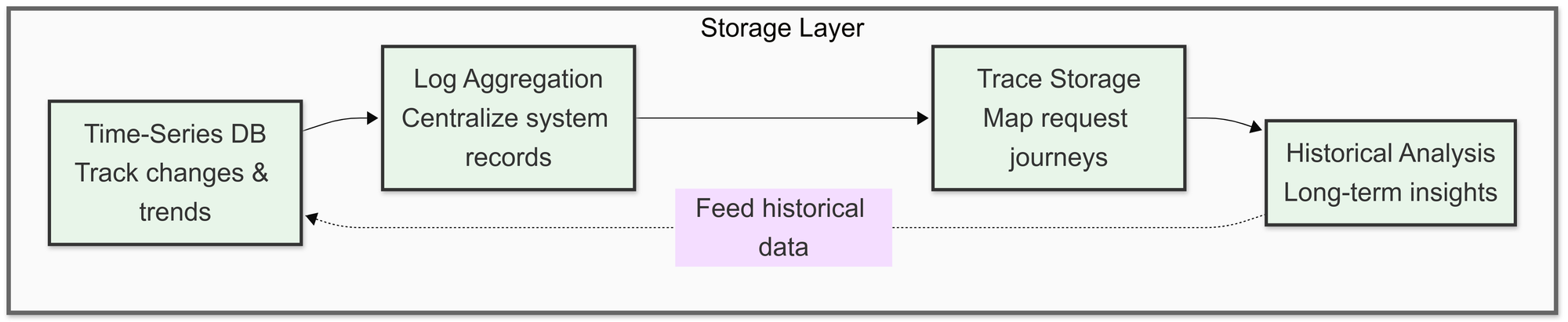

Storage Layer:

Time-Series Databases: These databases store data that changes over time, like latency or system utilization. Time-series data helps you analyze trends, so you can understand if things are improving or worsening over time. If there's an issue with response time, these databases let you look at the data historically to understand the cause.

Log Aggregation: Logs are detailed records of everything happening in the system. By gathering all logs in one place, it becomes much easier to troubleshoot any problems. For example, if there’s an issue with the way OpenTelemetry is integrated or with a model misbehaving, log aggregation helps you find the root cause.

Trace Storage: Traces are like detailed maps of the requests as they travel through your system. If your SDK or API gateway is causing delays or if there's a performance bottleneck, trace storage lets you see exactly where the issue occurred. It provides transparency into the flow of requests through your system.

Historical Analysis: This is about looking at older data to identify trends, anomalies, and optimization opportunities. It’s especially useful when you're analyzing the long-term performance of your models, whether your application is written in Python, instrumented with a tool like Arize, or built on an open-source framework like LlamaIndex.
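
To show what a trace looks like in practice, the sketch below records nested spans for a single request using only the standard library. A real deployment would more likely use a tracing SDK such as OpenTelemetry; the `span` helper, the in-memory `spans` list, and the span names here are hypothetical, and the code is not thread-safe.

```python
import time
import uuid
from contextlib import contextmanager
from typing import List, Optional

spans: List[dict] = []   # stand-in for a trace store
_stack: List[str] = []   # ids of the spans that are currently open


@contextmanager
def span(name: str):
    """Time a block of work and record it with a link to its parent span."""
    span_id = uuid.uuid4().hex[:8]
    parent_id: Optional[str] = _stack[-1] if _stack else None
    _stack.append(span_id)
    start = time.perf_counter()
    try:
        yield span_id
    finally:
        _stack.pop()
        spans.append({
            "id": span_id,
            "parent": parent_id,
            "name": name,
            "duration_ms": (time.perf_counter() - start) * 1000,
        })


with span("handle_request") as root_id:
    with span("retrieve_documents"):
        time.sleep(0.005)   # simulated retrieval step
    with span("llm_generate"):
        time.sleep(0.05)    # simulated model call

children = [s for s in spans if s["parent"] == root_id]
slowest = max(children, key=lambda s: s["duration_ms"])
```

Looking up the slowest child of a request is the kind of question trace storage is meant to answer quickly.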

The Core Observability Metrics for LLMs

The following metrics are the most important to track:

- Latency: Measures how long it takes for the model to generate a response. High latency can hurt user experience, especially in real-time applications. Monitoring this ensures the model responds promptly.

- Error Rate: Tracks how often the model generates incorrect or faulty outputs. It’s critical for ensuring the model’s reliability and quality, helping identify when performance starts to degrade.

- Resource Utilization: LLMs are resource-heavy. Keeping an eye on CPU, GPU, memory, and disk usage helps ensure the model is not overloading the system and that resources are used efficiently.

- Throughput: Measures how many requests the model can handle per unit of time. Observing throughput helps assess whether the model can scale to meet user demand.

- Model Drift: Refers to the gradual change in model performance over time, often because the model was retrained or updated, or because the inputs it receives have shifted away from the data it was trained on. Tracking model drift ensures that LLMs remain accurate and effective in dynamic environments.
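
As a small worked example, latency, error rate, and throughput can all be derived from a list of request records. The sketch below assumes each record has `latency_ms` and `status` fields (hypothetical names) and uses the nearest-rank method for percentiles. Resource utilization and drift need other data sources and are left out.

```python
import math
from typing import List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile; pct is in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(requests: List[dict], window_seconds: float) -> dict:
    """Summarize a non-empty batch of requests observed over window_seconds."""
    latencies = [r["latency_ms"] for r in requests]
    errors = sum(1 for r in requests if r["status"] != "ok")
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
        "throughput_rps": len(requests) / window_seconds,
    }


# Ten hypothetical requests observed over a 5-second window
requests = [
    {"latency_ms": ms, "status": "ok"}
    for ms in (120, 130, 110, 150, 140, 125, 135, 115, 145)
]
requests.append({"latency_ms": 900, "status": "error"})
summary = summarize(requests, window_seconds=5.0)
```

Note how the single slow, failed request barely moves the median but dominates the 95th percentile, which is why tail latency is usually tracked alongside the average.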

Actionable Framework for Implementing LLM Observability

To ensure comprehensive observability for your LLM, follow this actionable framework:

- Define Key Metrics: Determine the metrics that matter most to your LLM use case. These could include latency, error rates, throughput, and model drift. Prioritize the metrics that directly impact performance and user experience.

- Integrate Observability Tools: Choose and integrate the right observability tools (such as Prometheus, Grafana, or Last9) into your workflow. These tools help collect and visualize the necessary data, providing visibility into model performance.

- Set Thresholds and Alerts: Establish performance thresholds for each metric. Set up automated alerts to notify the team when these thresholds are exceeded, allowing for quick identification and resolution of issues.

- Monitor and Analyze: Continuously track metrics in real time. Use distributed tracing and logs to monitor how each request flows through the system, identifying bottlenecks, errors, or unexpected behaviors.

- Iterate and Optimize: Observability isn’t a one-time setup. Regularly analyze performance data and fine-tune both the model and the observability system to adapt to new challenges or shifts in user behavior.
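
The "Set Thresholds and Alerts" step might look something like the sketch below. The metric names and limits are placeholders, not recommendations, and in a real setup this logic would usually live in your alerting tool's rule configuration rather than in application code.

```python
from typing import Dict, List

# Illustrative thresholds; tune these to your own service-level objectives
THRESHOLDS: Dict[str, float] = {
    "p95_latency_ms": 2000.0,
    "error_rate": 0.05,
    "cost_per_request_usd": 0.02,
}


def evaluate_alerts(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return one alert message per metric that is missing or above its limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no data")  # missing data is itself a signal
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds {limit}")
    return alerts


current = {"p95_latency_ms": 2600.0, "error_rate": 0.01}
alerts = evaluate_alerts(current, THRESHOLDS)
```

Treating a missing metric as an alert is a deliberate choice here: a silent pipeline can hide a real outage.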

The Role of Explainability in LLM Observability

While observability provides valuable insights into model performance, explainability ensures that these insights lead to actionable understanding.

In the case of LLMs, explainability refers to the ability to understand why a model produces a certain output and how it arrived at its decision. The more explainable an LLM is, the easier it is for teams to trust its results, troubleshoot issues, and improve its performance.

Observability tools help bridge the gap between model output and decision-making by providing transparency into the factors influencing the model’s behavior.

Here’s how explainability fits into LLM observability:

- Model Attribution: Observability tools can help attribute model outputs to specific features or training data, giving teams insight into why the model made a certain decision.

This is crucial when a model’s output doesn’t align with expectations, as it helps identify potential biases or data issues.

- Feature Importance Tracking: By tracking which features the model weighs most heavily during decision-making, observability systems can highlight any shifts in feature importance over time.

This is useful for detecting and mitigating model drift.

- Troubleshooting and Debugging: When unexpected outputs arise, observability and explainability work together to provide insights into why the model is misbehaving.

Logs and traces can show which part of the pipeline caused the issue, and model explainability can reveal the underlying reasons.

Example:

Imagine an LLM that provides recommendations for healthcare treatment options. If the model outputs an incorrect suggestion, explainability can help trace the reasoning behind it, allowing practitioners to assess whether the data, model assumptions or an overlooked variable caused the issue.

LLM Observability Use Cases

E-commerce Product Description Generation

What to Monitor:

- Response Relevance to Product Category: Ensure the generated description is consistent with the product’s category, preventing mismatches.

- Brand Voice Consistency: Monitor the tone and style of product descriptions to ensure alignment with the brand's guidelines.

- Technical Specification Accuracy: Track the accuracy of technical details like dimensions, features, and materials.

- Generation Speed for Batch Processing: Measure how quickly the model generates descriptions for multiple products.

- Cost Per Description: Monitor the computational cost involved in generating each product description to optimize spending.

Customer Support Bot

What to Monitor:

- Response Accuracy Rate: Measure the accuracy of responses to ensure correct answers.

- Issue Resolution Rate: Track how often the bot resolves issues without needing human intervention.

- Escalation Patterns: Monitor how often conversations need to be escalated to human agents, identifying gaps in the bot’s abilities.

- User Satisfaction Scores: Gather feedback from users to assess satisfaction with the bot’s performance.

- Conversation Flow Metrics: Ensure that the flow of conversation aligns with expected interaction patterns and resolves user queries efficiently.
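
Several of these support-bot metrics can be computed from conversation summaries. The sketch below assumes each conversation is stored with `resolved`, `escalated`, `turns`, and an optional `rating` field; these names are hypothetical and will differ depending on how your system records conversations.

```python
from typing import List


def support_bot_metrics(conversations: List[dict]) -> dict:
    """Aggregate resolution, escalation, satisfaction, and length metrics."""
    total = len(conversations)
    # Count a conversation as bot-resolved only if no human was needed
    resolved = sum(1 for c in conversations if c["resolved"] and not c["escalated"])
    escalated = sum(1 for c in conversations if c["escalated"])
    ratings = [c["rating"] for c in conversations if c.get("rating") is not None]
    return {
        "resolution_rate": resolved / total,
        "escalation_rate": escalated / total,
        "avg_satisfaction": sum(ratings) / len(ratings) if ratings else None,
        "avg_turns": sum(c["turns"] for c in conversations) / total,
    }


conversations = [
    {"resolved": True, "escalated": False, "turns": 3, "rating": 5},
    {"resolved": True, "escalated": False, "turns": 4, "rating": 4},
    {"resolved": True, "escalated": True, "turns": 9, "rating": 3},
    {"resolved": False, "escalated": True, "turns": 7, "rating": None},
]
bot_metrics = support_bot_metrics(conversations)
```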

Content Moderation

What to Monitor:

- False Positive/Negative Rates: Track how often acceptable content is wrongly flagged (false positives) and how often violating content is missed (false negatives).

- Processing Latency: Monitor how quickly content is processed for moderation.

- Moderation Accuracy: Measure how accurately the model identifies and flags content that violates policies.

- Bias Detection: Analyze the model's fairness by checking for biases in its moderation decisions.

- Rule Compliance: Ensure the model adheres to predefined moderation rules or standards.
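
Given a sample of moderation decisions that humans have reviewed, the error rates and accuracy above come down to a few counts. The sketch below assumes each result is a `(predicted_violation, actual_violation)` pair; the function name and the sample data are made up for illustration.

```python
from typing import List, Tuple


def moderation_rates(results: List[Tuple[bool, bool]]) -> dict:
    """results holds (predicted_violation, actual_violation) pairs."""
    tp = sum(1 for pred, actual in results if pred and actual)
    fp = sum(1 for pred, actual in results if pred and not actual)
    fn = sum(1 for pred, actual in results if not pred and actual)
    tn = sum(1 for pred, actual in results if not pred and not actual)
    return {
        # share of acceptable content that was wrongly flagged
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # share of violating content that was missed
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
        "accuracy": (tp + tn) / len(results) if results else 0.0,
    }


# Hypothetical review sample: 8 correct flags, 2 wrong flags, 2 misses, 18 correct passes
labeled = [(True, True)] * 8 + [(True, False)] * 2 + [(False, True)] * 2 + [(False, False)] * 18
rates = moderation_rates(labeled)
```

Running the same calculation separately per user group or content category is one simple starting point for the bias checks mentioned above.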

10 Common Issues with LLM Applications

Large Language Models (LLMs), such as OpenAI's GPT models and other generative AI systems, are powerful tools used in a wide range of applications. However, as with any complex technology, they come with their own set of challenges.

Below are some of the most common issues faced when developing and deploying LLM-based applications:

1. Inconsistent or Incorrect Responses

LLMs are trained on vast datasets, but they can still produce incorrect or nonsensical answers, especially when faced with ambiguous or poorly worded inputs.

This is particularly problematic for applications that require high accuracy, like customer support or healthcare. Models might misinterpret questions or fail to understand the context properly, leading to irrelevant or inaccurate responses.

2. Bias and Ethical Concerns

Like any machine learning model, LLMs inherit biases present in their training data. This means that LLM applications might unintentionally generate biased or harmful content. For example, they might reflect gender, racial, or cultural biases, which could lead to discriminatory outcomes.

3. Performance Issues

As LLMs are computationally intensive, applications that rely on these models can experience performance bottlenecks.

Issues like high latency, slow response times, or resource utilization spikes (CPU, GPU, or memory) can negatively affect user experience. These performance problems are often exacerbated when scaling LLM applications to handle large volumes of traffic or complex queries.

4. Cost and Token Usage

LLMs can be expensive to run, especially at scale. For applications that use cloud-based services like OpenAI or other AI providers, costs can skyrocket with high token usage.

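Token consumption refers to the number of tokens (small chunks of text, often word fragments rather than whole words or single characters) the model processes during inference, counting both the prompt and the generated output. Inefficient use of tokens, such as generating overly verbose responses or repeated queries, can quickly drive up operational costs.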
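
To see how verbosity affects spend, here is a back-of-the-envelope cost estimate. The per-token prices are invented for illustration and do not reflect any provider's actual pricing; many providers price input and output tokens differently, which is the only assumption the calculation relies on.

```python
# Hypothetical prices in USD per 1,000 tokens; check your provider's current pricing
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Estimated USD cost of one request under the prices above."""
    input_cost = (prompt_tokens / 1000) * PRICE_PER_1K["input"]
    output_cost = (completion_tokens / 1000) * PRICE_PER_1K["output"]
    return input_cost + output_cost


# Same prompt, two response lengths
concise = estimate_cost(prompt_tokens=400, completion_tokens=150)
verbose = estimate_cost(prompt_tokens=400, completion_tokens=900)
```

With these example prices, the verbose response costs several times as much as the concise one for the same prompt, and that difference is multiplied by every request you serve.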

5. Lack of Context or Memory

LLMs typically do not have persistent memory across different sessions, meaning they can forget previous interactions or context.

In applications where maintaining context is crucial (e.g., conversational agents or long-form content generation), this can lead to disjointed conversations or outputs that lack coherence.

Solutions like memory augmentation or embedding techniques can help mitigate this, but the challenge persists in some contexts.

6. Security and Privacy Concerns

When LLM applications are handling sensitive data, such as personal information or confidential business data, security and privacy become significant concerns.

If not properly secured, LLMs could inadvertently expose user data in their responses, violating privacy standards and regulations. Additionally, malicious actors may try to manipulate the model by crafting inputs that could lead to data leakage or model misuse.
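
One related precaution on the observability side is to redact obvious personal data before prompts and responses are written to logs. The sketch below uses two deliberately simple regular expressions. They are assumptions for illustration, will miss many formats and sometimes over-match, and are not a substitute for a proper PII detection process.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Loose pattern for phone-like digit runs; it can both over- and under-match
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


raw = "Contact jane.doe@example.com or call +1 (555) 010-9999 about order 1234."
safe = redact(raw)
```

The short order number is left alone because the phone pattern requires a longer run of digits and separators.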

7. Lack of Interpretability

LLMs, like many other deep learning models, often work as "black boxes." This means it can be difficult to understand why a model made a particular decision or generated a specific response.

This lack of interpretability can be problematic in high-stakes applications where understanding the reasoning behind decisions is crucial, such as in finance or legal tech.

8. Data Dependency

LLMs rely heavily on the data they were trained on. If the training data is outdated or limited, the model’s performance will be subpar.

In applications where real-time information is important (like news aggregation or market analysis), an outdated model may generate irrelevant or inaccurate results.

This issue can be mitigated by fine-tuning the model on specific, up-to-date datasets or by retrieving current information at query time with a RAG setup, but neither approach is always feasible at scale.

9. Handling Multi-turn Conversations

For applications like chatbots or virtual assistants, handling multi-turn conversations can be challenging. LLMs may struggle with keeping track of long conversations or switching between different topics.

Without the ability to retain the context across multiple exchanges, these systems can provide disjointed responses, making them less effective for complex interactions.
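
A common way to keep long conversations within a model's context limit is to send only the most recent turns that fit a token budget. The sketch below shows that idea. `rough_token_count` is a crude character-based estimate used as a stand-in for a real tokenizer, and the message format (dicts with `role` and `content`) is an assumption modeled on common chat APIs.

```python
from typing import Dict, List


def rough_token_count(text: str) -> int:
    """Crude estimate (about 4 characters per token); use a real tokenizer in practice."""
    return max(1, len(text) // 4)


def trim_history(messages: List[Dict[str, str]], max_tokens: int) -> List[Dict[str, str]]:
    """Keep system messages plus the most recent turns that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(rough_token_count(m["content"]) for m in system)
    kept: List[Dict[str, str]] = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = rough_token_count(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a support assistant."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
trimmed = trim_history(history, max_tokens=30)
```

In this example the oldest user message is dropped. Recording how many messages get dropped per request is a useful signal for spotting conversations that outgrow their context.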

10. Deployment and Integration Complexity

Deploying LLM applications and integrating them with other systems (such as databases, APIs, or external services) can be complex and error-prone.

Applications built using SDKs or frameworks like LangChain often require significant effort to ensure proper communication between the model and other components of the system. Misconfigurations or incorrect API calls can lead to system crashes, downtime, or unexpected behaviors.

Best Practices for Effective LLM Observability

- End-to-End Monitoring: Ensure that you’re tracking the entire lifecycle of the LLM, from input data to model output. This holistic approach allows you to catch issues early in the process.

- Integrate Tracing and Logging: Use distributed tracing and robust logging practices to capture detailed data on the flow of requests and model behaviors. This makes it easier to diagnose issues and track model performance over time.

- Use AI-Driven Observability Tools: Many observability platforms now incorporate machine learning to detect anomalies and predict potential issues. These tools can automatically flag deviations in model behavior and allow for faster intervention.

- Set Up Alerts: Use real-time alerts to monitor performance thresholds. When anomalies are detected, alerts should trigger specific actions to investigate and resolve issues promptly.

- Regular Model Audits: Conduct audits regularly, especially after model updates or retraining. This ensures the model’s performance remains consistent and that it continues to meet accuracy and quality standards.

Conclusion

LLM observability is essential for ensuring the performance, accuracy, security, and reliability of large language models. As these models grow more complex, observability helps you gain a deeper understanding of how they work, so you can monitor their performance and quickly address any issues.

Last9 makes this process easier by bringing together metrics, logs, and traces into one unified view. This integration allows teams to connect the dots across their systems, manage alerts more effectively, and simplify troubleshooting.

With Last9, you can get real-time insights that help optimize your model’s performance and minimize downtime, ensuring your LLMs are always running at their best.

Schedule a demo with us to learn more or try it for free!

FAQs

What is LLM observability?

LLM observability refers to the practice of monitoring and analyzing the performance, behavior, and health of large language models to ensure they function efficiently and accurately.

Why is LLM observability important?

It helps identify performance bottlenecks, model degradation, and issues like hallucinations or incorrect outputs. It ensures models are running efficiently, accurately, and securely.

What are the key components of LLM observability?

The key components include metrics (e.g., latency, error rates), traces (e.g., request journey analysis), and logs (e.g., event records) to monitor and diagnose issues.

How can I monitor LLM performance?

LLM performance can be monitored through metrics like response time, error rates, throughput, and resource utilization. Traces and logs help identify issues in real time.

What are the best practices for LLM observability?

Best practices include end-to-end monitoring, integrating tracing and logging, setting up real-time alerts, and regularly auditing the model’s performance to maintain accuracy and reliability.