Dec 6th, ‘22/6 min read

Prometheus vs InfluxDB

What are the differences between Prometheus and InfluxDB: use cases, challenges, advantages, and how you should go about choosing the right TSDB

Introduction

Metrics, logs, and traces are the three core pillars of end-to-end observability. Despite being essential for acquiring complete visibility into cloud-native architectures, end-to-end observability is still out of reach for many DevOps and SRE teams. This is due to various causes, all of which have tooling as their common denominator. This tooling difficulty must be solved for the log management market to realize its predicted expansion from $1.9 billion in 2020 to $4.1 billion by 2026, driven by the growing usage of hyperscale cloud providers and containerized microservices.

Fusing automation, observability, and intelligence into DevOps pipelines, metrics monitoring, and management boosts the visibility of DevOps and SRE teams into the software and raises the software's overall quality. While several ready-made options for metric monitoring exist, Prometheus and InfluxDB are the market leaders. This article examines these two popular monitoring solutions to uncover their distinctive use cases and frequent user difficulties.

Prometheus vs. InfluxDB: A quick intro

Prometheus, a powerful open-source monitoring tool, provides real-time metrics data. InfluxDB, a time-series database, efficiently stores and queries this data. They are robust monitoring stacks for modern applications but have a few limitations one should know, as we will see in this blog post.

What is Prometheus?

Prometheus is an open-source time series database for tracking and gathering metrics. Prometheus contains a user-defined multi-dimensional data model and a query language on multi-dimensional data called PromQL.

There have been three significant revisions to the Prometheus time series database. The initial version of Prometheus stored all time series data and label metadata in LevelDB. V2 fixed several issues with V1 by storing data separately for each time series and applying delta-of-delta compression. V3 went further by adding write-ahead logging and improved data block compaction.

What is InfluxDB?

InfluxDB is an open-source time series database written in the Go language. It can ingest hundreds of thousands of points per second. InfluxDB has gone through four key revisions, from version 0.9.0, which featured a LevelDB-based LSM tree scheme, to version 1.3, which features a scheme based on WAL, TSM, and TSI files.

Known Limitations

While exceptional for real-time monitoring and alerting, Prometheus struggles with long-term data storage due to its data retention limitations, which may not be ideal for users needing comprehensive historical data analysis.

InfluxDB, although highly capable of handling time-series data, doesn't have native support for high cardinality datasets, making it inefficient and costly when dealing with massive amounts of unique data points.

Prometheus and InfluxDB have their limitations regarding distributed computing: Prometheus lacks native support for clustering, making scaling more complex, and InfluxDB's clustering is available only in the Enterprise version, limiting scalability in the open-source version.

Limitations of Prometheus

Prometheus integrates easily with most existing infra components. It supports multi-dimensional data collection and querying, which is especially beneficial when monitoring microservices. Prometheus' effectiveness in metrics management is demonstrated by its natural inclusion in the Kubernetes infrastructure for monitoring. Despite its apparent effectiveness, Prometheus has the following drawbacks:

Scale

Prometheus has no Long Term Storage (LTS), and it is not designed to be scaled horizontally. This is a significant drawback, particularly for large-scale enterprise environments.

As the number of services running in your Kubernetes clusters grows, the clusters are scaled up, services run more replicas, and metrics usage increases. You must therefore monitor and manage more cluster metrics to ensure your containers run efficiently. This escalating usage strains your Prometheus servers.

Memory use in Prometheus is closely tied to the number of time series it stores, and as that number increases, OOM kills begin to occur. Raising resource quota limits helps in the short run but is ineffective in the long term, because at some point no pod can grow beyond the memory capacity of its node.
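To make the growth concrete, here is a rough back-of-the-envelope sketch. It assumes the worst case, in which every combination of label values actually occurs, so the series count for one metric is the product of the distinct values per label. The label names and counts are hypothetical.

```python
from math import prod


def series_count(label_cardinalities: dict[str, int]) -> int:
    """Worst-case number of time series for one metric.

    Assumes every combination of label values is observed, so the
    result is the product of the distinct-value counts per label.
    """
    return prod(label_cardinalities.values())


# Hypothetical label cardinalities for a single HTTP request counter.
labels = {"service": 50, "pod": 20, "endpoint": 30, "status_code": 5}
total = series_count(labels)
print(total)  # 150000

# Doubling the replicas per service doubles the series for this metric.
scaled = series_count({**labels, "pod": 40})
print(scaled)  # 300000
```

Real workloads rarely hit the full product, but the multiplicative shape is why adding one more label or more replicas can push a server toward its memory limit.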

There are workarounds for this problem, such as sharding metrics across several Prometheus servers with the help of third-party LTS solutions like Levitate, Thanos, or Cortex. However, these make an already complex cluster more complex, especially if you have a large number of metrics. In the end, this makes troubleshooting challenging.
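As an illustration of the sharding idea, the sketch below assigns scrape targets to Prometheus servers by hashing the target address and taking it modulo the number of shards. It is loosely modeled on hash-based sharding in general and is not the exact logic of any particular tool. The function names and target addresses are hypothetical.

```python
import hashlib


def shard_for(target: str, num_shards: int) -> int:
    """Map a target address to a shard index in [0, num_shards)."""
    digest = hashlib.md5(target.encode("utf-8")).digest()
    # Use the last 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[-8:], "big") % num_shards


def assign(targets: list[str], num_shards: int) -> dict[int, list[str]]:
    """Group targets by the shard (Prometheus server) that scrapes them."""
    shards: dict[int, list[str]] = {i: [] for i in range(num_shards)}
    for target in targets:
        shards[shard_for(target, num_shards)].append(target)
    return shards


# Hypothetical scrape targets.
targets = [f"10.0.0.{i}:9100" for i in range(1, 21)]
for shard, members in assign(targets, 3).items():
    print(shard, len(members))
```

Each server only scrapes its own slice, which spreads memory use. The trade-off is the one described above: a query that spans shards now needs an extra layer to stitch the results together.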

Polling

Because Prometheus uses a pull-based approach, every metric endpoint must be reachable by the Prometheus poller. This implies a more complex secure network configuration, which further complicates an already complex infrastructure.

Advanced database features

Prometheus does not support some database functions like stored procedures, query compilation, and concurrency control required for seamless monitoring and metric aggregation.

Limitations of InfluxDB

InfluxDB has two major limitations.

Cardinality and memory consumption

InfluxDB uses monolithic data storage, keeping indices and metric values in a single file. As a result, data consumes relatively more storage space, and high-cardinality data can cause problems.

Lack of robust alerting and visualization

InfluxDB does not have alerting and data visualization components, so it has to be integrated with a visualization tool like Grafana. High latency is another issue when it is integrated with Grafana, as user reviews have noted.

Quick comparison between Prometheus vs. InfluxDB

The similarities and differences between Prometheus and InfluxDB highlight their distinctive utility in various scenarios.

| Comparison | Prometheus | InfluxDB |
|---|---|---|
| Data Collection | Pull based | Push based |
| Compression algorithm | Delta-of-delta | Delta-of-delta |
| Integration | Buffer encoding with RESTful APIs | Snappy compressed protocol buffer encoding |
| Data Model | Basic unit is time series | Basic unit is shard unit |
| Data Types | Counter, Gauge, Summary, Histogram | Float, Integer, String, Boolean |
| Query Language | PromQL | InfluxQL |

Detailed breakdown Prometheus vs. InfluxDB

Following are comparisons and differences between the two monitoring solutions:

Data Collection

InfluxDB is a push-based system: an application must actively push data into InfluxDB. Three parameters are crucial when writing data into InfluxDB: the organization, the bucket, and an authentication token.

Prometheus, on the other hand, is a pull-based system. An application publishes its metrics at a certain endpoint, and Prometheus periodically fetches them from that target. The target could be a SQL Server, an API server, etc.
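The difference shows up in what the application produces. The sketch below renders the same measurement two ways: as an InfluxDB line protocol record that a client would push, and as a Prometheus text exposition line that an application would serve for scraping. The helper names are hypothetical, and escaping of special characters in names and tag values is left out for brevity.

```python
def to_line_protocol(measurement: str, tags: dict, fields: dict, ts_ns: int) -> str:
    """Render one point as InfluxDB line protocol (pushed by the client)."""

    def fmt(value):
        # bool must be checked before int, because bool is a subclass of int.
        if isinstance(value, bool):
            return "true" if value else "false"
        if isinstance(value, int):
            return f"{value}i"  # integers carry an "i" suffix
        if isinstance(value, float):
            return repr(value)
        return '"' + str(value).replace('"', '\\"') + '"'

    tag_part = "".join(f",{k}={v}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{k}={fmt(v)}" for k, v in fields.items())
    return f"{measurement}{tag_part} {field_part} {ts_ns}"


def to_exposition(metric: str, labels: dict, value) -> str:
    """Render one sample in Prometheus text format (served for scraping)."""
    label_part = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return f"{metric}{{{label_part}}} {value}"


print(to_line_protocol("cpu", {"host": "web-1"}, {"usage": 0.64}, 1670284800000000000))
# cpu,host=web-1 usage=0.64 1670284800000000000

print(to_exposition("cpu_usage_ratio", {"host": "web-1"}, 0.64))
# cpu_usage_ratio{host="web-1"} 0.64
```

In the push case the client decides when to send and attaches the timestamp. In the pull case the application only exposes current values, and the scraper decides when to collect them.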

Compression

Prometheus and InfluxDB compress timestamps using the delta-of-delta compression algorithm, similar to the one used by Facebook's Gorilla time-series database.
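A minimal sketch of the arithmetic behind delta-of-delta encoding follows. It shows only the transformation of timestamps into small integers. Real implementations then pack those values into a variable number of bits, which this sketch omits. The function names are illustrative.

```python
def delta_of_delta_encode(timestamps: list[int]) -> list[int]:
    """Keep the first timestamp, then store the change in delta for each step."""
    if not timestamps:
        return []
    encoded = [timestamps[0]]
    prev_delta = 0
    for prev, cur in zip(timestamps, timestamps[1:]):
        delta = cur - prev
        encoded.append(delta - prev_delta)
        prev_delta = delta
    return encoded


def delta_of_delta_decode(encoded: list[int]) -> list[int]:
    """Invert delta_of_delta_encode."""
    if not encoded:
        return []
    timestamps = [encoded[0]]
    delta = 0
    for dod in encoded[1:]:
        delta += dod
        timestamps.append(timestamps[-1] + delta)
    return timestamps


# Scrapes roughly every 15 seconds, with one sample arriving a second late.
ts = [1000, 1015, 1030, 1045, 1061]
enc = delta_of_delta_encode(ts)
print(enc)  # [1000, 15, 0, 0, 1]
print(delta_of_delta_decode(enc) == ts)  # True
```

Because samples usually arrive at a fixed interval, most encoded values are zero or close to it, which is what makes the subsequent bit packing effective.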

Integration

Prometheus uses protocol buffer encoding over HTTP and RESTful APIs for its read and write protocols when integrating with remote storage engines. InfluxDB, in turn, exposes HTTP, TCP, and UDP APIs using snappy-compressed protocol buffer encoding.

Data Model

Prometheus stores data as time series. A metric and a set of key-value labels define a time series. Prometheus supports the following data types: Counter, Gauge, Histogram, and Summary.
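As a toy illustration of this model, the sketch below treats the metric name plus the full set of labels as the identity of a series. It is not how Prometheus stores data internally. The class-free layout and the names are assumptions made for the example.

```python
from collections import defaultdict


def series_id(metric: str, labels: dict[str, str]) -> tuple:
    """Identity of a series: metric name plus its sorted key-value labels."""
    return (metric, tuple(sorted(labels.items())))


# Each series maps to a list of (timestamp, value) samples.
store: dict[tuple, list[tuple[int, float]]] = defaultdict(list)

store[series_id("http_requests_total", {"method": "GET", "code": "200"})].append((1000, 41.0))
store[series_id("http_requests_total", {"code": "200", "method": "GET"})].append((1015, 42.0))
store[series_id("http_requests_total", {"method": "POST", "code": "200"})].append((1000, 7.0))

# Label order does not matter, but any differing label value is a new series.
print(len(store))  # 2
```

This is also why label choice drives cardinality: every new label value creates a new key in the store.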

InfluxDB stores data in shard groups. A field's data type must stay the same within the scope of the same SeriesKey, the same field, and the same shard; otherwise, a type conflict error is reported when the data is written. InfluxDB supports the following data types: Float, Integer, String, and Boolean.
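The type rule can be pictured with a small toy model. This is not InfluxDB's implementation, only a sketch of the constraint described above, and the `Shard` class and `FieldTypeConflict` exception are hypothetical names.

```python
class FieldTypeConflict(Exception):
    """Raised when a field is written with a type that differs from its first write."""


class Shard:
    """Toy shard that remembers the type first seen for each (series key, field)."""

    def __init__(self) -> None:
        self._field_types: dict[tuple[str, str], type] = {}

    def write(self, series_key: str, field: str, value) -> None:
        key = (series_key, field)
        # The first write fixes the type for this key within this shard.
        expected = self._field_types.setdefault(key, type(value))
        if type(value) is not expected:
            raise FieldTypeConflict(
                f"{field} on {series_key}: expected {expected.__name__}, "
                f"got {type(value).__name__}"
            )


shard_a = Shard()
shard_a.write("cpu,host=web-1", "usage", 0.64)  # first write fixes the type as float

try:
    shard_a.write("cpu,host=web-1", "usage", "high")  # same key, different type
except FieldTypeConflict as err:
    print("rejected:", err)

# In this toy model a different shard tracks types separately, so the write is accepted.
shard_b = Shard()
shard_b.write("cpu,host=web-1", "usage", "high")
```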

Data storage

A time series database's storage engine should be able to directly scan data in a given timestamp range using a timeline, write time series data in large batches, and query all matching time series data in a given timestamp range indirectly using measurements and a few tags.

InfluxDB keeps indices and metric values in a monolithic data store, using a three-part scheme consisting of WAL, TSM, and TSI files. Series key data and time series data are kept separate and written into different WALs.

Even though both Prometheus and InfluxDB use key/value data stores, how these are implemented varies greatly between the two platforms. While InfluxDB stores indices and metrics in the same file, Prometheus uses LevelDB for its indices, and each metric is stored in its own file.

Query language

InfluxDB employs InfluxQL, which has an SQL-like syntax, while Prometheus uses PromQL for querying.

Scaling

Because InfluxDB's nodes are connected, there is no need to scale them independently. Prometheus' nodes are independent of one another, so each must be scaled on its own. This is the scaling problem already mentioned as one of the main issues with Prometheus.

How is Last9 different?

Levitate is Last9's managed Prometheus. A managed Prometheus solution removes the toil from your engineering teams and removes the unnecessary distractions of scale.

With Levitate, you can cut your storage expenditures in half thanks to its pay-as-you-go pricing. With its ability to process queries at any high cardinality and handle massive data scales, Levitate can also give you insights into which metrics aren't being used. Scaling, deduplication, duplication, or multiple Prometheus instances are not issues, as you can alter one or more remote-write endpoints of your TSDBs.

In another blog post, we compare all the popular time series databases (Prometheus, InfluxDB, M3DB) and Levitate. Go check it out.

Newsletter

Stay updated on the latest from Last9.

Handcrafted Related Posts

Why Service Level Objectives?

Understanding how to measure the health of your servcie, benefits of using SLOs, how to set compliances and much more...

Piyush Verma

Monitor Google Cloud Functions using Pushgateway and Levitate

How to monitor serverless async jobs from Google Cloud Functions with Prometheus Pushgateway and Levitate using the push model

Aniket Rao

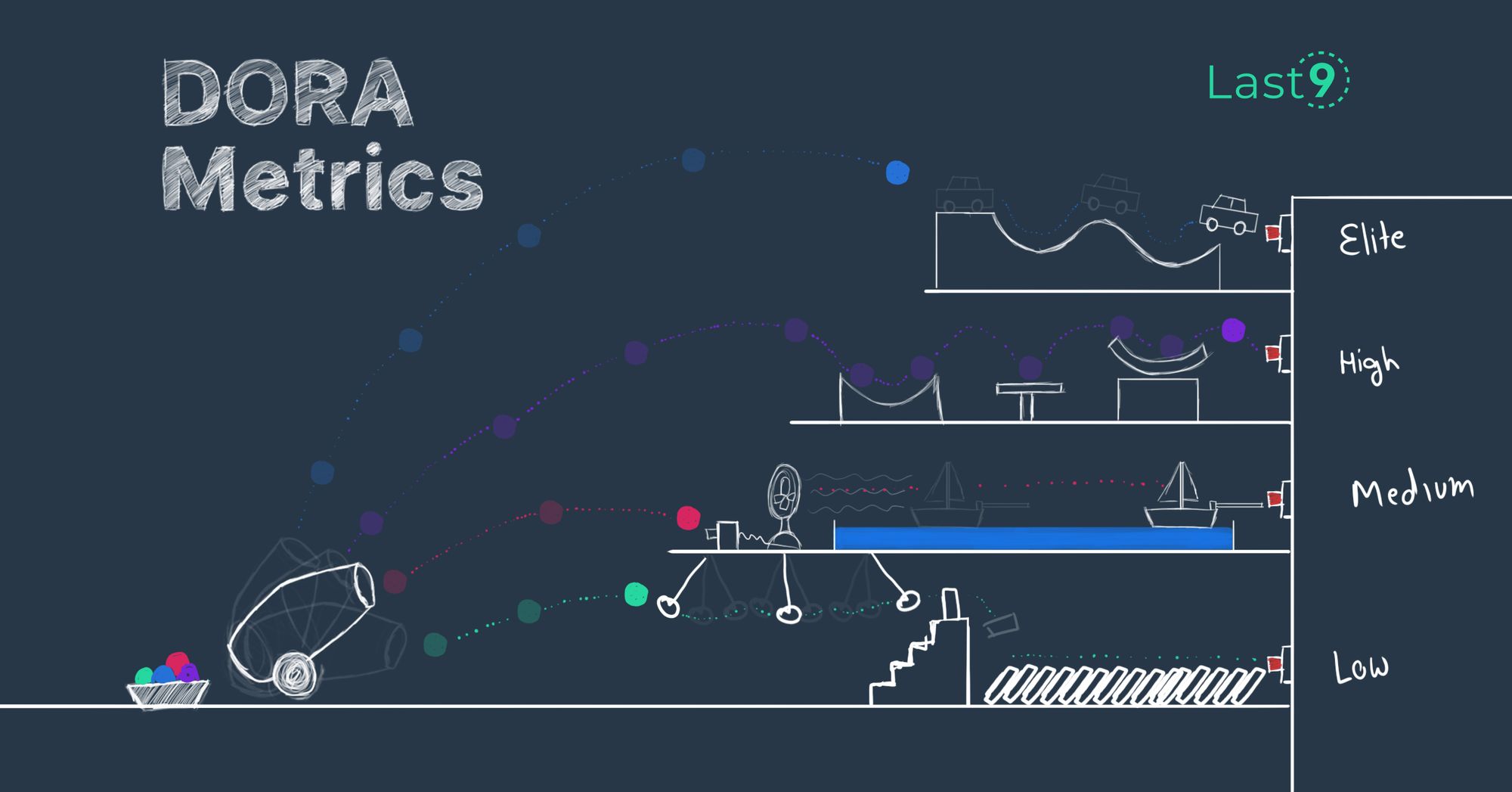

Introduction to DORA Metrics

DORA metrics, what they are, why they are important, and best practices for measuring them.

Prathamesh Sonpatki