Kafka has become a core technology in modern distributed systems, particularly when it comes to real-time data streaming. With organizations increasingly relying on Kafka to handle critical workloads, ensuring its performance and reliability has never been more important.

However, to keep track of Kafka's performance and quickly troubleshoot any issues, you need robust Kafka observability in place. But what exactly is Kafka observability, and why is it so essential?

What is Kafka Observability?

Kafka observability refers to the ability to monitor and gain insights into Kafka’s health, performance, and data flow.

With the right observability tools and metrics, organizations can detect bottlenecks, system failures, or any other issues that might arise in the Kafka cluster.

This helps in preventing downtime, ensuring efficient data flow, and optimizing Kafka's performance.

Three Primary Pillars of Kafka Observability

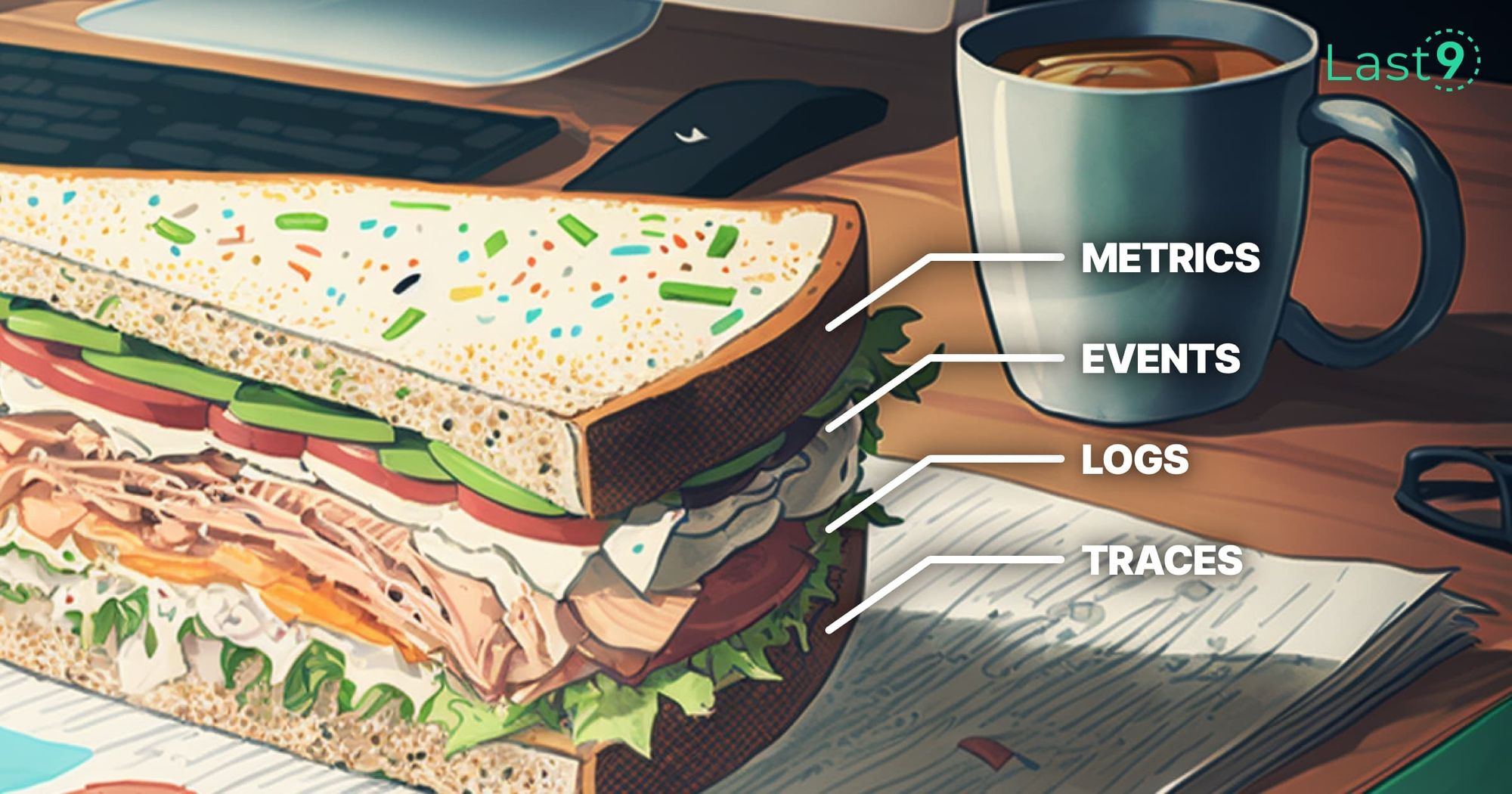

There are three primary pillars of observability:

1. Metrics

Metrics provide quantitative data about the Kafka system’s performance, such as throughput, latency, and resource usage.

2. Logs

Logs help track individual events or errors within Kafka processes, offering insights into what went wrong and when.

3. Traces

Traces track the flow of messages through Kafka, showing how data moves between producers, brokers, and consumers.

Together, these three signals help teams troubleshoot problems faster, catch performance degradation early, and keep Kafka reliable within the wider system architecture.

Why Kafka Observability is Crucial

Performance Monitoring

Kafka is designed for high throughput and scalability, but managing these factors effectively requires continuous monitoring. Observability helps track how well Kafka is handling your workloads.

For example, it can highlight any issues related to throughput, consumer lag, or partition imbalances. This insight allows you to optimize Kafka’s configuration to ensure maximum performance.

Improved Troubleshooting

Kafka runs as a distributed system, which means issues can emerge in different parts of the architecture.

With Kafka observability in place, a failing broker, a lagging consumer group, or a network issue can be identified quickly, which helps pinpoint the root cause.

Without this visibility, locating the source of an issue can be time-consuming, which delays its resolution.

Ensuring Data Consistency

Kafka is all about handling large volumes of real-time data, often involving critical operations like data streaming or event-driven architectures.

Any interruption can lead to data loss or inconsistency, which can have a significant impact on the system’s reliability.

Observability tools can monitor Kafka's data flow and alert teams when something goes wrong, so they can act before the system drifts into an inconsistent state.

Preventing Downtime

Downtime can be costly for any business, especially in systems relying on real-time data. Kafka observability helps detect issues such as broker crashes, replication failures, or consumer lag early.

Receiving alerts on performance degradation allows operators to prevent issues from escalating into system-wide outages.

Scalability and Growth

Kafka is often used to manage large-scale data streaming across multiple systems. As your system grows, so does the complexity of managing Kafka.

With observability in place, it becomes easier to scale your Kafka deployment by identifying which components require more resources or tuning. This proactive approach to growth ensures that Kafka continues to deliver consistent performance as your workloads increase.

Key Kafka Metrics to Monitor

Several important Kafka metrics should be monitored for optimal performance:

1. Throughput

Throughput measures how many messages Kafka is processing per second. This helps track how efficiently Kafka is handling incoming and outgoing data.
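
As a rough illustration, throughput can be derived from two readings of a cumulative message counter. The sketch below assumes you already have such readings from your metrics system; the `CounterSample` type and its field names are hypothetical and not part of any Kafka API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CounterSample:
    timestamp_s: float    # when the counter was read, in seconds
    total_messages: int   # cumulative number of messages seen so far


def messages_per_second(earlier: CounterSample, later: CounterSample) -> float:
    """Average message rate between two readings of a cumulative counter."""
    elapsed = later.timestamp_s - earlier.timestamp_s
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    delta = later.total_messages - earlier.total_messages
    if delta < 0:
        # The counter went backwards, which usually means it was reset
        # (for example after a restart). Count only what came after the reset.
        delta = later.total_messages
    return delta / elapsed


rate = messages_per_second(CounterSample(0.0, 1_000), CounterSample(10.0, 6_000))
print(f"{rate:.0f} messages/s")
```

Tracking the rate over time, rather than the raw counter, is what makes sudden drops or spikes visible.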

2. Consumer Lag

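Consumer lag measures how far a consumer group is behind the latest message written to a partition, usually expressed as the difference between the partition's latest offset and the group's committed offset.

Lag that keeps growing indicates that consumers cannot keep up with incoming data and points to a processing bottleneck.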
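
To make the definition concrete, here is a minimal sketch of the lag calculation. It assumes you have already fetched, per partition, the latest (log end) offset and the consumer group's committed offset; the function names and the dictionary layout are illustrative choices, not a Kafka client API.

```python
from typing import Dict, Optional


def partition_lag(
    log_end_offsets: Dict[int, int],
    committed_offsets: Dict[int, int],
) -> Dict[int, Optional[int]]:
    """Lag per partition: latest offset minus the group's committed offset.

    Partitions without a committed offset are reported as None (unknown)
    instead of guessing where the group would start reading.
    """
    lag: Dict[int, Optional[int]] = {}
    for partition, end_offset in log_end_offsets.items():
        committed = committed_offsets.get(partition)
        if committed is None:
            lag[partition] = None
        else:
            # Clamp at zero in case the two offsets were read at slightly
            # different moments.
            lag[partition] = max(end_offset - committed, 0)
    return lag


def total_lag(lag: Dict[int, Optional[int]]) -> int:
    """Sum of all known per-partition lags."""
    return sum(value for value in lag.values() if value is not None)


lag = partition_lag({0: 120, 1: 80, 2: 50}, {0: 100, 1: 80})
print(lag, total_lag(lag))
```

Per-partition values are worth keeping alongside the total, because a single stuck partition can hide behind a healthy-looking aggregate.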

3. Replication Factor

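The replication factor determines how many brokers hold a copy of each partition. The setting itself rarely changes, so what you monitor is whether replication is keeping up, for example through under-replicated partitions and the size of the in-sync replica (ISR) set.

A partition whose followers have fallen out of sync has fewer up-to-date copies than intended, which raises the risk of data loss or inconsistency if a broker fails.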
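
One common way to spot replication discrepancies is to compare each partition's assigned replicas with its in-sync replicas (ISR). The sketch below assumes that information has already been read from cluster metadata; the `PartitionState` type is hypothetical and exists only for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PartitionState:
    topic: str
    partition: int
    replicas: List[int]   # broker IDs assigned to hold a copy
    isr: List[int]        # broker IDs currently in sync with the leader


def under_replicated(partitions: List[PartitionState]) -> List[PartitionState]:
    """Partitions with fewer in-sync replicas than assigned replicas."""
    return [p for p in partitions if len(p.isr) < len(p.replicas)]


states = [
    PartitionState("orders", 0, replicas=[1, 2, 3], isr=[1, 2, 3]),
    PartitionState("orders", 1, replicas=[2, 3, 1], isr=[2]),
]
for p in under_replicated(states):
    print(f"{p.topic}-{p.partition}: {len(p.isr)}/{len(p.replicas)} replicas in sync")
```

A non-empty result that persists is usually a good alert candidate, since it means the cluster has less redundancy than the replication factor promises.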

4. Broker Metrics

These include disk usage, CPU load, and network throughput of Kafka brokers, all of which impact the performance of the cluster.

5. Partition Metrics

Monitoring partition leaders and replicas helps ensure that the data is properly balanced across the Kafka cluster and avoids single points of failure.
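
As an illustration of what "properly balanced" can mean, the sketch below counts partition leaders per broker and compares the busiest broker with the average. The input mapping and the ratio itself are assumptions made for this example; Kafka does not expose a metric by this name.

```python
from collections import Counter
from typing import Dict, List, Tuple


def leader_counts(leaders: Dict[Tuple[str, int], int]) -> Counter:
    """Count how many partitions each broker currently leads.

    `leaders` maps (topic, partition) to the leader's broker ID.
    """
    return Counter(leaders.values())


def imbalance_ratio(counts: Counter, broker_ids: List[int]) -> float:
    """Leaders on the busiest broker divided by the average per broker.

    1.0 means leadership is evenly spread; larger values mean more skew.
    """
    per_broker = [counts.get(broker, 0) for broker in broker_ids]
    if not per_broker:
        return 0.0
    mean = sum(per_broker) / len(per_broker)
    return max(per_broker) / mean if mean else 0.0


leaders = {("orders", 0): 1, ("orders", 1): 1, ("orders", 2): 2, ("orders", 3): 1}
counts = leader_counts(leaders)
print(dict(counts), round(imbalance_ratio(counts, [1, 2, 3]), 2))
```

Passing the full broker list matters: a broker that leads nothing does not appear in the counts, yet it is exactly the sign of imbalance you want to catch.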

Popular Observability Tools and Platforms

When it comes to observability, there’s no one-size-fits-all solution.

Depending on your requirements—whether you’re looking for open-source tools, tools with OTLP (OpenTelemetry Protocol) support, or platforms that don’t natively use OTLP—there are several great options out there.

Here’s a look at some of the most popular ones:

1. Prometheus (Open-Source)

Prometheus is a powerful, open-source monitoring and alerting toolkit widely used for collecting and querying time-series data. It’s particularly strong when it comes to metrics collection, making it a go-to choice for observability in cloud-native environments.

Prometheus can collect metrics from Kafka and from other services in a microservices architecture. It is built around its own pull-based scrape model and query language (PromQL), so OTLP is not its primary ingestion path, although recent versions can also accept OTLP metrics.

Why it’s great:

- Open-source and highly flexible.

- Excellent integration with Kubernetes and cloud-native systems.

- Large ecosystem with support for exporting metrics to other observability tools.

Limitations:

- Lacks native support for traces and logs.

- Can become difficult to scale for large, complex systems.
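
To give a feel for the pull-based model, the sketch below renders a gauge in the plain-text format that Prometheus scrapes from a target's metrics endpoint. The metric name `kafka_consumer_group_lag` and its labels are made up for this example; in practice an exporter or client library would normally produce this output for you.

```python
from typing import Dict, List, Tuple


def escape_label_value(value: str) -> str:
    """Escape backslashes, double quotes, and newlines in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(
    name: str,
    help_text: str,
    samples: List[Tuple[Dict[str, str], float]],
) -> str:
    """Render one gauge metric in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        label_text = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_text}}} {value}")
    return "\n".join(lines) + "\n"


print(
    render_gauge(
        "kafka_consumer_group_lag",  # hypothetical metric name
        "Messages the group is behind.",
        [({"group": "billing", "topic": "orders", "partition": "0"}, 20)],
    )
)
```

Prometheus then fetches this text on a schedule, which is why each service only needs to expose its current values rather than push them anywhere.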

2. Grafana (Open-Source)

Grafana is a popular open-source visualization tool often used alongside Prometheus. It allows teams to create highly customizable dashboards to monitor the health and performance of their systems.

While Grafana itself does not handle metrics collection, it excels at visualizing data from Prometheus, InfluxDB, Elasticsearch, and other sources. Grafana can integrate with OpenTelemetry for traces, but it does not natively collect data via OTLP.

Why it’s great:

- Highly customizable dashboards.

- Supports multiple data sources and integrates well with Prometheus.

- Open-source with a large user community.

Limitations:

- Needs integration with other tools for full observability (e.g., Prometheus for metrics, Elastic for logs).

3. Last9 (Cloud-Native, OTLP Support)

Last9 is a cloud-native observability platform designed specifically to simplify the monitoring of distributed systems, including microservices, Kubernetes, and tools like Prometheus and OpenTelemetry.

It integrates logs, metrics, and traces into a unified view, making it easy to track and troubleshoot system performance.

Last9 supports OTLP, allowing easy integration with OpenTelemetry, and offers insights into performance issues, error patterns, and system health in real time.

Why it’s great:

- Unified observability for metrics, logs, and traces.

- Designed for complex, distributed environments.

- Full OTLP support, simplifying OpenTelemetry integration.

- Special focus on handling high-cardinality data, simplifying troubleshooting, and improving alert management.

Limitations:

- It may not be suitable for teams looking for a purely open-source solution.

4. Elasticsearch, Logstash, and Kibana (ELK Stack)

The ELK Stack (Elasticsearch, Logstash, and Kibana) is a popular combination for managing logs, with Elasticsearch handling storage and querying, Logstash processing log data, and Kibana visualizing it.

While it does not natively support OTLP, OpenTelemetry can be configured to export logs and traces to Elasticsearch for analysis. ELK is particularly powerful when it comes to aggregating, searching, and visualizing log data at scale.

Why it’s great:

- Excellent for managing and visualizing logs.

- Scalable and fast search capabilities via Elasticsearch.

- Open-source with active community support.

Limitations:

- Setting up and maintaining ELK can be resource-intensive.

- Does not offer full observability (metrics, traces) out-of-the-box.

5. Datadog (Commercial)

Datadog is a cloud-based observability platform that provides full-stack monitoring, including metrics, traces, and logs. Datadog supports OTLP natively, making it easy to collect data from OpenTelemetry-enabled applications.

It offers robust features for monitoring distributed systems, including integration with Kafka, Kubernetes, and cloud-native environments.

Why it’s great:

- Full-stack observability with metrics, logs, and traces in one platform.

- Easy to use and highly scalable.

- Native OTLP support for seamless OpenTelemetry integration.

Limitations:

- It’s a paid platform, which might be a barrier for smaller teams or businesses.

- The cost can grow significantly with scale.

The Role of Telemetry in Observability: Correlating Metrics, Traces, and Logs

In modern software systems, especially distributed ones, understanding how applications behave and diagnosing issues can be a challenge. Telemetry plays a pivotal role in observability by providing the data needed to monitor, analyze, and optimize system performance.

Collecting and correlating metrics, traces, and logs gives teams a clearer picture of their systems, helping them detect issues early and troubleshoot more effectively.

Let’s understand how telemetry works and how correlating these data types enhances system monitoring.

1. Telemetry Components: Metrics, Traces, and Logs

Before diving into correlation, let’s break down the key data types in telemetry:

- Metrics: These are numerical measurements that offer a snapshot of your system’s health and performance. Examples include response times, throughput, error rates, and resource utilization (like CPU or memory usage).

- Traces: Traces capture the flow of a request as it moves through different services or components. They show how long each step takes, helping to identify bottlenecks or failures along the way.

- Logs: Logs are records of events and messages generated by different components of your system. They often provide more detailed information than metrics or traces, such as error messages or system states at specific times.

While each of these components is important, the real value comes when they are correlated to give a fuller understanding of system behavior.

2. How Correlating Metrics, Traces, and Logs Improves Monitoring

Here's how these data types work together:

- Metrics + Traces = Better Performance Insight

Metrics provide an overall view of system health, but traces allow you to drill down into individual requests.

For instance, if you see a spike in latency in your metrics, traces help you identify where the problem occurred—whether it’s a specific microservice or a database query causing the delay. This correlation helps you pinpoint the root cause faster.

- Logs + Traces = More Effective Troubleshooting

Logs offer detailed insights into events at the individual service level, which can be critical for troubleshooting.

When correlated with traces, logs provide the context of what happened at each step of a transaction.

For example, if a trace shows a performance bottleneck, logs can reveal specific error messages or failed operations, making it easier to understand the issue and resolve it.

- Metrics + Logs = Faster Root Cause Analysis

Metrics can alert you to abnormal behavior, such as increased error rates or longer response times. However, logs provide the detailed information needed to diagnose the root cause.

For example, if error rates spike from a specific service, logs can tell you if the error was due to a timeout, misconfiguration, or failure in a downstream component. Combining these data types helps you confirm whether the issue is performance-related or something else.
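
The correlations described above usually hinge on a shared identifier. The sketch below assumes that both spans and log records carry a `trace_id` field, and uses plain dictionaries with hypothetical field names to show how slow spans can be joined with their logs.

```python
from collections import defaultdict
from typing import Dict, List


def logs_for_slow_spans(
    spans: List[dict],
    logs: List[dict],
    threshold_ms: float,
) -> Dict[str, List[str]]:
    """Return the log messages attached to every trace that has a slow span."""
    # Index logs by trace ID once so each lookup is cheap.
    logs_by_trace: Dict[str, List[str]] = defaultdict(list)
    for record in logs:
        logs_by_trace[record["trace_id"]].append(record["message"])

    return {
        span["trace_id"]: logs_by_trace.get(span["trace_id"], [])
        for span in spans
        if span["duration_ms"] > threshold_ms
    }


spans = [
    {"trace_id": "a", "duration_ms": 900},
    {"trace_id": "b", "duration_ms": 40},
]
logs = [
    {"trace_id": "a", "message": "db query timed out"},
    {"trace_id": "b", "message": "request handled"},
]
print(logs_for_slow_spans(spans, logs, threshold_ms=500))
```

Observability platforms perform this kind of join for you, but it only works if the identifier is written into every log line in the first place.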

3. Practical Examples of Correlating Telemetry Data

Here are a few real-world examples of how correlating telemetry data can help:

- Identifying Latency Issues

If your metrics show increasing latency, you can use traces to follow the journey of requests and pinpoint where the delay happens (e.g., a specific service or database). Logs then provide the fine details, like a slow database query, enabling you to fix the issue quickly.

- Investigating Service Failures

When a service starts failing, metrics will show an uptick in error rates. Traces can help you follow the affected requests, while logs can give you specific error details (e.g., an exception or stack trace), helping you understand why the service failed.

- Resource Utilization Monitoring

Metrics help you monitor resource usage (CPU, memory, network traffic). If resource utilization spikes, traces can identify which services or requests are consuming excessive resources. If needed, logs can provide further insights, such as memory leaks or inefficient queries, pointing you toward the root cause.

Kafka Observability Challenges

While Kafka is essential for managing real-time data streams, its observability can be challenging:

- Volume of Data: Kafka generates massive amounts of metrics, logs, and traces. Managing this data efficiently demands robust tools and strategies for collection, storage, and analysis.

- Complexity of Distributed Systems: Kafka operates in a distributed environment, making it tough to track issues across multiple components without the right observability tools.

- Scalability of Observability Tools: As Kafka scales, your observability tools need to keep up with the growing load of data collection and analysis. Choosing and configuring the right solutions is key to handling this challenge.

Improving Collaboration and Visibility in Kafka Streams

In distributed systems, Kafka is often the backbone for real-time data streaming.

However, as data flows through Kafka streams, it can become difficult to maintain visibility and traceability—especially when debugging issues or collaborating across teams.

To make Kafka streams more understandable and collaborative, here are a few ways to add context and improve traceability:

1. Utilize Distributed Tracing with OpenTelemetry

Integrating distributed tracing with OpenTelemetry provides valuable context by allowing you to trace the flow of data across different services.

Using trace IDs and adding metadata to Kafka messages allows you to track each event’s path from origin to destination.

This gives teams the ability to track message flow, understand dependencies, and spot bottlenecks or failures faster.

How it works:

- Kafka producers inject the trace context, including the trace ID, into the headers of each message as it is produced.

- Consumers extract that context and continue the same trace as they process the message, enabling full visibility of how data moves between producers, Kafka, and consumers.

Viewing these traces in tools like Grafana or Jaeger enables teams to visualize a message’s entire journey, helping identify the component or service causing delays or errors.
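
The mechanism can be sketched without any tracing library. The example below builds a W3C `traceparent` value and carries it in message headers, assuming headers are represented as a list of `(key, bytes)` pairs, which is how several Python Kafka clients model them. The helper names are hypothetical; in a real setup, OpenTelemetry's instrumentation would normally handle injection and extraction for you.

```python
import secrets
from typing import List, Optional, Tuple

Headers = List[Tuple[str, bytes]]
TRACEPARENT = "traceparent"


def new_traceparent() -> str:
    """Build a W3C traceparent value: version-traceid-spanid-flags."""
    trace_id = secrets.token_hex(16)  # 32 hex characters
    span_id = secrets.token_hex(8)    # 16 hex characters
    return f"00-{trace_id}-{span_id}-01"


def inject(headers: Headers, traceparent: str) -> Headers:
    """Producer side: return headers with the trace context set."""
    kept = [(key, value) for key, value in headers if key != TRACEPARENT]
    return kept + [(TRACEPARENT, traceparent.encode("ascii"))]


def extract_trace_id(headers: Headers) -> Optional[str]:
    """Consumer side: read the trace ID back out, or None if absent."""
    for key, value in headers:
        if key == TRACEPARENT:
            parts = value.decode("ascii").split("-")
            if len(parts) == 4 and len(parts[1]) == 32:
                return parts[1]
    return None


outgoing = inject([("content-type", b"application/json")], new_traceparent())
print(extract_trace_id(outgoing))
```

Keeping the context in headers rather than in the payload means tracing can be added without changing the message schema.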

2. Enrich Kafka Messages with Contextual Metadata

Another way to enhance collaboration is by enriching Kafka messages with additional contextual metadata. This could include:

- Message type: Indicates what kind of data the message represents (e.g., an order, user activity, or payment).

- Business-specific identifiers: Add unique business identifiers like user IDs, order numbers, or session IDs.

- Event timestamps: Include precise timestamps of when the event occurred, which can help correlate with other logs or metrics.

- Source system information: Identify which system or microservice produced the event to improve debugging.

Enriching messages with context not only helps the team understand the flow of data, but also improves the ability to search, filter, and analyze streams in tools like Elasticsearch or Grafana.
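
One possible shape for such an enriched message is a small envelope around the business payload. The sketch below uses only the fields listed above; the `EventEnvelope` type, its field names, and the JSON encoding are assumptions for illustration rather than a standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class EventEnvelope:
    message_type: str    # e.g. "order", "payment"
    business_id: str     # e.g. an order number or user ID
    event_time: str      # ISO 8601 timestamp of when the event occurred
    source_system: str   # which service produced the event
    payload: dict        # the actual business data


def build_event(
    message_type: str,
    business_id: str,
    source_system: str,
    payload: dict,
    event_time: Optional[datetime] = None,
) -> EventEnvelope:
    """Wrap a payload with contextual metadata; defaults to the current UTC time."""
    when = event_time or datetime.now(timezone.utc)
    return EventEnvelope(message_type, business_id, when.isoformat(), source_system, payload)


def serialize(event: EventEnvelope) -> bytes:
    """Encode the envelope as UTF-8 JSON, ready to use as a message value."""
    return json.dumps(asdict(event), sort_keys=True).encode("utf-8")


event = build_event("order", "order-1042", "checkout-service", {"total": 59.9})
print(serialize(event).decode("utf-8"))
```

Agreeing on one envelope across teams is what makes the metadata searchable, since every consumer and dashboard can rely on the same field names.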

3. Integrate Metrics and Alerts into the Kafka Flow

In addition to logs and traces, metrics provide another layer of context that can enhance collaboration.

Monitoring key metrics like consumer lag, message processing times, and throughput helps teams gain valuable insights into the health of Kafka streams.

You can create real-time alerts that notify team members of potential issues, allowing them to act before problems escalate.

Tools:

- Integrating Kafka with monitoring tools like Prometheus or Datadog can help track key performance metrics in real time.

- Tools like Grafana can be used to visualize the health of Kafka streams alongside other microservices.
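
Alerting tools typically let you express rules such as "lag above a threshold for a sustained period". As a hedged sketch of that logic, the function below fires only when the most recent samples all exceed the threshold; the function name and the sample-count approach are illustrative and not tied to any particular tool.

```python
from typing import Sequence


def should_alert(lag_samples: Sequence[int], threshold: int, consecutive: int) -> bool:
    """True when the last `consecutive` lag samples all exceed `threshold`.

    Requiring several samples in a row avoids alerting on short spikes
    that the consumers recover from on their own.
    """
    if consecutive <= 0:
        raise ValueError("consecutive must be a positive integer")
    recent = lag_samples[-consecutive:]
    return len(recent) == consecutive and all(sample > threshold for sample in recent)


print(should_alert([10, 1200, 1500, 1800], threshold=1000, consecutive=3))
print(should_alert([10, 1200, 40, 1800], threshold=1000, consecutive=3))
```

The threshold and window are worth tuning per consumer group, since a batch-oriented consumer may carry a large but harmless lag by design.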

4. Cross-Team Dashboards for Collaboration

Having centralized, cross-team dashboards that visualize Kafka streams alongside logs, traces, and metrics can significantly improve collaboration.

Tools like Grafana and Kibana enable teams to create custom dashboards that combine data from various sources, such as Kafka, databases, and microservices.

This gives teams a unified view of the system's health and data flow, making it easier for them to collaborate and troubleshoot issues in real-time.

5. Document Kafka Streams and Data Pipelines

Maintaining comprehensive documentation of Kafka streams and data pipelines is essential for fostering collaboration. This includes documenting:

- The purpose of each Kafka topic and the structure of the messages within them.

- The lifecycle of a message: where it originates, how it is processed, and where it ends up.

- Dependencies between different microservices or systems that interact with Kafka.

Documentation ensures that all team members are on the same page and helps onboard new members quickly, reducing misunderstandings and promoting better communication.

Conclusion

Kafka is an incredibly powerful tool for managing real-time data streams, but its complexity requires a proactive approach to monitoring and troubleshooting.

Kafka observability isn’t just a nice-to-have; it’s critical for maintaining high performance, reliability, and scalability in your infrastructure.

FAQs

What is Kafka observability?

Kafka observability refers to the practice of monitoring, tracking, and analyzing the performance and health of Kafka systems by collecting and correlating metrics, logs, and traces. It helps teams quickly detect issues and ensure reliable performance in distributed systems.

Why is Kafka observability important for distributed systems?

In distributed systems, Kafka acts as the backbone for real-time data streaming, making it crucial to monitor its performance. Kafka observability allows teams to troubleshoot issues faster, identify bottlenecks, and maintain system reliability and scalability.

What are the challenges of Kafka observability?

The key challenges include managing the vast volume of data generated by Kafka, tracking issues across multiple distributed components, and ensuring observability tools can scale as Kafka expands. Efficient data collection, storage, and analysis strategies are necessary to address these challenges.

How can I correlate metrics, logs, and traces in Kafka?

Integrating distributed tracing (e.g., with OpenTelemetry) and enriching Kafka messages with metadata enables tracing the journey of messages across Kafka producers, brokers, and consumers. Combining metrics like consumer lag with logs and traces helps quickly identify performance issues and root causes.

What are the benefits of integrating metrics into Kafka observability?

Metrics like consumer lag, message processing time, and throughput provide real-time insights into Kafka's health and performance. These metrics, combined with logs and traces, enable teams to detect issues before they escalate into system-wide failures, ensuring smoother operations.

What tools are commonly used for Kafka observability?

Common tools for Kafka observability include OpenTelemetry, Prometheus for metrics, Grafana for visualization, Jaeger for distributed tracing, and Elasticsearch for log aggregation. These tools help provide end-to-end visibility into Kafka’s performance and aid in proactive troubleshooting.

How does enriching Kafka messages with contextual metadata improve observability?

Adding metadata to Kafka messages, like message types, event timestamps, and source system information, enhances the traceability of data. This allows teams to better understand message flow, identify bottlenecks, and correlate events with other system data for easier debugging and performance optimization.