Log shippers are essential components in modern infrastructure, serving as the critical connection between the systems that generate logs and the platforms that store and analyze them. They operate behind the scenes to ensure that important system and application information reaches its destination reliably.

This guide provides a comprehensive overview of log shippers, including their functionality, implementation considerations, and selection criteria for different environments.

What is a Log Shipper?

A log shipper is a software tool that collects log data from various sources and forwards it to a centralized location for storage, processing, and analysis.

It functions as a transport mechanism for application and system telemetry, moving the continuous stream of log data from origin points to destinations where analysis can occur.

Log shippers handle the crucial task of moving data from where it's generated (servers, containers, or applications) to where it can be analyzed (log management platforms). This automation eliminates the need for manual log collection, which would be impractical at scale.

The Log Shipping Architecture

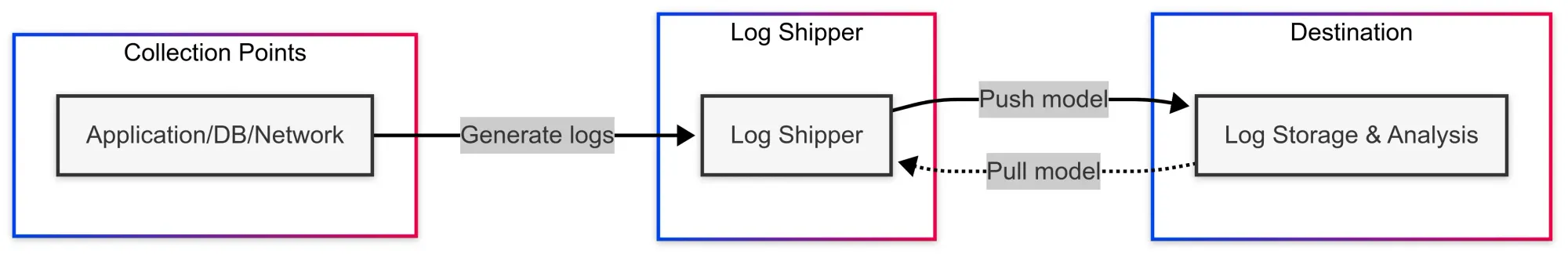

At its core, a log shipping architecture consists of three main components:

- Collection points - Where logs originate (application servers, databases, network devices)

- The log shipper itself - Software that transports the data

- Destination systems - Where logs are stored and analyzed (Elasticsearch, Splunk, Grafana Loki, etc.)

Most log shippers follow either a push or pull model:

- Push model: The shipper actively sends logs to the destination

- Pull model: The destination requests logs from the shipper

Modern architectures often involve multiple tiers, with local collectors feeding into aggregators, which then forward to the final destination. This approach helps with scaling and resilience.

How Log Shippers Work Under the Hood

Log shippers typically operate through these processes:

- Discovery: Finding log sources (files, sockets, APIs)

- Collection: Reading log data (tailing files, receiving over the network)

- Parsing: Converting raw logs into structured data

- Enrichment: Adding metadata (hostname, timestamps, tags)

- Buffering: Temporarily storing logs in memory or on disk

- Transmission: Sending logs to their destination

- Confirmation: Ensuring delivery was successful

Different log shippers implement these stages with varying levels of sophistication. Some focus on simplicity and speed, while others emphasize transformation capabilities and reliability.
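To make these stages concrete, here is a minimal Python sketch of a single-process pipeline. It is illustrative only and not how any particular shipper is built. The function names (`parse`, `enrich`, `ship`) and the `fake_send` destination are hypothetical, and transmission is simulated in memory rather than over the network.

```python
import json
import socket
from typing import Callable, Iterable


def parse(line: str) -> dict:
    """Parsing: turn a raw line into a structured event (JSON objects only)."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError:
        return {"message": line}
    return event if isinstance(event, dict) else {"message": line}


def enrich(event: dict, hostname: str) -> dict:
    """Enrichment: attach metadata the destination can filter on."""
    return {**event, "host": hostname}


def ship(lines: Iterable[str], send: Callable[[list], bool], batch_size: int = 2) -> int:
    """Collect, parse, enrich, buffer, and transmit; return the number of confirmed events.

    Unconfirmed batches are simply dropped here; a real shipper would retry them
    or persist them to disk.
    """
    hostname = socket.gethostname()
    buffer: list = []
    confirmed = 0
    for line in lines:                                  # Collection
        buffer.append(enrich(parse(line), hostname))    # Parsing + Enrichment
        if len(buffer) >= batch_size:                   # Buffering
            if send(buffer):                            # Transmission + Confirmation
                confirmed += len(buffer)
            buffer = []
    if buffer and send(buffer):                         # Flush the last partial batch
        confirmed += len(buffer)
    return confirmed


# Usage with a stand-in destination that accepts every batch
received: list = []


def fake_send(batch: list) -> bool:
    received.extend(batch)
    return True


print(ship(['{"level": "info", "msg": "started"}', "plain text line", '{"level": "error"}'], fake_send))  # 3
```

Keeping each stage as a separate function loosely mirrors the input, parser, filter, and output stages that shippers chain together, which is what makes it possible to change one stage without touching the others.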

Why Your Team Needs a Log Shipper

While it might be possible to access logs directly via SSH on individual servers, this approach becomes impractical in modern distributed environments.

Here are the key reasons why log shippers are essential components in production infrastructure:

Centralized Visibility

In environments with microservices spanning dozens or hundreds of containers, individual log inspection becomes inefficient. Log shippers consolidate this information into a central repository, providing complete visibility across the infrastructure.

With centralized logging via a log shipper, you can:

- Search across all systems simultaneously

- Correlate events that span multiple services

- Create unified dashboards showing your entire environment

- Apply consistent retention policies and access controls
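Correlation depends on every service emitting a shared identifier. Assuming each event carries a `trace_id` and an ISO 8601 `ts` field (both hypothetical field names), a central store can rebuild one request's path across services roughly like this Python sketch:

```python
from datetime import datetime

# Events from different services as they might land in a central store (made-up data)
events = [
    {"ts": "2024-05-01T12:00:00.300Z", "service": "payments", "trace_id": "t1", "msg": "card declined"},
    {"ts": "2024-05-01T12:00:00.100Z", "service": "gateway", "trace_id": "t1", "msg": "POST /checkout"},
    {"ts": "2024-05-01T12:00:00.200Z", "service": "checkout", "trace_id": "t1", "msg": "charging card"},
    {"ts": "2024-05-01T12:00:01.000Z", "service": "gateway", "trace_id": "t2", "msg": "GET /health"},
]


def parse_ts(ts: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp ending in 'Z'."""
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def timeline(events: list, trace_id: str) -> list:
    """Reassemble one request's path across services, ordered by timestamp."""
    matching = (e for e in events if e["trace_id"] == trace_id)
    return [f'{e["service"]}: {e["msg"]}' for e in sorted(matching, key=lambda e: parse_ts(e["ts"]))]


print(timeline(events, "t1"))
# ['gateway: POST /checkout', 'checkout: charging card', 'payments: card declined']
```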

Real-time Monitoring

Log shippers don't just collect data – they stream it in real time. This enables immediate issue detection, allowing teams to respond to problems as they occur rather than discovering them after they've impacted users.

Modern log shippers achieve this through:

- File watching with inotify or similar mechanisms

- Stateful tracking of file positions

- Incremental shipping of new log lines

- WebSocket or persistent TCP connections to destinations

- Sub-second detection of new log entries

The real-time nature enables proactive incident response rather than reactive troubleshooting after the fact.
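The position-tracking and incremental-read steps can be sketched in Python as below. This is a simplified, polling-based illustration under stated assumptions: it stores the byte offset in a hypothetical JSON state file (similar in spirit to Fluentd's `pos_file`), only emits complete lines, and starts over when the file shrinks. It does not use inotify and does not follow rename-based rotation; real shippers also track file identity, for example by inode or content fingerprint.

```python
import json
import tempfile
from pathlib import Path


def read_new_lines(log_path: Path, state_path: Path) -> list:
    """Return complete lines appended since the last call and checkpoint the byte offset."""
    offset = 0
    if state_path.exists():
        offset = json.loads(state_path.read_text()).get("offset", 0)
    if log_path.stat().st_size < offset:
        offset = 0  # file shrank (truncated in place): start again from the top
    with log_path.open("rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n") + 1  # stop after the last complete line
    state_path.write_text(json.dumps({"offset": offset + end}))
    return data[:end].decode("utf-8").splitlines()


with tempfile.TemporaryDirectory() as tmp:
    log, state = Path(tmp, "app.log"), Path(tmp, "app.log.pos")
    log.write_text("first\nsecond\n")
    print(read_new_lines(log, state))  # ['first', 'second']
    with log.open("a") as f:
        f.write("third\npartial")
    print(read_new_lines(log, state))  # ['third']  ("partial" waits for its newline)
```

Because the offset is persisted after every read, a restarted process resumes where it stopped instead of re-shipping or skipping lines.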

Reduced MTTR (Mean Time to Recovery)

When systems experience failures, log shippers facilitate faster root cause analysis. The capability to search through centralized, indexed data significantly reduces debugging time compared to manually reviewing fragmented logs distributed across multiple systems.

Technical factors that contribute to this include:

- Structured logging formats that are easier to query

- Consistent timestamp normalization across sources

- Addition of context metadata for filtering

- Full-text search capabilities at the destination

- Event correlation across distributed systems
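Timestamp normalization is easy to underestimate when sources disagree on format and time zone. The Python sketch below converts a few common formats to UTC ISO 8601. The format list is an assumption to adapt to your sources, and treating naive timestamps as UTC is a policy choice you may need to change.

```python
from datetime import datetime, timezone

# Formats seen across hypothetical sources; extend per environment
FORMATS = [
    "%Y-%m-%dT%H:%M:%S%z",   # ISO 8601 with offset, e.g. application JSON logs
    "%d/%b/%Y:%H:%M:%S %z",  # Apache/Nginx access-log style
    "%Y-%m-%d %H:%M:%S",     # naive timestamp without zone information
]


def normalize_ts(raw: str) -> str:
    """Convert a timestamp in any known format to UTC ISO 8601."""
    for fmt in FORMATS:
        try:
            dt = datetime.strptime(raw, fmt)
        except ValueError:
            continue
        if dt.tzinfo is None:
            dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive times are UTC
        return dt.astimezone(timezone.utc).isoformat()
    raise ValueError(f"unrecognized timestamp: {raw!r}")


for raw in ["2024-05-01T14:00:00+0200", "01/May/2024:12:00:00 +0000", "2024-05-01 12:00:00"]:
    print(normalize_ts(raw))  # each prints 2024-05-01T12:00:00+00:00
```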

Data Transformation

Modern log shippers don't just move data – they can transform it too. This includes parsing JSON, extracting fields, and redacting sensitive information. These transformations occur before logs reach their destination, improving downstream processing efficiency.

Common transformation capabilities include:

- Regex pattern extraction

- JSON and XML parsing

- Date/time normalization

- Conversion to a target schema

- Field dropping, renaming, and merging

- Conditional routing based on content

- Lookup enrichment from external sources
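Several of these transformations fit in a few lines. The Python sketch below assumes a hypothetical space-separated access-log layout and shows regex extraction, field renaming and dropping, type conversion, and content-based routing. Shippers express the same steps in their own configuration languages.

```python
import re

# Hypothetical access-log layout: "<client_ip> <method> <path> <status> <duration_ms>"
ACCESS_RE = re.compile(
    r"(?P<client_ip>\S+) (?P<method>[A-Z]+) (?P<path>\S+) (?P<status>\d{3}) (?P<duration_ms>\d+)$"
)
RENAMES = {"duration_ms": "latency_ms"}  # field renaming
DROPPED = {"method"}                     # field dropping


def transform(line: str) -> tuple:
    """Extract fields with a regex, rename/drop fields, and choose a route by content."""
    match = ACCESS_RE.match(line)
    if match is None:
        return "unparsed", {"message": line}
    event = {RENAMES.get(k, k): v for k, v in match.groupdict().items() if k not in DROPPED}
    event["status"] = int(event["status"])
    event["latency_ms"] = int(event["latency_ms"])
    route = "errors" if event["status"] >= 500 else "default"  # conditional routing
    return route, event


print(transform("10.0.0.7 GET /api/orders 503 87"))
# ('errors', {'client_ip': '10.0.0.7', 'path': '/api/orders', 'status': 503, 'latency_ms': 87})
```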

Resource Efficiency

Log shipping isn't just about convenience – it's also about efficiency:

- Network optimization: Through batching, compression, and protocol efficiency

- Storage reduction: Via filtering, sampling, and aggregation

- Query performance: Through pre-processing and indexing preparation

- Administrative overhead: By automating log management tasks

All of these translate to both cost savings and performance improvements across your observability stack.
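Batching and compression work together: one request carries many events, and repetitive log text compresses well. The Python sketch below serializes a batch as newline-delimited JSON and gzips it. The event shape is made up, and real compression ratios depend entirely on your data.

```python
import gzip
import json


def encode_batch(events: list) -> bytes:
    """Serialize a batch as newline-delimited JSON and gzip it into one request body."""
    ndjson = "".join(json.dumps(e, separators=(",", ":")) + "\n" for e in events)
    return gzip.compress(ndjson.encode("utf-8"))


events = [{"service": "checkout", "level": "info", "message": f"order {i} processed"} for i in range(1000)]
raw = sum(len(json.dumps(e, separators=(",", ":"))) + 1 for e in events)  # uncompressed bytes
compressed = len(encode_batch(events))
print(f"{raw} bytes raw -> {compressed} bytes gzipped ({compressed / raw:.0%} of original)")
```

Sending this as one request instead of 1,000 also removes per-request overhead (headers, round trips), which is often as significant as the byte savings.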

Types of Log Shippers

Not all log shippers are created equal. Here's a rundown of the main types you'll encounter:

Agent-based Log Shippers

These run as lightweight processes on servers or containers. They operate continuously on each machine, monitoring for new log entries and transmitting them to central destinations.

Pros:

- Low latency (typically <1 second from generation to shipping)

- Works even when connectivity is intermittent (thanks to local buffering)

- Can process logs before sending them (reducing backend processing)

- Access to local context (hostname, environment variables, file metadata)

- Can use filesystem events for efficient log detection

Cons:

- Requires installation and management on each source

- Consumes some system resources (typically 5-100MB RAM depending on volume)

- Requires update/upgrade management across your fleet

- Security implications of having another service running

- Configuration drift problems at scale

Best for:

- Production environments where reliability is critical

- Systems generating high-volume logs

- Environments where preprocessing is needed

- Situations where network connectivity might be unreliable

Agentless Log Shippers

These collect logs remotely without needing software installed on each source.

Pros:

- No need to install agents everywhere

- Lower maintenance overhead

- No resource consumption on source systems

- Centralized configuration management

- Reduced attack surface on your servers

Cons:

- Generally higher latency (polling intervals typically 10+ seconds)

- Requires continuous network connectivity

- Limited pre-processing capabilities

- Typically requires authentication credentials to access remote systems

- Less insight into the local system context

Best for:

- Network devices and appliances

- Legacy systems where installing agents is difficult

- Development environments where convenience trumps performance

- Smaller deployments with fewer sources

Sidecar-based Log Shippers

Common in Kubernetes environments, these run alongside your application containers.

Pros:

- Isolated from the application (separate container)

- Can be deployed and scaled with your application (via pod definition)

- Great for microservices architectures

- Tailored configuration per application

- Failure isolation (one sidecar crash doesn't affect others)

Cons:

- Increases pod resource consumption

- More complex configuration (requires understanding of container orchestration)

- Additional network hops in some implementations

- Potential performance impact on application pods

- More complex lifecycle management

Best for:

- Kubernetes and container orchestration environments

- Microservices architectures

- Multi-tenant clusters with different logging requirements

- Organizations with mature container practices

Hybrid Approaches

Many modern environments use combinations of these approaches:

- Agent-based collectors feeding into centralized aggregators

- Sidecar shippers with agent-based node-level aggregation

- Push and pull models working in tandem

These hybrid architectures can optimize for specific constraints in different parts of your infrastructure.

Popular Log Shipper Tools Comparison

| Log Shipper | Type | Best For | Performance | Flexibility | Learning Curve | Language | Creation Date | Distinguishing Features |
|---|---|---|---|---|---|---|---|---|
| Fluentd | Agent | Kubernetes, cloud-native | Medium-High | High | Medium | Ruby/C | 2011 | Over 500 plugins, CNCF graduated project |
| Fluent Bit | Agent | Edge computing, IoT | Very High | Medium | Low-Medium | C | 2015 | Extremely lightweight (1/10th of Fluentd memory usage) |
| Logstash | Agent | Complex processing | Medium | Very High | Medium-High | JRuby | 2009 | Rich expression language, deep Elastic stack integration |
| Vector | Agent | High-performance needs | High | High | Medium | Rust | 2019 | Data-oriented pipeline model, observability built-in |
| Filebeat | Agent | Simple file shipping | High | Low | Low | Go | 2015 | Lightweight, easy setup, Elastic stack integration |
| Promtail | Agent | Grafana Loki users | High | Medium | Low | Go | 2018 | Label-based routing, works seamlessly with Loki |
| rsyslog | Agent | Unix/Linux systems | Very High | Medium | Medium | C | 2004 | Extremely battle-tested, high throughput |
| syslog-ng | Agent | Enterprise environments | High | High | Medium-High | C | 1998 | Advanced filtering, wide protocol support |
| NXLog | Agent | Windows environments | High | High | Medium | C | 2010 | Excellent Windows Event Log support |
| Cribl Stream | Aggregator | Large enterprises | Very High | Very High | High | C++/JS | 2017 | Advanced preprocessing, routing, sampling |


Fluentd In-depth

Fluentd deserves special attention as one of the most widely adopted log shippers in cloud-native environments.

Architecture:

- Input plugins collect logs from various sources

- Parser plugins convert logs into structured data

- Filter plugins modify or drop events

- Output plugins send data to destinations

- Buffer plugins provide reliability between stages

Configuration Example:

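```
# Tail container log files and remember read positions in pos_file
<source>
  @type tail
  path /var/log/containers/*.log
  pos_file /var/log/fluentd-containers.log.pos
  tag kubernetes.*
  read_from_head true
  <parse>
    @type json
  </parse>
</source>

# Attach pod and namespace metadata from the Kubernetes API
<filter kubernetes.**>
  @type kubernetes_metadata
  kubernetes_url https://kubernetes.default.svc
  bearer_token_file /var/run/secrets/kubernetes.io/serviceaccount/token
  ca_file /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
</filter>

# Send everything to Elasticsearch using daily logstash-* indices
<match **>
  @type elasticsearch
  host elasticsearch-logging
  port 9200
  logstash_format true
</match>
```

Performance Characteristics (rough figures; actual results vary widely with plugins, configuration, and workload):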

- Memory usage: ~100MB base + ~40MB per 1,000 events/second

- CPU usage: Moderate, Ruby interpreter overhead

- Throughput: Up to 10,000 events/second per node with default config

- Latency: Typically 1-3 seconds end-to-end

Vector Deep Dive

Vector represents the newer generation of log shippers, built with performance as a primary goal.

Architecture:

- Sources receive data

- Transforms modify data

- Sinks send data to destinations

- Each component runs in parallel with controlled topology

Configuration Example:

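```toml
# Collect logs from selected Docker containers
[sources.docker_logs]
type = "docker_logs"
include_images = ["app", "db", "cache"]

# Parse each message as JSON and make it the event body
[transforms.parse_json]
type = "remap"
inputs = ["docker_logs"]
source = '''
. = parse_json!(string!(.message))
'''

# Write to daily indices in Elasticsearch
[sinks.elasticsearch]
type = "elasticsearch"
inputs = ["parse_json"]
endpoints = ["http://elasticsearch:9200"]
bulk.index = "vector-%Y-%m-%d"
```

Performance Characteristics (rough figures; actual results vary with configuration and workload):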

- Memory usage: ~20MB base + ~10MB per 10,000 events/second

- CPU usage: Low, Rust efficiency

- Throughput: Up to 100,000+ events/second per node

- Latency: Sub-second end-to-end

Key Features to Look For in a Log Shipper

When you're shopping for a log shipper, here's what should be on your checklist:

Performance Overhead

Log shippers should operate with minimal resource impact. It's important to select tools with efficient CPU and memory utilization, particularly when deploying across large-scale environments with hundreds of nodes.

Technical considerations:

- Memory usage per event rate (MB per 1000 events/second)

- CPU usage patterns (constant vs. spiky)

- I/O patterns (how it interacts with disks)

- Network efficiency (protocol overhead, connection reuse)

- Concurrency model (thread pool sizing, async I/O)

Most log shippers publish benchmarks, but take these with a grain of salt – real-world performance depends heavily on your specific workload and configuration.

Protocol Support

Log shippers should support the protocols used by both source systems and destination platforms. This includes compatibility with syslog, HTTP, Kafka, and other common data transport protocols utilized in the environment.

Common protocols to consider:

- Input protocols: Syslog (TCP/UDP), HTTP(S), TCP/UDP raw, Unix sockets, SNMP, JMX

- Output protocols: HTTP(S), TCP, UDP, Kafka, AMQP, Kinesis, SQS, Elasticsearch API

- Authentication mechanisms: Basic auth, OAuth, API keys, AWS IAM, mTLS

- Serialization formats: JSON, Avro, Protobuf, MsgPack, BSON

The more protocols supported natively, the less custom code you'll need to write for integration.

Reliability Mechanisms

Resilience during destination system outages is critical. Effective log shippers incorporate buffering capabilities, disk-based queuing, and retry logic to prevent data loss during temporary disruptions to central logging systems.

Key reliability features:

- Memory buffering: Holding events in RAM before transmission

- Disk-based queuing: Persisting events to disk when destinations are unavailable

- Checkpointing: Tracking what's been successfully sent

- Backpressure handling: Graceful behavior when overloaded

- Circuit breaking: Avoiding overwhelming destinations

- Retry policies: Configurable backoff and limits

- Dead letter queues: Handling repeatedly failed events

Look for configurable persistent queues with clear documentation on capacity planning.
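Retry policies and dead letter queues can be sketched in Python as follows. This is an illustration, not any shipper's implementation: `RetryingSender` is hypothetical, it keeps failed batches in memory rather than on disk, and it uses capped exponential backoff with "full jitter" (a random wait up to the current cap) so that many agents recovering at once do not retry in lockstep.

```python
import random
import time
from collections import deque
from typing import Callable


class RetryingSender:
    """Deliver batches with capped exponential backoff and a dead letter queue."""

    def __init__(self, send: Callable[[list], None], max_attempts: int = 5,
                 base_delay: float = 0.1, max_delay: float = 5.0,
                 sleep: Callable[[float], None] = time.sleep):
        self.send = send                  # raises ConnectionError on failure
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.sleep = sleep                # injectable so tests need not wait
        self.dead_letters = deque()       # batches that exhausted their retries

    def deliver(self, batch: list) -> bool:
        for attempt in range(self.max_attempts):
            try:
                self.send(batch)
                return True
            except ConnectionError:
                if attempt + 1 < self.max_attempts:
                    # Full jitter: sleep a random time up to the capped exponential delay
                    delay = min(self.max_delay, self.base_delay * 2 ** attempt)
                    self.sleep(random.uniform(0, delay))
        self.dead_letters.append(batch)   # park it for inspection or later replay
        return False


calls = {"n": 0}


def flaky_send(batch: list) -> None:
    """Stand-in destination that fails twice, then accepts the batch."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("destination unavailable")


sender = RetryingSender(flaky_send, sleep=lambda seconds: None)
print(sender.deliver(["event-1", "event-2"]), calls["n"])  # True 3
```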

Scalability

As infrastructure scales, log shipping solutions should adapt without requiring fundamental architectural changes. Tools that support horizontal scaling and can efficiently process increasing data volumes are preferable for growing environments.

Scalability factors:

- Horizontal scaling: Adding more instances vs. vertical scaling

- Resource requirements: How they grow with event volume

- Connection pooling: Efficient use of network resources

- Batching capabilities: Reducing per-event overhead

- Multi-threading: Utilizing available cores effectively

- Backpressure mechanisms: Handling traffic spikes

Ideally, your log shipper should scale linearly with the number of nodes and event volume.

Security Features

Logs often contain sensitive information. Log shippers should implement appropriate security measures including encryption in transit, access controls, and data masking capabilities to protect confidential information.

Security capabilities to evaluate:

- TLS support: For encrypted data transmission

- Authentication: For both inputs and outputs

- Authorization: Controlling who can configure the shipper

- Credential management: Secure handling of API keys and tokens

- Data redaction: Removing PII or sensitive data before transmission

- Audit logging: Tracking configuration changes

- Minimal attack surface: Reducing potential vulnerabilities

Remember that your log shipper has access to potentially sensitive data across your infrastructure, so security should be a priority.
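Redaction before transmission usually means pattern-based masking. The Python sketch below uses a few illustrative regular expressions (email addresses, 13 to 16 digit card-like numbers, bearer tokens). They are assumptions, not a complete PII policy, and will both miss and over-match in real data.

```python
import re

# Illustrative patterns only; tune them to your own data before relying on them
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),  # 13-16 digits, optional separators
    (re.compile(r"(?i)(authorization: bearer )\S+"), r"\1[TOKEN]"),
]


def redact(message: str) -> str:
    """Mask sensitive values before the event leaves the host."""
    for pattern, replacement in REDACTIONS:
        message = pattern.sub(replacement, message)
    return message


print(redact("user=jane@example.com card=4111 1111 1111 1111 Authorization: Bearer abc.def"))
# user=[EMAIL] card=[CARD] Authorization: Bearer [TOKEN]
```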

Observability of the Log Shipper Itself

You need to monitor the monitor. Look for built-in metrics, health checks, and self-diagnostics.

Self-monitoring features:

- Performance metrics: Events processed, queue sizes, etc.

- Health endpoints: For integration with monitoring systems

- Debug logging: For troubleshooting issues

- Pipeline visualization: Understanding the flow of data

- Hot reloading: Changing config without restart

- Resource usage reporting: CPU, memory, disk, network

These capabilities help ensure your log shipper remains healthy and performant.
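Self-monitoring usually comes down to a few counters plus a health rule built on them. In the Python sketch below, the field names and thresholds (90% queue usage, 60 seconds without a successful delivery) are hypothetical; real shippers expose their own metrics and endpoints, such as the Vector `/health` API endpoint.

```python
import time
from dataclasses import dataclass, field


@dataclass
class ShipperStats:
    """Counters a shipper might report about itself (names are hypothetical)."""
    events_in: int = 0
    events_out: int = 0
    queue_size: int = 0
    queue_capacity: int = 10_000
    last_success: float = field(default_factory=time.monotonic)

    def health(self, now: float, max_silence_s: float = 60.0) -> dict:
        """Report 'degraded' when the queue is nearly full or delivery has stalled."""
        problems = []
        if self.queue_size >= 0.9 * self.queue_capacity:
            problems.append("queue above 90% of capacity")
        if now - self.last_success > max_silence_s:
            problems.append(f"no successful delivery for {now - self.last_success:.0f}s")
        return {"status": "degraded" if problems else "ok",
                "backlog": self.events_in - self.events_out,
                "problems": problems}


stats = ShipperStats(events_in=5_000, events_out=4_200, queue_size=9_500, last_success=0.0)
print(stats.health(now=30.0))
# {'status': 'degraded', 'backlog': 800, 'problems': ['queue above 90% of capacity']}
```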

Setting Up Your First Log Shipper

Ready to get started? Here's a detailed approach to implementing a log shipper in your environment:

1. Identify Your Log Sources

Start by mapping out all your log sources:

- Application logs: Custom applications, third-party software

- System logs: Kernel, systemd, auth logs

- Container logs: Docker, Kubernetes pod logs

- Database logs: Query logs, error logs, audit logs

- Network device logs: Firewalls, load balancers, routers

- Security logs: Authentication attempts, audit trails

For each source, document:

- Location (file path, socket, API endpoint)

- Format (JSON, plaintext, structured)

- Volume (events per second, size)

- Retention requirements
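Documenting volume per source pays off quickly because it feeds capacity planning. A small Python inventory like the one below (sources and numbers are hypothetical) turns events per second and average event size into daily and retained volume, using bytes per day = events/s × bytes/event × 86,400.

```python
from dataclasses import dataclass


@dataclass
class LogSource:
    name: str
    location: str          # file path, socket, or API endpoint
    fmt: str               # "json", "plaintext", ...
    events_per_sec: float
    avg_event_bytes: int
    retention_days: int

    def daily_gb(self) -> float:
        return self.events_per_sec * self.avg_event_bytes * 86_400 / 1e9

    def stored_gb(self) -> float:
        """Uncompressed volume held for the full retention period."""
        return self.daily_gb() * self.retention_days


sources = [  # hypothetical example inventory
    LogSource("checkout-api", "/var/log/checkout/*.log", "json", 200, 500, 30),
    LogSource("auth", "journald", "plaintext", 50, 300, 90),
]
for s in sources:
    print(f"{s.name}: {s.daily_gb():.2f} GB/day, {s.stored_gb():.1f} GB retained")
# checkout-api: 8.64 GB/day, 259.2 GB retained
# auth: 1.30 GB/day, 116.6 GB retained
```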

2. Choose Your Destination

Decide where your logs—or insights from logs—will ultimately live:

- Last9 – Built for high-cardinality, time-series data. If you extract metrics or events from logs (e.g., error rates, latency), this is a solid home.

- Elasticsearch / OpenSearch – Great for search-heavy use cases. Popular in log-heavy stacks.

- Grafana Loki – Lightweight, built for logs, and works well with Kubernetes.

- Splunk – Enterprise-grade and powerful, but it’ll cost you.

- InfluxDB / Prometheus – Best for metrics, not raw logs.

- Cloud services – AWS CloudWatch, Google Cloud Logging, Azure Monitor—easy to plug in if you're already on those platforms.

- S3 / Object storage – Cheap and durable. Great for long-term archiving or compliance.

Consider factors like:

- Query capabilities

- Retention periods and costs

- Integration with existing tools

- Scalability requirements

- Management overhead

3. Select the Right Log Shipper

Based on your sources, destinations, and requirements, choose a log shipper:

For Kubernetes environments:

Fluentd or Fluent Bit → Kafka → Elasticsearch

For traditional VM infrastructure:

Filebeat → Logstash → Elasticsearch

For high-performance needs:

Vector → Kafka → Your analytics platform

For Windows-heavy environments:

NXLog → Elasticsearch or Splunk

4. Installation and Configuration

Let's look at a practical example using Vector:

Installation (Ubuntu/Debian):

```shell
# Add Vector's APT repository, then install the package
bash -c "$(curl -L https://setup.vector.dev)"
sudo apt-get install vector
```

Basic configuration file (/etc/vector/vector.toml):

```toml
# Collect logs from files
[sources.app_logs]
type = "file"
include = ["/var/log/myapp/*.log"]
read_from = "beginning"

# Parse JSON logs, keeping the raw line when parsing fails
[transforms.parse_app_logs]
type = "remap"
inputs = ["app_logs"]
source = '''
raw = string!(.message)
. = object(parse_json(raw) ?? null) ?? {"raw_message": raw}
# Expect ISO 8601 timestamps; fall back to the ingest time
.timestamp = parse_timestamp(.timestamp, "%+") ?? now()
.host = get_env_var("HOSTNAME") ?? "unknown"
.environment = get_env_var("ENVIRONMENT") ?? "production"
'''

# Send to Elasticsearch
[sinks.es_logs]
type = "elasticsearch"
inputs = ["parse_app_logs"]
endpoints = ["http://elasticsearch:9200"]
bulk.index = "logs-%Y-%m-%d"
compression = "gzip"

# Health monitoring API (used by `vector top` and the /health endpoint)
[api]
enabled = true
address = "127.0.0.1:8686"
```

Starting the service:

```shell
sudo systemctl enable vector
sudo systemctl start vector
```

Validating operation:

```shell
curl http://127.0.0.1:8686/health
vector top  # Live dashboard of throughput per component
```

5. Implementation Best Practices

For a successful deployment:

- Start with a pilot: Choose a non-critical service

- Set up monitoring: Monitor the log shipper itself

- Implement gradually: Roll out in phases

- Document everything: Keep configuration under version control

- Test failure scenarios: Simulate destination outages

- Define alerting: Be notified of shipper issues

- Create runbooks: Document troubleshooting steps

6. Advanced Configuration Techniques

As you mature your implementation:

Dynamic configuration:

```yaml
# Kubernetes ConfigMap example
apiVersion: v1
kind: ConfigMap
metadata:
  name: vector-config
data:
  vector.toml: |
    [sources.k8s_logs]
    type = "kubernetes_logs"

    [transforms.add_cluster_name]
    type = "remap"
    inputs = ["k8s_logs"]
    source = '.cluster_name = get_env_var("CLUSTER_NAME") ?? "unknown"'

    [sinks.loki]
    type = "loki"
    inputs = ["add_cluster_name"]
    endpoint = "http://loki:3100"
    encoding.codec = "json"
    # kubernetes_logs places pod metadata under .kubernetes
    labels.app = "{{ kubernetes.pod_labels.app }}"
    labels.namespace = "{{ kubernetes.pod_namespace }}"
```

High-availability setup:

- Deploy multiple instances behind a load balancer

- Use shared disk queues for failover

- Implement active/passive configurations

Handling different environments:

- Use environment variables for configuration

- Implement different log levels per environment

- Adjust batch sizes based on instance type

Common Log Shipper Challenges and Solutions

High Volume Handling

Challenge: Your systems generate terabytes of logs daily, overwhelming your log shipper.

Technical impact:

- Buffer overflows

- Increased memory consumption

- Network saturation

- Disk I/O bottlenecks
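Buffer overflows come down to an explicit policy for what happens when the buffer is full. The Python sketch below (a hypothetical `BoundedBuffer` class) implements two simple policies; real shippers offer similar choices, for example Vector's `when_full` buffer option includes `block` (apply backpressure to the source) and `drop_newest`.

```python
from collections import deque


class BoundedBuffer:
    """In-memory event buffer with an explicit overflow policy.

    "drop_newest" rejects new events when full (protecting what is already queued);
    "drop_oldest" evicts the oldest event to make room. Blocking the producer or
    spilling to disk are the usual alternatives to dropping.
    """

    def __init__(self, capacity: int, policy: str = "drop_newest"):
        if policy not in ("drop_newest", "drop_oldest"):
            raise ValueError(f"unknown policy: {policy}")
        self.events = deque()
        self.capacity = capacity
        self.policy = policy
        self.dropped = 0

    def push(self, event) -> bool:
        """Add an event; return False if it was rejected."""
        if len(self.events) < self.capacity:
            self.events.append(event)
            return True
        self.dropped += 1
        if self.policy == "drop_oldest":
            self.events.popleft()
            self.events.append(event)
            return True
        return False


buf = BoundedBuffer(capacity=3, policy="drop_oldest")
for i in range(5):
    buf.push(i)
print(list(buf.events), buf.dropped)  # [2, 3, 4] 2
```

Counting drops matters as much as the policy itself: a nonzero `dropped` counter is exactly the kind of signal the shipper's own monitoring should alert on.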

Solution options:

- Filter at source: Be selective about what you ship

```toml
# Vector example of filtering out health checks
[transforms.filter_health_checks]
type = "filter"
inputs = ["http_logs"]
condition = '.path != "/health" && .path != "/readiness"'
```

- Sample high-volume logs: Take a statistically significant portion

```toml
# Vector example of sampling
[transforms.sample_debug_logs]
type = "sample"
inputs = ["app_logs"]
rate = 10  # Only keep 1 in 10 events
```

- Aggregate similar events: Roll up repeated messages

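```toml
# Vector aggregation example
# Give each event a count of 1 so reduce can add them up
[transforms.add_count]
type = "remap"
inputs = ["parsed_logs"]
source = '.count = 1'

[transforms.aggregate_errors]
type = "reduce"
inputs = ["add_count"]
group_by = ["error_code", "service"]
expire_after_ms = 60000               # flush a group after 1 minute with no new events
merge_strategies.count = "sum"        # number of occurrences in the group
merge_strategies.message = "discard"  # keep only the first message
# Timestamps: reduce keeps the first value and adds timestamp_end with the latest one
```

- Distribute processing: Use multiple shippers with load balancing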

```
+-------------+     +-------------+
| Log Shipper |     | Log Shipper |
+------+------+     +------+------+
       |                   |
       v                   v
+------+-------------------+------+
|          Load Balancer          |
+----------------+----------------+
                 |
                 v
          +------+------+
          | Destination |
          +-------------+
```

Configuration Complexity

Challenge: Maintaining consistent configurations across hundreds of log shipper instances.

Solution:

- Infrastructure as code: Use Terraform, Ansible, or similar

```hcl
# Terraform example: run Vector on every node as a DaemonSet
resource "kubernetes_daemonset" "vector_agent" {
  metadata {
    name      = "vector"
    namespace = "logging"
  }

  spec {
    selector {
      match_labels = {
        app = "vector"
      }
    }

    template {
      metadata {
        labels = {
          app = "vector"
        }
      }

      spec {
        container {
          name  = "vector"
          image = "timberio/vector:0.22.0-debian"

          # Vector configuration from the vector-config ConfigMap
          volume_mount {
            name       = "config"
            mount_path = "/etc/vector/"
            read_only  = true
          }

          # Host log directory to tail
          volume_mount {
            name       = "var-log"
            mount_path = "/var/log/"
            read_only  = true
          }
        }

        volume {
          name = "config"
          config_map {
            name = "vector-config"
          }
        }

        volume {
          name = "var-log"
          host_path {
            path = "/var/log"
          }
        }
      }
    }
  }
}
```

- Dynamic configuration: Generate config from templates

```python
# Python example of template-based config generation
import jinja2
import yaml

# Load the Vector config template
with open("vector-template.toml.j2") as f:
    template = jinja2.Template(f.read())

# Load per-environment settings
with open("environments.yaml") as f:
    environments = yaml.safe_load(f)

# Render one config file per environment
for env_name, env_config in environments.items():
    config = template.render(
        environment=env_name,
        elasticsearch_hosts=env_config["elasticsearch_hosts"],
        log_level=env_config["log_level"],
        batch_size=env_config["batch_size"],
    )
    with open(f"vector-{env_name}.toml", "w") as f:
        f.write(config)
```

- Centralized management: Tools like Puppet, Chef, or custom controllers

```puppet
# Puppet example
class profile::logging::vector {
  # Presumably referenced as @config_hash inside the ERB template
  $config_hash = lookup('vector_config')

  package { 'vector':
    ensure => 'present',
  }

  # Render the config and restart Vector whenever it changes
  file { '/etc/vector/vector.toml':
    ensure  => 'present',
    content => template('profile/vector/vector.toml.erb'),
    notify  => Service['vector'],
  }

  service { 'vector':
    ensure  => 'running',
    enable  => true,
    require => [Package['vector'], File['/etc/vector/vector.toml']],
  }
}
```

Network Constraints

Challenge: Limited bandwidth between your data centers and logging destination.

Solution approaches:

- Compression: Reduce the size of transmitted data

```toml
# Vector compression example
[sinks.es_logs]
type = "elasticsearch"
inputs = ["parsed_logs"]
endpoints = ["https://elasticsearch:9200"]
compression = "gzip"  # Typically reduces size by 70-80% for text-heavy logs
```

- Batching: Send logs in larger chunks

```toml
# Vector batching example
[sinks.es_logs]
type = "elasticsearch"
inputs = ["parsed_logs"]
endpoints = ["https://elasticsearch:9200"]
batch.max_events = 1000  # Send up to 1000 events per request
batch.timeout_secs = 1   # Or send at least every second
```

- Regional aggregation: Set up intermediary collectors

```
   Site A             Regional DC              Central DC
+------------+       +------------+          +------------+
|    Log     |       |    Log     |          |  Central   |
|  Sources   +------>+ Aggregator +--------->+  Storage   |
+------------+       +-----+------+          +------------+
                           ^
+------------+             |
|    Log     |             |
|  Sources   +-------------+
+------------+
   Site B
```

- Field filtering: Ship only the fields you need

```toml
# Vector field filtering example
[transforms.remove_unnecessary_fields]
type = "remap"
inputs = ["parsed_logs"]
source = '''
# Keep only essential fields
. = {
  "timestamp": .timestamp,
  "service": .service,
  "level": .level,
  "message": .message,
  "trace_id": .trace_id
}
'''
```

Container Orchestration

Challenge: Containers are ephemeral, making log collection tricky.

Solution patterns:

- Sidecar pattern: Deploy log shipper alongside each pod

```yaml
# Kubernetes sidecar example
apiVersion: v1
kind: Pod
metadata:
  name: app-with-sidecar
spec:
  containers:
    - name: app
      image: my-app:latest
      volumeMounts:
        - name: logs
          mountPath: /app/logs       # the app writes its log files here
    - name: vector-sidecar
      image: timberio/vector:latest
      volumeMounts:
        - name: logs
          mountPath: /app/logs       # the sidecar reads the same files
          readOnly: true
        - name: vector-config
          mountPath: /etc/vector
          readOnly: true
  volumes:
    - name: logs
      emptyDir: {}                   # shared volume that lives as long as the pod
    - name: vector-config
      configMap:
        name: vector-config
```

- Node-level collectors: DaemonSet approach

```yaml
# Kubernetes DaemonSet example: one Vector pod per node reading that node's logs
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: vector-agent
  namespace: logging
spec:
  selector:
    matchLabels:
      name: vector-agent
  template:
    metadata:
      labels:
        name: vector-agent
    spec:
      containers:
        - name: vector
          image: timberio/vector:latest
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

- Container runtime logging drivers: Direct integration

Docker daemon.json:

```json
{
  "log-driver": "fluentd",
  "log-opts": {
    "fluentd-address": "localhost:24224",
    "tag": "{{.Name}}"
  }
}
```

- Operator-based management: Kubernetes custom resources

```yaml
# Custom resource example (LoggingConfig is a hypothetical CRD handled by an in-house operator)
apiVersion: logging.acme.com/v1
kind: LoggingConfig
metadata:
  name: app-logging
spec:
  application: my-app
  logLevel: info
  destination: elasticsearch
  retention: 30d
  sampling:
    enabled: true
    rate: 0.1  # Sample 10% of debug logs
```

Advanced Log Shipper Techniques

Intelligent Filtering

Beyond simple field filtering, modern log shippers can make smart decisions:

Dynamic sampling based on content:

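```toml
# Vector dynamic sampling example: keep all errors, 50% of warnings, 10% of the rest
# The sample transform's rate is a fixed 1-in-N number, so split the stream by level first
[transforms.split_by_level]
type = "route"
inputs = ["parsed_logs"]
route.keep_all = '.level == "error" || .level == "fatal"'
route.warn = '.level == "warn"'
route.other = '.level != "error" && .level != "fatal" && .level != "warn"'

[transforms.sample_warn]
type = "sample"
inputs = ["split_by_level.warn"]
rate = 2   # Keep 1 in 2 events (50%)

[transforms.sample_other]
type = "sample"
inputs = ["split_by_level.other"]
rate = 10  # Keep 1 in 10 events (10%)

# Downstream sinks read all three streams:
# inputs = ["split_by_level.keep_all", "sample_warn", "sample_other"]
```

Pattern-based dropping: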

```toml
# Vector pattern dropping example
[transforms.drop_noisy_logs]
type = "remap"
inputs = ["parsed_logs"]
source = '''
# Drop connection reset notices that flood logs
if contains(string(.message) ?? "", "Connection reset by peer") {
  abort
}
# Drop logs from successful health checks
if .path == "/health" && .status == 200 {
  abort
}
'''
```

Advanced Enrichment

Enhance your logs with additional context:

GeoIP lookup:

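```toml
# Vector GeoIP example (assumes a MaxMind database at this path)
[enrichment_tables.geoip]
type = "geoip"
path = "/etc/vector/GeoLite2-City.mmdb"

[transforms.add_geo]
type = "remap"
inputs = ["parsed_logs"]
source = '''
# Add GeoIP data for the client IP
if exists(.client_ip) {
  .geo = get_enrichment_table_record("geoip", {"ip": .client_ip}) ?? {}
}
'''
```

Service discovery integration: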

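```toml
# Vector service lookup example
# (assumes a CSV export of the service registry with service_id, owner_team, priority columns)
[enrichment_tables.services]
type = "file"
file.path = "/etc/vector/services.csv"
file.encoding.type = "csv"

[transforms.add_service_info]
type = "remap"
inputs = ["parsed_logs"]
source = '''
# Add service information from the service registry
if exists(.service_id) {
  .service_info = get_enrichment_table_record("services", {"service_id": .service_id}) ?? {}
  .team = .service_info.owner_team
  .priority = .service_info.priority
}
'''
```

User-agent parsing: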

```toml
# Vector user agent parsing example
[transforms.parse_user_agent]
type = "remap"
inputs = ["parsed_logs"]
source = '''
if exists(.user_agent) {
  .user_agent_parsed = parse_user_agent(string!(.user_agent))
  .browser = .user_agent_parsed.browser.family
  .browser_version = .user_agent_parsed.browser.version
  .os = .user_agent_parsed.os.family
  .device = .user_agent_parsed.device.category
}
'''
```

Streaming Analytics

Process data as it flows through your log shipper to derive immediate insights:

Real-time anomaly detection:

```toml
# Vector anomaly detection example
# remap (VRL) keeps no state between events, so a running average needs a
# stateful transform such as lua (version 2), whose globals persist across events
[transforms.detect_latency_anomalies]
type = "lua"
version = "2"
inputs = ["parsed_logs"]
hooks.process = "process"
source = '''
request_count = 0
total_response_time = 0

function process(event, emit)
  local rt = event.log.response_time
  if type(rt) == "number" then
    -- Update the running average over all events seen so far
    request_count = request_count + 1
    total_response_time = total_response_time + rt
    local average = total_response_time / request_count
    -- Detect anomalies (response time > 3x average)
    event.log.is_anomaly = rt > average * 3
    event.log.average_response_time = average
  end
  emit(event)
end
'''
```

Error burst detection:

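```toml
# Vector error burst detection: mark each event so totals and errors can be summed
[transforms.mark_errors]
type = "remap"
inputs = ["parsed_logs"]
source = '''
.total_events = 1
.error_count = if .level == "error" { 1 } else { 0 }
'''

[transforms.error_counts]
type = "reduce"
inputs = ["mark_errors"]
group_by = ["service"]
end_every_period_ms = 60000  # emit one summary per service every minute
merge_strategies.total_events = "sum"
merge_strategies.error_count = "sum"

# Compute the error rate and flag bursts (more than 10% errors)
[transforms.detect_error_bursts]
type = "remap"
inputs = ["error_counts"]
source = '''
.error_rate = ((to_float(.error_count) ?? 0.0) / (to_float(.total_events) ?? 1.0)) ?? 0.0
.is_error_burst = .error_rate > 0.1
'''
```

Statistical aggregation: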

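```toml
# Vector statistical aggregation
# Turn each log's response_time into a distribution metric tagged by service and endpoint
[transforms.response_time_metric]
type = "log_to_metric"
inputs = ["parsed_logs"]

[[transforms.response_time_metric.metrics]]
type = "histogram"
field = "response_time"
name = "response_time"
tags.service = "{{ service }}"
tags.endpoint = "{{ endpoint }}"

# Expose the distributions as summaries with p50/p90/p99;
# summaries also carry _count and _sum, so average = sum / count
[sinks.response_time_stats]
type = "prometheus_exporter"
inputs = ["response_time_metric"]
address = "0.0.0.0:9598"
distributions_as_summaries = true
quantiles = [0.5, 0.9, 0.99]
```

Multi-Destination Routing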

Send different logs to different destinations based on various criteria:

Content-based routing:

```toml
# Vector routing example
[transforms.route_by_level]
type = "route"
inputs = ["parsed_logs"]
route.errors = '.level == "error" || .level == "fatal"'
route.warnings = '.level == "warn"'
route.info = '.level == "info"'
# Routes are not exclusive, so the catch-all must exclude the levels above explicitly
route.debug = '!includes(["error", "fatal", "warn", "info"], .level)'

[sinks.elasticsearch_errors]
type = "elasticsearch"
inputs = ["route_by_level.errors"]
endpoints = ["http://elasticsearch:9200"]
bulk.index = "errors-%Y-%m-%d"

[sinks.s3_archive_all]
type = "aws_s3"
inputs = ["parsed_logs"]  # All logs go to the archive
bucket = "logs-archive"
region = "us-east-1"      # placeholder region
key_prefix = "logs/%Y/%m/%d/"
compression = "gzip"
encoding.codec = "json"
```

Tenant-based routing:

```jinja
# Vector multi-tenant routing, written as a Jinja2 template that is rendered
# into vector.toml per deployment (like the template-based generation approach
# under Configuration Complexity)
[transforms.route_by_tenant]
type = "route"
inputs = ["parsed_logs"]
# Dynamic routing based on tenant field
{% for tenant in tenants %}
route.{{ tenant.id }} = '.tenant == "{{ tenant.id }}"'
{% endfor %}

# Create a sink for each tenant
{% for tenant in tenants %}
[sinks.elasticsearch_{{ tenant.id }}]
type = "elasticsearch"
inputs = ["route_by_tenant.{{ tenant.id }}"]
endpoints = ["{{ tenant.elasticsearch_url }}"]
bulk.index = "{{ tenant.index_prefix }}-%Y-%m-%d"
{% endfor %}
```

Final Thoughts

There's no one-size-fits-all answer to which log shipper is best. It depends on your:

- Existing infrastructure: Your current tech stack often guides compatibility

- Volume of logs: High-volume environments need performance-optimized solutions

- Processing requirements: Complex transformations need powerful engines

- Destination systems: Native integration can simplify your architecture

- Team expertise: Choose tools that match your team's skills

The good news? Most log shippers are open-source, so you can try before you commit.