Let's talk about something central to DevOps work: logging vs monitoring. While both are essential components of maintaining system health and reliability, they serve distinct purposes and complement each other in different ways. The distinction between them isn't always clear-cut, especially as tooling continues to evolve.

This guide covers the practical applications, technical differences, and implementation strategies for both logging and monitoring in modern DevOps environments.

What is the difference between logging and monitoring?

Logging records discrete events (errors, requests, state changes) as timestamped entries for later analysis. Monitoring continuously tracks system metrics (CPU, latency, error rates) in real time and triggers alerts when thresholds are breached. Logging answers "what happened, and why?"; monitoring answers "is something wrong right now?" In production, you need both: monitoring detects problems, and logging diagnoses root causes.

What's Logging, Anyway?

Logging is like your system's diary – it records what happened when it happened, and sometimes why it happened. Think of it as your digital paper trail.

When your application writes a log, it's essentially saying, "Hey, this just happened." That could be a user login, a failed database connection, or a completed transaction. Logs capture events at a specific point in time.

2023-06-15 09:23:45 [INFO] User john_doe logged in successfully

2023-06-15 09:24:12 [ERROR] Database connection timeout after 30s

Types of Logs You Should Know About

Different logs serve different purposes in your DevOps toolkit:

Application Logs: These come straight from your code. They record exceptions, user actions, and business events.

System Logs: Generated by your OS, these track resource usage, process starts/stops, and kernel events.

Access Logs: Usually from web servers, these document who accessed what resources and when.

Security Logs: These track authentication attempts, permission changes, and potential intrusions.

Why Logging Matters in DevOps

Logs provide essential forensic information for incident investigation and troubleshooting. They create a sequential record that helps technical teams reconstruct the chain of events leading to a system failure or anomaly.

For instance, consider a scenario where a payment processing service experiences an outage at 3 AM. Your logs might contain a sequence like this:

02:56:32 [INFO] Payment service handling request #45678

02:56:33 [WARNING] High database latency detected (450ms)

02:56:45 [ERROR] Database query timeout

02:56:46 [ERROR] Payment processing failed for request #45678

02:56:47 [INFO] Retry attempt #1 for request #45678

02:56:49 [ERROR] Database connection pool exhausted

02:57:00 [ERROR] Circuit breaker triggered for database service

03:00:00 [ALERT] Service health check failed

This chronological record provides critical context – it reveals that the initial database latency escalated to connection pool exhaustion, eventually triggering a circuit breaker and causing service degradation.

The timestamps, request identifiers, and error messages allow engineers to trace the failure path precisely. Without this detailed event trail, troubleshooting becomes significantly harder and more time-consuming.
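Because each line carries a request identifier, pulling one request's path out of a larger log is a simple filter. Below is a minimal sketch, assuming the plain `HH:MM:SS [LEVEL] message` format shown above; `parse_line`, `trace_request`, and `LogEntry` are hypothetical names chosen for illustration.

```python
import re
from typing import NamedTuple, Optional

# Matches lines such as "02:56:46 [ERROR] Payment processing failed for request #45678"
LINE_RE = re.compile(r"^(?P<time>\d{2}:\d{2}:\d{2}) \[(?P<level>[A-Z]+)\] (?P<message>.*)$")


class LogEntry(NamedTuple):
    time: str
    level: str
    message: str


def parse_line(line: str) -> Optional[LogEntry]:
    """Parse one log line; return None if it doesn't match the expected format."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    return LogEntry(match["time"], match["level"], match["message"])


def trace_request(lines, request_id: str) -> list:
    """Return parsed entries that mention '#<request_id>', in their original order."""
    # \b stops "#4567" from also matching "#45678"
    id_pattern = re.compile(rf"#{re.escape(request_id)}\b")
    entries = (parse_line(line) for line in lines)
    return [e for e in entries if e is not None and id_pattern.search(e.message)]


logs = [
    "02:56:32 [INFO] Payment service handling request #45678",
    "02:56:33 [WARNING] High database latency detected (450ms)",
    "02:56:45 [ERROR] Database query timeout",
    "02:56:46 [ERROR] Payment processing failed for request #45678",
    "02:56:47 [INFO] Retry attempt #1 for request #45678",
    "02:56:49 [ERROR] Database connection pool exhausted",
    "02:57:00 [ERROR] Circuit breaker triggered for database service",
    "03:00:00 [ALERT] Service health check failed",
]

for entry in trace_request(logs, "45678"):
    print(entry.time, entry.level, entry.message)
```

Notice that the database errors in between never mention the request. That is why the full timeline matters, and why stamping a shared correlation ID on every related line pays off.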

What's Monitoring All About?

While logging captures discrete events as they occur, monitoring provides continuous observation of system metrics over time. Monitoring functions as a persistent health-checking mechanism that tracks the operational state of applications, infrastructure, and services.

Monitoring systems collect time-series data about performance, availability, and resource utilization. They analyze this data to identify patterns, detect anomalies, and predict potential issues.

The fundamental difference is that monitoring tends to be proactive, allowing teams to detect and address problems before they impact users, whereas logging is typically reactive, helping diagnose issues after they've occurred.

Key Monitoring Components

Metrics Collection: Gathering data points like CPU usage, memory consumption, request counts, and latency.

Visualization: Turning those numbers into charts and dashboards that actually make sense.

Alerting: Setting thresholds and getting notifications when they're breached.

Trend Analysis: Spotting patterns over time to predict future issues.

Why Monitoring Changes the Game

Say your app typically handles 1,000 requests per minute with a 50ms response time. Solid monitoring will alert you when:

- Request volume suddenly drops (potential service outage)

- Response times creep up to 200ms (performance degradation)

- Error rates jump from 0.1% to 5% (something's broken)

- Memory usage steadily increases over weeks (potential memory leak)

The key difference? Monitoring identifies emerging issues by detecting subtle changes in system behavior, often allowing technical teams to intervene before conditions deteriorate to the point of service disruption. This proactive capability represents one of the primary advantages of robust monitoring systems.
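The first three conditions in that list boil down to comparisons against a baseline. Here is a minimal sketch: the `Snapshot` type and `check` function are hypothetical, the latency and error-rate limits come from the example figures above, and treating a drop below 50% of baseline volume as an outage signal is an assumption. In practice these rules would usually live in your monitoring system's alerting configuration rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One minute of aggregated metrics (hypothetical shape)."""
    requests_per_min: float
    response_time_ms: float
    error_rate: float  # fraction, e.g. 0.001 == 0.1%


BASELINE = Snapshot(requests_per_min=1000, response_time_ms=50, error_rate=0.001)


def check(current: Snapshot, baseline: Snapshot = BASELINE) -> list:
    """Return alert messages for every breached condition."""
    alerts = []
    # Assumed rule: volume under 50% of baseline suggests a possible outage
    if current.requests_per_min < 0.5 * baseline.requests_per_min:
        alerts.append("Request volume dropped sharply (potential outage)")
    # Absolute latency ceiling from the example above
    if current.response_time_ms >= 200:
        alerts.append("Response time at or above 200ms (performance degradation)")
    # Absolute error-rate ceiling from the example above
    if current.error_rate >= 0.05:
        alerts.append("Error rate at or above 5% (something's broken)")
    return alerts


print(check(Snapshot(requests_per_min=980, response_time_ms=55, error_rate=0.001)))
print(check(Snapshot(requests_per_min=300, response_time_ms=210, error_rate=0.06)))
```

The fourth condition, a slow trend over weeks, needs historical data and a regression or rolling comparison, which is exactly what monitoring platforms store for you.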

Logging vs Monitoring: The Real Differences

Let's break down how these two differ in practice:

| Aspect | Logging | Monitoring |
|---|---|---|
| Focus | Events and transactions | System health and performance |
| Timing | Recorded as events occur; reviewed afterward | Continuous observation |
| Data Type | Textual records | Numeric metrics and thresholds (see logs vs metrics for a deeper comparison) |
| Storage Needs | High (especially for verbose logs) | Lower (aggregated statistics) |
| Analysis Method | Searching and filtering | Dashboards and charts |
| Primary Use | Troubleshooting and forensics | Proactive issue detection |

Practical Example: API Service

Let's see how logging and monitoring work together in a real-world scenario with an API service:

Logging Approach:

10:15:22 [INFO] API request received: GET /users/profiles

10:15:22 [DEBUG] Query parameters: {limit: 100, page: 2}

10:15:23 [ERROR] Database connection failed after 500ms

10:15:23 [ERROR] Returning 503 Service Unavailable to client

This tells you exactly what happened with a specific request.

Monitoring Approach:

- Dashboard showing API requests per second dropping sharply

- Chart displaying database connection time spiking to 500ms average

- Alert triggered for "High error rate (>5%)"

- Graph showing a correlation between database latency and error rates

This shows you the bigger picture and patterns.

How They Work Together

The best DevOps teams don't think of logging vs monitoring as an either/or choice – they use both as complementary tools.

Here's a typical workflow:

- Monitoring alerts you that error rates have jumped to 10%

- You check your monitoring dashboard to see when it started

- You look at logs from that specific timeframe to find the actual errors

- Logs show a dependency service is timing out

- You check monitoring of that dependency to confirm increased latency

- You fix the root cause and watch your monitoring confirm things are back to normal

It's this cycle that makes for robust systems. Monitoring catches issues, logging helps you solve them.
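The third step of that workflow, pulling logs from the window around an alert, is essentially a time-range filter followed by a count. Here is a minimal sketch, assuming logs are already parsed into `(timestamp, level, message)` tuples; `logs_around`, `top_errors`, the five-minute padding, and the `inventory-service` dependency are all hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta


def logs_around(entries, alert_time: datetime, pad: timedelta = timedelta(minutes=5)):
    """Return entries whose timestamp falls within [alert_time - pad, alert_time + pad]."""
    start, end = alert_time - pad, alert_time + pad
    return [e for e in entries if start <= e[0] <= end]


def top_errors(entries, n: int = 3):
    """Count ERROR messages so the most frequent failure stands out."""
    counts = Counter(msg for _, level, msg in entries if level == "ERROR")
    return counts.most_common(n)


entries = [
    (datetime(2023, 6, 15, 10, 0), "INFO", "Deploy finished"),
    (datetime(2023, 6, 15, 10, 12), "ERROR", "Timeout calling inventory-service"),
    (datetime(2023, 6, 15, 10, 13), "ERROR", "Timeout calling inventory-service"),
    (datetime(2023, 6, 15, 10, 14), "ERROR", "Invalid coupon code"),
]

alert_fired_at = datetime(2023, 6, 15, 10, 14)
window = logs_around(entries, alert_fired_at)
print(top_errors(window))
```

A log platform does the same thing with a time picker and an aggregation query; the point is that the alert's timestamp is what scopes the search.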

Setting Up Logging Right

Implementing an effective logging strategy requires careful consideration of format, content, and infrastructure.

The goal isn't to capture every possible event but to ensure that the right information is available when needed for troubleshooting and analysis.

Structured Logging Is Essential for Scalability

Traditional plaintext logging becomes unwieldy at scale. Structured logging—typically in JSON format—transforms logs into machine-parsable data that can be efficiently indexed, searched, and analyzed:

{
  "timestamp": "2023-06-15T09:23:45Z",
  "level": "ERROR",
  "service": "payment-processor",
  "message": "Payment authorization failed",
  "transaction_id": "tx_45678",
  "error_code": "AUTH_DECLINED",
  "user_id": "user_789",
  "request_ip": "198.51.100.42",
  "client_version": "2.5.0",
  "payment_provider": "stripe",
  "processing_time_ms": 345,
  "correlation_id": "corr_abc123"
}

This structured format offers significant advantages:

- Consistent parsing: Fields are explicitly defined and typed

- Contextual enrichment: Additional metadata can be included without affecting readability

- Efficient querying: You can perform precise searches like "find all AUTH_DECLINED errors for a specific user" or "list all payment failures with processing times over 300ms"

- Correlation capability: IDs can link related events across distributed systems

- Automated processing: Machine-readable format enables automated alerting and analysis
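In Python, one way to produce records like the one above is a custom `logging.Formatter` that serializes each record to JSON. This is a minimal sketch using only the standard library; the `JsonFormatter` class and the convention of passing context under a `"fields"` key via `extra` are illustrative choices, not a standard. Libraries such as structlog or python-json-logger offer more complete implementations.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        # ISO 8601 UTC timestamp, e.g. "2023-06-15T09:23:45Z"
        ts = datetime.fromtimestamp(record.created, tz=timezone.utc)
        payload = {
            "timestamp": ts.strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
        }
        # Context passed as logger.error(..., extra={"fields": {...}}) becomes top-level keys
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


handler = logging.StreamHandler()  # writes to stderr by default
handler.setFormatter(JsonFormatter(service="payment-processor"))
logger = logging.getLogger("payments")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.error(
    "Payment authorization failed",
    extra={"fields": {
        "transaction_id": "tx_45678",
        "error_code": "AUTH_DECLINED",
        "processing_time_ms": 345,
        "correlation_id": "corr_abc123",
    }},
)
```

Because every line is valid JSON, a collector can index `error_code` or `processing_time_ms` directly instead of relying on regex parsing.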

Log Levels Require Careful Implementation

Log levels provide critical filtering capabilities that help manage log volume and focus attention on events of appropriate significance.

A well-defined log-level strategy improves troubleshooting efficiency and optimizes storage costs.

Standard log levels and their applications:

- FATAL/CRITICAL: Severe errors causing application termination or complete service unavailability. Examples include unrecoverable initialization failures, out-of-memory conditions, or data corruption. These should be extremely rare and warrant immediate alerts.

- ERROR: Significant issues that prevent normal function but don't crash the entire application. Examples include failed user transactions, API integration failures, or database connectivity issues. These require prompt investigation.

- WARNING: Potential issues that don't immediately affect functionality but may indicate emerging problems. Examples include API call retries, degraded performance, deprecated feature usage, or approaching resource limits. These should be monitored for patterns.

- INFO: Normal operational events that document application lifecycle and key business transactions. Examples include application startup/shutdown, user logins, or completed transactions. These provide operational context.

- DEBUG: Detailed technical information useful during development and intensive troubleshooting. Examples include function entry/exit points, variable values, or SQL queries. These should generally be disabled in production unless actively debugging.

- TRACE: Extremely verbose output capturing every detail of application execution. Examples include low-level HTTP request details or step-by-step function execution paths. These should only be enabled temporarily for specific troubleshooting.
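Python's standard `logging` module implements most of these levels directly (CRITICAL, ERROR, WARNING, INFO, DEBUG, with `FATAL` as an alias for CRITICAL) but has no TRACE level. The sketch below registers a custom TRACE level below DEBUG and shows how a logger's threshold filters output. Using the numeric value 5 for TRACE is an assumption, not a standard, and the logger name `app` is hypothetical.

```python
import logging

# Register a custom TRACE level below DEBUG (10); 5 is a common but non-standard choice
TRACE = 5
logging.addLevelName(TRACE, "TRACE")

logging.basicConfig(format="%(asctime)s [%(levelname)s] %(message)s", datefmt="%Y-%m-%d %H:%M:%S")
logger = logging.getLogger("app")

# Production-style threshold: WARNING and above are emitted, everything below is dropped
logger.setLevel(logging.WARNING)

logger.log(TRACE, "Entering handle_request()")          # dropped
logger.debug("SQL: SELECT * FROM users WHERE id = 42")   # dropped
logger.info("User john_doe logged in successfully")      # dropped
logger.warning("Retrying payment API call (attempt 2)")  # emitted
logger.error("Database connection timeout after 30s")   # emitted

# Temporarily lower the threshold while actively debugging
logger.setLevel(logging.DEBUG)
print(logger.isEnabledFor(logging.DEBUG), logger.isEnabledFor(TRACE))  # True False
```

Raising or lowering the threshold at runtime, rather than editing log calls, is what makes it practical to keep DEBUG and TRACE statements in the code but silent in production.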

In production environments, implement tiered retention policies based on log levels. A common approach is:

- FATAL/ERROR: Retain for 6-12 months

- WARNING: Retain for 1-3 months

- INFO: Retain for 7-30 days

- DEBUG/TRACE: Typically not enabled in production, or retained for only 24-48 hours during active troubleshooting
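A tiered policy like this can be encoded as data so a cleanup job can apply it consistently. This is a minimal sketch, assuming the upper bound of each range above (12 months as 365 days, 3 months as 90 days, 30 days, 48 hours as 2 days); the `RETENTION_DAYS` table and `should_delete` helper are hypothetical, and most log platforms express the same policy through their own lifecycle settings.

```python
from datetime import datetime, timedelta, timezone

# Upper bound of each retention range from the policy above (assumed values)
RETENTION_DAYS = {
    "FATAL": 365,
    "CRITICAL": 365,
    "ERROR": 365,
    "WARNING": 90,
    "INFO": 30,
    "DEBUG": 2,
    "TRACE": 2,
}


def should_delete(level: str, logged_at: datetime, now: datetime) -> bool:
    """Return True once a log entry is older than its level's retention window."""
    # Unknown levels fall back to the shortest window (an assumption)
    days = RETENTION_DAYS.get(level.upper(), min(RETENTION_DAYS.values()))
    return now - logged_at > timedelta(days=days)


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
logged = datetime(2023, 11, 1, tzinfo=timezone.utc)  # 61 days earlier
print(should_delete("INFO", logged, now), should_delete("WARNING", logged, now))
```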

Centralized Log Management Is Non-Negotiable

Distributed systems generate logs across numerous components and services. Without centralization, troubleshooting becomes fragmented and inefficient.

A centralized logging architecture provides a unified view of system behavior across the entire application stack.

Key components of a centralized logging system include:

- Collection agents: Lightweight processes that run on each server to gather logs and forward them to central storage. Examples include Filebeat, Fluentd, and Logstash.

- Transport layer: The mechanism for reliably moving logs from source to destination, often with buffering capabilities to handle network issues or traffic spikes.

- Processing pipeline: Where logs are parsed, transformed, enriched, and normalized. This stage can add metadata, filter sensitive information, and structure raw logs.

- Storage backend: Optimized databases designed for log data, capable of handling high write loads and complex queries. Examples include Elasticsearch, ClickHouse, and Loki.

- Query and visualization layer: User interfaces for searching, analyzing, and visualizing log data. Examples include Kibana, Grafana, and custom dashboards.

- Retention and lifecycle management: Policies for compressing, archiving, or deleting logs based on age, importance, and compliance requirements.
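The processing pipeline is where raw lines become normalized, enriched, and scrubbed records. Here is a minimal sketch of one such stage, assuming JSON input like the structured example earlier; the `process` function, the `host` and `environment` enrichment fields, and the choice to mask `request_ip` and `user_id` with a truncated SHA-256 hash are illustrative assumptions. In a real deployment this logic usually lives in a tool such as Logstash or Fluentd.

```python
import hashlib
import json

# Fields treated as sensitive in this sketch (assumption; adjust to your data)
SENSITIVE_FIELDS = ("request_ip", "user_id")


def mask(value: str) -> str:
    """Replace a sensitive value with a short, stable hash so records can still be grouped."""
    return "sha256:" + hashlib.sha256(value.encode()).hexdigest()[:12]


def process(raw_line: str, host: str, environment: str):
    """Parse, normalize, enrich, and scrub one raw log line; return None if unparseable."""
    try:
        record = json.loads(raw_line)
    except json.JSONDecodeError:
        return None  # a real pipeline might route these to a dead-letter queue
    if not isinstance(record, dict):
        return None
    # Normalize: consistent upper-case level names
    record["level"] = str(record.get("level", "INFO")).upper()
    # Enrich: add metadata the application itself didn't know about
    record["host"] = host
    record["environment"] = environment
    # Scrub: mask sensitive fields before the record reaches storage
    for field in SENSITIVE_FIELDS:
        if field in record:
            record[field] = mask(str(record[field]))
    return record


raw = '{"level": "error", "message": "Payment authorization failed", "request_ip": "198.51.100.42"}'
print(process(raw, host="web-01", environment="production"))
```

Hashing instead of deleting keeps the field useful for grouping ("how many failures came from the same client?") without storing the raw value, though a plain hash of a low-entropy value like an IP address is not strong anonymization on its own.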

Popular centralized logging platforms include:

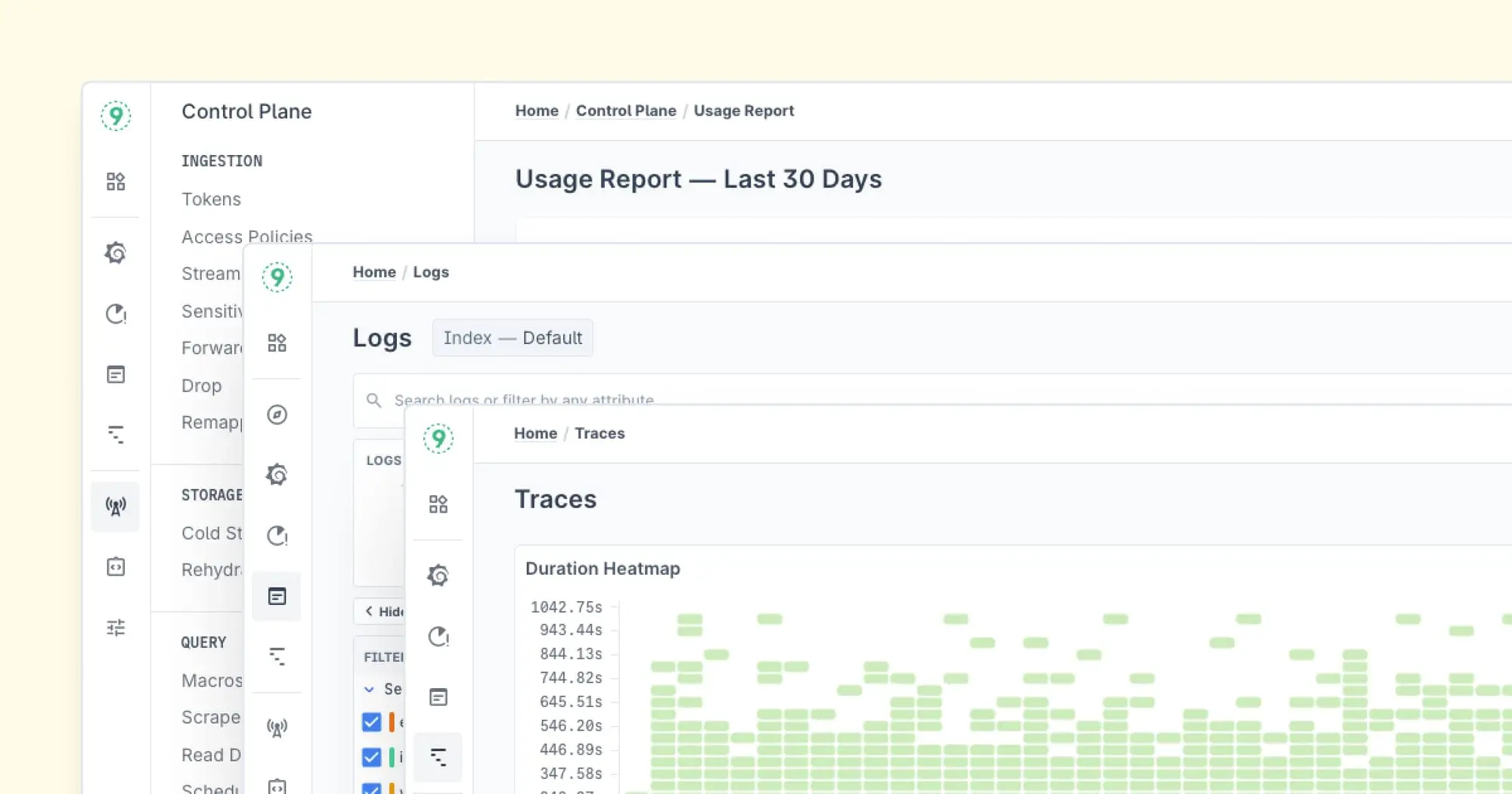

- Last9: A full-stack observability platform built for high cardinality, bringing metrics, logs, and traces together in one place.

- ELK/Elastic Stack: Elasticsearch (storage), Logstash (collection/processing), Kibana (visualization)

- Grafana Loki: Lightweight, Prometheus-inspired logging stack with Grafana integration

- Graylog: Built on Elasticsearch with custom search capabilities

- Google Cloud Logging/AWS CloudWatch Logs: Cloud-native offerings with integration to their respective cloud platforms

When implementing centralized logging, consider:

- Network bandwidth consumption

- Storage requirements and costs

- Scaling strategies for high-volume environments

- Access controls and security for sensitive log data

- Backup and disaster recovery procedures

Building Solid Monitoring

Quality monitoring requires thought about what to track and how to visualize it.

The Four Golden Signals

Google's Site Reliability Engineering book suggests focusing on four key metrics:

- Latency: How long requests take to process

- Traffic: How much demand your system is experiencing

- Errors: Rate of failed requests

- Saturation: How "full" your system is (CPU, memory, disk I/O)

Monitor these four aspects, and you'll catch most issues early.
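To make the four signals concrete, here is a minimal sketch that derives them from one window of request records. The `Request` type, the 60-second window, counting only 5xx responses as errors, and passing saturation in as a CPU-utilization fraction are all assumptions for illustration; in practice a metrics system such as Prometheus computes these from counters and histograms.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float
    status: int  # HTTP status code


def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def golden_signals(requests, window_s, cpu_utilization):
    """Summarize one window of requests as the four golden signals."""
    # Assumption: only 5xx responses count as errors
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "latency_p95_ms": percentile([r.duration_ms for r in requests], 95),
        "traffic_rps": len(requests) / window_s,
        "error_rate": errors / len(requests),
        # Saturation is supplied by the host (e.g. 0.72 == 72% CPU busy)
        "saturation": cpu_utilization,
    }


window = [Request(40, 200)] * 90 + [Request(900, 503)] * 10
signals = golden_signals(window, window_s=60, cpu_utilization=0.72)
print(signals)
```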

Alert Design Principles for Operational Efficiency

Alert design directly impacts both system reliability and team performance. Poorly configured alerts lead to alert fatigue—a condition where teams become desensitized to notifications due to excessive false positives or non-actionable alerts.

Statistical Approaches to Threshold Setting

- Use percentiles instead of averages: Averages mask outliers and can hide real problems. For example, if 95% of requests complete in 100ms but 5% take 5 seconds, the average works out to 345ms, which might look acceptable while one request in twenty takes 5 seconds.

  - P50 (median): Represents typical user experience

  - P90/P95: Captures degradation affecting a meaningful subset of traffic

  - P99: Identifies issues affecting your worst-case scenarios

- Implement dynamic thresholds: Static thresholds often fail to account for normal variations in traffic patterns. Consider:

  - Time-based thresholds (different limits for business hours vs. overnight)

  - Relative change alerts (sudden 50% increase from baseline)

  - Seasonal adjustments (accommodating known traffic patterns)
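The arithmetic behind the averaging example above is easy to check, and a few more lines show a relative-change rule. This is a minimal sketch using Python's `statistics` module; the `relative_change_alert` helper, the 120ms baseline, and the 50% limit are illustrative assumptions.

```python
import statistics

# 95 requests at 100ms and 5 at 5,000ms, matching the example above
latencies_ms = [100] * 95 + [5000] * 5

mean = statistics.mean(latencies_ms)     # 345
p50 = statistics.median(latencies_ms)    # 100.0
# quantiles(n=100) returns the 99 cut points P1..P99; index 98 is P99
p99 = statistics.quantiles(latencies_ms, n=100, method="inclusive")[98]  # 5000.0
print(f"mean={mean}ms p50={p50}ms p99={p99}ms")


def relative_change_alert(current, baseline, max_increase=0.5):
    """Fire when `current` exceeds `baseline` by more than `max_increase` (0.5 == 50%)."""
    if baseline <= 0:
        return False  # no meaningful baseline to compare against
    return (current - baseline) / baseline > max_increase


# A 120ms baseline jumping to 200ms is a ~67% increase, so this fires
print(relative_change_alert(current=200, baseline=120))
```

The mean sits at 345ms, far from both what most users see (100ms) and what the slowest users see (5,000ms); only the percentiles describe either group accurately.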

Alert Content Best Practices

Effective alerts provide immediate context and actionability:

- Alert on symptoms, not causes: Monitor end-user impact metrics. Example: Alert on "API success rate < 99.5%" rather than "Database connection pool utilization > 80%"

- Include critical context:

  - Affected component/service

  - Duration of the issue

  - Magnitude/scope (percentage of users/requests affected)

  - Current value vs. threshold

  - Rate of change (is it getting worse rapidly?)

  - Links to relevant dashboards and runbooks

- Implement severity levels with clear definitions:

  - P1/Critical: Service is down or severely degraded for most users; requires immediate response regardless of time of day

  - P2/High: Significant feature degradation affecting many users; requires prompt attention during business hours and may warrant an off-hours response

  - P3/Medium: Minor feature degradation or early warning signs; address during normal business hours

  - P4/Low: Informational; review during regular work scheduling

Example of a well-structured alert:

[P2-HIGH] API Latency Degradation - Payment Service

SYMPTOM: P95 API latency exceeded 500ms for >5 minutes

IMPACT: Affecting ~15% of payment processing requests (est. 300 req/min)

CURRENT VALUE: 780ms (threshold: 500ms)

TREND: Increasing ~50ms per minute

View: Dashboard [link] | Runbook [link] | Similar Incidents [link]

This alert structure provides immediate situational awareness and actionable context, enabling faster and more effective responses.
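Generating alerts from a template keeps that structure consistent across services. This is a minimal sketch; the `Alert` fields mirror the example above, while the `render` function and the example.com links are hypothetical placeholders rather than any particular alerting tool's format.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    severity: str        # e.g. "P2-HIGH"
    title: str
    service: str
    symptom: str
    impact: str
    current_ms: float
    threshold_ms: float
    trend: str
    links: dict          # label -> URL


def render(alert: Alert) -> str:
    """Format an alert with symptom, impact, current value vs. threshold, trend, and links."""
    link_line = " | ".join(f"{label} [{url}]" for label, url in alert.links.items())
    return "\n".join([
        f"[{alert.severity}] {alert.title} - {alert.service}",
        f"SYMPTOM: {alert.symptom}",
        f"IMPACT: {alert.impact}",
        f"CURRENT VALUE: {alert.current_ms:.0f}ms (threshold: {alert.threshold_ms:.0f}ms)",
        f"TREND: {alert.trend}",
        f"View: {link_line}",
    ])


message = render(Alert(
    severity="P2-HIGH",
    title="API Latency Degradation",
    service="Payment Service",
    symptom="P95 API latency exceeded 500ms for >5 minutes",
    impact="Affecting ~15% of payment processing requests (est. 300 req/min)",
    current_ms=780,
    threshold_ms=500,
    trend="Increasing ~50ms per minute",
    links={"Dashboard": "https://example.com/dash", "Runbook": "https://example.com/runbook"},
))
print(message)
```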

Choose the Right Monitoring Tools

Popular choices include:

- Last9: Managed, high-cardinality observability at scale with integration support

- Prometheus + Grafana: Open-source, powerful, great for Kubernetes

- Datadog: Comprehensive SaaS solution with wide integration support

- CloudWatch: Native AWS monitoring

- Nagios: Veteran open-source monitoring platform

Pick what fits your stack and budget.

Common DevOps Pitfalls and How to Avoid Them

After working with dozens of teams, I've seen the same mistakes crop up around logging and monitoring.

Logging Too Much or Too Little

Too much: You're paying to store logs nobody reads, and the important stuff gets buried.

Too little: When issues happen, you don't have enough information to fix them.

Solution: Start with moderate logging, then adjust based on what you use during incidents.

Alert Overload

When everything triggers alerts, people start ignoring them – even the important ones.

Solution: Audit your alerts regularly. For each alert, ask: "Has this helped us prevent or solve a real problem in the last month?" If not, reconsider it.

Forgetting the Business Context

Technical metrics are great, but they don't tell the whole story.

Solution: Add business metrics to your monitoring. Track things like:

- Completed purchases per minute

- New signups per hour

- Revenue-generating API calls vs. total calls

These connect your technical work to business outcomes.

Not Testing Observability

How do you know your logging and monitoring work if you never test them?

Solution: Run regular "game days" where you intentionally break things in staging to ensure your observability tools catch the issues.

What is log monitoring?

Log monitoring means continuously watching log output for specific patterns, errors, or anomalies. It sits right between logging and monitoring: instead of digging through logs after an incident, tools like the ELK stack or Loki flag issues as they appear. It's useful for catching errors that don't show up in metric-based monitoring, like slow database queries or authentication failures.
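At its core, log monitoring is pattern matching over a live stream, plus a rule for when matches become an alert. Here is a minimal sketch: the regex, the three-matches-in-60-seconds rule, and the `LogWatcher` class are hypothetical, and the alerting features of the ELK stack or Loki provide this without custom code.

```python
import re
from collections import deque

# Patterns worth flagging even when metrics look normal (illustrative)
PATTERN = re.compile(r"\[(ERROR|FATAL)\]|authentication failed|slow query", re.IGNORECASE)


class LogWatcher:
    """Fire an alert when `threshold` matching lines arrive within `window_s` seconds."""

    def __init__(self, threshold: int = 3, window_s: float = 60.0):
        self.threshold = threshold
        self.window_s = window_s
        self.hits = deque()  # timestamps of recent matching lines

    def observe(self, ts: float, line: str) -> bool:
        """Feed one line with its timestamp in seconds; return True if an alert should fire."""
        if not PATTERN.search(line):
            return False
        self.hits.append(ts)
        # Drop matches that have fallen out of the sliding window
        while self.hits and ts - self.hits[0] > self.window_s:
            self.hits.popleft()
        return len(self.hits) >= self.threshold


watcher = LogWatcher()
stream = [
    (0, "[INFO] GET /health 200"),
    (5, "[WARNING] authentication failed for user alice"),
    (20, "[ERROR] slow query: 4200ms on orders table"),
    (30, "[ERROR] Database connection timeout"),
]
for ts, line in stream:
    if watcher.observe(ts, line):
        print(f"ALERT at t={ts}s: {line}")
```

Requiring several matches within a window, rather than alerting on every single error line, is one simple guard against the alert overload discussed earlier.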

How does logging relate to observability?

Logging is one of the three pillars of observability, alongside metrics (monitoring) and distributed traces. Logs give you the event-level detail you need to diagnose root causes after monitoring detects a problem. Without logs, observability is incomplete: you know something is broken but not why.

Where Does Observability Fit In?

Logging and monitoring are two of the three pillars of observability. The third is distributed tracing. Each solves a different problem, but observability is what you get when you wire all three together so you can actually debug production issues without guessing.

Here's how they break down:

- Logging gives you the raw detail: individual events, stack traces, request payloads. It's forensic.

- Monitoring gives you the aggregate view: dashboards, SLOs, alerting. It's operational.

- Tracing connects the dots across services, showing how a single request flows through your system.

When all three are in place, you can ask arbitrary questions about your system without deploying new code. Your monitoring alerts you to high latency, your traces show which service is slow, and your logs reveal the exact query causing the bottleneck.

The point: don't treat logging and monitoring as separate projects. Set them up as part of the same observability stack, with shared context (like correlation IDs and trace IDs) flowing across all three signals.
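In Python, one way to stamp a correlation ID on every log line, without passing it through each function call, is a `contextvars.ContextVar` combined with a `logging.Filter`. This is a minimal sketch; the `correlation_id` variable, the `handle_request` function, the `checkout` logger name, and the `corr_` prefix are assumptions for illustration. OpenTelemetry offers logging integrations that inject trace IDs in a similar spirit.

```python
import logging
import uuid
from contextvars import ContextVar

# Holds the current request's correlation ID; "-" when outside any request
correlation_id = ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Attach the current correlation ID to every record passing through the handler."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True  # never drop records; only enrich them


handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s"))
logger = logging.getLogger("checkout")
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def handle_request(incoming_id=None):
    """Reuse an upstream correlation ID if one was sent, else mint a new one."""
    token = correlation_id.set(incoming_id or f"corr_{uuid.uuid4().hex[:6]}")
    try:
        logger.info("Request received")
        logger.info("Calling payment-processor")  # the same ID appears on both lines
    finally:
        correlation_id.reset(token)  # restore the previous value for this context


handle_request("corr_abc123")
```

Propagating the same ID in outgoing request headers lets the downstream service reuse it, which is what makes cross-service log searches line up with traces.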

Conclusion

In the logging vs monitoring debate, the winner is clear: you need both. They're complementary tools in your DevOps arsenal, not competitors.

Start by implementing basic logging and the four golden signals of monitoring. Then iterate and improve based on real incidents and feedback.

FAQs

Do I need both logging and monitoring?

Yes, absolutely. They serve different purposes and complement each other. Monitoring tells you when something's wrong; logging helps you figure out why.

How much logging is too much?

If your logs are costing a fortune to store and you're not using them to solve problems, you're probably logging too much. Focus on actionable information rather than verbose output.

What metrics should every DevOps team monitor?

At a minimum: CPU, memory, disk usage, request latency, error rates, and throughput for each service. Add business metrics that matter to your specific application.

How do I convince management to invest in better observability?

Track the time spent troubleshooting incidents before and after implementing good logging and monitoring. The reduction in MTTR (Mean Time To Resolution) makes a compelling business case.

Should developers or operations handle monitoring?

In true DevOps fashion, it should be a shared responsibility. Developers know what needs to be logged and monitored, while operations has expertise in setting up the infrastructure to do so.

Can't I just use logs for everything?

While you technically could extract metrics from logs, it's usually inefficient and expensive at scale, since every query has to scan and parse raw log text. Purpose-built monitoring systems are optimized for time-series data and alerting.