As the need for observability in distributed systems grows, OpenTelemetry has emerged as a crucial tool for gathering metrics, traces, and logs across different environments.

Django, a popular web framework for Python, is no exception, and integrating OpenTelemetry with Django can elevate your application’s monitoring and performance-tracking capabilities.

In this guide, we’ll walk through the process of setting up OpenTelemetry with Django, cover common pitfalls, and explore advanced configuration options to maximize your observability setup.

Introduction to Django

Django is a high-level Python web framework designed to simplify web development by providing robust tools and a clean, pragmatic design. It follows the "batteries-included" philosophy, offering everything you need to build a web application, including:

- MVT architecture: Django follows the Model-View-Template (MVT) pattern, a variant of MVC that organizes code into Models (data), Views (request-handling logic), and Templates (presentation).

- ORM (Object-Relational Mapping): It simplifies database interactions by allowing developers to work with databases using Python objects.

- Admin interface: Django automatically generates an admin dashboard to manage your application’s content.

- Security features: It includes built-in protection against common web vulnerabilities like SQL injection, CSRF, and XSS.

- Scalability and flexibility: Django is designed to scale with your project, handling high traffic and complex requirements.

- URL routing: It maps URLs to views, making it easy to handle requests and return the appropriate response.

Django’s ease of use, comprehensive features, and focus on security make it a popular choice for developers building modern web applications.

Benefits of Integrating OpenTelemetry with Django

With OpenTelemetry, you can:

- Monitor application performance: Track the response time of views, database queries, and external API calls.

- Trace requests: Follow the path of requests through your application, services, and external systems.

- Improve debugging: With comprehensive tracing and logging, debugging becomes easier by offering context about what’s happening in your app at any point.

Installing and Configuring OpenTelemetry in Django

To get started with OpenTelemetry in Django, we first need to install the required dependencies. OpenTelemetry provides SDKs for automatic and manual instrumentation, allowing flexibility in how you capture telemetry data.

1: Install Required Packages

Run the following command to install OpenTelemetry for Django:

pip install opentelemetry-distro opentelemetry-instrumentation-django
pip install opentelemetry-exporter-otlp

- opentelemetry-distro: Provides the core OpenTelemetry distribution.

- opentelemetry-instrumentation-django: Enables automatic tracing for Django applications.

- opentelemetry-exporter-otlp: Exports telemetry data over OTLP to an OpenTelemetry Collector or to OTLP-compatible backends such as Jaeger or Last9.

2: Enable Auto-Instrumentation (Quick Setup)

For a quick and easy way to get tracing without modifying your application code, use OpenTelemetry’s auto-instrumentation:

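opentelemetry-instrument python manage.py runserver --noreload

This approach automatically instruments Django and any supported dependencies (like database queries, HTTP calls, etc.), making it an easy way to start collecting traces. The --noreload flag keeps Django's autoreloader from spawning a second process, which is known to interfere with auto-instrumentation.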

3: Manual Instrumentation (More Control)

If you need granular control over your tracing setup, manual instrumentation is the way to go. This requires initializing OpenTelemetry in your Django settings or middleware.

We'll cover manual instrumentation in the next section.

How Does Manual Instrumentation in Django Work?

While auto-instrumentation is great for a quick setup, it doesn’t provide fine-grained control over what gets traced.

Manual instrumentation allows you to customize trace spans, add meaningful attributes, and get deeper insights into your Django application.

Step 1: Configure OpenTelemetry in Django Settings

In your Django project, modify settings.py to set up OpenTelemetry manually:

from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.resources import Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# Define the resource (helps identify the service)
resource = Resource.create({"service.name": "django-app"})

# Set up the tracer provider
trace.set_tracer_provider(TracerProvider(resource=resource))
tracer = trace.get_tracer_provider().get_tracer(__name__)

# Set up the OTLP exporter to send traces
otlp_exporter = OTLPSpanExporter(endpoint="http://localhost:4317", insecure=True)
trace.get_tracer_provider().add_span_processor(BatchSpanProcessor(otlp_exporter))

This configuration:

- Defines the service name for easier trace identification.

- Initializes a tracer for capturing spans.

- Exports traces to an OpenTelemetry Collector or a compatible backend.
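Hard-coding the service name and endpoint works for a first run, but these values usually differ between environments. The following is a minimal sketch, assuming you prefer to read them from the standard OTEL_SERVICE_NAME and OTEL_EXPORTER_OTLP_ENDPOINT environment variables; the helper name otel_settings_from_env and the fallback values are hypothetical:

```python
import os


def otel_settings_from_env(environ=None):
    """Return the service name and OTLP endpoint, falling back to local defaults.

    Hypothetical helper: the function name and the default values are
    illustrative; only the environment variable names are standard.
    """
    environ = os.environ if environ is None else environ
    return {
        "service_name": environ.get("OTEL_SERVICE_NAME", "django-app"),
        "endpoint": environ.get("OTEL_EXPORTER_OTLP_ENDPOINT", "http://localhost:4317"),
    }


settings = otel_settings_from_env({"OTEL_SERVICE_NAME": "checkout"})
print(settings["service_name"], settings["endpoint"])
```

The returned values can be passed to Resource.create and OTLPSpanExporter in place of the literals. The SDK and the OTLP exporter also read these variables on their own when no explicit value is given, so the helper is mainly useful when you want the defaults visible in settings.py.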

Step 2: Manually Create Traces in Django Views

Once OpenTelemetry is set up, you can manually create spans in your Django views to track specific operations:

from django.http import JsonResponse
from opentelemetry import trace

tracer = trace.get_tracer(__name__)

def sample_view(request):
    with tracer.start_as_current_span("process_request") as span:
        span.set_attribute("http.method", request.method)
        span.set_attribute("user.id", request.user.id if request.user.is_authenticated else "anonymous")

        # Simulate some processing
        data = {"message": "OpenTelemetry manual tracing in Django!"}
        return JsonResponse(data)

What’s Happening Here?

- A new span (process_request) is created when the view is called.

- Custom attributes (HTTP method, user ID, etc.) are added, making it easier to filter and analyze traces.

- The trace will be linked to auto-instrumented traces if auto-instrumentation is also enabled.

Step 3: Adding Tracing to Middleware (Optional)

To trace all incoming requests, you can wrap Django’s middleware:

from opentelemetry import trace

tracer = trace.get_tracer(__name__)

class OpenTelemetryMiddleware:
    def __init__(self, get_response):
        self.get_response = get_response

    def __call__(self, request):
        # Keep the span open while the rest of the stack handles the request
        with tracer.start_as_current_span("middleware_request") as span:
            span.set_attribute("path", request.path)
            span.set_attribute("method", request.method)
            response = self.get_response(request)
            span.set_attribute("status_code", response.status_code)
            return response

Add it to MIDDLEWARE in settings.py:

MIDDLEWARE = [
    ...,
    "path.to.OpenTelemetryMiddleware",
]

4. Exporting Telemetry Data from Django

Once OpenTelemetry is capturing traces in your Django app, you need to export the data to an observability backend for analysis.

The most common way is to use the OpenTelemetry Protocol (OTLP) exporter, which sends data to an OpenTelemetry Collector, a tracing system like Jaeger, or a full observability platform like Last9.

Step 1: Configure OTLP Exporter

Update your OpenTelemetry setup in settings.py to export traces via OTLP (gRPC or HTTP):

from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# Define the OTLP exporter
otlp_exporter = OTLPSpanExporter(
    endpoint="http://localhost:4317",  # Change to your OTLP collector or observability backend
    insecure=True,  # Set to False if using TLS
)

# Attach the exporter to the tracer provider
trace.get_tracer_provider().add_span_processor(BatchSpanProcessor(otlp_exporter))

This configuration initializes the OTLP exporter to send traces and attaches it to the tracer provider, ensuring all spans are exported. The default OpenTelemetry Collector gRPC endpoint is localhost:4317, but this should be changed to Last9’s ingestion URL if using Last9.

Step 2: Setting Up OpenTelemetry Collector

The OpenTelemetry Collector is an intermediary service that helps:

- Process telemetry data before sending it to a backend, allowing for filtering, sampling, or transformation.

- Reduce load on your application by handling telemetry separately.

- Send telemetry to multiple destinations, such as Last9 or Jaeger for traces and Prometheus for metrics.

Collector Configuration (otel-collector-config.yaml)

If using an OpenTelemetry Collector, configure it to receive traces from Django:

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

exporters:
  otlphttp:
    endpoint: "https://api.last9.io/v1/otlp" # Change to your Last9 endpoint

service:
  pipelines:
    traces:
      receivers: [otlp]
      exporters: [otlphttp]

Start the collector with Docker:

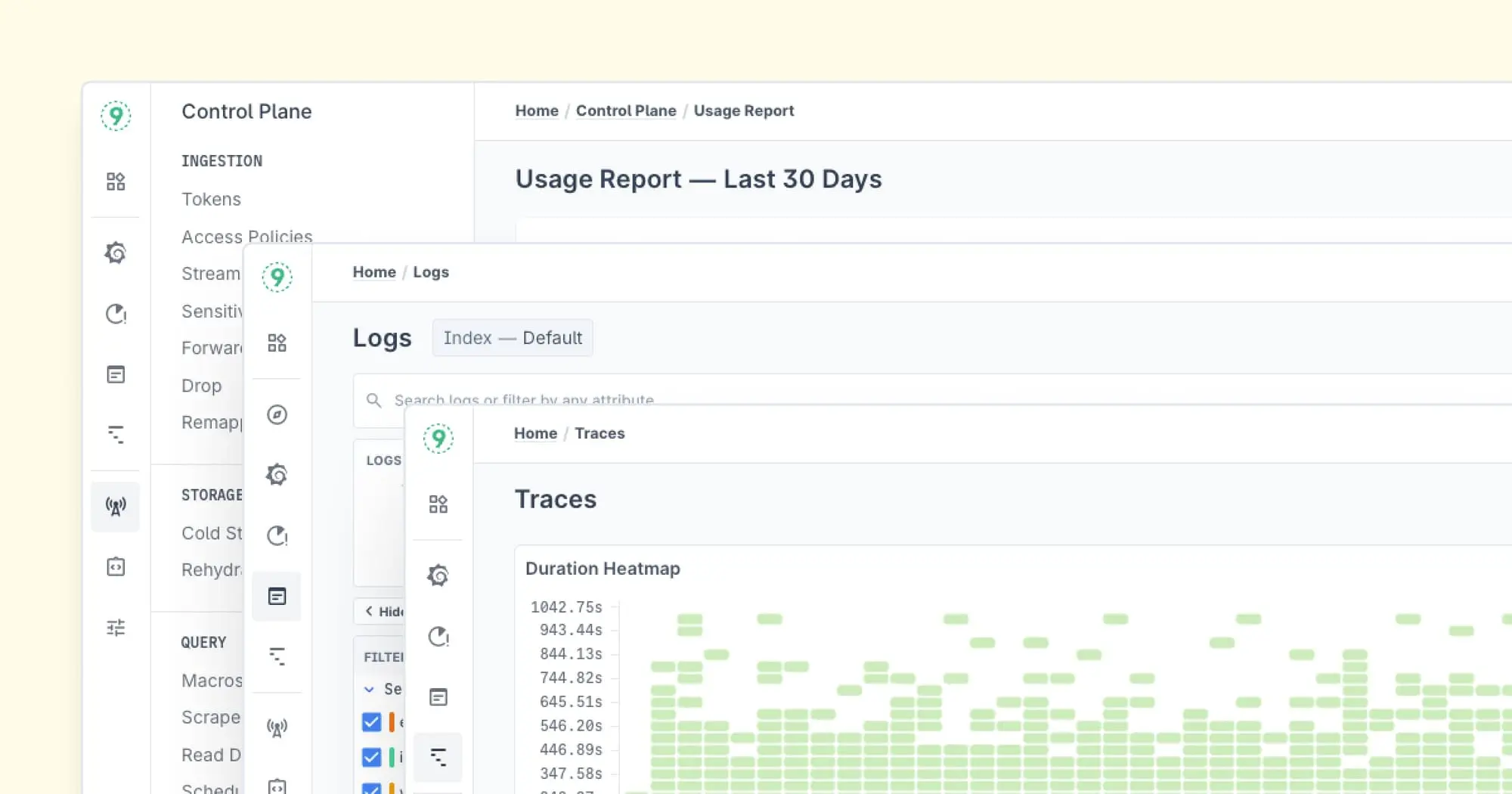

docker run --rm -p 4317:4317 -p 4318:4318 -v $(pwd)/otel-collector-config.yaml:/etc/otel/config.yaml otel/opentelemetry-collector --config=/etc/otel/config.yaml

Step 3: Verifying Traces in Last9

If using Last9, traces should now appear in Last9’s trace visualization UI, where you can analyze trace performance, debug AI workflows alongside infrastructure, and handle high-cardinality data efficiently.

For debugging, run:

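curl -X POST http://localhost:4318/v1/traces -H "Content-Type: application/json" -d '{}'

A successful response confirms that the Collector's OTLP/HTTP receiver is reachable. You can also check the Collector and application logs to ensure traces are being exported correctly.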
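The same reachability check can be done from Python, which is handy inside a container that has no curl. This is a minimal sketch using only the standard library; port_is_open is a hypothetical helper name, and the ports are the conventional OTLP gRPC (4317) and HTTP (4318) ports:

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for port in (4317, 4318):  # OTLP gRPC and OTLP HTTP
        state = "reachable" if port_is_open("localhost", port) else "not reachable"
        print(f"localhost:{port} is {state}")
```

An open port only shows that something is listening; it does not prove the listener is a Collector or that its pipeline is configured correctly.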

5. Performance Optimization & Best Practices

Adding observability to your Django application should not come at the cost of performance. Here are key optimizations to ensure efficient tracing without excessive overhead.

1. Use Sampling to Control Data Volume

Collecting every trace can lead to high storage costs and performance degradation. OpenTelemetry allows sampling, which determines how many traces to capture.

Modify the tracer provider to use a probabilistic sampler:

from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.sampling import TraceIdRatioBased

trace.set_tracer_provider(
    TracerProvider(sampler=TraceIdRatioBased(0.5))  # Captures 50% of traces
)

For high-throughput applications, consider tail-based sampling via the OpenTelemetry Collector.
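It helps to know how a ratio-based sampler reaches its decision. The following is a simplified sketch of the idea, not the SDK's code: the trace ID is compared against a bound derived from the ratio, so the outcome is deterministic for a given trace. The function name ratio_decision is hypothetical:

```python
TRACE_ID_LOW_BITS = (1 << 64) - 1  # only the low 64 bits of the trace ID are used


def ratio_decision(trace_id, ratio):
    """Return True when a trace with this ID would be kept at the given ratio."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0.0 and 1.0")
    bound = round(ratio * (TRACE_ID_LOW_BITS + 1))
    return (trace_id & TRACE_ID_LOW_BITS) < bound


print(ratio_decision(0x0000000000000001, 0.5))  # → True, the ID falls under the bound
print(ratio_decision(0xFFFFFFFFFFFFFFFF, 0.5))  # → False, the ID falls above the bound
```

Because the decision depends only on the trace ID, services that sample with the same ratio tend to keep the same traces, which avoids traces with missing segments.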

2. Optimize Span Creation

Avoid excessive span creation, which can clutter traces and slow down processing. Use spans only for key operations such as database queries, external API calls, background tasks, and critical business logic.

Example of tracing an API call efficiently:

import requests
from opentelemetry import trace

tracer = trace.get_tracer(__name__)

def fetch_data():
    with tracer.start_as_current_span("external_api_call") as span:
        response = requests.get("https://api.example.com/data")
        span.set_attribute("http.status_code", response.status_code)
        return response.json()

3. Minimize Context Propagation Overhead

Context propagation ensures traces remain linked across services, but excessive metadata can slow down requests. Use baggage items selectively and avoid unnecessary headers in distributed tracing.

For Django middleware, limit baggage propagation:

from opentelemetry.baggage import get_baggage, set_baggage

# set_baggage returns a new Context rather than modifying the current one
ctx = set_baggage("user_id", "12345")  # Only set essential attributes
user_id = get_baggage("user_id", ctx)

4. Batch and Compress Trace Exports

Sending telemetry data in real time can introduce network overhead. Instead, use batch processing to export spans efficiently.

Update your exporter configuration:

from opentelemetry import trace
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# otlp_exporter is the OTLPSpanExporter created in the exporter setup above
trace.get_tracer_provider().add_span_processor(
    BatchSpanProcessor(otlp_exporter)  # Batches spans before exporting
)

Key Features of OpenTelemetry with Django

Once you've got OpenTelemetry set up in your Django app, you'll unlock a wealth of telemetry data that helps you monitor, troubleshoot, and optimize your application.

Let’s break down some of the standout features you’ll get from OpenTelemetry:

Distributed Tracing

One of OpenTelemetry’s most powerful features is distributed tracing. This allows you to track the flow of a request as it hops between services, which is particularly valuable in microservice-based systems.

With tracing, you can trace the path of a single request from the frontend to the backend, pinpointing bottlenecks or errors along the way. It’s like having a bird’s-eye view of your entire request flow, from start to finish, helping you identify the areas that need attention.

Metrics Collection

OpenTelemetry also collects metrics to give you a detailed picture of your app’s health. It tracks various performance aspects, including request latency, database query times, and error rates.

With tools like Prometheus or Grafana, you can easily visualize this data and keep tabs on how your Django application is performing over time. It’s like putting a stethoscope on your application to monitor its health in real-time.

Log Correlation

Log correlation ties your logs to traces and metrics, providing additional context for errors and performance issues.

Integrating OpenTelemetry with logging tools like Elasticsearch or Stackdriver allows you to correlate logs with specific traces and metrics.

This correlation is incredibly useful when debugging, as it lets you see not only where things went wrong but also what led up to the issue. It’s like connecting the dots between different pieces of data to tell a complete story.

Methods for Instrumenting Database Engines in Django

Instrumenting database queries in Django with OpenTelemetry allows you to capture telemetry data for SQL queries, enabling you to monitor database performance and diagnose issues.

Below are the steps for setting up OpenTelemetry with different database engines, such as PostgreSQL, MySQL, and SQLite.

Step 1: Install OpenTelemetry and Database Instrumentation Packages

First, install the required OpenTelemetry packages along with the database-specific instrumentation for Django:

pip install opentelemetry-api opentelemetry-sdk opentelemetry-exporter-otlp opentelemetry-instrumentation-django opentelemetry-instrumentation-sqlite3 opentelemetry-instrumentation-psycopg2 opentelemetry-instrumentation-mysqlclient

This installs the core OpenTelemetry SDK, the OTLP exporter, Django instrumentation, and instrumentation for the database drivers Django commonly uses: sqlite3 (SQLite), psycopg2 (PostgreSQL), and mysqlclient (MySQL).

Step 2: Configure OpenTelemetry in settings.py

Next, configure OpenTelemetry tracing in your settings.py. This includes setting up the trace provider and exporter and enabling middleware to capture telemetry data from your database queries.

Example Configuration for settings.py:

from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.instrumentation.django import DjangoInstrumentor
from opentelemetry.instrumentation.mysqlclient import MySQLClientInstrumentor
from opentelemetry.instrumentation.psycopg2 import Psycopg2Instrumentor
from opentelemetry.instrumentation.sqlite3 import SQLite3Instrumentor
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# Set up OpenTelemetry trace provider
trace.set_tracer_provider(TracerProvider())

# Configure the OTLP exporter (Jaeger accepts OTLP natively on port 4317)
otlp_exporter = OTLPSpanExporter(
    endpoint="http://localhost:4317",  # Update with the actual Jaeger or Collector host
    insecure=True,
)

# Add a span processor to export data
span_processor = BatchSpanProcessor(otlp_exporter)
trace.get_tracer_provider().add_span_processor(span_processor)

# Instrument Django
DjangoInstrumentor().instrument()

# Instrument the database drivers (keep only the one your project uses)
SQLite3Instrumentor().instrument()
Psycopg2Instrumentor().instrument()
MySQLClientInstrumentor().instrument()

In this configuration:

- OTLPSpanExporter sends tracing data over OTLP to a Jaeger instance listening on localhost:4317. You can point it at an OpenTelemetry Collector or another OTLP-compatible backend if needed.

- SQLite3Instrumentor, Psycopg2Instrumentor, and MySQLClientInstrumentor capture telemetry data for SQLite, PostgreSQL, and MySQL, respectively.

Step 3: Configure Database Engines for Telemetry

1. Instrumenting PostgreSQL Database

To capture telemetry data for PostgreSQL queries executed within Django's ORM, ensure your database is set up in settings.py:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': 'your_database_name',
        'USER': 'your_username',
        'PASSWORD': 'your_password',
        'HOST': 'your_host',
        'PORT': 'your_port',
    }
}

The PostgreSQL instrumentation automatically traces queries, capturing execution times and errors.

2. Instrumenting MySQL Database

For MySQL, ensure your database connection is configured properly in settings.py:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.mysql',
        'NAME': 'your_database_name',
        'USER': 'your_username',
        'PASSWORD': 'your_password',
        'HOST': 'your_host',
        'PORT': 'your_port',
    }
}

MySQL instrumentation captures SQL queries like SELECT, INSERT, UPDATE, and DELETE.

3. Instrumenting SQLite Database

If you're using SQLite, especially for development, make sure your configuration in settings.py is set as follows:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.sqlite3',
        'NAME': BASE_DIR / 'db.sqlite3',
    }
}

SQLite queries will be traced similarly to PostgreSQL and MySQL.

Step 4: Monitor Traces and Metrics

Once the instrumentation is in place, OpenTelemetry will trace all database queries. These traces will be sent to your configured exporter (e.g., Jaeger).

Monitoring with Jaeger

To view the traces, you can use the Jaeger UI at http://localhost:16686. The Jaeger dashboard allows you to:

- Search traces by operation name, database queries, or request IDs.

- Visualize the performance metrics and timelines of SQL queries.

Using Other Exporters

If you're using exporters like Zipkin or Prometheus, make sure the corresponding dashboards (e.g., Zipkin UI or Grafana for Prometheus) are configured to visualize the traces and metrics.

Step 5: Advanced Customization (Optional)

If you need more control over your telemetry data, you can extend OpenTelemetry to trace custom SQL queries or database operations outside of Django's ORM.

Example of Custom Instrumentation:

from django.db import connection
from opentelemetry import trace

tracer = trace.get_tracer(__name__)

def custom_sql_query():
    with tracer.start_as_current_span("custom-sql-query") as span:
        # Execute your custom query here
        with connection.cursor() as cursor:
            cursor.execute("SELECT 1")
            row = cursor.fetchone()
        span.set_attribute("db.operation", "SELECT")
        return row

You can apply the same approach to SQLite, PostgreSQL, or MySQL, since the span wraps whatever the configured driver executes. This allows you to track additional database operations that may not be captured by the default ORM tracing.

Best Practices for Database Instrumentation with OpenTelemetry

When integrating OpenTelemetry for database instrumentation in your Django application, it's important to balance the benefits of detailed observability with the performance demands of your application.

Here are several best practices and performance considerations to help you optimize your OpenTelemetry setup:

1. Sampling

Implement sampling to control the volume of trace data sent to your backends. Instead of tracing every request, you can trace only a subset based on a defined sampling rate.

- Dynamic Sampling: Use dynamic sampling strategies based on factors like request type, request duration, or custom business logic to trace only critical operations.

- Fixed Sampling: Apply a fixed sampling rate (e.g., 1 out of every 10 requests) to limit trace volume.

Example:

from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.sampling import TraceIdRatioBased

trace.set_tracer_provider(TracerProvider(sampler=TraceIdRatioBased(0.1)))  # 10% sampling rate

2. Use of Span Limits

Limit the number of spans created by your application. OpenTelemetry automatically creates spans for various operations, but you can limit span creation for less important tasks. For instance, avoid instrumenting background jobs or static asset handling unless necessary.

3. Selective Instrumentation

Instrument only the critical parts of your Django application that require detailed observability, such as API endpoints or database queries. Disable or adjust instrumentation for less important areas.

- Database: Use OpenTelemetry database instrumentation only for specific queries instead of instrumenting all database queries.

- Custom Instrumentation: Apply manual instrumentation only to key business logic, avoiding unnecessary spans in low-traffic areas.
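For request tracing, one way to apply this is the OTEL_PYTHON_DJANGO_EXCLUDED_URLS environment variable, which the Django instrumentation reads as a comma-separated list of regexes. In the sketch below the patterns are examples only, and is_excluded is a hypothetical helper that merely illustrates how such patterns match; it is not part of the instrumentation:

```python
import os
import re

# Comma-separated regexes; requests whose URL matches any of them are not traced.
# The patterns are examples only. Set this before Django instrumentation loads.
os.environ.setdefault("OTEL_PYTHON_DJANGO_EXCLUDED_URLS", "healthz,static/.*")


def is_excluded(url, patterns=None):
    """Illustrative check: does the URL match any comma-separated pattern?"""
    patterns = patterns or os.environ["OTEL_PYTHON_DJANGO_EXCLUDED_URLS"]
    return any(re.search(p.strip(), url) for p in patterns.split(","))


print(is_excluded("http://localhost:8000/healthz"))
print(is_excluded("http://localhost:8000/api/orders"))
```

Health checks and static assets are common candidates because they are frequent and rarely interesting in a trace.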

4. Asynchronous Tracing

For Django applications that use asynchronous views or tasks (e.g., with async/await), make sure spans stay connected across await points. OpenTelemetry's Python context is built on contextvars, so the current span follows a coroutine automatically; the asyncio instrumentation can additionally create spans for selected coroutines and tasks.

Example:

from opentelemetry.instrumentation.asyncio import AsyncioInstrumentor

AsyncioInstrumentor().instrument()

5. Batching and Exporter Tuning

Configure the OpenTelemetry exporter to batch trace data and control the frequency of exports.

Batching reduces the frequency of network calls and improves performance. Use the appropriate exporter (e.g., Jaeger, Zipkin) and configure it to batch spans before sending them.

Example Configuration:

from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.trace.export import BatchSpanProcessor

otlp_exporter = OTLPSpanExporter(endpoint="http://localhost:4317", insecure=True)
span_processor = BatchSpanProcessor(
    otlp_exporter,
    max_export_batch_size=512,   # Spans sent per export call
    schedule_delay_millis=5000,  # Delay between exports
)
trace.get_tracer_provider().add_span_processor(span_processor)

6. Trace Context Propagation Control

Control how trace context is propagated throughout your application. Avoid unnecessary trace propagation in background jobs, non-critical endpoints, or internal services that don’t require full trace visibility.

7. Optimize the Use of Instrumentation Libraries

Some OpenTelemetry instrumentation libraries may be more resource-intensive than others.

When instrumenting Django, ensure you are using efficient, performance-optimized versions of the instrumentation libraries.

- Minimize dependencies: Only use the necessary instrumentation for your stack.

- Custom Instrumentations: Consider writing custom instrumentation for performance-sensitive areas instead of relying on third-party libraries.

8. Adjust Log Level and Span Data

Limit the amount of data you attach to each span. Avoid adding excessive metadata (e.g., large request bodies, full stack traces), which can increase memory usage and slow down processing. Focus on high-level information such as request paths, statuses, and timings.

9. Use Application Performance Monitoring (APM) Solutions

Integrate OpenTelemetry with APM solutions that support automatic optimizations. These tools can adjust sampling rates dynamically based on application load and help manage overhead more efficiently, providing deeper insights with minimal configuration.

10. Monitor and Fine-Tune Over Time

Regularly monitor the performance impact of OpenTelemetry in production. Fine-tune configurations over time as your traffic patterns and infrastructure evolve. For example, you might lower the sampling rate during peak periods or adjust the span processor batch size for better throughput.

Common Pitfalls and How to Avoid Them

While OpenTelemetry provides powerful observability features, there are a few common pitfalls that can arise during integration with Django.

Here’s how to avoid them:

1. Incorrect Configuration

Ensure that all necessary components—such as tracers, exporters, and middleware—are properly configured in your settings.py file. Missing or incorrect configurations can prevent OpenTelemetry from capturing and exporting the right data.

2. Performance Overhead

Tracing can introduce performance overhead, particularly in high-traffic applications. After integrating OpenTelemetry, monitor your application’s performance and adjust the sampling rate or selectively disable tracing for non-critical parts to mitigate the impact.

3. Missing Dependencies

Make sure that all required OpenTelemetry packages are installed and up to date. Incomplete installations or outdated versions can lead to missing traces or metrics, which might affect your observability setup.

4. Exporter Misconfiguration

Double-check your exporter settings to ensure telemetry data is sent to the correct backend (e.g., Jaeger, Zipkin, etc.). Misconfigured exporters can result in data being sent to the wrong destination or not being exported at all.

Troubleshooting and Debugging

If you encounter issues with tracing or metrics collection, here are a few tips to help you troubleshoot:

1. Check Logs

Ensure your OpenTelemetry exporter is correctly configured and check for any errors in the logs. Log messages can often provide helpful insights into misconfigurations or connection issues.
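OpenTelemetry's Python packages log through the standard logging module under the opentelemetry namespace, so export failures can be surfaced by raising the verbosity of that logger. A minimal sketch, assuming console output is acceptable while debugging:

```python
import logging

# Send log output to the console and raise verbosity for OpenTelemetry only.
logging.basicConfig(level=logging.WARNING)
logging.getLogger("opentelemetry").setLevel(logging.DEBUG)
```

In a Django project the same effect can be achieved by adding an "opentelemetry" entry to the LOGGING setting instead of calling basicConfig.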

2. Verify Spans

Use the SDK's ConsoleSpanExporter to print spans to standard output and verify that they are being generated before they ever reach a backend. This can help you identify if certain parts of your application are not being traced correctly.

3. Inspect Traces

Use your backend’s UI (e.g., Jaeger, Zipkin) to inspect the traces. Look for gaps or missing data in the traces to identify where issues might be occurring in the instrumentation or tracing flow.

Conclusion

Integrating OpenTelemetry with Django is a game-changer for monitoring and debugging your application.

With real-time tracing, metric collection, and log correlation, you’ll get valuable insights into your app's performance, identify bottlenecks, and resolve issues more efficiently.

FAQs

What is OpenTelemetry, and how does it work with Django?

OpenTelemetry is an open-source observability framework that helps you collect and send telemetry data—such as traces, metrics, and logs—from your application. In Django, it can be used to trace requests, monitor database queries, and correlate logs, providing a comprehensive view of your app’s performance and health.

How do I set up OpenTelemetry in my Django project?

To set up OpenTelemetry with Django, you need to install the necessary OpenTelemetry packages for Django and your database, configure the OpenTelemetry trace provider in settings.py, and enable tracing for Django components like views and database queries. After that, you can export telemetry data to various backends like Jaeger or Prometheus for analysis.

Does OpenTelemetry impact the performance of my Django application?

Yes, tracing adds some overhead, which is most noticeable under heavy production traffic. However, you can mitigate performance issues by using sampling, limiting the number of spans created, and selectively instrumenting critical parts of your application. Monitoring the impact and adjusting settings over time is essential to ensure minimal performance degradation.

Can OpenTelemetry help with database query performance?

Yes, OpenTelemetry allows you to trace database queries, including SQL query execution time, errors, and latency. This helps you identify slow queries or potential bottlenecks in your database interactions. By instrumenting your Django application’s ORM (for databases like PostgreSQL, MySQL, or SQLite), you can get detailed insights into your database's performance.

How can I correlate logs with traces and metrics in Django?

By integrating OpenTelemetry with logging tools like Elasticsearch or Stackdriver, you can correlate logs with specific traces and metrics. This allows you to gain deeper context when troubleshooting performance issues or errors in your application, as you can see the related logs alongside the traces and metrics.

Can I customize the traces collected by OpenTelemetry in Django?

Yes, OpenTelemetry allows you to add custom instrumentation for specific parts of your application. You can manually create spans for custom operations, such as external API calls or complex database queries, to gain more granular insights into your application’s behavior.

What exporters can I use with OpenTelemetry in Django?

OpenTelemetry supports multiple exporters for sending telemetry data to various backends. Commonly used options include OTLP (accepted by the OpenTelemetry Collector, Jaeger, and many vendors), Zipkin, and Prometheus for metrics. You can configure the exporter that best fits your monitoring stack by setting it up in your settings.py file.

How do I monitor OpenTelemetry traces in Django?

Once OpenTelemetry is set up, you can use tracing backends like Jaeger or Zipkin to monitor traces. These tools allow you to view detailed trace data, such as request paths, database queries, and any potential errors or bottlenecks. For example, in Jaeger, you can use the UI to search for traces and visualize their performance metrics.

What are the best practices for using OpenTelemetry with Django?

Some best practices for OpenTelemetry in Django include:

- Use sampling to control the volume of trace data and reduce overhead.

- Limit instrumentation to critical areas of your application, such as key API endpoints and database queries.

- Use asynchronous tracing for async views or tasks to ensure accurate trace data.

- Continuously monitor and adjust OpenTelemetry settings as your application scales or changes.

What should I do if I encounter issues with OpenTelemetry in Django?

If you're experiencing issues, start by checking the OpenTelemetry logs for errors related to exporters or configuration. You can also verify that spans are being generated by using OpenTelemetry’s debugging tools. Additionally, inspect the trace data in the backend UI (like Jaeger) to identify any gaps or missing information.