Imagine opening your incident channel to find this alert:

    [Alert] PrometheusMemoryHigh
    • Pod: prometheus-0
    • Cluster: prod-cluster
    • Severity: critical
    • Summary: Prometheus consuming 30GB+ memory due to cardinality growth
    • Started: 2025-09-29 13:45 UTC

Prometheus has long been the backbone of monitoring, but as deployments scale, it faces natural limits.

Kubernetes clusters can generate hundreds of thousands of active time series. Each new label combination (for example, a pod name, replica ID, or unique request path) adds to memory usage, increases index size, and slows queries. Once a pod grows past tens of gigabytes of RAM, queries that used to finish in seconds begin to time out.

The default advice is to drop labels. But which ones?

- Removing customer_tier means you can’t track whether premium users are seeing slower responses.

- Dropping feature_flag takes away the ability to link failures to experiments.

- Ignoring deployment_version makes canary regressions harder to catch.

What has changed is the shape of telemetry itself. Applications no longer emit just counters and gauges like http_requests_total or cpu_usage_seconds_total.

A single request may cross five services, span three availability zones, and include attributes such as service.version, feature.flag, and user.tier. Each of these dimensions multiplies series counts, but they also tell the real story of system behavior.

The real question is whether your observability stack can manage that dimensional data without sacrificing either performance or visibility.

In our High Cardinality series, we talked about high cardinality, how dimensionality drives it, and why rich data often reveals aspects that aggregated metrics miss. This piece explores how OpenTelemetry and modern tooling help you work with high-cardinality data without the usual trade-offs.

Scaling Limits in Metrics Systems

If you’re running cloud-native systems today, you’re almost certainly using a metrics platform: Prometheus, InfluxDB, or a SaaS observability platform like Datadog or New Relic. Each of these has proven its value in production, but they all face the same challenge when high-cardinality data enters the picture.

A base HTTP metric may start simple:

    # Typical HTTP latency metric
    http_request_duration_seconds{
      service="checkout",
      method="POST",
      endpoint="/api/payment"
    }

But once you add the context developers rely on for debugging (user tiers, regions, feature flags), the series count grows quickly:

    http_request_duration_seconds{
      service="checkout",
      method="POST",
      endpoint="/api/payment",
      user_tier="premium",          # 3 values: free, pro, premium
      region="us-west-2",           # 6 deployment regions
      feature_flag="new_checkout"   # 8 active flags
    }

The math explains why:

    # Base metric: 5 services × 4 methods × 50 endpoints = 1,000 series
    # Add dimensions: 1,000 × 3 tiers × 6 regions × 8 flags = 144,000 series
    # Scale from 5 to 15 services: 144,000 × 3 = 432,000 series

Why Memory Usage Climbs

Most metrics libraries, including Prometheus clients, maintain in-memory structures for every unique label combination.

    // Example from a Go service with the Prometheus client
    import "github.com/prometheus/client_golang/prometheus"

    var httpDuration = prometheus.NewHistogramVec(
        prometheus.HistogramOpts{Name: "http_request_duration_seconds"},
        []string{"service", "method", "endpoint", "user_tier", "region", "feature_flag"},
    )

    // Conceptually (pseudocode, not the client's real internals),
    // every unique combination is a distinct in-memory object
    map[string]*prometheus.Histogram{
        "checkout,POST,/api/payment,premium,us-west-2,new_checkout": &histogram1,
        "checkout,POST,/api/payment,free,us-east-1,old_checkout":    &histogram2,
    }

This model works reliably at lower scales, but as attributes multiply, the state explodes. The effect is similar across platforms:

- Prometheus pods: ~100k series → ~2.5 GB RAM; 1M series → ~25 GB RAM; beyond 2M series, OOMKills are common.

- Datadog: Each unique series counts as a custom metric; millions of series can translate into six-figure monthly bills.

- InfluxDB: InfluxDB’s series index grows with cardinality, driving up memory use.

Coveo’s case study documented their Prometheus pod hitting 30GB memory limits with high-cardinality metrics, while another production environment reported memory usage climbing past 55GB due to cardinality issues.

The Trade-off Dilemma

When metric cardinality grows, teams often respond with compromises — dropping labels, sampling data, pre-aggregating metrics, or relying on vendor-enforced limits.

Each of these approaches helps you stay within resource budgets, but all of them come at the same cost: you lose visibility into the details that matter most during debugging.

Why Averages Can Miss Critical Issues

Averages are useful for understanding overall system health, but they often hide the very problems your users feel. The effect becomes sharper as applications become more distributed and user conditions more variable.

Take API latency monitoring as an example. A dashboard might show an average response time of 200 ms — a number that looks healthy at first glance. But behind that average:

- Most requests complete in 50–100 ms.

- A small percentage takes 2–5 seconds because of specific downstream conditions.

- Edge cases affect only certain user segments or regions.

The overall average is fine, but some users are having a very poor experience. And without dimensional context, it’s difficult to isolate those patterns.

That’s when the right questions become unanswerable:

- Are premium users in us-west-2 the ones experiencing latency?

- Did the last deployment add delays for traffic behind a specific feature flag?

- Are retries increasing only for one customer cohort?

This is the core tension: the dimensions that make debugging possible are the same ones that traditional monitoring architectures struggle to handle at scale.

And this is where OpenTelemetry’s design makes a difference — it treats attributes as first-class data, giving you the context you need without forcing you to sacrifice performance.

OpenTelemetry’s Architectural Solution

OpenTelemetry (OTel) is an open-source framework for generating, collecting, and exporting telemetry data from your applications. It provides a standardized approach to observability that works across different languages, frameworks, and backends.

One of the architectural choices that makes OTel well-suited for high-cardinality data is delta temporality — a way of reporting metrics based on changes over time rather than maintaining cumulative state.

The Two Approaches

Cumulative temporality: Think of it as a car’s odometer, which tracks the total distance traveled since the car was made. Your applications work the same way with metrics like http_requests_total = 1,247 (total since the last restart).

Every time your application sees a new combination of labels, such as a new user tier or a different endpoint, it creates another “odometer” in memory to track that specific combination. If you have 50,000 unique label combinations, you’re maintaining 50,000 running totals in RAM. And once created, these totals stay in memory for the life of the process.

Delta temporality: This is more like tracking your daily step count. Instead of reporting “I’ve walked 2.3 million steps since I was born,” you report “I walked 8,500 steps today.” Your applications work similarly: they report changes like http_requests = +12 (in the last 15 seconds).

The key difference: after each report, your application resets its counters and starts fresh. As OpenTelemetry explains, applications can “completely forget about what has happened during previous collection periods.”

This means that whether your application encounters 1,000 or 1 million different label combinations over its lifetime, it only needs memory for whatever combinations appear in the current reporting window.

    // Configure OpenTelemetry for delta temporality
    exporter, err := otlpmetricgrpc.New(
        context.Background(),
        otlpmetricgrpc.WithTemporalitySelector(
            func(kind sdkmetric.InstrumentKind) metricdata.Temporality {
                return metricdata.DeltaTemporality
            },
        ),
    )

The Memory Impact

Delta temporality is a way to “unburden the client from keeping high-cardinality state.” Services processing millions of interactions can use the same RAM footprint as those handling thousands.

Grafana puts it this way: cumulative temporality requires remembering “every combination of the metric and label sets ever seen,” while delta only needs the combinations present between exports.

Where the Work Goes Instead

With delta temporality, applications focus on emitting telemetry rather than managing long-lived metric state. The responsibility for aggregation and storage shifts to the OTel Collector and your backend, which are designed for horizontal scaling and can handle high-cardinality workloads more efficiently.

This design allows you to capture rich, dimensional telemetry without running into the memory pressure that often forces teams to drop labels or pre-aggregate metrics.

OpenTelemetry Collector: The Data Processing Layer

Delta temporality keeps your applications lean, but once telemetry leaves the app, something still has to process it, decide what’s useful, and route it to the right destination. That role falls to the OpenTelemetry Collector.

The Collector is where you shape telemetry before it reaches storage. Since every metric, span, and log flows through it, this becomes the control point where you can refine data — keeping what’s valuable, reducing what’s redundant, and making sure backends get data they can handle efficiently.

What You Can Do in the Collector

Filter low-value attributes: Fields like request_id or session_id are useful in logs or traces but add little to metrics. Dropping them early keeps the metric series meaningful.

Normalize unpredictable values: Endpoints like /user/123/orders/456 can balloon into thousands of unique series. A quick transform to /user/{id}/orders/{id} keeps queries useful without flooding storage.

Aggregate before export: Instead of shipping every raw data point, you can roll up metrics at the edge. That reduces noise and keeps backends focused on trends that matter.

Route flexibly: The same telemetry can feed multiple systems — for example, traces to your debugging platform, metrics to Prometheus, logs to your search system.

Example:

Kubernetes metadata illustrates the challenge clearly. Every pod gets its own name, IP, and container ID — all of which change constantly as workloads scale up, down, or move across nodes. If you attach these directly as metric labels, you’ll end up with millions of unique series that don’t actually help you understand system health.

In practice, most teams only need stable signals like namespace, deployment, or service to monitor application behavior. The Collector gives you a clean way to filter out the noisy, ever-changing values while keeping the metadata that matters for queries and dashboards.

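    # Collector configuration for managing Kubernetes attributes
    processors:
      k8sattributes:
        extract:
          metadata:
            - k8s.deployment.name
            - k8s.namespace.name
            - k8s.node.name
          labels:
            # Label entries map a pod label to an attribute. The pod label
            # keys below are assumptions; use the labels your workloads carry.
            - tag_name: service.name
              key: app.kubernetes.io/name
              from: pod
            - tag_name: app.version
              key: app.kubernetes.io/version
              from: pod
          # Skip high-cardinality pod-specific attributes
          annotations: []

      transform:
        metric_statements:
          # Kubernetes metadata is attached as resource attributes,
          # so the deletes run in the resource context
          - context: resource
            statements:
              # Keep deployment info, drop instance-specific data
              - delete_key(attributes, "k8s.pod.name")
              - delete_key(attributes, "k8s.pod.uid")

This approach means backends get consistent, queryable data without drowning in unnecessary labels, while applications stay focused on business logic rather than telemetry management.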

How to Work with High Cardinality

The usefulness of telemetry depends on how attributes are defined, refined, and applied consistently. Most teams work through a sequence of choices that determine whether high-cardinality data becomes an advantage or creates operational overhead.

Start with Attribute Hierarchy

Attributes exist at multiple layers of detail.

- Resource-level attributes such as deployment.environment or cloud.region describe the execution environment.

- Service-level attributes such as service.name and service.version tie telemetry to a particular workload or rollout.

- Request-level attributes such as http.method or http.route capture individual operations.

Thinking in this hierarchy provides a framework for deciding which attributes belong in metrics for long-term aggregation and which are better suited to traces or logs, where granularity is preserved without multiplying series counts.

Distinguish Value from Noise

Not every attribute adds meaningful analytical value. Fields such as customer.segment or feature.flag help identify differences across user groups or staged rollouts. In contrast, identifiers such as request_id or session_id generate effectively unbounded uniqueness while offering little value for metrics analysis.

Allowing these into metrics inflates series counts and storage requirements without making queries more insightful. A practical approach is to retain them in traces or logs, where they improve debugging without overwhelming metrics.

Normalize Before It Multiplies

Some attributes are useful but too granular in raw form. Paths such as /user/123/orders/456 generate a unique label for every embedded ID. Normalizing them into /user/{id}/orders/{id} preserves meaning while reducing unnecessary uniqueness.

Numeric values can be bucketed into ranges, and categorical values can be grouped to prevent queries from fragmenting across hundreds of low-value series. Normalization retains the signal while keeping series counts bounded.
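Both techniques fit in a short Go sketch. The helper names are illustrative, the rule "any all-digit path segment is an ID" is a simplifying assumption (UUIDs or slugs would need their own rules), and the bucket boundaries are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// isNumeric reports whether s is a non-empty string of ASCII digits.
func isNumeric(s string) bool {
	if s == "" {
		return false
	}
	for _, r := range s {
		if r < '0' || r > '9' {
			return false
		}
	}
	return true
}

// normalizeRoute replaces all-digit path segments with an {id} placeholder.
func normalizeRoute(path string) string {
	segments := strings.Split(path, "/")
	for i, s := range segments {
		if isNumeric(s) {
			segments[i] = "{id}"
		}
	}
	return strings.Join(segments, "/")
}

// bucketLabel maps a numeric value to one of four bounded label values.
func bucketLabel(v int) string {
	switch {
	case v < 10:
		return "<10"
	case v < 100:
		return "10-99"
	case v < 1000:
		return "100-999"
	default:
		return "1000+"
	}
}

func main() {
	fmt.Println(normalizeRoute("/user/123/orders/456"))
	fmt.Println(bucketLabel(42), bucketLabel(5000))
}
```

However many user and order IDs pass through, the route label collapses to a single value and the numeric label can take at most four.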

OTel Built-in Guardrails

OpenTelemetry includes several mechanisms to enforce these choices consistently across services:

Views are configuration rules that determine which attributes remain attached to a metric and how that metric is aggregated. For example, an HTTP server latency histogram can be configured to keep service.name, http.method, and http.route, while discarding user.id. This ensures that metrics remain consistent and queryable.

SDK cardinality limits protect against runaway series growth. Most OpenTelemetry SDKs enforce a maximum number of unique series a metric can produce (for example, around 2000 combinations in the .NET SDK). Once the cap is reached, measurements for additional attribute sets are folded into a single overflow series marked with the attribute otel.metric.overflow=true, keeping the system responsive while maintaining visibility into how much is being dropped.

Semantic conventions are OpenTelemetry’s standard for naming attributes across signals. They specify consistent keys for concepts such as HTTP requests (http.route, http.method), databases (db.system, db.statement), or messaging operations (messaging.system, messaging.operation). By aligning on conventions, attributes correlate naturally across services, and queries remain coherent.

PromQL Still Works — With More Dimensions

When OTel metrics are exported to Prometheus-compatible backends, the query language you already rely on continues to work exactly as before.

    # The same PromQL queries still apply
    rate(http_request_duration_seconds_bucket[5m])

The difference is that you can now safely include richer attributes without worrying about overwhelming the backend. Attributes that were once too costly to keep, such as user tiers, feature flags, or deployment versions, remain queryable.

    # PromQL with additional dimensions
    rate(http_request_duration_seconds_bucket{
      user_tier="premium",
      feature_flag="new_checkout"
    }[5m])

Your PromQL knowledge becomes more powerful: the syntax stays the same, but the scope of what you can ask expands. Instead of dropping labels to keep queries responsive, you can analyze behavior across customer segments, experimental rollouts, or environment versions with the same fluency you already have.

High Cardinality Without Compromise

OTel makes it possible to collect and shape telemetry effectively, but the backend determines whether that data remains usable at scale.

Last9 is built specifically to handle high-cardinality without the usual trade-offs. As an OTel-native platform, every attribute you emit — service.version, customer.segment, feature.flag — remains queryable without schema translation or silent dropping of labels.

And because Last9 is also fully Prometheus-compatible, the queries and PromQL skills you already rely on continue to work, now with the ability to include richer dimensions without slowing down.

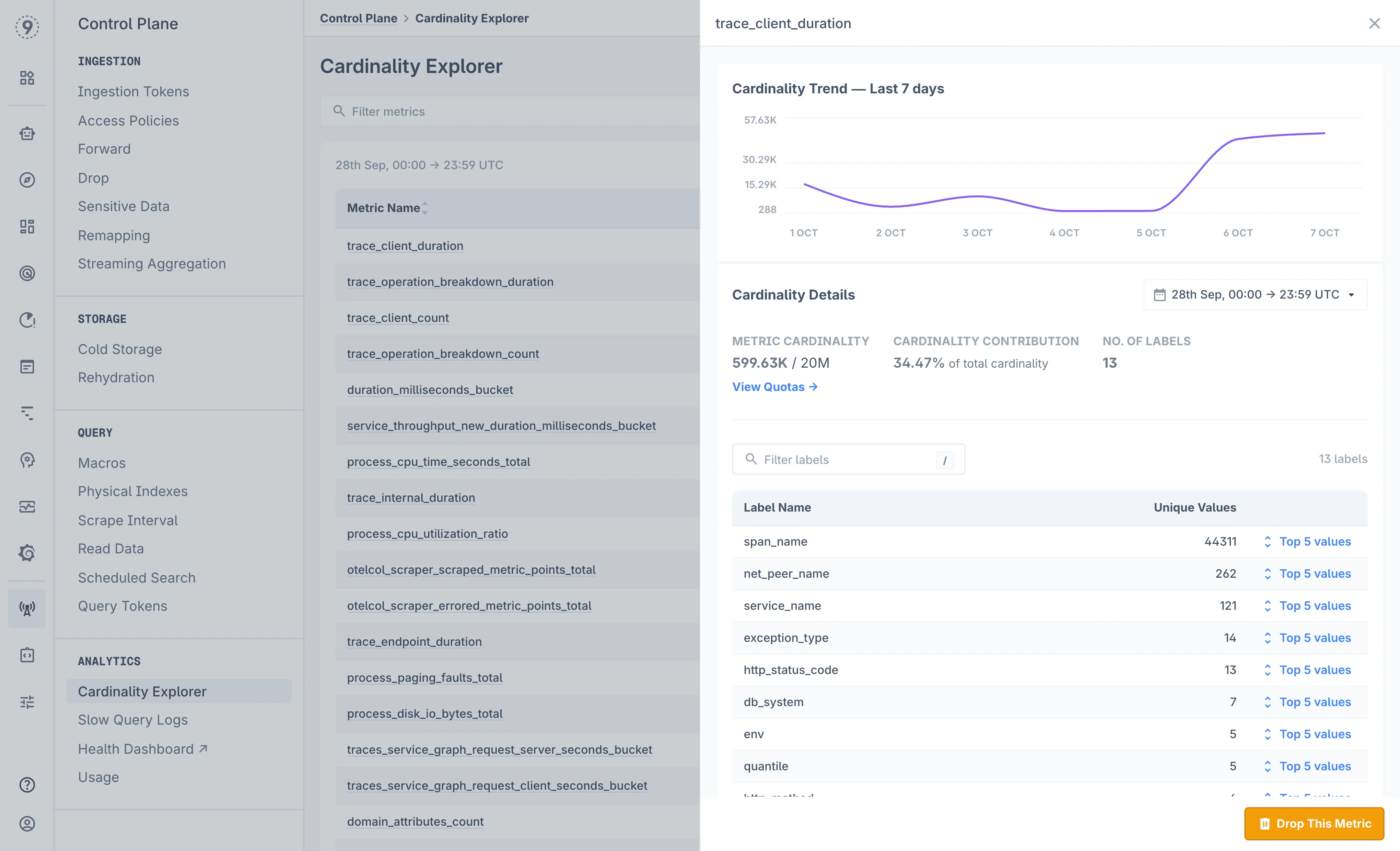

With Cardinality Explorer, you get visibility into how your metrics evolve:

- Track how metric series counts change over time

- Get early warnings when metrics approach system limits

- Drill into individual metrics to identify the top labels and values driving growth

If a new deployment or feature flag suddenly multiplies label combinations, you’ll know exactly which attributes caused the spike. Instead of discovering the issue when dashboards start timing out, you can act early — normalize routes, adjust labels, or apply streaming aggregation rules.

Predictable Costs

Costs scale with the number of events ingested, not infrastructure metrics like host counts or users. This means you can instrument comprehensively without worrying that adding dimensions or services will trigger unexpected billing spikes.

For engineers, this translates to confidence. The attributes you instrument survive ingestion, remain queryable, and won’t impact performance or budgets as systems grow.

Start for free today and explore how high-cardinality telemetry behaves at scale without risk.

In our next part, we’ll dig into Cost Optimization and Emergency Response — covering economic strategies for high cardinality, intelligent sampling, techniques for diagnosing cardinality spikes, and fast mitigation approaches.