High-cardinality data often shows up when you want precise answers from your systems. Additional dimensions help you track per-customer SLAs, see how a feature flag changes latency, or understand how specific endpoints behave across user tiers. Metrics with detailed labels provide this context, and they’re often the quickest way to identify what changed and where.

As traffic grows, the shape of your data naturally expands. A label such as customer_id may behave predictably in staging and then widen in production as more customers and workflows appear. This isn’t unusual — it reflects how real workloads evolve and how your visibility needs shift with them.

In this edition of the HC series, we look at the patterns that introduce more dimensional variation, how that variation usually surfaces in dashboards and backends, and the steady habits that help keep metrics predictable while preserving the depth you depend on.

How Dimension Growth Usually Shows Up

As new services and features roll out, you add more context to your telemetry. A label that helps compare customer tiers, another that captures rollout behavior, or a short-term flag that stays useful longer than expected — each addition expands the set of dimensions your backend tracks.

What matters more than the absolute number of series is how the variation grows over time. Prometheus can handle millions of series with the right resources, but rapid, unexpected expansion will affect any backend.

You’ll usually notice that growth in a few familiar ways:

Dashboards feel heavier: Panels begin taking slightly longer to load. Shortening time ranges keeps queries responsive.

Memory use increases: Backends allocate more memory as they manage a wider set of active series.

Query responses change subtly: Some queries may return partial results when internal limits are reached. These aren’t errors; the backend adapts to the broader data shape.

Storage consumption grows: Data volume increases in line with the expanded metric set, even when retention stays constant.

These signals often reflect natural expansion as telemetry becomes richer and workloads introduce more variation.

Design Patterns That Expand Dimensions Faster Than Expected

Some telemetry patterns introduce more variation than planned. They’re added for valid reasons — clearer attribution, easier debugging, or more granular insights. Each works well on its own, but together they can widen the label space faster than expected.

Cardinality grows multiplicatively rather than linearly. A metric with 10 HTTP methods × 50 paths × 5 status codes × 100 hosts creates 250,000 unique series.
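To make the arithmetic concrete, here is a minimal sketch that computes the upper bound on series for a metric from its per-label value counts. The counts are the ones from the example above; the extra `customer_id` label with 1,000 values is a hypothetical addition used only to show how one more dimension multiplies the total.

```python
from math import prod

# Per-label value counts from the example above.
label_value_counts = {
    "method": 10,
    "path": 50,
    "status_code": 5,
    "host": 100,
}


def max_series(counts):
    """Upper bound on series: the product of per-label value counts."""
    return prod(counts.values())


baseline = max_series(label_value_counts)

# Hypothetical: one more label with 1,000 distinct values multiplies the bound.
with_customer = max_series({**label_value_counts, "customer_id": 1000})

print(baseline)       # 250000
print(with_customer)  # 250000000
```

This is a worst-case bound. Real workloads rarely produce every combination, but the bound shows which label is worth reviewing first.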

Below are the patterns that usually broaden dimensions when traffic, services, or features evolve.

Unbounded Identifiers as Labels

Identifiers such as user_id, session_id, and request_id help link metrics to specific actions or flows. During debugging, that level of detail is reassuring. The only catch is that these values don’t have a defined range, so variation grows with production traffic.

Examples:

- user_id

- session_id

- request_id

- trace_id

- transaction_id

Why growth appears:

- Each unique identifier becomes a separate series

- Variation multiplies when paired with labels such as endpoint or method

A steadier approach is to keep identifiers in traces or logs, where individual request detail fits naturally. Metrics can stay aggregated at the service or route level, which keeps them predictable.

If deeper detail is needed temporarily, a short-retention metric tier works well:

    # Core metrics: long retention
    http_requests_total{service, endpoint, method, status_code}

    # High-detail tier: short retention
    http_requests_debug_total{service, endpoint, method, user_id, session_id}

Dynamic Path Segments as Labels

HTTP paths often include values that change per request: user IDs, order numbers, or UUIDs. Using the full path as a label captures that detail but also creates a series for every unique path. OpenTelemetry's semantic conventions offer templated attributes for this, http.route on the server side and url.template on the client side, which keep the label values stable.

Examples of dynamic segments:

- /user/12345/orders

- /orders/67890/details

- /customers/uuid-abcd-1234/profile

Where variation shows up:

- A single logical route becomes thousands of unique values

- Raw paths add more detail than most metric queries need

Normalizing paths keeps route-level visibility while keeping the label set predictable:

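    /user/12345/orders → /user/{id}/orders
    /orders/67890/details → /orders/{id}/details

Example in code:

    import re

    # Collapse the numeric user ID into a placeholder so all users share one route value.
    # request, metric, and duration come from the surrounding handler.
    normalized = re.sub(r'/user/\d+/', '/user/{id}/', request.path)
    # http.route is the semantic-convention attribute for a route template
    metric.record(duration, attributes={'http.route': normalized})

Ephemeral Infrastructure Labels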

In Kubernetes or cloud setups, identifiers such as pod names, container IDs, and instance IDs change frequently. They’re useful for debugging a specific workload, but when used as metric labels, they widen the series count with every rollout or autoscaling event.

Common ephemeral values:

- pod_name

- container_id

- instance_id

- node_name

Why variation increases:

- New pods create new label values

- Autoscaling adds more

- Old series remain in storage until they age out

Stable labels such as deployment, namespace, service, and cluster usually provide the right level of visibility.

Prometheus relabeling example:

    metric_relabel_configs:
      # labeldrop matches label names against regex; source_labels is not used
      - action: labeldrop
        regex: pod_name|container_id

OpenTelemetry Collector example:

    processors:
      resource:
        attributes:
          # Delete the ephemeral attributes. Stable ones such as k8s.deployment.name,
          # k8s.namespace.name, and service.name are left untouched.
          - key: k8s.pod.name
            action: delete
          - key: container.id
            action: delete

Debug Labels That Stay Longer Than Intended

Short-term labels appear during incidents, experiments, or feature rollouts — such as debug_mode, trace_enabled, or experiment_id. They help isolate behavior quickly, but it’s common for them to stay in the instrumentation longer than planned.

Examples:

- debug_mode=true

- experiment_id=A/B/X

- trace_enabled=on

Why variation expands:

- Experiments rotate and create new values

- Short-term labels accumulate across releases

A simple approach is to enable these labels only when needed:

    # Attach the experiment label only while debug metrics are switched on
    if config.ENABLE_DEBUG_METRICS:
        attributes["experiment_id"] = current_experiment

Customer or Tenant-Specific Metrics

In multi-tenant systems, per-customer visibility is valuable for product teams, support, and engineering. Customer identifiers, however, grow naturally with adoption and feature usage.

Examples:

- customer_id

- tenant_id

- account_id

Why variation increases:

- Each customer introduces a new set of series

- New features add new dimensions across the customer base

A more predictable pattern is to track tenants at an aggregated level and use short retention for per-customer metrics where needed:

    # Service-level metrics: long retention
    service_requests_total{service, status_code}

    # Tier-based visibility: moderate retention
    service_requests_by_tier_total{service, customer_tier, status_code}

    # Per-customer metrics: short retention
    customer_requests_total{customer_id, service, status_code}

Numeric Values as Labels

Numeric fields such as timestamps, exact durations, or byte counts vary per request. When used directly as labels, each unique number forms a new series.

Where variation comes from:

- Timestamps are always unique

- Exact latency values differ per request

- Payload sizes vary constantly

Histograms provide the same distribution-level insight without unbounded label values:

    # Instead of:
    http_request_duration_seconds{latency="0.234"} 1

    # Use:
    http_request_duration_seconds_bucket{le="0.5"} 450
    http_request_duration_seconds_bucket{le="1.0"} 890
    http_request_duration_seconds_sum 523.45
    http_request_duration_seconds_count 1000

Design Patterns for Sustainable Cardinality

A few steady patterns make your metrics easier to work with and help keep dimensional growth predictable. These aren’t about restricting detail — they help you place detail where it fits best.

Bounded Label Sets

Labels work best when the range of values is clear. Prometheus generally encourages keeping per-label variation small, with only a few metrics stretching into larger sets.

Stable examples:

- http.method (GET, POST, PUT, DELETE)

- grouped status_code (2xx, 4xx, 5xx)

- region

- service_tier

Unbounded examples:

- user_id

- unnormalized request paths

- ip_address

A simple way to keep things steady is to document expected label values and use enums or constants instead of arbitrary strings.

A quick review during instrumentation or code review — “How many values will this label produce?” — surfaces patterns early.
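One way to enforce documented label values is to route them through an enum and a small grouping helper. The sketch below is illustrative only: the `ServiceTier` members, the `status_class` helper, and `build_labels` are hypothetical names, not part of any library.

```python
from enum import Enum


class ServiceTier(str, Enum):
    """Hypothetical, documented set of allowed service_tier values."""
    FREE = "free"
    PRO = "pro"
    ENTERPRISE = "enterprise"


def status_class(status_code: int) -> str:
    """Group an HTTP status code into its class, e.g. 404 -> '4xx'."""
    if not 100 <= status_code <= 599:
        return "unknown"
    return f"{status_code // 100}xx"


def build_labels(tier: str, status_code: int) -> dict:
    # ServiceTier(tier) raises ValueError for any value outside the enum,
    # so an undocumented tier fails loudly instead of creating a new series.
    return {
        "service_tier": ServiceTier(tier).value,
        "status_code": status_class(status_code),
    }


print(build_labels("pro", 404))
```

With this shape, the maximum number of values per label is visible in the code itself, which makes the "how many values will this label produce?" question easy to answer in review.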

Hierarchical Aggregation

Different use cases need different levels of detail. Instead of choosing one level, you can emit multiple tiers.

    # High detail: short retention
    http_requests_total{service, endpoint, method, status_code, pod}

    # Service-level: 30 days
    http_requests_total{service, endpoint, method, status_code}

    # Cluster-level: long retention
    http_requests_total{service, status_code}

This keeps detailed debugging data available without carrying that level everywhere.

Normalize at the Source

Many high-variation values shrink naturally with small transformations.

Examples:

- Normalize dynamic paths

- Reduce user-agent strings into browser families

- Bucket durations into “fast”, “medium”, “slow”

    # Path normalization
    normalized = re.sub(r'/user/\d+/', '/user/{id}/', request.path)

Applying this during instrumentation keeps the label space predictable and reduces the need for downstream relabeling.
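The three normalizations listed in this section can be sketched as small pure functions. Everything here is an assumption made for illustration: the function names, the substring-based browser detection (a rough heuristic, not a real user-agent parser), and the 0.1 s and 1.0 s duration thresholds.

```python
import re

# A path segment that is all digits or a canonical 8-4-4-4-12 UUID.
_ID_SEGMENT = re.compile(
    r"/(?:\d+|[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12})(?=/|$)"
)


def normalize_path(path: str) -> str:
    """Replace numeric and UUID path segments with a {id} placeholder."""
    return _ID_SEGMENT.sub("/{id}", path)


def browser_family(user_agent: str) -> str:
    """Reduce a user-agent string to a coarse family (rough heuristic)."""
    # Order matters: Edge and Chrome user agents also contain "Safari/".
    for token, family in (
        ("Edg/", "edge"),
        ("Firefox/", "firefox"),
        ("Chrome/", "chrome"),
        ("Safari/", "safari"),
    ):
        if token in user_agent:
            return family
    return "other"


def duration_bucket(seconds: float) -> str:
    """Bucket a duration using hypothetical thresholds of 0.1 s and 1.0 s."""
    if seconds < 0.1:
        return "fast"
    if seconds < 1.0:
        return "medium"
    return "slow"


print(normalize_path("/user/12345/orders"))  # /user/{id}/orders
print(duration_bucket(0.234))                # medium
```

Each function maps an unbounded input to a small, fixed set of outputs, which is what keeps the resulting label bounded.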

Use Histograms for Distributions

Numeric values are almost always high-variation. Histograms let you capture distributions without generating a separate series for every unique value.

    http_request_duration_seconds_bucket{le="0.5"} 450
    http_request_duration_seconds_bucket{le="1.0"} 890

You still get distribution shapes and percentile visibility with a stable number of series.
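To see why the series count stays fixed, here is a simplified model of a cumulative histogram. It is a sketch for illustration, not the Prometheus client library: the `Histogram` class and the bucket bounds are assumptions.

```python
from bisect import bisect_left


class Histogram:
    """Simplified cumulative histogram with 'less than or equal' buckets."""

    def __init__(self, bounds):
        self.bounds = sorted(bounds)
        # One counter per bound, plus a final one for the +Inf bucket.
        self.counts = [0] * (len(self.bounds) + 1)
        self.total = 0.0
        self.count = 0

    def observe(self, value):
        # Index of the first bound >= value, so a value equal to a bound
        # lands in that bound's bucket.
        self.counts[bisect_left(self.bounds, value)] += 1
        self.total += value
        self.count += 1

    def cumulative(self):
        """Return {upper_bound: cumulative_count}, ending with +Inf."""
        result, running = {}, 0
        for bound, n in zip(self.bounds + [float("inf")], self.counts):
            running += n
            result[bound] = running
        return result


h = Histogram([0.1, 0.5, 1.0])
for latency in (0.05, 0.234, 0.5, 0.7, 2.0):
    h.observe(latency)

print(h.cumulative())
```

However many distinct latency values arrive, this histogram exposes four bucket series plus a sum and a count. Only the counter values change.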

Tiered Metrics for Different Use Cases

Instead of loading every label into a single metric, separate them based on who uses the data and for what.

    # Operational
    http_requests_total{service, endpoint, method, status_code}

    # Customer trends
    http_requests_by_customer_tier_total{service, customer_tier, status_code}

    # Debugging: short retention
    http_requests_debug_total{service, endpoint, method, status_code, pod}

Each metric stays focused, and retention can match the purpose.

Monitoring Your Monitoring Stack

Your metrics backend gives you clear signals as the data shape changes. Tracking a few steady indicators helps you understand how your dimensions evolve and whether new workloads introduced additional variation.

Active Series Count

A straightforward starting point is the active series count. It shows how many unique series the backend is holding at a given moment. The absolute number matters less than how it changes.

A steady upward trend or a sharp jump often means new labels were added or existing labels started varying more than before.

    prometheus_tsdb_head_series

Series Churn

Series churn reflects how frequently new series appear and old ones fade out. High churn usually comes from short-lived identifiers, rollout-driven labels, or autoscaling events.

    rate(prometheus_tsdb_head_series_created_total[5m])

Tracking churn gives you a sense of how dynamic your dimensions are and whether recent changes introduced more variation than usual.

Query Performance

Query latency offers another signal. When P95 or P99 latency shifts upward, it usually means queries are scanning a wider set of series or looking across longer time windows. This doesn’t point to a single metric — it reflects how the underlying data has broadened.

Resource Usage

Memory and CPU usage during compaction or heavy query periods often increase as dimensional range expands. This isn’t unusual; it shows the backend working through a richer dataset. Tracking these helps you understand how backends respond as workloads change.

A Simple Dashboard Helps

You can bring these signals together in a compact dashboard. The most useful panels tend to be:

- total active series over time

- metrics contributing the most series

- growth rates (hourly, daily, weekly)

- labels with the widest value range

- P95/P99 query latency

- memory and CPU trends

Reviewing this periodically — or when instrumentation changes — provides a clear picture of how your telemetry is evolving.
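Two of those panels, "metrics contributing the most series" and "labels with the widest value range", reduce to simple counting. The sketch below assumes you already have a list of series as label dictionaries; the `summarize` helper and the sample data are hypothetical. Prometheus exposes comparable statistics through its TSDB status API, so in practice you may not need to compute them yourself.

```python
from collections import Counter, defaultdict


def summarize(series):
    """Count series per metric name and distinct values per label."""
    per_metric = Counter(s["__name__"] for s in series)
    values = defaultdict(set)
    for s in series:
        for label, value in s.items():
            if label != "__name__":
                values[label].add(value)
    per_label = {label: len(vals) for label, vals in values.items()}
    return per_metric, per_label


# Hypothetical sample of active series.
sample = [
    {"__name__": "http_requests_total", "endpoint": "/a", "pod": "p1"},
    {"__name__": "http_requests_total", "endpoint": "/a", "pod": "p2"},
    {"__name__": "http_requests_total", "endpoint": "/b", "pod": "p3"},
    {"__name__": "queue_depth", "pod": "p1"},
]

per_metric, per_label = summarize(sample)
print(per_metric.most_common(1))
print(sorted(per_label.items(), key=lambda kv: kv[1], reverse=True))
```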

Alerts Based on Change, Not Absolute Counts

Alerts work best when they focus on unexpected shifts rather than raw numbers. A small rise can mean more than a large but steady baseline.

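    - alert: CardinalityGrowthSpike
      # head_series is a gauge, so use delta() rather than increase()
      expr: delta(prometheus_tsdb_head_series[1h]) > 100000

    - alert: QueryLatencyIncreasing
      # Average seconds per query over 5 minutes; tune the threshold to your baseline
      expr: rate(prometheus_engine_query_duration_seconds_sum[5m]) / rate(prometheus_engine_query_duration_seconds_count[5m]) > 10

These alerts help highlight when the shape of the data changed and deserves a closer look.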
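The idea that a small rise can matter more than a large steady baseline is easier to see with relative growth. This is a minimal sketch; the helper names and the 25% threshold are assumptions chosen for illustration.

```python
def relative_growth(previous: int, current: int) -> float:
    """Fractional change in series count between two points in time."""
    if previous <= 0:
        return float("inf") if current > 0 else 0.0
    return (current - previous) / previous


def should_alert(previous: int, current: int, max_growth: float = 0.25) -> bool:
    """Alert when the series count grew by more than max_growth (hypothetical 25%)."""
    return relative_growth(previous, current) > max_growth


# Large but steady backend: 2,000,000 -> 2,010,000 series (+0.5%).
print(should_alert(2_000_000, 2_010_000))  # False

# Small backend with a sudden jump: 50,000 -> 80,000 series (+60%).
print(should_alert(50_000, 80_000))        # True
```

An absolute threshold of 100,000 new series would miss the second case entirely, which is why a relative check is a useful companion to it.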

Recovery When Your Data Shape Changes

Even with well-structured metrics, the shape of your data will shift occasionally. New deployments, additional labels, or changes in traffic can introduce more variation than before. Recovery is about understanding that shift and keeping the system steady while you review what changed.

When Memory Usage Rises

If memory usage climbs above its usual baseline, a quick look at the active series count helps you see how much new variation was introduced.

From there, checking which metrics contribute most of the series usually points to where the new range came from.

A few steps that help restore balance:

- Drop short-lived or highly variable labels at the collector

- Review recent instrumentation or config updates

- Add temporary resource headroom if needed

    metric_relabel_configs:
      # labeldrop matches label names against regex; source_labels is not used
      - action: labeldrop
        regex: pod_name|container_id

When Dashboard Queries Slow Down

Backend logs usually highlight which query took longer than expected. While investigating, you can keep dashboards responsive by:

- Reducing the look-back window

- Adding a narrower filter

- Using recording rules to pre-compute repeated aggregations

Recording rules help offload work that dashboards repeatedly run:

    - record: http_requests:rate5m
      expr: rate(http_requests_total[5m])

This reduces query load without changing the underlying metrics.

When Storage Usage Increases

A rise in storage often aligns with broader label ranges. Identifying which metrics expanded helps you adjust retention where it matters.

Shorter retention for high-detail tiers gives you space to refine labels, add normalization, or reorganize how metrics are shaped. Once the data stabilizes, you can restore your usual retention policies.

Streaming Aggregation Before Storage

Some backends support applying aggregation during ingestion. This lets you trim highly variable labels or merge dimensions before the data reaches long-term storage.

It keeps queries fast and storage predictable, especially for labels tied to short-lived infrastructure or request-level metadata.

Example outcomes:

- ephemeral labels removed at ingest

- series counts stay steady

- long-term storage holds the aggregated view

- detailed data can still be emitted in short-retention streams

This approach reduces storage pressure without requiring changes to your application code.
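Conceptually, ingest-time aggregation drops the chosen labels and merges the samples that become identical. The sketch below shows that idea only: the `aggregate` function and the sample data are hypothetical, and summing raw values ignores details such as counter resets that a real backend has to handle.

```python
from collections import defaultdict


def aggregate(samples, drop_labels):
    """Drop the given labels and sum values of series that collapse together."""
    totals = defaultdict(float)
    for labels, value in samples:
        key = tuple(sorted(
            (name, val) for name, val in labels.items() if name not in drop_labels
        ))
        totals[key] += value
    return dict(totals)


# Hypothetical samples: the same service observed from two pods.
samples = [
    ({"service": "api", "status_code": "200", "pod": "api-7f9c"}, 120.0),
    ({"service": "api", "status_code": "200", "pod": "api-b21d"}, 80.0),
    ({"service": "api", "status_code": "500", "pod": "api-7f9c"}, 3.0),
]

aggregated = aggregate(samples, drop_labels={"pod"})
for key, value in aggregated.items():
    print(dict(key), value)
```

Three input series become two stored series, and the count no longer changes when pods are replaced during a rollout.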

Keeping the System Steady While Data Evolves

All these steps help bring the backend to a balanced state. The goal isn’t to remove detail — it’s to keep dashboards responsive and storage predictable while you evaluate how workloads are shaping the data.

Get the Context You Need Without the Cardinality Tax with Last9

The approaches we’ve discussed — relabeling configs, recording rules, manual aggregation — all work. But they share a common pattern: you’re constantly reacting to cardinality growth. You spot a spike, write a fix, deploy it, then repeat when the next pattern emerges. You end up spending time managing your monitoring instead of using it.

Last9 takes a different approach. Instead of making you choose between rich context and system stability, our platform is built to handle high-cardinality data as a first-class capability.

You Don’t Trade Off Detail for Stability

A few backends force a tradeoff: either limit your labels to keep costs down, or accept that detailed instrumentation will balloon your bill. With Last9, you instrument your code the way you want.

The platform is designed to ingest and query high-cardinality data efficiently, so adding customer_id or per-endpoint labels doesn’t require you to immediately start dropping dimensions or writing complex aggregation rules.

Defaults allow up to one million new series per hour and around 20 million per day, and these can be tuned based on your workload.

Streaming Aggregation Before Storage

Traditional setups apply aggregation during query time. Last9 lets you define aggregation rules that run during ingestion, using familiar PromQL-like expressions.

This means:

- highly variable labels can be trimmed at ingest

- ephemeral details stay available in short-retention streams

- long-term storage holds the aggregated view

- queries stay fast even when dimensions grow

You can adjust these rules in the UI without touching application code or waiting for a deploy.

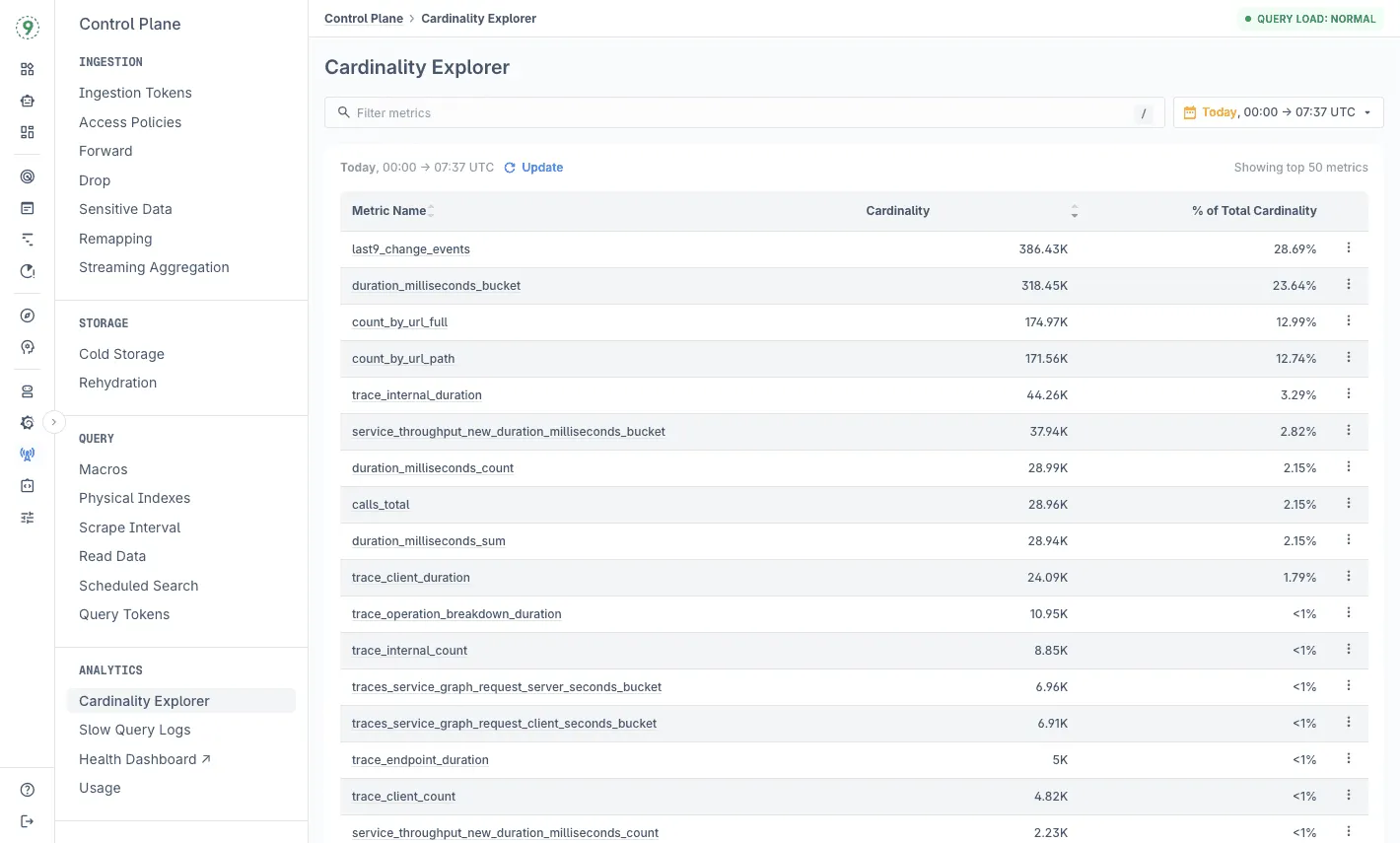

Cardinality Insights Are Built In

In Prometheus, tracking cardinality means running queries against the TSDB, building custom dashboards, and correlating spikes with recent deployments yourself. Understanding how label ranges evolve shouldn’t require that much manual work.

Last9’s Cardinality Explorer gives a real-time view of:

- which metrics introduced new series

- how label values are changing

- which deployments or services contributed to the shift

This helps you notice changes early and understand the data shape as it evolves.

Costs Scale With Your Data, Not Your Cardinality

Datadog ties pricing to unique time series, New Relic focuses on data ingest volume, and Prometheus consumes more memory as dimensional range grows. Each model naturally shapes how teams design their metrics.

Last9’s event-based approach keeps costs predictable as datasets grow, so you can instrument with the detail your services need.

Plan Ahead Instead of Responding Later

With traditional setups, cardinality management is reactive. A dashboard slows down, you investigate, you find the label that exploded, you write a relabeling rule, you deploy it. Repeat monthly. Last9 gives you the visibility and controls to handle cardinality proactively. You see trends before they become incidents. You adjust aggregation rules without code changes. You configure retention policies that match how long different metrics actually need to live.

Our goal is to remove the constant tradeoff between observability depth and system stability. You get the context you need for debugging, the speed you need for dashboards, and the predictability you need for budgets.

Try Last9 today, or book some time with our team to walk through how to instrument with the depth you need!

Next in the HC series: gaming and entertainment. We’ll walk through how studios use high-cardinality data to understand individual players, optimize matchmaking in real time, predict churn, and keep viewer experiences smooth at global scale.