You’re three weeks into a new deployment when your Slack lights up at 2 AM:

#incidents: prometheus-0 OOMKilled — 4 restarts in 10 minutes.

You pause.

First thought: What changed?

Second: What’s this going to cost us?

High cardinality gives observability its depth — every label, trace, and metric tells a more complete story about system behavior. But when data volume spikes, so do storage and compute needs. And that’s a reminder that data has a cost, and managing it well is part of running reliable systems.

This part of the High Cardinality series talks about how to plan for telemetry budgets, handle unexpected spikes, and put cost controls in place so you can keep every insight that’s important without slowing down or overspending.

The Economic Reality of High Cardinality

With high cardinality, each label introduces another perspective on performance across services, environments, and users. As systems scale, your telemetry grows too. When series counts rise from 100,000 to a few million, your infrastructure simply works harder to handle and query that additional context.

The Cost Multiplication Effect

Most observability platforms charge based on metrics ingested or time series stored. Adding a label with 50 unique values can multiply the number of series by up to 50, and with it the resources needed to store them.

For example, you might track payment latency like this:

    payment_latency_seconds{service="checkout", region="us-west-2", payment_method="card"}

This produces a steady number of time series.

Adding customer_id expands that dramatically. With 10,000 active customers, a few hundred series can grow into millions.
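To make the multiplication concrete, here is a minimal sketch that treats the worst-case series count as the product of distinct values per label. The per-label counts are assumed for illustration; only the 10,000 customers figure comes from the text above.

```python
from math import prod


def max_series(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on series for one metric: product of distinct values per label."""
    return prod(label_cardinalities.values())


# Assumed distinct-value counts for each label (illustrative only).
base = {"service": 5, "region": 6, "payment_method": 10}
with_customer = {**base, "customer_id": 10_000}

print(max_series(base))           # 300
print(max_series(with_customer))  # 3000000
```

Real systems rarely hit the full product, because not every combination occurs, but the upper bound is what you need to budget for.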

Prometheus memory may rise from roughly 2.5 GB at 100k series to 25 GB+ at 1M+ series. In practice, each time series typically requires 4-8 KB of memory, with actual RSS memory usage often doubling due to Go’s garbage collection overhead.
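As a rough back-of-the-envelope check, you can apply the per-series figure and the doubling factor quoted above. This is a sketch under those assumptions: the function name and the decimal KB-to-GB conversion are illustrative, and the result covers per-series overhead only, so treat it as a lower bound rather than a prediction of total Prometheus memory.

```python
def estimate_rss_gb(series: int, kb_per_series: float, gc_factor: float = 2.0) -> float:
    """Series count x per-series KB x GC overhead factor, in decimal GB."""
    return series * kb_per_series * gc_factor / 1_000_000


for series in (100_000, 1_000_000):
    low = estimate_rss_gb(series, 4)   # 4 KB per series
    high = estimate_rss_gb(series, 8)  # 8 KB per series
    print(f"{series:,} series: {low:.1f} to {high:.1f} GB")
```

Running the same estimate against your own series count gives a quick sanity check before you size memory limits.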

Here’s what that scaling looks like across systems:

- Prometheus: Each unique time series increases memory and query load

- Datadog: Every unique combination of metric name and tag values counts as a custom metric; larger tag spaces scale cost proportionally

- InfluxDB: High cardinality expands the series index, which raises memory use and query latency

Balance Cost and Visibility

You can tune what you retain without losing valuable visibility:

- Drop unused labels when they no longer add analytical value.

- Sample selectively to control ingestion volume while keeping trends clear.

- Pre-aggregate older data to save storage on long-term metrics.

- Set quotas or budgets to track usage and prevent unplanned spikes.

The goal is to shape data growth in a way your systems and budgets can comfortably support.

Build a Cardinality Budget System

Cardinality, like compute or storage, is a resource. It deserves the same kind of ownership, budgeting, and review as any other production capacity.

Establish Team and Service Quotas

Different services generate different volumes of telemetry. A checkout API usually benefits from richer metrics than a background data sync, and your budgets should reflect that.

A simple way to assign allocations is by business impact:

- Critical services (payments, authentication, checkout): Allow higher cardinality. These systems justify richer telemetry because every label can shorten debugging time and protect revenue.

- Standard services (search, recommendations, notifications): Keep cardinality moderate enough for performance insights without inflating storage.

- Background services (batch jobs, pipelines, internal tools): Apply lower limits since issues here can often wait until business hours.

To start, find your current baseline. For example:

    SELECT count(distinct(concat(label1, label2, label3)))
    FROM metrics
    WHERE service = 'checkout';

This shows how many unique time series your service generates today. Use that as a reference point and adjust gradually based on actual debugging needs rather than assumed completeness.
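If your samples are available outside a SQL store, the same baseline can be sketched in a few lines of Python. The sample data and function name below are hypothetical. Using a tuple of label values as the key also avoids a pitfall of plain concatenation, where "ab" + "c" and "a" + "bc" would count as the same series.

```python
def count_series(samples: list[dict[str, str]], label_names: list[str]) -> int:
    """Count distinct label-value combinations across the given samples."""
    return len({tuple(s.get(name, "") for name in label_names) for s in samples})


# Hypothetical samples for the checkout service.
samples = [
    {"service": "checkout", "region": "us-west-2", "payment_method": "card"},
    {"service": "checkout", "region": "us-west-2", "payment_method": "card"},
    {"service": "checkout", "region": "us-west-2", "payment_method": "wallet"},
    {"service": "checkout", "region": "eu-west-1", "payment_method": "card"},
]

print(count_series(samples, ["service", "region", "payment_method"]))  # 3
```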

Automate Budget Enforcement

Manual oversight doesn’t scale well. By the time you notice a spike, the data’s already written — and billed. Automated checks help you stay within limits.

You can enforce budgets at multiple layers:

At the OpenTelemetry Collector

Set cardinality limits based on your observability backend’s capabilities. Monitor metrics like memory usage and query performance to determine appropriate thresholds for your environment.

Configure the OpenTelemetry Collector to manage cardinality:

    processors:
      # Remove high-cardinality attributes
      attributes:
        actions:
          - key: request_id
            action: delete
          - key: session_id
            action: delete
          - key: trace_id
            action: delete

      # Group by specific attributes to control dimensions
      groupbyattrs:
        keys:
          - cloud.region
          - service.name
          - http.route

      # Transform processor for conditional logic
      transform:
        metric_statements:
          - context: datapoint
            statements:
              # Apply custom cardinality rules
              - set(attributes["environment"], "overflow") where attributes["instance_count"] > 100

      # Filter processor to drop high-cardinality metrics entirely
      filter:
        metrics:
          exclude:
            match_type: regexp
            metric_names:
              - ".*.request_id..*"
              - ".*.session_id..*"

    # Complete pipeline example
    service:
      pipelines:
        metrics:
          receivers: [otlp]
          processors: [attributes, groupbyattrs, transform, filter, batch]
          exporters: [prometheus, otlp]

At the Observability Backend

If using Prometheus as your backend, apply relabeling rules to drop high-cardinality labels before storage:

    # Prometheus scrape configuration
    scrape_configs:
      - job_name: "my-service"
        metric_relabel_configs:
          # Keep only specific metrics
          - source_labels: [__name__]
            regex: "http_requests_total"
            action: keep

          # Drop high-cardinality labels
          - action: labeldrop
            regex: "request_id|session_id|trace_id"

          # Drop every series whose status_code is 2xx or 3xx
          - source_labels: [status_code]
            regex: "2..|3.."
            action: drop

Design a Dimensional Hierarchy for Smart Aggregation

Structure your attributes in a clear hierarchy. This lets you query broadly and zoom in when necessary:

    cloud.region > cloud.availability_zone > service.name > deployment.version > http.route

This hierarchy aligns with OpenTelemetry semantic conventions for resource attributes and follows best practices for organizing telemetry dimensions.

Here are a few benefits of this approach:

- Query region-level trends: “How’s us-west performing overall?”

- Drill down into specifics: “Which AZ or deployment is slow?”

- Aggregate intelligently: Roll up data by region for long-term storage while keeping detailed dimensions for recent time windows

Here’s how you can implement it in OTel:

    processors:
      # Define resource attributes following the hierarchy
      resource:
        attributes:
          - key: cloud.region
            value: us-west-2
            action: upsert
          - key: cloud.availability_zone
            value: us-west-2a
            action: upsert
          - key: service.name
            value: api-gateway
            action: upsert
          - key: deployment.version
            value: v2.1.0
            action: upsert

      # Use groupbyattrs to aggregate at different hierarchy levels
      groupbyattrs:
        keys:
          - cloud.region              # Coarsest granularity
          - cloud.availability_zone
          - service.name
          - deployment.version        # Finer granularity
          # Note: http.route excluded here to reduce cardinality

      # Aggregate metrics for long-term storage
      # (action: update rewrites the matched metric in place)
      metricstransform:
        transforms:
          - include: http_requests_total
            match_type: regexp
            action: update
            operations:
              # Aggregate by region only for long-term trends
              - action: aggregate_labels
                label_set: [cloud.region]
                aggregation_type: sum

You rarely need every dimension in every query. Most investigations start broad and narrow as needed, so a clear hierarchy keeps your data both efficient and insightful.

Monitor Your Cardinality

Track your cardinality metrics to validate your budget enforcement:

    # OpenTelemetry Collector configuration for cardinality monitoring
    processors:
      # Derive metrics from spans, limited to a small set of dimensions
      spanmetrics:
        metrics_exporter: prometheus
        dimensions:
          - name: cloud.region
          - name: service.name

    exporters:
      prometheus:
        endpoint: "0.0.0.0:8889"
        # Expose metrics in the OpenMetrics format
        enable_open_metrics: true

For Prometheus backends, track:

- prometheus_tsdb_symbol_table_size_bytes: Memory used by label names/values

- prometheus_tsdb_head_series: Current number of active series

- prometheus_tsdb_head_chunks: Number of chunks in memory

For OpenTelemetry Collector, monitor:

- otelcol_processor_refused_metric_points: Dropped due to cardinality limits

- otelcol_exporter_sent_metric_points: Successfully exported metrics

Budgets and quotas give you control over what enters your system. But sometimes the volume of telemetry itself becomes the challenge - not just how many labels you track, but how much raw data you’re collecting in the first place. When every request generates a trace, and every trace carries dozens of spans, storage and query costs can climb quickly even with careful label management.

Sampling helps here. Instead of capturing every trace, you can focus on the crucial ones - keeping enough data to debug issues and understand system behavior without overwhelming your storage or query performance.

Choose What to Keep: Sampling Approaches

More traffic means more traces. Keeping every single one isn’t always practical — storing and querying all that data can quickly outpace what your infrastructure or budget can handle.

Sampling exists to make this manageable. It helps you keep signals that matter most while still preserving enough context to understand what’s happening across services.

The challenge is balance. Different sampling approaches trade completeness for control in different ways.

Random Sampling

Random sampling is often where teams start. You define a percentage — say 10% of traces — and collect those consistently. It’s predictable and lightweight, making it ideal for spotting broad patterns.

The limitation is subtle: random sampling represents what’s normal, not what’s interesting. The rare traces — failed calls, latency spikes, or one-off timeouts — are least likely to appear in that random subset. So, while you get a good view of system health, you may miss the few traces that explain why something broke.

And with high-cardinality data, that trade-off deepens — random selection can lose the dimensions that connect those patterns to real user or regional context.
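One common way to implement a fixed-percentage sample is to hash the trace ID and keep traces whose hash falls below a threshold, so every service makes the same decision for the same trace. The sketch below assumes string trace IDs and uses SHA-256 purely for illustration; it is not the algorithm of any particular SDK.

```python
import hashlib


def keep_trace(trace_id: str, rate: float) -> bool:
    """Keep a trace when its hash falls in the lowest `rate` fraction of the hash space."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return bucket < int(rate * 2**64)


# Hypothetical trace IDs; roughly 10% should be kept.
trace_ids = [f"trace-{i}" for i in range(10_000)]
kept = sum(keep_trace(t, 0.10) for t in trace_ids)
print(f"kept {kept} of {len(trace_ids)}")
```

Because the decision depends only on the trace ID, it is repeatable, but it is also blind to whether the trace contained an error, which is exactly the limitation described above.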

Tail-Based Sampling

Tail-based sampling flips the process. Instead of choosing traces upfront, it waits until each trace finishes, reviews the full context, and then decides what’s worth keeping.

You might keep:

- Traces with 5xx or error responses.

- Slow traces that exceed your latency threshold.

- Requests tied to key customers or critical regions.

- A small sample of fast, healthy traces for baseline comparison.

This approach captures the events that matter most while trimming routine noise. It does need a bit more infrastructure to buffer traces until completion, but it saves time when you’re debugging — because the traces you need are always there.
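The keep rules listed above can be sketched as a single decision function that runs once a trace has finished. Everything here is an assumption for illustration: the `Trace` fields, the 1000 ms threshold, the `enterprise` tier, and the 1% baseline rate. A real collector would also need the buffering step, which this sketch leaves out.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Trace:
    trace_id: str
    status_code: int
    duration_ms: float
    customer_tier: str = "standard"  # hypothetical attribute


def keep_after_completion(
    trace: Trace,
    latency_threshold_ms: float = 1000.0,
    key_tiers: frozenset = frozenset({"enterprise"}),
    baseline_rate: float = 0.01,
) -> bool:
    """Decide whether to keep a finished trace, checking the most important rules first."""
    if trace.status_code >= 500:
        return True  # server errors
    if trace.duration_ms > latency_threshold_ms:
        return True  # slow requests
    if trace.customer_tier in key_tiers:
        return True  # key customers
    # Small deterministic sample of healthy traffic for baseline comparison.
    digest = hashlib.sha256(trace.trace_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") < int(baseline_rate * 2**64)


print(keep_after_completion(Trace("t1", 503, 120.0)))  # True
print(keep_after_completion(Trace("t2", 200, 2500.0)))  # True
```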

Adaptive Sampling

Adaptive sampling brings flexibility. It adjusts how much data you keep based on what’s happening right now. During normal operation, it might capture 5% of traces. When latency spikes or errors surge, it can automatically increase sampling for the affected services.

You don’t have to guess when to collect more data — the system responds dynamically. That means fewer surprises during incidents and less noise when things are stable.
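In its simplest form, adaptive sampling is a function from current health signals to a sampling rate. The sketch below uses the 5% baseline mentioned above; the boosted rate and both thresholds are assumed values, and production systems usually adjust more gradually than this two-step version.

```python
def adaptive_rate(
    error_rate: float,
    p99_latency_ms: float,
    base_rate: float = 0.05,
    boosted_rate: float = 0.5,
    error_threshold: float = 0.02,
    latency_threshold_ms: float = 800.0,
) -> float:
    """Return the boosted sampling rate while errors or latency exceed their thresholds."""
    if error_rate > error_threshold or p99_latency_ms > latency_threshold_ms:
        return boosted_rate
    return base_rate


print(adaptive_rate(error_rate=0.001, p99_latency_ms=200.0))  # 0.05
print(adaptive_rate(error_rate=0.10, p99_latency_ms=200.0))   # 0.5
```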

Manage High-Cardinality Labels

Fields like region, service_name, and http_method help you see meaningful trends. Others — like user_id, session_id, or request_id — create far more unique combinations, which increases storage and query costs.

Smart label management finds the middle ground.

- Keep the dimensions that explain system behavior.

- Filter or group identifiers that generate unnecessary combinations.

Normalize variable paths to reduce redundant series:

/user/123/orders/456 → /user/{id}/orders/{id}

You end up with data that’s rich enough for diagnosis but light enough to stay efficient.
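Path normalization like the example above can be sketched with a single regular expression. This version only replaces purely numeric path segments; UUIDs or slugs would need additional patterns, and the function name is illustrative.

```python
import re

# A slash followed by digits only, ending at the next slash or the end of the path.
_NUMERIC_SEGMENT = re.compile(r"/\d+(?=/|$)")


def normalize_path(path: str) -> str:
    """Replace numeric path segments with a fixed {id} placeholder."""
    return _NUMERIC_SEGMENT.sub("/{id}", path)


print(normalize_path("/user/123/orders/456"))  # /user/{id}/orders/{id}
print(normalize_path("/v2/health"))            # /v2/health
```

Applying this before the route becomes a label value keeps the label bounded by the number of routes rather than the number of users or orders.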

Identify Early Cardinality Growth

Cardinality growth usually begins with small shifts in system behavior. A dashboard that was always quick now pauses for a few extra seconds. A Prometheus query that normally runs cleanly starts taking longer. Nightly restarts appear in logs. Nothing dramatic — just subtle changes that hint at growing data volume.

Traffic patterns may look steady, but Prometheus responds differently when the number of active time series increases. These early signals are often the first indication that the system is working harder than before.

How Cardinality Growth Shows Up

Several technical indicators tend to appear around the same time:

- Memory pressure: Prometheus pods move closer to their memory limits. TSDB heap messages show up, or pods restart during scrape or WAL replay.

- Slower queries: Dashboards return results more slowly as TSDB needs to search through a larger set of series.

- Restarts during WAL replay: Higher series counts can make replay heavier. Prometheus may restart more often, especially after deployments or scaling events.

- Higher usage in managed backends: Costs increase when new label combinations generate more series, even if request traffic stays consistent.

- Backend warnings: Logs begin showing messages related to rising series counts or fast series creation rates.

Individually, these signals may look routine. Seen together, they often point to increasing cardinality.

Identify Which Metrics Are Growing

A reliable way to understand growth is to examine which metrics contribute the most series.

Start with a topk() query:

    topk(10, count by (__name__, job)({__name__=~".+"}))

This surfaces the metrics generating the highest number of series. Once a metric stands out, inspect how its labels expand:

    count by (__name__, label1, label2)({__name__="http_requests_total"})

Common sources of series expansion include:

- User-level identifiers like user_id, session_id, or trace_id

- Dynamic URL paths where unique IDs appear in the route

- Kubernetes metadata that changes with restarts or scaling

- Temporary debug labels that move into production by accident

- Autoscaling events that introduce fresh instance-specific labels

Tracing the change back to a deployment, config update, or new instrumentation usually reveals the cause.
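Once you have exported the label sets for a suspect metric, a small script can rank labels by how many distinct values they carry. The series below are made up for illustration, and the function name is hypothetical.

```python
from collections import defaultdict


def distinct_values_per_label(series: list[dict[str, str]]) -> list[tuple[str, int]]:
    """Rank label names by their number of distinct values, highest first."""
    values: dict[str, set] = defaultdict(set)
    for labels in series:
        for name, value in labels.items():
            values[name].add(value)
    return sorted(
        ((name, len(seen)) for name, seen in values.items()),
        key=lambda item: item[1],
        reverse=True,
    )


# Hypothetical label sets for http_requests_total.
series = [
    {"method": "GET", "region": "us-west-2", "user_id": "u1"},
    {"method": "GET", "region": "us-west-2", "user_id": "u2"},
    {"method": "POST", "region": "us-west-2", "user_id": "u3"},
]

print(distinct_values_per_label(series))
# [('user_id', 3), ('method', 2), ('region', 1)]
```

The label at the top of the list is usually the first candidate to drop, bucket, or normalize.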

Track Trends Before They Affect Operations

Instead of waiting for dashboards or queries to slow down, it helps to watch cardinality trends directly.

Platforms now expose metrics such as:

- Active series over time

- Series creation rates

- Labels contributing the fastest growth

- Metrics with consistently rising cardinality

Grafana Cloud provides views for series growth and label distribution.

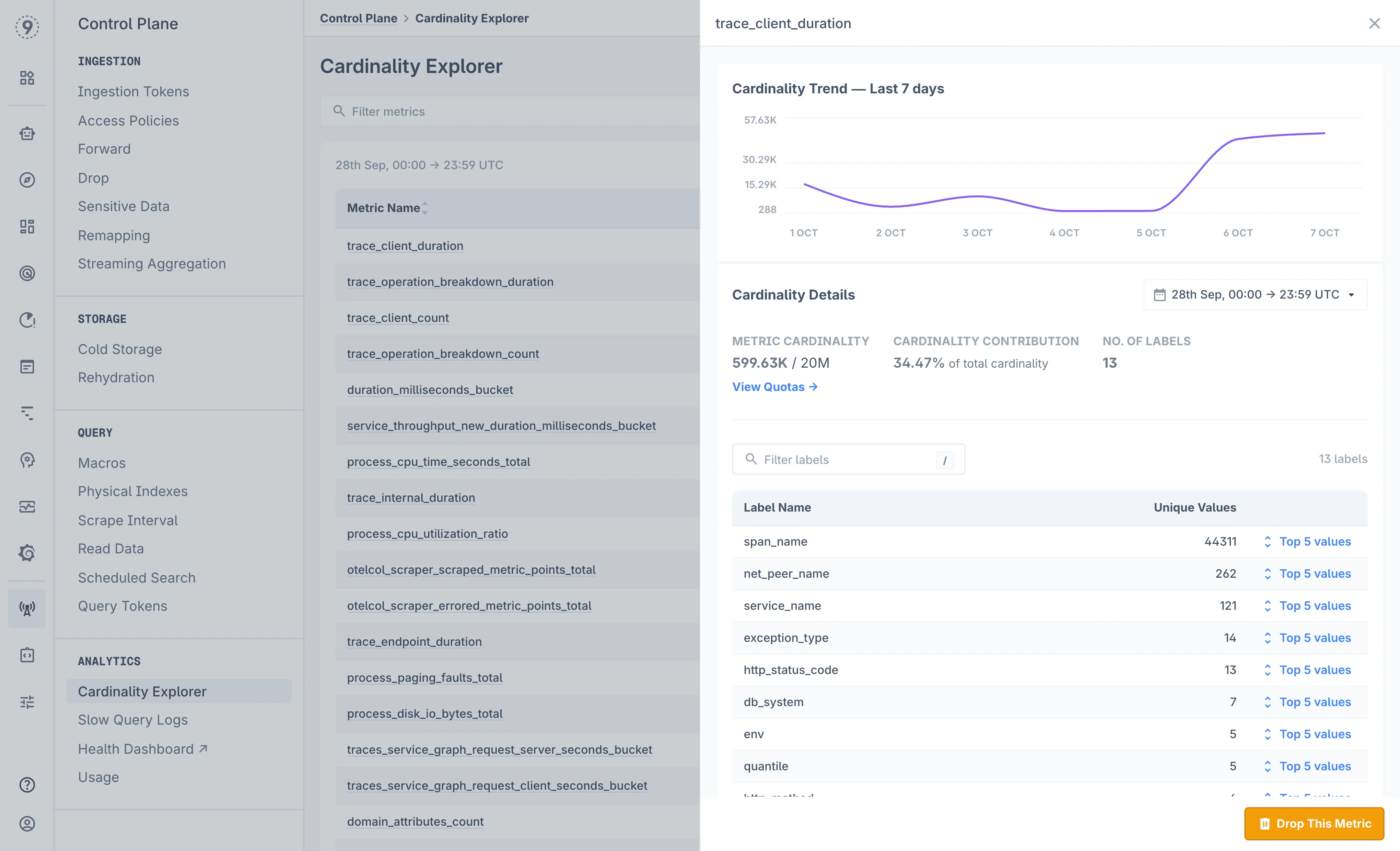

Last9’s Cardinality Explorer shows how specific metrics and label combinations evolve and offers early signals when growth accelerates.

Monitoring these trends makes it easier to adjust label choices, refine instrumentation, or introduce aggregation before Prometheus encounters memory pressure or extended replay times.

Stabilize Your Monitoring Pipeline During Volume Spikes

When series count grows suddenly, Prometheus can hit memory limits, restart more often, and slow down query execution. The goal at this stage is simple: keep Prometheus running long enough to examine what’s expanding and apply fixes cleanly.

Increase memory temporarily

Give Prometheus additional memory so it stays online while you investigate:

    resources:
      requests:
        memory: 8Gi
      limits:
        memory: 16Gi

This is only a short-term adjustment and can be reduced once the source of growth is addressed.

Pause ingestion for the expanding metric

If a single metric is responsible for most of the new series, pause its ingestion:

    metric_relabel_configs:
      - source_labels: [__name__]
        regex: "problematic_metric_name"
        action: drop

This stops new time series while existing ones age out through normal retention.

Remove high-cardinality labels

If the metric is needed but one or more labels are expanding quickly, drop only those labels:

    metric_relabel_configs:
      # labeldrop matches label names on every series scraped by this job;
      # it cannot be scoped to a single metric name.
      - action: labeldrop
        regex: "user_id|session_id"

Ensure the remaining labels still preserve meaningful separation between series.

Reduce scrape frequency

Lengthen the scrape interval so Prometheus scrapes less often, which decreases ingestion load and reduces pressure on TSDB:

    scrape_configs:
      - job_name: "high-cardinality-service"
        scrape_interval: 60s # previously 15s

This trades resolution for short-term stability.

Build Systems That Scale With Your Data

Recovering from a spike is important, but long-term reliability comes from systems that handle growth without constant intervention. The distinction between isolated incidents and recurring pain usually depends on whether your infrastructure absorbs increasing data volume on its own or requires manual action each time.

Process Data at Ingestion

As telemetry volume grows, both storage load and query time increase. One effective approach is to process data at the moment it arrives. Streaming aggregation reduces raw series into structured, meaningful metrics before they hit long-term storage.

How this works in practice:

- Metrics reach the aggregation layer: This is typically an OpenTelemetry Collector pipeline or a dedicated stream processor.

- Define which attributes to keep:

  - Keep dimensions that add analytical value: region, service, http_method

  - Remove unbounded identifiers: user_id, session_id, request_id

- Store the aggregated output:

      http_requests_total{region="us-west", service="checkout", method="POST"}

  Instead of thousands of user-specific series, you retain a stable set of dimensions that still help pinpoint issues.

- Choose retention for each layer:

  - Short-term raw data (e.g., 24 hours) for detailed debugging

  - Longer-term aggregated data for operational and historical analysis
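The aggregation step described above can be sketched as a function that keeps a fixed set of labels and sums everything else away. The kept label names mirror the example output; the input samples and function name are hypothetical, and a real stream processor would do this continuously over time windows rather than on a list.

```python
from collections import defaultdict

# Labels to keep; unbounded identifiers such as user_id are dropped.
KEEP = ("region", "service", "method")


def aggregate(samples: list[tuple[dict[str, str], float]]) -> dict[tuple, float]:
    """Sum sample values across all series that share the same kept labels."""
    totals: dict[tuple, float] = defaultdict(float)
    for labels, value in samples:
        key = tuple(labels.get(name, "") for name in KEEP)
        totals[key] += value
    return dict(totals)


# Hypothetical per-user samples of http_requests_total.
samples = [
    ({"region": "us-west", "service": "checkout", "method": "POST", "user_id": "u1"}, 3.0),
    ({"region": "us-west", "service": "checkout", "method": "POST", "user_id": "u2"}, 5.0),
    ({"region": "us-west", "service": "checkout", "method": "GET", "user_id": "u1"}, 2.0),
]

print(aggregate(samples))
```

Three per-user series collapse into two aggregated ones here; with thousands of users the reduction is correspondingly larger.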

Integrate Cost Visibility Into Daily Operations

Cost becomes predictable when teams can see resource usage as part of their everyday workflow instead of waiting for periodic accounting reviews. Making these signals visible helps teams understand how instrumentation choices translate into storage and compute overhead.

Practical ways to embed cost awareness:

- Cardinality budgets: Track series count per service and define acceptable ranges based on traffic and purpose.

- Automated alerts: Notify teams when label combinations or new metrics approach expected limits.

- Dynamic capacity adjustments: Scale storage or adjust retention when ingestion patterns shift.

- Periodic usage summaries: Share service-level metrics: series growth, label expansion patterns, and ingestion volume.

With this structure, teams work with clear boundaries and can adjust early, long before cost or performance issues appear.
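A budget check of this kind can start as a small script that compares per-service series counts against agreed limits. The service names, budgets, and the 80% warning ratio below are all assumptions for illustration.

```python
def check_budgets(
    series_counts: dict[str, int],
    budgets: dict[str, int],
    warn_ratio: float = 0.8,
) -> dict[str, str]:
    """Classify each service as ok, warn, over, or unbudgeted."""
    status = {}
    for service, count in series_counts.items():
        budget = budgets.get(service)
        if budget is None:
            status[service] = "unbudgeted"
        elif count > budget:
            status[service] = "over"
        elif count >= warn_ratio * budget:
            status[service] = "warn"
        else:
            status[service] = "ok"
    return status


# Hypothetical budgets and current series counts.
budgets = {"checkout": 500_000, "search": 100_000, "batch-sync": 20_000}
series_counts = {"checkout": 420_000, "search": 130_000, "batch-sync": 4_000, "new-tool": 900}

print(check_budgets(series_counts, budgets))
```

Feeding the "warn" and "over" results into your existing alerting gives teams a chance to adjust instrumentation before the limit is reached.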

Optimize for Faster Diagnosis

Lowering costs is helpful, but faster diagnosis brings the largest operational improvement. Detailed, well-chosen dimensions shorten the time it takes to locate the source of an issue.

Rich cardinality helps you answer questions like:

- Are failures concentrated in a single region?

- Are mobile clients affected differently?

- Is one endpoint slower for a specific deployment variant?

The efficiency gain is straightforward: less time searching, more time fixing.

Track signals that reflect improved reliability:

- Time from alert → diagnosis

- Incidents where missing telemetry slowed the investigation

- Outage duration over time

- Issues resolved proactively due to early pattern detection

When these indicators improve, your observability setup translates directly to reduced pressure during incidents and fewer late-night responses.

The Last9 Approach to High Cardinality

Most observability platforms force you to choose: either limit your labels to control costs, or accept unpredictable bills and performance issues. Last9 removes that trade-off.

No Memory Scaling Issues

Remember how Prometheus needs 4-8 KB per series and can OOM at 1M+ series? Last9’s architecture handles millions of high-cardinality series without those memory constraints. You don’t need to add RAM, restart pods, or drop dimensions when traffic spikes.

Real-Time Cardinality Controls

When cardinality explodes, Last9 lets you respond immediately from the UI—no config reloads, no pod restarts, no waiting for deployments. Apply streaming aggregation rules in real-time to control which dimensions to keep and how to aggregate high-cardinality labels before they hit storage.

The Cardinality Explorer shows exactly which metrics and label combinations are expanding. When you spot problematic growth, you can temporarily drop labels directly from the UI to stabilize the system, then update your instrumentation configs once things are under control.

The emergency response tactics we discussed earlier (editing configs, reducing scrape frequency, restarting Prometheus) become instant UI actions instead of firefighting.

Pricing Based on Events, Not Dimensions

Unlike Datadog’s per-custom-metric pricing or managed Prometheus charging by series count, Last9 bills on events ingested. Add customer_tier, introduce feature flags, roll out new services, expand to more regions—your cost scales with data volume, not label combinations. Your existing PromQL queries keep working as you add dimensions.

Start for free today, or reach out to our team for a detailed walkthrough.

In the next edition of this series, we’ll cover where cardinality explosions usually come from, how they unfold, practical mitigation steps, design patterns that prevent runaway dimensions, ways to monitor your monitoring stack, and reliable recovery playbooks for production incidents.