While PromQL is the de facto standard query language in the monitoring world, it comes with several challenges. Improper or overly broad queries can put significant load on the Prometheus server, especially in large setups with millions of time series, and this can degrade the performance and responsiveness of the entire monitoring system.

Challenges with PromQL

Lack of higher-level abstraction

The challenges with PromQL arise from the lack of the kind of higher-level abstraction that programming languages typically provide, which addresses:

- Reusability

- Standardization

- Maintainability

Reusability

- Queries cannot be reused without copy-pasting them, which invites errors.

- Changing an SLO threshold can mean editing hundreds of dashboards and alert rules, and the problem keeps growing with adoption. Every manual edit is another chance to overlook a copy.

Standardization

- It takes special effort to ensure the same standard queries are used across teams and components.

- Crafting performant and accurate queries is hard, but once such queries are written, it is equally hard to standardize them across services and components due to the lack of a sharing mechanism.

Maintainability

- Maintaining queries over time becomes problematic because the same queries are repeated in many places, such as Grafana dashboards and alert-rule YAML files.

- There is no single place to look at all the critical queries together.

Macros

In programming, a Macro is a rule or pattern that specifies how specific input sequences should be mapped to output sequences according to a defined procedure. Macros are a way to automate repetitive tasks within the source code, enabling more concise or human-readable code that can be expanded into more detailed code by the compiler or preprocessor.

The term "macro" is used in different contexts, but the core idea is usually the same:

Macro: a shorthand or abstraction that stands in for more extensive code or operations.

Fun fact: the word Macro derives from the Greek μακρός (makrós), which means long or large. Macros replace large code blocks with a name :)

You may have encountered Macros in programming languages like Lisp, C, C++, and Elixir, but what if you could use Macros with PromQL? Imagine replacing complicated PromQL queries with a short Macro call. That's precisely what you can achieve with Levitate.

PromQL Macros in Levitate

Levitate, our managed time series data warehouse, supports defining PromQL-based Macros.

We added PromQL Macro support to Levitate so that the definition of a complicated PromQL query can be separated from its use. Queries are defined in one place and used as Macros by all downstream consumers.

Consider a PromQL query that calculates the availability of an HTTP service:

```promql
(sum(rate(http_requests_duration_milliseconds_count{status=~"2.*"}[1m])) / sum(rate(http_requests_duration_milliseconds_count[1m])))*100
```

It calculates the ratio of good requests (those with any 2xx status code) to the total number of requests, expressed as a percentage.

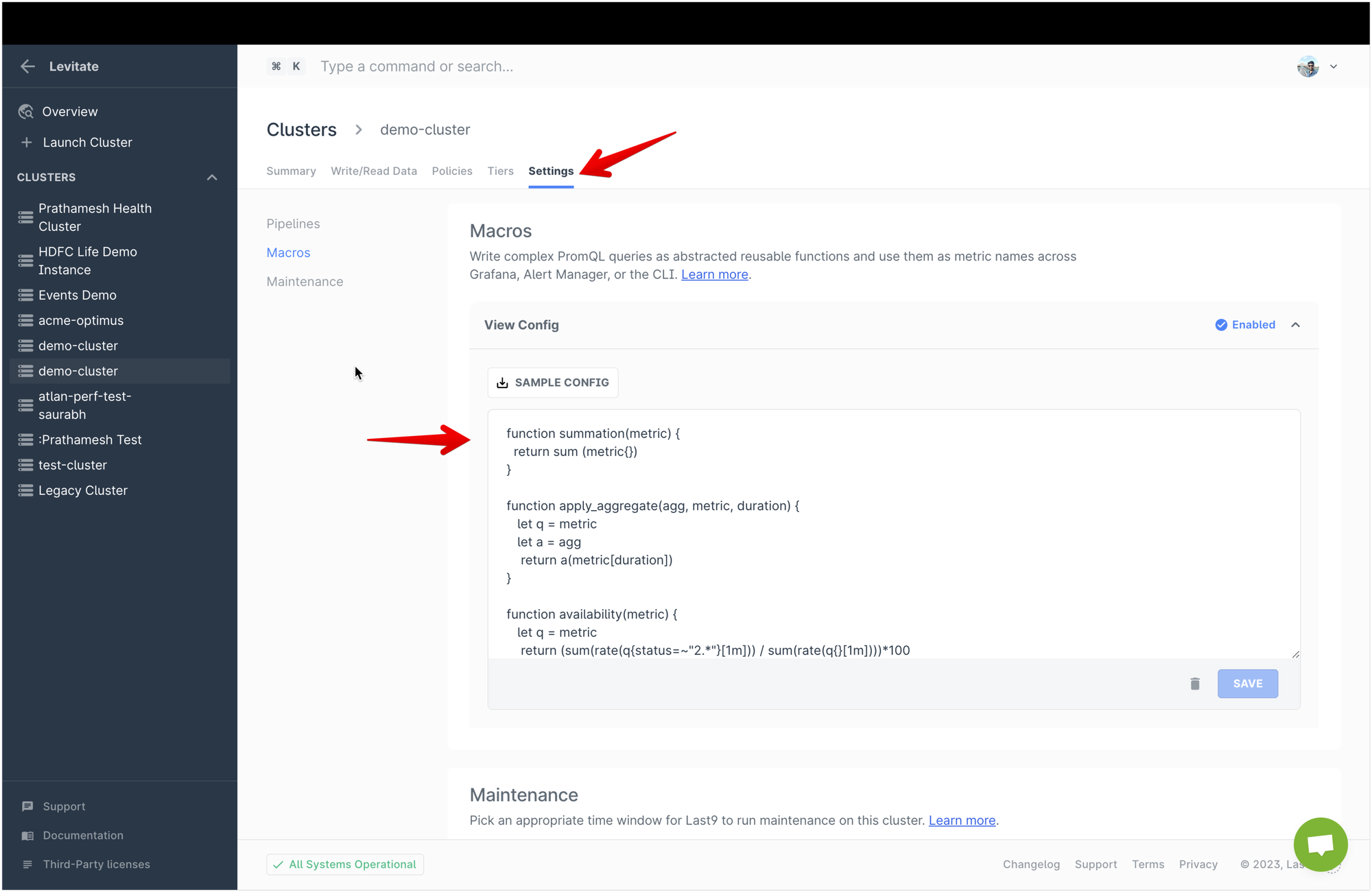
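The arithmetic behind the availability query can be made concrete with a small TypeScript sketch. It reproduces what the query computes at a single evaluation instant, starting from already-computed per-second rates for each series. The `SeriesRate` type and the sample numbers are hypothetical.

PromQL anchors regex matchers at both ends, so `status=~"2.*"` selects any status that starts with `2`. The sketch mirrors that with `^(?:2.*)$`.

```typescript
// Hypothetical input: the per-second rate of one series, keyed by its status label,
// i.e. roughly what rate(...[1m]) returns for that series at one instant.
interface SeriesRate {
  status: string;
  ratePerSec: number;
}

// Mirrors (sum(rate(x{status=~"2.*"}[1m])) / sum(rate(x[1m]))) * 100.
function availabilityPercent(series: SeriesRate[]): number {
  // PromQL regex matchers are fully anchored, hence ^(?:...)$.
  const good = new RegExp("^(?:2.*)$");
  let goodSum = 0;
  let totalSum = 0;
  for (const s of series) {
    totalSum += s.ratePerSec;
    if (good.test(s.status)) {
      goodSum += s.ratePerSec;
    }
  }
  // With no traffic this is 0 / 0, which evaluates to NaN.
  return (goodSum / totalSum) * 100;
}

// Hypothetical sample: 95 of 100 requests per second succeed.
const sample: SeriesRate[] = [
  { status: "200", ratePerSec: 90 },
  { status: "201", ratePerSec: 5 },
  { status: "500", ratePerSec: 5 },
];
console.log(availabilityPercent(sample)); // 95
```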

Levitate allows defining a Macro for this availability query as follows.

```
function availability(metric) {
  let q = metric
  return (sum(rate(q{status=~"2.*"}[1m])) / sum(rate(q{}[1m])))*100
}
```

It can then be used in Grafana dashboards and alert rules as follows.

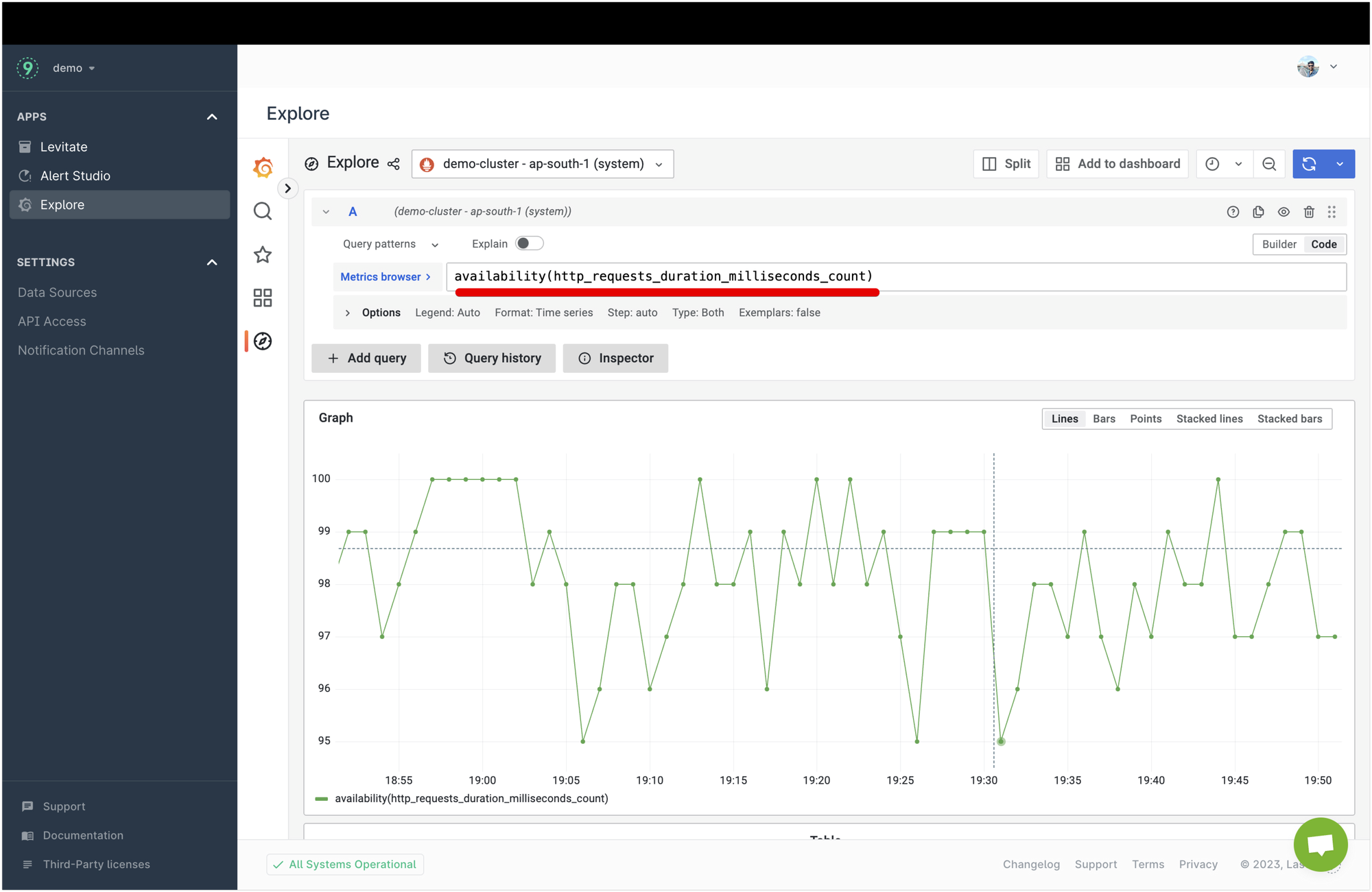

```
availability(http_requests_duration_milliseconds_count)
```

Advantages of using PromQL Macros

PromQL Macros address the challenges described earlier.

Reusability

- They let you reuse complex PromQL queries in multiple places without duplicating them.

- The same Macro can also be applied to different metrics, for example, to compute both the availability of HTTP requests and the availability of a queue's turnaround time.
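Conceptually, the availability Macro defined earlier is a function from a metric name to a PromQL string. The TypeScript sketch below emulates that expansion with a template literal to show the reuse. It illustrates the idea only and is not Levitate's expansion engine.

`queue_turnaround_seconds_count` is a hypothetical metric name. The sketch assumes that metric also carries a `status` label.

```typescript
// Emulates the availability(metric) Macro: substitute the metric name into one
// shared PromQL template and return the resulting query text.
function availability(metric: string): string {
  const q = metric;
  return `(sum(rate(${q}{status=~"2.*"}[1m])) / sum(rate(${q}{}[1m])))*100`;
}

// One definition, reused for two metrics.
const httpQuery = availability("http_requests_duration_milliseconds_count");
// Hypothetical queue metric, assumed to carry a status label as well.
const queueQuery = availability("queue_turnaround_seconds_count");

console.log(httpQuery);
console.log(queueQuery);
```

If the threshold or window ever changes, only the body of `availability` needs editing. Both generated queries pick up the change.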

Standardization

- SRE teams can enforce standard queries across services and teams by publishing a core set of Macros for downstream consumers.

- This also helps keep complex PromQL queries performant across components, because everyone uses the same tuned version.

Maintainability

- A single source of truth for PromQL queries makes them easier to maintain in the long run.

- Changing a query becomes a single edit, and the change is reflected everywhere the Macro is used.

- Not everyone on the team has to worry about maintaining and understanding PromQL queries. Teams can consume them easily as higher-level abstractions.

Syntax of PromQL Macros

PromQL Macros in Levitate are written in an ES6-like syntax: you define a function that returns a PromQL query as its result. You can pass any dynamic variables to the Macro definition, and they are expanded when the Macro is evaluated.

```
function name_of_the_macro(variable_1, variable_2, ..., variable_n) {
  var local_var_1 = "local variable"
  return <promql using variables>
}
```

Defining your first Macro in Levitate

Macros can be defined for any Levitate cluster. Head over to the cluster's settings tab and add your Macro definitions there. You can define multiple Macros at once.
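The Macro syntax allows several parameters and local variables. As a rough analogue, the TypeScript sketch below keeps two Macros side by side in one object. One of them takes the rate window as a second parameter and builds its "good requests" selector in a local variable first.

The `availability_over` Macro and the object-as-registry shape are hypothetical illustrations. They are not Levitate's settings format.

```typescript
// Hypothetical registry of Macros defined together in one place.
// Each entry returns PromQL text.
const macros = {
  // Same shape as the availability Macro from this article.
  availability: (metric: string): string =>
    `(sum(rate(${metric}{status=~"2.*"}[1m])) / sum(rate(${metric}{}[1m])))*100`,

  // Hypothetical two-parameter variant: the rate window is a variable,
  // and the good-requests selector is built in a local variable.
  availability_over: (metric: string, window: string): string => {
    const good = `${metric}{status=~"2.*"}`; // local variable, like local_var_1
    return `(sum(rate(${good}[${window}])) / sum(rate(${metric}{}[${window}])))*100`;
  },
};

console.log(macros.availability_over("http_requests_duration_milliseconds_count", "5m"));
```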

Using Macros

Once a Macro is defined, it is activated automatically. You can then use it in Grafana dashboards, panels, and even alert queries that use the Levitate cluster as a data source.
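Macro calls inside a dashboard or alert expression are replaced by their definitions before the PromQL is evaluated. The TypeScript sketch below approximates that step with a regular expression over the query text. It rewrites calls to names found in a hypothetical `macros` registry and leaves ordinary PromQL functions such as `sum` and `rate` untouched.

The article does not describe Levitate's actual parser. This sketch has two limitations:

- It only handles calls whose arguments contain no parentheses.
- It splits arguments naively on commas.

```typescript
type MacroFn = (...args: string[]) => string;

// Hypothetical Macro registry. A Map avoids accidental hits on Object.prototype keys.
const macros = new Map<string, MacroFn>([
  [
    "availability",
    (metric: string): string =>
      `(sum(rate(${metric}{status=~"2.*"}[1m])) / sum(rate(${metric}{}[1m])))*100`,
  ],
]);

// Matches name(args) where args contain no parentheses, e.g. availability(my_metric).
const CALL = /\b([A-Za-z_][A-Za-z0-9_]*)\(([^()]*)\)/g;

// Replaces registered Macro calls with their expansion; other calls stay as-is.
function expandMacros(query: string): string {
  return query.replace(CALL, (match: string, name: string, rawArgs: string): string => {
    const macro = macros.get(name);
    if (macro === undefined) {
      return match; // not a Macro, e.g. PromQL's own rate() or sum()
    }
    // Naive split: assumes plain arguments such as metric names.
    const args = rawArgs
      .split(",")
      .map((a) => a.trim())
      .filter((a) => a.length > 0);
    // Parenthesize so surrounding operators cannot change the expansion's precedence.
    return `(${macro(...args)})`;
  });
}

const alertExpr = "availability(http_requests_duration_milliseconds_count) < 99";
console.log(expandMacros(alertExpr));
```

Wrapping each expansion in parentheses is the same precaution C programmers take with macro bodies. Whether Levitate does this internally is not stated here.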

In this way, Macros in Levitate let you standardize complex PromQL queries and reuse them seamlessly across teams and services.

Macros vs. Recording Rules

Recording rules evaluate PromQL queries at regular intervals and save the results as new time series, whereas Macros expand complex PromQL queries at query time. You may not want to evaluate and store a new metric just to hide the complexity of a query.

Thus, Macros can be used instead of recording rules to make complex PromQL queries reusable, standardized, and maintainable without creating new metrics. However, Macros are not a drop-in replacement for recording rules: sometimes you do want to precompute a query and store its result, for example, so that an expensive query becomes cheap to read.

Get started with Levitate and define your first Macro today.