What are SLOs and how do you define them? We usually set SLOs that might not accurately define what the requirements are. Here's a look at SLOs That Lie!

SLO is an acronym for Service Level Objective. But before I explain SLOs, you need one more acronym: SLI (Service Level Indicator).

An SLI is a quantitative measurement of a (and not the) quality of a Service. It may be unique to each use case, but there are certain standard qualities of services that practitioners tend to follow.

- Availability: The amount of time that a service was available to respond to a request. Referred to as Uptime.

- Speed: How fast does a service respond to a request? Referred to as Latency.

- Correctness: A response alone isn’t good enough. It also matters whether it was the right one. Referred to as ErrorRatio.

SLOs are boundaries on these measurements beyond which you would begin to worry about your service. Example: if the speed of delivery at a restaurant falls below 5 dishes per hour, you know customers are going to have longer waiting periods. Having the right SLOs helps you make better decisions (More in this blog)
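To make the SLI/SLO distinction concrete, here is a minimal Python sketch of the restaurant example. The `Slo` class and its field names are hypothetical, introduced only for illustration: the SLI is just a measured number, and the SLO is the boundary it gets compared against.

```python
from dataclasses import dataclass


@dataclass
class Slo:
    """A boundary on an SLI. All names here are illustrative assumptions."""
    name: str
    threshold: float
    higher_is_better: bool  # True for throughput or uptime, False for latency or error ratio

    def is_met(self, sli_value: float) -> bool:
        # Compare the measured SLI against the boundary in the right direction.
        if self.higher_is_better:
            return sli_value >= self.threshold
        return sli_value <= self.threshold


# Restaurant example from the text: worry when delivery falls below 5 dishes per hour.
delivery_speed = Slo(name="dishes_per_hour", threshold=5, higher_is_better=True)

print(delivery_speed.is_met(7))  # True: within the objective
print(delivery_speed.is_met(4))  # False: time to worry
```

The same shape works for latency or ErrorRatio by flipping `higher_is_better`.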

Uptime

One of the primary indicators and objectives is Uptime. If the site isn’t serving at all, speed and accuracy hardly matter. That is also why uptime is usually desired to be as close to 100% as possible.

If you are a restaurant that sees footfall throughout the day, why shut and lose business?

How do you compute uptime? Fairly straightforward: the time that you were up divided by the total time.

Uptime = Up / ( Up + Down ) %

99% uptime means that out of the total time, we have a 1% allowance to be unavailable. Over the course of a year, this turns out to be 3.7 (~4) days.

But would a business, say a retail store, be OK remaining shut for 4 consecutive days, from 25th December to 28th December?

Uptime is like a periodic allowance that we get a refill on, not a ration that we can keep accumulating. If it wasn’t spent, there’s no carry forward.
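A small sketch of why the window matters. The numbers are hypothetical: a service with no downtime all year except 84 hours (3.5 days) in December, measured against a 99% objective both yearly and monthly.

```python
DAYS_IN_MONTH = [31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]  # non-leap year
SLO_PERCENT = 99.0


def uptime_percent(down_hours: float, total_hours: float) -> float:
    """Uptime = Up / (Up + Down), expressed as a percentage."""
    return 100.0 * (total_hours - down_hours) / total_hours


total_hours = [days * 24 for days in DAYS_IN_MONTH]
down_hours = [0.0] * 11 + [84.0]  # hypothetical: shut for 3.5 days in December only

yearly = uptime_percent(sum(down_hours), sum(total_hours))
monthly = [uptime_percent(d, t) for d, t in zip(down_hours, total_hours)]

print(f"yearly:   {yearly:.2f}% -> met: {yearly >= SLO_PERCENT}")
print(f"december: {monthly[11]:.2f}% -> met: {monthly[11] >= SLO_PERCENT}")
```

Over the year the objective is met (about 99.04%), while December on its own lands at about 88.7%. The unused allowance from January to November does not help December.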

Broken down into smaller buckets of time, the downtime allowance becomes more palpable.

| Availability (9s) | Downtime per Day | Downtime per Week | Downtime per Month | Downtime per Year |
| --- | --- | --- | --- | --- |
| 99% | 14.4 Mins | 1.7 Hrs | 7.3 Hrs | 3.7 Days |
| 99.9% | 1.4 Mins | 10.1 Mins | 43.8 Mins | 8.8 Hrs |
| 99.99% | 8.6 Secs | 1 Min | 4.4 Mins | 52.6 Mins |
| 99.999% | 0.864 Secs | 6.1 Secs | 26.3 Secs | 5.3 Mins |

Measure of Availability in 9s
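The allowances in the table are plain arithmetic: the unavailable fraction multiplied by the length of the window. A minimal sketch follows; the function name and the window lengths are my assumptions (a 365-day year, and a month taken as one twelfth of it).

```python
SECONDS_IN = {
    "day": 24 * 3600,
    "week": 7 * 24 * 3600,
    "month": 365 * 24 * 3600 / 12,  # assumption: average month of a 365-day year
    "year": 365 * 24 * 3600,        # assumption: non-leap year
}


def downtime_allowance_seconds(slo_percent: float, window: str) -> float:
    """Seconds of downtime permitted in one window at the given uptime objective."""
    return (100.0 - slo_percent) / 100.0 * SECONDS_IN[window]


for slo in (99.0, 99.9, 99.99, 99.999):
    row = ", ".join(
        f"{window}: {downtime_allowance_seconds(slo, window):,.1f}s"
        for window in SECONDS_IN
    )
    print(f"{slo}% -> {row}")
```

For example, 99.99% over a month works out to roughly 262.8 seconds, which is the 4.4 minutes quoted above.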

How do you measure the time that the service is up?

A request comes in and the request is served: the service is Up. There are plenty of tools that can be used for this, including Prometheus, Stackdriver, etc. These tools piggyback on the software components to emit a logline or a metric.

How do you measure the time that the service is down?

A request came in but the request wasn’t served. For a request that wasn’t served, there cannot be a logline or a metric value emitted.

In a store that is supposed to be open 24x7, if the staff bunks off at random, how do you measure how long they were away?

So how do we measure it at the receiver? And how do we control the sender?

Option 1: SDK (Measure at each caller)

We could use an SDK that tracks every outbound request to our service. Depending on the design, one may be using https://envoymobile.io/ or https://segment.com to emit constant metrics. But,

- In a world where everything is becoming an API, the control we can exercise over the callers is diminishing.

- There will be so many senders! Before we can conclude from the pattern whether the problem was with a sender or with one of our receivers, we’d have to wait for the data to arrive and be collated.

- What if the sender’s network is jittery? Say 1% of the senders had an ISP fault and the stats never make it to our aggregates.

If we wait for responses to be aggregated across 100% of the callers, each with their own delay, while the goal is a 99.99% uptime SLO with only about 4 minutes of downtime available in a month, we may have already missed the uptime target by the time we find out.

Clearly, using this method will weaken the definition of uptime, where 99.99% will feel like a joke.
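To see how missing caller reports distort the number, here is a toy sketch. The fleet is hypothetical: 10,000 callers with one request each, of which 1% sit behind a faulty ISP, so both their request and their report are lost.

```python
def availability_percent(outcomes):
    """outcomes: list of booleans, True when a request was served."""
    return 100.0 * sum(outcomes) / len(outcomes)


# Hypothetical fleet: every 100th caller has an ISP fault.
# Their request is not served, and their SDK report never reaches our aggregates.
callers = [
    {"served": i % 100 != 0, "report_delivered": i % 100 != 0}
    for i in range(10_000)
]

true_view = availability_percent([c["served"] for c in callers])
reported_view = availability_percent(
    [c["served"] for c in callers if c["report_delivered"]]
)

print(f"what customers experienced: {true_view}%")    # 99.0%
print(f"what the aggregate shows:   {reported_view}%")  # 100.0%
```

The failures that matter most are exactly the ones that never get counted.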

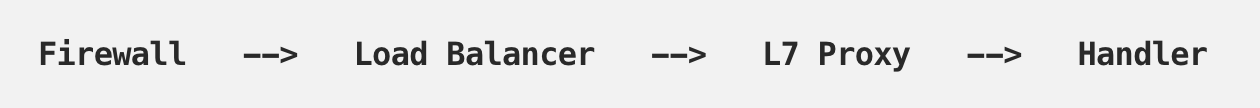

In reality, the request never really reaches the server directly. There will be a Firewall upstreaming to a Load Balancer upstreaming to an L7 proxy upstreaming to your servers.

An SLO is an aggregate of all the layers underneath.

We should be talking about the SLO of each layer before the service as a whole. A breach in the Uptime SLO of a backend service would impact NOT the uptime but the ErrorRatio of the calling L7Proxy. Similarly, if the L7Proxy is down, the Load Balancer’s ErrorRatio will increase and not the Uptime. All the way till we reach the CDN, probably.

So, Uptime (as customer experiences) is best measured at the layer which is closest to the Customer and farthest from the code.

For each such layer, we are probably monitoring the uptime of a component which is not our core business unit and outside our control. In the -> LB -> CDN -> … journey, we have probably lost the uptime essence of the actual code deployed.

It’s possible that the business calls the SLO Uptime, but what we’re actually addressing is the ErrorRatio!!
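A rough sketch of how a backend outage looks from the layer above it. The model is an assumption made for illustration: traffic is uniform, one entry per second, and an L7 proxy that is up answers every request, with a 5xx whenever the backend is down.

```python
def layer_slis(seconds):
    """seconds: list of (proxy_up, backend_up) booleans, one per second of traffic.

    Returns (proxy_uptime_percent, proxy_error_ratio) as measured at the proxy.
    """
    backend_state_while_proxy_up = [
        backend_up for proxy_up, backend_up in seconds if proxy_up
    ]
    uptime = 100.0 * len(backend_state_while_proxy_up) / len(seconds)
    if not backend_state_while_proxy_up:
        return uptime, 0.0  # the proxy answered nothing, so there is no ratio to report
    # While the proxy is up, a backend outage surfaces as errors, not as proxy downtime.
    errors = sum(1 for backend_up in backend_state_while_proxy_up if not backend_up)
    return uptime, errors / len(backend_state_while_proxy_up)


# Hypothetical 100 seconds: the proxy never goes down, the backend is down for 10 of them.
timeline = [(True, second >= 10) for second in range(100)]
print(layer_slis(timeline))  # (100.0, 0.1)
```

The backend's Uptime is 90%, yet the proxy reports 100% Uptime and an ErrorRatio of 0.1.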

Option 2: Uptime (actually Downtime) Monitors

This is where we introduce uptime monitors and downtime checkers. These are simple services that have existed forever, but they are extremely crucial. We outsource the trust of uptime to these services, where some poor bot(s) have been assigned the mundane work of periodically hitting a service endpoint.

Uptime = timeUp / ( timeUp + timeDown ) %

This introduces two compromises:

- Uptime is now as the monitor sees it, not how the real customer sees it. That 1% of customers with ISP faults may still be affected without the monitor noticing.

- How about the reliability of the uptime monitor? If we have trouble staying up 100% of the time, surely they cannot be up 100% of the time either.

Say the uptime monitor guarantees an uptime of 99.99%. What if its 4 minutes of downtime don’t overlap with ours? For the 4 minutes that the uptime monitor was down, the service may have been up or down; the monitor would not know.
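One way to be honest about the monitor's own downtime is to report a range instead of a single number. A minimal sketch, assuming a 30-day month and a monitor that is blind for 0.01% of it; the function name is hypothetical.

```python
def uptime_bounds(seen_up, seen_down, blind):
    """Return (lower, upper) uptime percent; all arguments share one time unit.

    'blind' is the time the monitor itself was down and saw nothing.
    """
    total = seen_up + seen_down + blind
    lower = 100.0 * seen_up / total            # worst case: down for all the blind time
    upper = 100.0 * (seen_up + blind) / total  # best case: up for all the blind time
    return lower, upper


minutes_in_month = 30 * 24 * 60      # 43,200 minutes, assuming a 30-day month
blind = minutes_in_month * 0.0001    # a 99.99% monitor is blind for about 4.32 minutes
lower, upper = uptime_bounds(minutes_in_month - blind, 0, blind)

print(f"uptime is somewhere between {lower:.4f}% and {upper:.4f}%")
```

Even with zero observed failures, the most this monitor can vouch for is about 99.99%.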

We don’t keep all our eggs in the same basket. We introduce a multi-geography downtime monitor. Say a downtime monitor requests from 4 Geos.

- Should a failure across 1 Geo be called a downtime?

- What if one of the Geos is highlighting downtime for a geographical region?

- Also, CAP and Network failures.
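These questions usually end up as a quorum rule: how many Geos must fail before we call it downtime. A sketch with hypothetical region names and thresholds, not any particular monitor's API:

```python
def is_downtime(results_by_geo, min_failing):
    """results_by_geo maps a region name to True (check passed) or False (check failed).

    Returns (verdict, failing_regions).
    """
    failing = [geo for geo, passed in results_by_geo.items() if not passed]
    return len(failing) >= min_failing, failing


# Hypothetical probe round from 4 Geos, with one region failing.
probe_round = {"us-east": True, "eu-west": True, "ap-south": False, "sa-east": True}

print(is_downtime(probe_round, min_failing=1))  # (True, ['ap-south'])
print(is_downtime(probe_round, min_failing=3))  # (False, ['ap-south'])
```

The same probe round is an outage under one rule and healthy under another, which is another place the uptime number becomes a matter of interpretation.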

Downtime monitors aren’t holier-than-thou. They go through glitches too. They need to retry too. Say a request failed; wisdom says retry. Wisdom says Hystrix. But what about the failed attempt? Was it counted as a failure, or was the whole check counted as a success?
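The two ways of counting retries give very different numbers. A small sketch with made-up probe data, where each probe is the list of its attempts in order:

```python
def success_ratio_per_probe(probes):
    """A probe counts as a success if any of its attempts succeeded."""
    return sum(any(attempts) for attempts in probes) / len(probes)


def success_ratio_per_attempt(probes):
    """Every attempt counts on its own, retries included."""
    attempts = [attempt for probe in probes for attempt in probe]
    return sum(attempts) / len(attempts)


# Hypothetical data: 4 probes, up to 3 attempts each (True = attempt succeeded).
probes = [[True], [False, True], [True], [False, False, False]]

print(success_ratio_per_probe(probes))    # 0.75
print(success_ratio_per_attempt(probes))  # 3 of 7 attempts, about 0.43
```

Neither is wrong; they simply answer different questions, so the counting rule needs to be stated next to the number.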

Before we proceed, there is a frequency too. How often do we check? We cannot go per second. We cannot go per minute. It’s a balance that we pick.

- The faster we check, the shallower will be the health check.

- The slower we check, the deeper can be the health check.

The depth of a health check is basically a trade-off we make. A shallow check only checks for a static response. A deeper health check will check for a DB operation in and out. The DB call obviously will take considerably longer and eat a transaction. We can’t have that come in every 10 seconds.

Frequency vs depth is an argument that doesn’t have one right answer. And like other things, we may just need both.
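"Both" can be as simple as two schedules. A sketch under assumed intervals (a shallow check every 10 seconds, a deep DB round-trip check every 5 minutes); the names and numbers are illustrative only.

```python
SHALLOW_EVERY_S = 10   # assumption: cheap check for a static response
DEEP_EVERY_S = 300     # assumption: expensive check that does a DB operation in and out


def checks_due(elapsed_seconds):
    """Return which health checks should run at this second."""
    due = []
    if elapsed_seconds % SHALLOW_EVERY_S == 0:
        due.append("shallow")
    if elapsed_seconds % DEEP_EVERY_S == 0:
        due.append("deep")
    return due


def checks_per_hour():
    """Count how many checks of each kind one hour of this schedule produces."""
    counts = {"shallow": 0, "deep": 0}
    for second in range(3600):
        for name in checks_due(second):
            counts[name] += 1
    return counts


print(checks_per_hour())  # {'shallow': 360, 'deep': 12}
```

The deep check costs 12 DB transactions an hour instead of 360, at the price of noticing a DB-level failure up to 5 minutes late.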

Option 3: State-based Monitors

Because uptime is not being checked every millisecond, we count the time between observed states of the service.

We need states: OK, Unknown, and Error.

Error is confirmed down. Unknown could be any emission that was interrupted or is pending a retry, or the duration in between maintenance.

- Time spent between OK and Unknown is OK since you cannot tell for sure.

- Time spent between Unknown and Error is Error.

- Time spent between Error and Unknown is Error since you cannot tell whether the service is up or not.

- Similarly, the time from Error to OK is considered down, since it was down.

This in itself is the first step of aggregation.

Let’s take these two situations:

1. This will be counted as OK for 20 secs. Uptime = 100%

| Status | OK | OK | Unknown | OK |
| --- | --- | --- | --- | --- |
| Time | 10:00:01 | 10:00:10 | 10:00:11 | 10:00:21 |

2. This will be counted as OK for about 10 secs and Down for about 10 secs. Uptime: about 50% (strictly, 9 secs OK and 11 secs Down, or 45%)

| Status | OK | Unknown | Error | OK |
| --- | --- | --- | --- | --- |
| Time | 10:00:01 | 10:00:10 | 10:00:11 | 10:00:21 |

We can keep these rules configurable per service. But these are rules, and they are subject to interpretation. The absolute 99.99% is long gone!
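The interval rules and the two timelines above can be sketched as a small, configurable rule table. Only four transitions are spelled out in the text; the others in `RULES` are my assumptions and are marked as such. Applied strictly to the second, the second timeline gives 9 secs OK and 11 secs down, so 45% rather than a round 50%.

```python
from datetime import datetime

OK, UNKNOWN, ERROR = "OK", "Unknown", "Error"

# (state at interval start, state at interval end) -> how the interval is counted
RULES = {
    (OK, UNKNOWN): OK,        # from the text: cannot tell for sure, count as OK
    (UNKNOWN, ERROR): ERROR,  # from the text
    (ERROR, UNKNOWN): ERROR,  # from the text
    (ERROR, OK): ERROR,       # from the text: it was down until it recovered
    (OK, OK): OK,             # assumption
    (UNKNOWN, OK): OK,        # assumption, matches the first situation (100%)
    (OK, ERROR): ERROR,       # assumption: pessimistic
    (ERROR, ERROR): ERROR,    # assumption
    (UNKNOWN, UNKNOWN): OK,   # assumption: optimistic
}


def seconds_between(start, end):
    fmt = "%H:%M:%S"
    return (datetime.strptime(end, fmt) - datetime.strptime(start, fmt)).total_seconds()


def uptime_percent(observations, rules=RULES):
    """observations: list of ("HH:MM:SS", state) tuples sorted by time."""
    up = down = 0.0
    for (t1, s1), (t2, s2) in zip(observations, observations[1:]):
        elapsed = seconds_between(t1, t2)
        if rules[(s1, s2)] == OK:
            up += elapsed
        else:
            down += elapsed
    return 100.0 * up / (up + down)


first = [("10:00:01", OK), ("10:00:10", OK), ("10:00:11", UNKNOWN), ("10:00:21", OK)]
second = [("10:00:01", OK), ("10:00:10", UNKNOWN), ("10:00:11", ERROR), ("10:00:21", OK)]

print(uptime_percent(first))   # 100.0
print(uptime_percent(second))  # 45.0
```

Passing a different `rules` dictionary per service is one way to make the interpretation explicit instead of implicit.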

We have not discussed the condition where the downtime monitor’s sleep period overlaps with our actual downtime: a situation where each check captures only a fraction of a flapping status. The resulting uptime figures are going to be heavily skewed from reality.
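This skew is easy to reproduce. A toy sketch, assuming a hypothetical service that flaps on a fixed one-minute cycle (up for 50 seconds, down for 10) and a monitor that probes once every 60 seconds:

```python
def service_is_up(t):
    """Hypothetical flapping service: up for 50 s, then down for 10 s, repeating."""
    return t % 60 < 50


def observed_uptime_percent(interval, offset, duration=3600):
    """Uptime as seen by a monitor probing every `interval` seconds, starting at `offset`."""
    probe_times = range(offset, duration, interval)
    return 100.0 * sum(service_is_up(t) for t in probe_times) / len(probe_times)


true_uptime = observed_uptime_percent(interval=1, offset=0)  # sampled every second
lucky = observed_uptime_percent(interval=60, offset=0)       # always lands in the up phase
unlucky = observed_uptime_percent(interval=60, offset=55)    # always lands in the down phase

print(f"true: {true_uptime:.1f}%, lucky monitor: {lucky}%, unlucky monitor: {unlucky}%")
```

The real figure is about 83.3%, yet the monitor reports either 100% or 0% depending only on when it happened to start.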

Conclusion

- There is not one single SLO. They are formed at layers, and the uptime SLO of one could be the error SLO of another.

- The uptime number is massively aggregated, and always approximate.

- As the uptime reaches the higher 9s, the support structure and the mindset need to shift towards proactive efforts, since waiting on an outage and then reacting to bring it up will not always work.

Last9 is a Site Reliability Engineering (SRE) Platform that removes the guesswork from improving the reliability of your distributed systems.