LogicMonitor is well-suited for monitoring servers and networks, but developers today work in environments that demand more. With microservices, Kubernetes, and high-cardinality telemetry, teams need tools that integrate into their workflow and scale with modern systems. This list covers 12 alternatives worth considering in 2025 that meet those needs.

What LogicMonitor Does Well

LogicMonitor delivers reliable infrastructure monitoring with:

- Network and server monitoring using automatic device discovery - efficient for static IPs and long-lived hosts, but less suited for orchestrated systems where pods or nodes are frequently created and destroyed.

- Cloud resource tracking across AWS, Azure, and GCP - good for tracking VM instances and managed services, though it doesn’t extend into workload-level metrics like container CPU usage or request traces.

- Synthetic checks and performance metrics for application health - useful for uptime and response time, but limited when you need distributed tracing or service-level objective (SLO) monitoring.

- Alert management with escalation policies and routing - integrates into incident workflows, but lacks the flexibility of rule-based or label-driven alerting in observability-first systems.

Its collector-based architecture scales well in traditional deployments, but because collectors require explicit configuration, it struggles with highly dynamic environments such as Kubernetes or autoscaling groups.
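To make the contrast with label-driven alerting concrete, here is a minimal sketch of first-match routing in the spirit of Prometheus Alertmanager's route tree, simplified to a flat list. The route names, labels, and receivers are hypothetical, and real Alertmanager also supports nested routes, regex matchers, and `continue`.

```python
from dataclasses import dataclass, field


@dataclass
class Route:
    """One routing rule: every matcher must equal the alert's label value."""
    receiver: str
    matchers: dict[str, str] = field(default_factory=dict)

    def matches(self, labels: dict[str, str]) -> bool:
        return all(labels.get(k) == v for k, v in self.matchers.items())


def route_alert(labels: dict[str, str], routes: list[Route], default: str) -> str:
    """Return the receiver of the first matching route, else the default."""
    for route in routes:
        if route.matches(labels):
            return route.receiver
    return default


# Hypothetical routing table: more specific rules come first.
ROUTES = [
    Route("payments-pager", {"team": "payments", "severity": "critical"}),
    Route("payments-slack", {"team": "payments"}),
    Route("platform-pager", {"severity": "critical"}),
]
```

Because routing keys off labels attached when the alert is emitted, a freshly scheduled pod carrying `team=payments` is routed correctly without anyone registering it with a collector first.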

LogicMonitor’s Pricing

LogicMonitor’s pricing starts at $22 per resource per month for infrastructure monitoring. This model is predictable for static servers, but costs rise sharply for ephemeral workloads, since each container, pod, or transient node counts as a billable resource.

Log analysis adds $4–14 per GB, which becomes costly in environments with high log throughput, such as systems emitting detailed request/response logs or verbose debugging data.
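A back-of-the-envelope estimate shows how churn inflates a per-resource bill. This sketch assumes every distinct resource seen during a month is billed at the $22 list price quoted above and applies the $4 to $14 per GB log range; the workload figures are hypothetical, and real contracts may count resources differently (for example, by peak concurrency).

```python
RESOURCE_PRICE_USD = 22.0           # per resource per month (list price cited above)
LOG_PRICE_RANGE_USD = (4.0, 14.0)   # per GB of logs (low, high)


def monthly_estimate(static_hosts: int, new_pods_per_day: int, days: int,
                     log_gb: float) -> tuple[float, float]:
    """Return a (low, high) monthly cost estimate in USD.

    Assumption: every pod that exists at any point in the month counts
    as one billable resource, so pod churn multiplies the resource count.
    """
    resources = static_hosts + new_pods_per_day * days
    base = resources * RESOURCE_PRICE_USD
    low = base + log_gb * LOG_PRICE_RANGE_USD[0]
    high = base + log_gb * LOG_PRICE_RANGE_USD[1]
    return low, high
```

With 50 static hosts the resource line is $1,100; under this counting assumption, letting 200 short-lived pods start each day over 30 days pushes it to $133,100 before any log charges.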

12 LogicMonitor Alternatives Worth Considering

1. Last9: Built for High-Cardinality Data

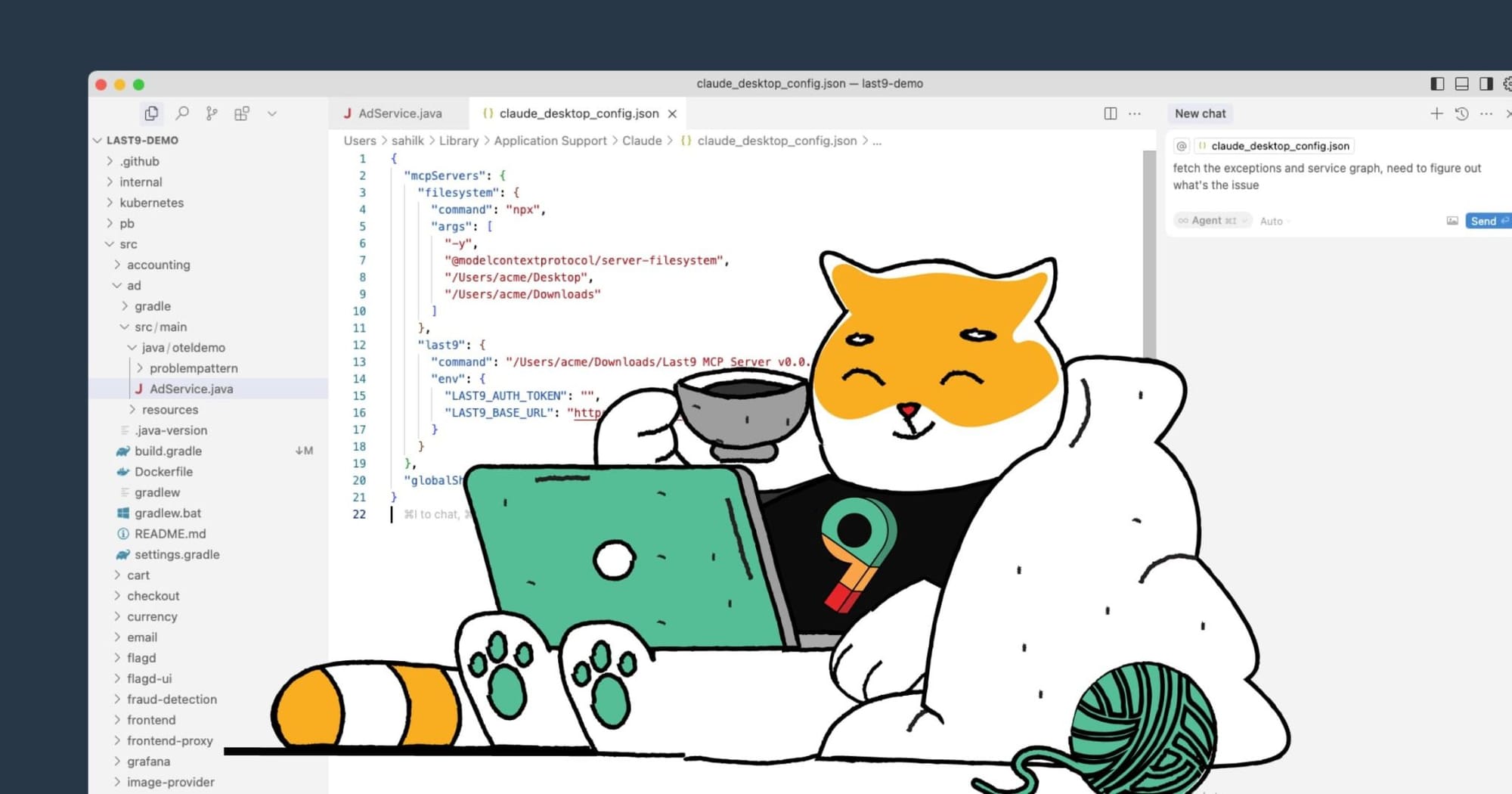

If you’ve ever struggled with high-cardinality metrics or watched observability bills spiral out of control, this is exactly where Last9 helps. We built the platform so your team can triage issues faster and ship changes without worrying about losing visibility.

Here’s how we make that possible:

- Unified telemetry platform - metrics, logs, traces, APM, and RUM in one place, so you don’t have to stitch multiple tools together.

- AI-powered control plane - track and control costs in real time, instead of dealing with post-ingestion billing shocks.

- High-cardinality support - more than 20M unique series per metric, with no sampling, so every detail stays intact.

- Cost efficiency - teams running at scale cut costs by up to 70% compared to incumbent vendors, while keeping query performance predictable.

Companies such as Probo, Games 24*7, CleverTap, and Replit trust Last9 to run complex distributed systems. Because telemetry is stored in full detail, you can track user sessions, API performance, or feature usage across multiple dimensions without running into slow queries or surprise bills.
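Cardinality is multiplicative: the number of possible series for a metric is the product of the distinct values of each label. A quick calculation with hypothetical label counts shows how fast this reaches the millions.

```python
from math import prod


def max_series(label_values: dict[str, int]) -> int:
    """Upper bound on unique series: product of distinct values per label."""
    return prod(label_values.values())


def observed_series(samples: list[dict[str, str]]) -> int:
    """Count unique label combinations actually seen (label order ignored)."""
    return len({tuple(sorted(s.items())) for s in samples})


# Hypothetical labels on an http_requests_total metric.
LABELS = {"endpoint": 200, "status_code": 10, "region": 5, "customer_tier": 4, "pod": 50}
```

These five labels already allow 2,000,000 series. Adding a single `customer_id` label with 10,000 values raises the bound to 20 billion, which is why per-user or per-session dimensions are where many backends start sampling or dropping labels.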

Best for: Cloud-native applications where detailed observability and cost predictability matter.

2. Grafana Cloud: Managed Prometheus Stack

Grafana Cloud delivers the full Grafana observability stack (Prometheus, Loki, Tempo, and Grafana) as a managed service. You get the flexibility of open-source tooling without the operational overhead of running it yourself.

What it offers:

- Prometheus metrics, Loki logs, and Tempo traces - integrated into a single platform, all visualized through Grafana dashboards.

- Managed scaling and storage - no need to size or maintain Prometheus clusters manually.

- Built-in alerting - extend existing Prometheus rules while routing alerts centrally across telemetry types.

This setup is especially useful if your team already uses Grafana and Prometheus. You can carry over dashboards and alert rules while letting Grafana Labs manage scaling, upgrades, and availability.
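Carrying over existing instrumentation works because everything still speaks the Prometheus data model. For reference, this stdlib-only sketch renders a counter in the Prometheus text exposition format that Prometheus-compatible scrapers read; in practice you would use an official client library such as `prometheus_client`, and the metric name and labels here are hypothetical.

```python
Labels = tuple[tuple[str, str], ...]


def _escape_help(text: str) -> str:
    """HELP text escapes only backslash and newline."""
    return text.replace("\\", "\\\\").replace("\n", "\\n")


def escape_label_value(value: str) -> str:
    """Label values escape backslash, double quote, and newline."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name: str, help_text: str, samples: dict[Labels, float]) -> str:
    """Render one counter family in Prometheus text exposition format."""
    lines = [f"# HELP {name} {_escape_help(help_text)}", f"# TYPE {name} counter"]
    for labels, value in sorted(samples.items()):
        if labels:
            label_str = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in labels)
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"
```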

Limitations: Since the backend is fully managed, you have less control over storage tuning, retention policies, and infrastructure-level optimizations. Costs can also climb as telemetry volume grows, particularly if you need long-term retention.

Best for: Teams familiar with Prometheus/Grafana who want the benefits of managed infrastructure.

3. AWS CloudWatch: Native AWS Integration

CloudWatch is AWS’s built-in monitoring and observability service, tightly integrated with the entire AWS ecosystem. Metrics are collected automatically from services like EC2, RDS, DynamoDB, S3, and Lambda, and logs can be ingested directly into CloudWatch Logs for centralized visibility.

What it offers:

- Native AWS integration - metrics for most AWS services are available automatically without installing agents; OS-level data such as EC2 memory usage and instance log files requires the CloudWatch agent.

- Custom metrics and dashboards - publish your application metrics to the same platform and build dashboards that mix them with AWS resource metrics.

- X-Ray integration - enables distributed tracing across AWS services, useful for debugging serverless and microservice-based workloads.

- Managed service - no infrastructure to provision or scale, as CloudWatch runs natively inside AWS.

CloudWatch is especially effective when your workloads are primarily AWS-based. It minimizes setup, provides built-in retention and alarms, and integrates seamlessly with other AWS services like SNS, Lambda, and EventBridge for automation.
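Publishing your own application metrics goes through the `PutMetricData` API. This sketch builds the keyword arguments for boto3's `put_metric_data` and imports boto3 only when actually sending; the namespace, metric, and dimension names are hypothetical. Each unique combination of dimensions is stored as a separate custom metric.

```python
from datetime import datetime, timezone


def build_metric_kwargs(namespace: str, name: str, value: float,
                        dimensions: dict[str, str], unit: str = "Count",
                        high_resolution: bool = False) -> dict:
    """Build kwargs for the boto3 CloudWatch client's put_metric_data."""
    datum = {
        "MetricName": name,
        "Dimensions": [{"Name": k, "Value": v} for k, v in dimensions.items()],
        "Timestamp": datetime.now(timezone.utc),
        "Value": value,
        "Unit": unit,
        # 1 = high-resolution (per-second) storage, 60 = standard resolution.
        "StorageResolution": 1 if high_resolution else 60,
    }
    return {"Namespace": namespace, "MetricData": [datum]}


def publish(kwargs: dict, region: str = "us-east-1") -> None:
    """Send the metric; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without boto3 installed

    boto3.client("cloudwatch", region_name=region).put_metric_data(**kwargs)
```

In an application you would call `publish(build_metric_kwargs(...))`; keeping the builder separate makes the payload easy to unit-test without AWS access.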

Limitations:

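- Granularity and retention - EC2 basic monitoring reports at five-minute intervals (one-minute detailed monitoring is a paid option), high-resolution metrics increase API call and alarm costs, and older metric data is rolled up to coarser periods within a fixed 15-month retention window.

- Pricing model - logs are billed per GB ingested and stored, custom metrics per metric (each unique dimension combination counts separately), and X-Ray traces per trace recorded and retrieved. This can become expensive in large or high-cardinality environments.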

- Limited cross-platform scope - CloudWatch is optimized for AWS workloads; monitoring external or hybrid infrastructure often requires additional tooling.

- Querying complexity - CloudWatch Logs Insights provides querying, but can feel limited compared to dedicated log analytics tools.

Best for: Teams running predominantly on AWS and looking for a monitoring solution with deep service integration and minimal setup.

4. Zabbix: Flexible Open Source

Zabbix is an open-source monitoring platform that supports both legacy infrastructure and modern cloud-native environments. It provides a high degree of customization, making it suitable for mixed setups where you need to monitor everything from physical servers to Kubernetes clusters.

What it offers:

- Multiple data collection methods - supports agents, SNMP polling, HTTP checks, IPMI, and custom scripts for maximum flexibility.

- Template-based monitoring - reusable templates allow consistent monitoring setups across similar applications or services.

- Scalable architecture - supports distributed monitoring through proxies, useful for large or geographically spread infrastructures.

- Visualization and alerting - built-in dashboards, triggers, and notification routing provide a complete monitoring workflow.

Zabbix works well when you need flexibility to integrate different environments under a single interface. It’s particularly attractive for teams with a mix of traditional infrastructure and containerized workloads who want full control without vendor lock-in.
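For custom scripts, one common pattern is pushing values to a Zabbix trapper item. This sketch builds a sender packet following the Zabbix protocol header (`ZBXD`, a 0x01 flag byte, then little-endian data length and reserved fields) and sends it to a server or proxy on the default trapper port 10051. The host name and item key are hypothetical and must match a trapper item configured in Zabbix; the bundled `zabbix_sender` utility does the same job in production.

```python
import json
import socket
import struct


def build_sender_packet(host: str, key: str, value: str) -> bytes:
    """Encode one trapper value as a Zabbix protocol packet."""
    payload = json.dumps({
        "request": "sender data",
        "data": [{"host": host, "key": key, "value": value}],
    }).encode("utf-8")
    # Header: "ZBXD", flags=0x01, 4-byte data length, 4 reserved bytes (little-endian).
    header = struct.pack("<4sBII", b"ZBXD", 0x01, len(payload), 0)
    return header + payload


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read up to n bytes, stopping early only if the peer closes."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            break
        buf += chunk
    return buf


def send(packet: bytes, server: str = "127.0.0.1", port: int = 10051) -> dict:
    """Send the packet and decode the server's JSON reply."""
    with socket.create_connection((server, port), timeout=5) as sock:
        sock.sendall(packet)
        header = _recv_exact(sock, 13)  # the reply uses the same 13-byte header
        _, _, length, _ = struct.unpack("<4sBII", header)
        return json.loads(_recv_exact(sock, length))
```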

Limitations:

- Setup and maintenance overhead - while powerful, Zabbix requires significant configuration effort compared to managed solutions.

- Scalability challenges - large-scale deployments with millions of metrics may need careful tuning of proxies, databases, and storage layers.

- No native tracing - while it covers metrics and logs, distributed tracing isn’t a built-in capability and requires external tools.

- Learning curve - flexibility comes at the cost of complexity, and teams may spend time building and maintaining templates or custom scripts.

Best for: Organizations with a mix of traditional and cloud-native infrastructure that need deep customization and control.

5. Datadog: Comprehensive Platform

Datadog offers full-stack observability by combining infrastructure monitoring, APM, log management, and security into a single platform. The unified model makes it easier to correlate metrics, traces, and logs without switching tools.

What it offers:

- Automatic service discovery - detects applications and services, then builds dependency maps to visualize interactions.

- Application Performance Monitoring (APM) - traces requests across distributed systems, helping diagnose latency and errors.

- Integrated security monitoring - adds threat detection and compliance monitoring on top of performance data.

- Wide ecosystem of integrations - supports hundreds of services, frameworks, and cloud providers out of the box.

This makes Datadog appealing when you want to consolidate observability, tracing, and security under one platform. It’s especially useful for organizations running complex application architectures where cross-layer correlation is critical.

Limitations:

Datadog’s pricing model is usage-based across multiple dimensions:

- Hosts/containers are billed per instance, which can spike with autoscaling workloads.

- Logs are billed per GB ingested and retained, so high-throughput applications can incur significant costs.

- Custom metrics are priced separately, and in high-cardinality environments, the number of unique series can grow faster than expected.

- APM traces are sampled by default to control volume, which may limit visibility into edge cases unless you pay for higher retention.

So while Datadog provides breadth, cost predictability is a common challenge. Teams often need to fine-tune sampling, retention policies, and log pipelines just to keep bills under control.
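The custom-metric cost is easiest to see at the instrumentation layer. Datadog identifies a custom metric by its name plus its combination of tag values, so every new tag value creates a new billable series. This sketch formats DogStatsD datagrams (`name:value|type|@rate|#tags`) and sends them over UDP to an Agent on the default port 8125; the metric and tag names are hypothetical, and the official `datadog` client library is the usual choice in practice.

```python
import socket
from typing import Optional


def format_datagram(name: str, value: float, metric_type: str = "c",
                    tags: Optional[dict[str, str]] = None,
                    sample_rate: float = 1.0) -> str:
    """Build a DogStatsD datagram, e.g. 'checkout.orders:1|c|#env:prod'."""
    parts = [f"{name}:{value}", metric_type]
    if sample_rate < 1.0:
        parts.append(f"@{sample_rate}")
    if tags:
        parts.append("#" + ",".join(f"{k}:{v}" for k, v in sorted(tags.items())))
    return "|".join(parts)


def series_key(name: str, tags: dict[str, str]) -> tuple:
    """Identity used for custom-metric counting: name plus tag values."""
    return (name, tuple(sorted(tags.items())))


def send(datagram: str, host: str = "127.0.0.1", port: int = 8125) -> None:
    """Fire-and-forget UDP send to a local Datadog Agent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(datagram.encode("utf-8"), (host, port))
```

Tagging the order counter with a per-request `customer` value would turn one metric into as many custom metrics as you have customers.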

Best for: Teams that want comprehensive observability and security in a single managed platform.

6. Elastic Observability: Search-Powered Monitoring

Elastic Observability brings metrics, logs, and traces together on the Elasticsearch platform. For teams already running Elasticsearch, it can reduce operational overhead by consolidating observability and search into a single system.

What it offers:

- Log analysis at scale - optimized for ingesting and querying large volumes of unstructured logs.

- APM agents - provide automatic instrumentation for popular frameworks, capturing traces and performance data with minimal setup.

- Anomaly detection - built-in machine learning jobs detect unusual patterns across metrics, logs, or traces.

- Unified data platform - integrates with Kibana for visualization and Elastic Security for threat detection, enabling a broader observability and security stack.

Elastic works best when your team is already invested in the Elastic ecosystem. It allows you to reuse existing infrastructure, knowledge, and integrations while extending observability coverage.

Limitations:

- Resource requirements - Elasticsearch clusters can grow quickly with observability data, requiring careful planning for storage, sharding, and scaling.

- Operational overhead - self-managing Elasticsearch involves tuning JVM heap sizes, shard allocations, and index lifecycles, which adds complexity.

- Licensing costs - although the core is open source, enterprise features like advanced security, machine learning, and longer retention require Elastic’s commercial licensing.

- Query latency - while search is powerful, high-cardinality queries and aggregations may become slow under heavy loads compared to purpose-built time series databases.
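To make the aggregation point concrete, here is the kind of request an observability query turns into: a filtered search over recent error logs with a `terms` aggregation per service. The sketch builds the Query DSL body and POSTs it to a `_search` endpoint with the standard library; the `logs-*` index pattern, ECS-style field names (`log.level`, `service.name`), and localhost URL are assumptions. Pointing the same aggregation at a high-cardinality field, such as a request ID, is where response times tend to degrade.

```python
import json
import urllib.request


def error_breakdown_query(minutes: int = 15, top_n: int = 10) -> dict:
    """Query DSL: error logs from the last N minutes, counted per service."""
    return {
        "size": 0,  # return aggregations only, no individual hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"log.level": "error"}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ]
            }
        },
        "aggs": {
            "by_service": {"terms": {"field": "service.name", "size": top_n}}
        },
    }


def search(body: dict, url: str = "http://localhost:9200/logs-*/_search") -> dict:
    """POST the query to Elasticsearch and return the decoded response."""
    req = urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```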

Best for: Teams already using Elasticsearch that want to consolidate observability into a unified data platform.

7. Dynatrace: AI-Powered Analysis

Dynatrace emphasizes automated observability with its Davis AI engine, which establishes baselines for normal system behavior and detects anomalies in real time. It automatically correlates events across infrastructure, applications, and services to identify likely root causes without requiring manual correlation.

What it offers:

- Davis AI engine - continuously analyzes telemetry to detect anomalies and suggest probable root causes.

- PurePath tracing - captures every transaction end-to-end, giving granular visibility into latency, dependencies, and bottlenecks.

- Full-stack coverage - spans infrastructure monitoring, APM, logs, digital experience monitoring (DEM), and security.

- Automation focus - reduces manual setup by automatically discovering applications, services, and dependencies.

Dynatrace is particularly effective in large, distributed, or complex environments where the volume of telemetry data makes manual troubleshooting impractical.
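Davis's algorithms are proprietary, but the underlying idea of learning a baseline and flagging deviations can be illustrated with a simple rolling z-score detector. This is a generic technique shown for intuition only, not how Dynatrace implements it; the window size and threshold are arbitrary assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingBaseline:
    """Flag values more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.values: deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        anomalous = False
        if len(self.values) == self.values.maxlen:  # only judge once the window is full
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma == 0:
                anomalous = value != mu  # flat baseline: any change stands out
            else:
                anomalous = abs(value - mu) / sigma > self.threshold
        # Naive choice: anomalies are also folded into the baseline.
        self.values.append(value)
        return anomalous
```

Production systems add seasonality (hour-of-day, day-of-week baselines), exclude anomalies from the baseline, and correlate signals across services; that correlation is what the text credits Davis with automating.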

Limitations:

- Proprietary approach - Dynatrace relies primarily on its own OneAgent and data model, which limits flexibility compared with pipelines built entirely on open standards like OpenTelemetry.

- Cost structure - pricing scales by host units, DEM sessions, and data retention, which can become expensive in high-scale or high-cardinality systems.

- Learning curve - the AI-driven model surfaces correlations and root causes automatically, but engineering teams often need time to learn when to trust those insights.

- Less portability - tight integration with Dynatrace’s ecosystem means migration or exporting data to other platforms can be challenging.

Best for: Complex enterprise environments needing automated root cause detection and detailed transaction tracing.

8. VictoriaMetrics: Prometheus at Scale

VictoriaMetrics is a time-series database designed to extend Prometheus to high-scale environments. It focuses on efficient storage and query performance, making it a popular choice for teams running large Prometheus deployments.

What it offers:

- Prometheus compatibility - supports the remote write protocol, so you can plug it into existing Prometheus setups without changing exporters or dashboards.

- Efficient storage - uses advanced compression techniques to store billions of time series while keeping resource usage predictable.

- High availability - clustering support provides redundancy and horizontal scalability with less complexity than other long-term storage options.

- Performance focus - optimized for fast queries across large datasets, even as telemetry volumes grow.

VictoriaMetrics works best when Prometheus is already central to your observability stack, but standard Prometheus storage is hitting scale or cost limits.
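Because VictoriaMetrics exposes a Prometheus-compatible query API, existing tooling can query it over plain HTTP. This sketch calls `/api/v1/query` on a single-node instance (default port 8428) and flattens the instant-vector result using the standard Prometheus JSON shape; the URL and example expression are assumptions.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(expr: str, base: str = "http://localhost:8428") -> str:
    """Instant-query URL against the Prometheus-compatible API."""
    return f"{base}/api/v1/query?{urllib.parse.urlencode({'query': expr})}"


def parse_instant_vector(payload: dict) -> list[tuple[dict, float]]:
    """Turn a Prometheus-style response into (labels, value) pairs."""
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    return [(r["metric"], float(r["value"][1])) for r in payload["data"]["result"]]


def instant_query(expr: str, base: str = "http://localhost:8428") -> list[tuple[dict, float]]:
    """Run an instant query and return (labels, value) pairs."""
    with urllib.request.urlopen(build_query_url(expr, base), timeout=10) as resp:
        return parse_instant_vector(json.load(resp))
```

Since only the base URL changes, the same helper can point at a plain Prometheus server, which is what makes side-by-side comparisons during a migration straightforward.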

Limitations:

- Narrow scope - focused on metrics; logs and traces require additional systems, which may fragment observability.

- Operational overhead - while simpler than some alternatives, managing large clusters still requires tuning storage, retention policies, and scaling parameters.

- Ecosystem fit - works well with Prometheus-native tools but lacks some of the broader ecosystem integrations offered by full observability platforms.

- Query language constraints - relies on MetricsQL, a PromQL-compatible language that is powerful for metrics but less expressive than query engines that span multiple telemetry types.

Best for: High-scale Prometheus environments that need better storage efficiency and faster queries without abandoning the Prometheus ecosystem.

9. Lightstep (by ServiceNow): Tracing-First, Developer-Friendly

Lightstep is built around distributed tracing, making it a strong fit for debugging microservices and event-driven systems. Originally created by the team behind OpenTracing, it emphasizes deep visibility into how requests flow across services.

What it offers:

- Tracing at scale - designed to handle millions of spans per second without sampling, useful for highly distributed systems.

- Change Intelligence - correlates recent code or infrastructure changes with performance regressions to speed up root cause analysis.

- OpenTelemetry-native - vendor-neutral instrumentation ensures portability and avoids lock-in.

- Developer-centric workflows - lightweight UI and APIs geared toward debugging, not just dashboards.

Lightstep works best for developer teams who want tracing to be the foundation of their observability practice, while still having access to metrics and logs where needed.
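Tracing-first tools depend on trace context being propagated between services. OpenTelemetry's default propagator uses the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`); this stdlib-only sketch generates and parses it to show what each hop forwards. In a real service the OpenTelemetry SDK handles this, and the function names here are illustrative.

```python
import re
import secrets
from typing import Optional

# Version 00 layout: 2-hex version, 32-hex trace ID, 16-hex parent span ID, 2-hex flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace: random 16-byte trace ID and 8-byte span ID."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def parse_traceparent(header: str) -> Optional[dict]:
    """Return the trace fields, or None for malformed or all-zero IDs."""
    m = TRACEPARENT_RE.match(header.strip())
    if not m:
        return None
    trace_id, parent_id, flags = m.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None
    return {"trace_id": trace_id, "parent_id": parent_id,
            "sampled": (int(flags, 16) & 0x01) == 1}


def child_traceparent(incoming: str) -> str:
    """Continue the trace downstream: same trace ID, fresh span ID."""
    ctx = parse_traceparent(incoming)
    if ctx is None:
        return new_traceparent()  # invalid context: start a new trace
    flags = "01" if ctx["sampled"] else "00"
    return f"00-{ctx['trace_id']}-{secrets.token_hex(8)}-{flags}"
```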

Limitations:

- Tracing-first focus - while metrics and logs are supported, they’re not as mature or feature-rich as those in full observability suites like Datadog or Elastic.

- SaaS-only - requires sending telemetry to Lightstep’s managed backend, which may not work for strict data residency requirements.

- Learning curve for tracing - teams new to distributed tracing often need to adjust workflows to fully benefit from span-level data.

Best for: Teams building microservices or event-driven systems that need tracing as the backbone of their observability strategy.

10. Nagios: Proven Infrastructure Monitoring

Nagios is one of the earliest monitoring tools, widely adopted for traditional IT environments. It focuses on availability and health checks, making it reliable for monitoring servers, network devices, and critical applications.

What it offers:

- Plugin-driven monitoring - a large ecosystem of community and commercial plugins supports almost any service or protocol.

- Host and service checks - lightweight checks for system availability, CPU, memory, disk usage, and application endpoints.

- Alerting and escalation - flexible rules for notification and incident routing.

- Mature ecosystem - decades of adoption with strong community support and documentation.

Nagios works well for teams that want straightforward infrastructure monitoring without the complexity of high-volume telemetry pipelines.
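Nagios plugins are ordinary executables that follow a small contract: exit code 0, 1, 2, or 3 for OK, WARNING, CRITICAL, or UNKNOWN, one line of status text, and optional performance data after a `|`. A minimal disk-usage check in Python might look like this; the mount point and thresholds are example values.

```python
import shutil

OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
STATUS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def evaluate(used_pct: float, warn: float, crit: float) -> int:
    """Map a usage percentage to a Nagios exit code."""
    if used_pct >= crit:
        return CRITICAL
    if used_pct >= warn:
        return WARNING
    return OK


def check_disk(path: str = "/", warn: float = 80.0, crit: float = 90.0) -> tuple[int, str]:
    """Return (exit_code, output_line) for disk usage at `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_pct = usage.used / usage.total * 100
    code = evaluate(used_pct, warn, crit)
    # Perfdata format: label=value[UOM];warn;crit;min;max
    perf = f"used_pct={used_pct:.1f}%;{warn};{crit};0;100"
    return code, f"DISK {STATUS[code]} - {used_pct:.1f}% used on {path} | {perf}"


def main() -> int:
    code, output = check_disk()
    print(output)
    return code
```

To run it as a plugin, end the script with `raise SystemExit(main())` and reference it from a Nagios `command` definition; Icinga can run the same plugin unchanged.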

Limitations:

- Not built for modern observability - lacks native support for logs, traces, or high-cardinality metrics.

- Visualization gaps - dashboards are basic compared to Grafana or Datadog.

- Manual configuration - scaling requires heavy use of configuration files and plugins.

- Cloud-native mismatch - better suited for static infrastructure than ephemeral workloads like containers.

Best for: Traditional infrastructure monitoring where availability checks and alerts are the priority.

11. Icinga: Modernized Nagios Fork

Icinga began as a fork of Nagios but has since evolved with modern features. It retains Nagios compatibility while adding APIs, better dashboards, and a more scalable architecture.

What it offers:

- REST APIs and automation - allows monitoring setups to integrate with CI/CD pipelines and configuration management tools.

- Improved UI - richer dashboards and visualization compared to Nagios’ basic interface.

- Scalable architecture - supports distributed monitoring setups for large organizations.

- Nagios compatibility - can use many of the same plugins and checks.

Icinga is a solid choice if you like Nagios’ plugin-driven flexibility but need a more modern, API-first monitoring solution.
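As an example of the API-first workflow, an external job such as a CI pipeline step can submit a passive check result through the Icinga 2 REST API's `process-check-result` action. This sketch builds the request with the standard library; the API URL, credentials, and host and service names are hypothetical, and the API user needs permission for that action.

```python
import base64
import json
import urllib.request


def build_check_result_request(host: str, service: str, exit_status: int,
                               output: str,
                               api_url: str = "https://localhost:5665",
                               user: str = "ci-reporter",
                               password: str = "change-me") -> urllib.request.Request:
    """Build (but do not send) a POST to /v1/actions/process-check-result."""
    body = {
        "type": "Service",
        "filter": f'host.name=="{host}" && service.name=="{service}"',
        "exit_status": exit_status,
        "plugin_output": output,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{api_url}/v1/actions/process-check-result",
        data=json.dumps(body).encode("utf-8"),
        headers={"Accept": "application/json",
                 "Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
```

Send it with `urllib.request.urlopen(req, context=ssl.create_default_context(cafile=...))`, pointing `cafile` at the CA certificate your Icinga instance uses.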

Limitations:

- Check-based focus - like Nagios, it’s built around host/service checks rather than full observability telemetry.

- Requires integrations - logs, metrics, and traces need external systems for complete observability.

- Learning curve - setup and scaling require significant operational knowledge.

- Limited cloud-native adoption - designed primarily for static or hybrid infrastructure.

Best for: Teams that want Nagios-style monitoring with modern APIs, dashboards, and distributed scalability.

12. Netdata: Real-Time Infrastructure Monitoring

Netdata is an open-source monitoring agent designed for real-time visibility. It automatically discovers and collects high-resolution metrics from systems, containers, and applications with minimal setup.

What it offers:

- 1-second resolution metrics - lightweight agents collect and stream granular data in real time.

- Auto-detection - detects system services, containers, and applications with no manual configuration.

- Built-in anomaly detection - statistical models highlight unusual behavior without external ML tooling.

- Rich interactive dashboards - out-of-the-box visualizations with drill-down capabilities.

Netdata works best for teams that want instant, detailed infrastructure metrics without the overhead of setting up a large observability stack.
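Netdata agents also expose their metrics over a local REST API (port 19999 by default), which is handy for scripting against the per-second data. This sketch queries the v1 `data` endpoint for the last 60 seconds of a chart. The chart name `system.cpu` is a common default, but the response layout assumed here (a `labels` list plus `data` rows when `format=json`) should be checked against your agent version.

```python
import json
import urllib.parse
import urllib.request


def build_data_url(chart: str = "system.cpu", seconds: int = 60,
                   base: str = "http://localhost:19999") -> str:
    """URL for the last `seconds` of a chart, one point per second."""
    params = {"chart": chart, "after": -seconds, "points": seconds, "format": "json"}
    return f"{base}/api/v1/data?{urllib.parse.urlencode(params)}"


def rows_from_payload(payload: dict) -> list[dict]:
    """Zip each data row with the column labels (assumed layout, 'time' first)."""
    return [dict(zip(payload["labels"], row)) for row in payload["data"]]


def fetch_rows(chart: str = "system.cpu", seconds: int = 60,
               base: str = "http://localhost:19999") -> list[dict]:
    """Fetch a chart window from a local agent and return labeled rows."""
    with urllib.request.urlopen(build_data_url(chart, seconds, base), timeout=5) as resp:
        return rows_from_payload(json.load(resp))
```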

Limitations:

- Narrower scope - optimized for real-time metrics, but lacks deep log and trace capabilities.

- Data retention - not built for long-term storage; exporting to external systems is often required.

- Scaling - while agents are efficient, managing many across large fleets requires orchestration.

- Limited enterprise features - doesn’t offer the governance, multi-tenancy, or compliance controls of commercial platforms.

Best for: Teams needing fast, detailed infrastructure visibility with minimal setup effort.

Conclusion

The right LogicMonitor alternative depends on your environment and monitoring priorities. For cloud-native workloads, Last9 provides predictable costs and supports high-cardinality telemetry without sampling, which is critical for microservices, Kubernetes, and event-driven systems.

A practical way to evaluate options is to define your key use cases (tracking container-level metrics, analyzing application traces, or monitoring infrastructure uptime) and then run real workloads on a couple of platforms.

You can start using Last9 for free, or book some time with our team to see how it fits into your observability setup.

FAQs

Q: What is the best alternative to LogicMonitor?

A: It depends on your environment. For cloud-native applications, Last9 is purpose-built for high-cardinality telemetry without sampling. For teams already invested in Prometheus/Grafana, Grafana Cloud offers a managed option. Traditional setups may prefer Zabbix for flexibility or AWS CloudWatch for native AWS integration.

Q: Who does LogicMonitor compete with?

A: LogicMonitor competes with infrastructure monitoring tools like Zabbix and PRTG, as well as observability platforms such as Last9, Datadog, Dynatrace, and Elastic Observability. In AWS-heavy environments, CloudWatch is also a common alternative.

Q: Why do teams look for LogicMonitor alternatives?

A: The main reasons are better support for cloud-native and dynamic workloads, more cost-efficient handling of high-cardinality data, deeper application-level insights, and tighter integration with developer workflows and CI/CD pipelines.

Q: How does LogicMonitor compare with Datadog or Dynatrace?

A: Datadog and Dynatrace are full-stack SaaS platforms with broader coverage (APM, logs, security). LogicMonitor is stronger in infrastructure monitoring but less optimized for high-cardinality telemetry.

Q: What features should I look for in a LogicMonitor alternative?

A: Important features include:

- Automatic service discovery for dynamic environments.

- Support for custom metrics and application telemetry.

- Correlation across metrics, logs, and traces for faster debugging.

- Flexible, rule-based alerting that avoids noise.

- APIs and integrations for automation and incident workflows.

- Cost visibility and control to avoid billing surprises.

Q: What are some strong open-source alternatives?

A: Zabbix is a mature option for mixed environments, while Netdata provides real-time infrastructure metrics with minimal setup. Elastic Observability is useful for teams already invested in the Elastic stack. These tools require more operational effort than SaaS platforms but offer flexibility and cost control.

Q: What are some popular commercial alternatives?

A: Last9 for high-cardinality observability, Grafana Cloud for Prometheus/Grafana users, Datadog for all-in-one SaaS monitoring, Dynatrace for AI-driven root cause analysis, and Lightstep for tracing-first workflows. Each addresses different priorities depending on scale, data volume, and developer needs.