You can cut your log costs by removing repetitive, low-value logs early and keeping only the parts that genuinely help you understand issues.

Modern systems generate logs far faster than you expect. Even when your workload stays stable, infrastructure components, retries, and background workers continue producing a steady stream of repeated entries. If you’re running Kubernetes, distributed queues, or microservices, you’ve likely seen log volume climb while your actual visibility stays the same.

Why Log Volume Grows Even When Traffic Doesn’t

Log growth is usually the result of multiple system-level behaviours happening continuously across your stack. Each component contributes a small amount of repetitive output, and the combined effect leads to steady volume increases even when request traffic stays flat.

1. High-frequency system components

Infrastructure services emit logs on fixed intervals or lifecycle events:

- kubelet writes liveness and readiness probe results every few seconds.

- Container runtimes log pull, start, stop, and restart events.

- Load balancers, API gateways, and service meshes generate structured access logs for every inbound and outbound request.

Example: readiness probe repetition

    Successfully probed container readiness
    Successfully probed container readiness
    Successfully probed container readiness

With 200 pods, each running 5 containers, and probes firing every 5 seconds, you generate about 12,000 identical entries per minute (200 × 5 × 12).
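To check that kind of estimate against your own cluster, here is a minimal Python sketch. It assumes every probe result is logged once and that each container has a single probe; the function name is illustrative, not part of any tool.

```python
def probe_entries_per_minute(pods: int, containers_per_pod: int, probe_interval_s: float) -> float:
    """Log lines per minute, assuming one logged result per probe per container."""
    probes_per_container_per_minute = 60 / probe_interval_s
    return pods * containers_per_pod * probes_per_container_per_minute


per_minute = probe_entries_per_minute(pods=200, containers_per_pod=5, probe_interval_s=5)
print(per_minute)            # 12000.0
print(per_minute * 60 * 24)  # 17280000.0 lines per day
```

If you run both liveness and readiness probes, the real number is roughly double.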

2. Application-level repetition

Application components also produce repeated patterns:

- Retry loops (attempt=1, attempt=2, ...)

- Polling loops that emit a log line on each iteration

- DEBUG or INFO logs left enabled in production

- Background workers logging “processed item” messages for every event in a queue

Example:

    INFO: processing job queue
    INFO: processing job queue
    INFO: processing job queue

3. Access and audit logs

Access logs and audit logs often follow a fixed schema:

- CDN logs

- Ingress controller logs

- Proxy logs

- Firewall logs

- Identity provider audit logs

If your throughput is stable, the log structure stays identical even as the total count grows.

4. Default pipelines collect everything

Many teams use a simple, default ingestion path:

- Fluent Bit → Elasticsearch

- Vector → Loki

- Filebeat → OpenSearch

These pipelines operate in “collect-and-forward” mode with minimal filtering. It’s a fast way to get logging in place, but as volume grows 30–50% year over year, the cost surfaces quickly.

What Makes Log Data Expensive

When your log volume grows for the reasons above, the impact shows up directly in your bill. Logging systems don’t just charge you for the raw bytes; you pay for every stage your data moves through.

Where the cost comes from

Most platforms price across several layers:

- Ingest - every GB shipped from agents or pipelines.

- Indexing - CPU-heavy parsing and field-level indexing, especially for JSON logs.

- Retention - keeping logs hot and searchable for 30–90 days.

- Search - compute required to query large indexes during investigations.

As each of these layers scales with volume, even small increases in daily log output can create noticeable cost jumps.

For example, consider a pipeline that produces 200 GB/day:

- You pay to ingest all 200 GB.

- The platform indexes every field in those logs.

- A 30-day hot retention window becomes 6 TB of searchable data.

- During incidents, queries run across large indexes, increasing compute consumption.

High-cardinality fields such as session_id, customer_id, or trace_id multiply the index size and query cost further.
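The way those layers scale with volume can be sketched in a few lines of Python. The per-GB prices below are made-up placeholders, not any vendor's rates, and search cost is left out because it depends on how you query rather than on daily volume alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-GB prices; replace with your vendor's actual rates."""
    ingest_per_gb: float
    index_per_gb: float
    hot_storage_per_gb_month: float


def hot_footprint_gb(daily_gb: float, retention_days: int) -> float:
    """Searchable data held at steady state."""
    return daily_gb * retention_days


def monthly_cost(daily_gb: float, retention_days: int, pricing: Pricing, days: int = 30) -> dict:
    """Break a month's bill into the volume-driven layers."""
    shipped_gb = daily_gb * days
    return {
        "ingest": shipped_gb * pricing.ingest_per_gb,
        "indexing": shipped_gb * pricing.index_per_gb,
        "retention": hot_footprint_gb(daily_gb, retention_days) * pricing.hot_storage_per_gb_month,
    }


# Placeholder numbers for illustration only.
pricing = Pricing(ingest_per_gb=0.10, index_per_gb=0.25, hot_storage_per_gb_month=0.03)
print(hot_footprint_gb(200, 30))  # 6000 (GB, i.e. 6 TB)
print(monthly_cost(200, 30, pricing))
```

Because every layer is a multiple of daily volume, cutting volume at the source reduces all of them at once.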

How to Reduce Log Data Costs

Below are practical techniques you can use to bring log volume under control while keeping the signals you rely on during incidents:

1. Filter Low-Value Logs at the Source

Filtering noise before it enters your pipeline gives you the biggest and most predictable reduction. You’re removing data you never needed in the first place.

You can start by looking for patterns that rarely change in production: health-check endpoints, liveness/readiness probes, polling loops, and verbose DEBUG logs.

To give you a sense of how this looks in practice, here are two patterns you can adopt:

Filter Kubernetes readiness probe logs using Fluent Bit:

    [FILTER]
        Name    grep
        Match   kube.*
        Exclude message "readiness probe"

Control application verbosity using environment variables:

    export LOG_LEVEL=warn

Both approaches stop repetitive entries before they hit your backend, which means you avoid ingest, indexing, and search costs entirely.
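If you would rather filter inside the application, the same two ideas can be sketched with Python's standard logging module. The noise patterns and the logger name "app" are assumptions for illustration; adjust them to whatever your services actually emit.

```python
import logging
import os
import re

# Assumed noise patterns; extend to match your own health-check and probe lines.
NOISE = re.compile(r"readiness probe|probed container readiness|/healthz", re.IGNORECASE)

LEVELS = {
    "debug": logging.DEBUG,
    "info": logging.INFO,
    "warn": logging.WARNING,
    "warning": logging.WARNING,
    "error": logging.ERROR,
}


class DropNoise(logging.Filter):
    """Reject records whose message matches a known low-value pattern."""

    def filter(self, record: logging.LogRecord) -> bool:
        return NOISE.search(record.getMessage()) is None


def configure_logging() -> logging.Logger:
    """Read LOG_LEVEL from the environment (default: warn) and attach the noise filter."""
    level = LEVELS.get(os.environ.get("LOG_LEVEL", "warn").lower(), logging.WARNING)
    logger = logging.getLogger("app")  # hypothetical logger name
    logger.setLevel(level)
    handler = logging.StreamHandler()
    handler.addFilter(DropNoise())
    logger.addHandler(handler)
    return logger


logger = configure_logging()
logger.warning("Successfully probed container readiness")  # dropped by the filter
logger.warning("db timeout")                               # emitted
```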

2. Collapse or Deduplicate Repeated Logs

Many logs repeat the same message with identical metadata. Instead of storing thousands of copies, you can collapse them into a single line with a counter.

Here’s what that looks like with Vector’s reduce transform:

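    [transforms.collapse]
    type = "reduce"
    inputs = ["with_count"]   # placeholder: an upstream remap that sets count = 1 on each event
    group_by = ["message"]
    expire_after_ms = 10000   # flush a group after 10 s without a new matching event

    [transforms.collapse.merge_strategies]
    count = "sum"             # add up the per-event count field

So instead of storing: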

    ERROR: db timeout
    ERROR: db timeout
    ERROR: db timeout

You store a single structured record:

    { "message": "ERROR: db timeout", "count": 3 }

You still preserve frequency information, but at a fraction of the storage and indexing cost.
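The collapse itself is simple to reason about. As a rough Python sketch (not how Vector implements it), grouping one batch of lines by message and counting them looks like this:

```python
def collapse(lines):
    """Collapse identical messages in one batch into records with a count."""
    counts = {}
    for line in lines:
        counts[line] = counts.get(line, 0) + 1
    # dicts keep insertion order, so records appear in first-seen order
    return [{"message": message, "count": count} for message, count in counts.items()]


print(collapse(["ERROR: db timeout"] * 3))
# [{'message': 'ERROR: db timeout', 'count': 3}]
```

In a real pipeline the batch is a time window, so you also decide how long to wait before flushing each group.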

3. Apply Log Sampling (Where Safe)

Some logs fire so frequently, and with such repetitive structure, that sampling becomes an efficient way to retain the trend without storing every occurrence.

This applies to patterns like:

- repeated warnings

- polling loops

- duplicate security logs with identical payloads

For example, here’s how you’d configure Vector to keep 5% of a repetitive log stream:

    [transforms.sample]
    type = "sample"
    rate = 20   # rate is expressed as 1/N: keep 1 in every 20 events (5%)

You still see behaviour changes and spikes, but without carrying the cost of indexing every line.

(Errors, security events, and transaction paths should never be sampled.)
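One way to make that rule hard to violate is to put the exemption inside the sampler itself. The Python sketch below is an illustration under assumptions: the level and category field names, and the protected values, are hypothetical and should be mapped to your own schema.

```python
PROTECTED_LEVELS = {"ERROR", "CRITICAL"}
PROTECTED_CATEGORIES = {"security", "transaction"}  # hypothetical "category" field


class OneInNSampler:
    """Keep every protected record, and 1 in n of everything else."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("n must be >= 1")
        self.n = n
        self._seen = 0

    def keep(self, record: dict) -> bool:
        if str(record.get("level", "")).upper() in PROTECTED_LEVELS:
            return True
        if record.get("category") in PROTECTED_CATEGORIES:
            return True
        self._seen += 1
        # keep the 1st, (n+1)th, (2n+1)th ... sampled record
        return (self._seen - 1) % self.n == 0


sampler = OneInNSampler(20)
warnings = [{"level": "WARN", "message": "rate_limit_exceeded"}] * 100
print(sum(sampler.keep(r) for r in warnings))  # 5
```

A counter-based sampler is deterministic, which makes it easy to test; a random sampler gives the same average rate without per-stream state.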

4. Trim Unused Fields and Reduce Payload Size

Structured logs often contain fields that never change or are never queried. Removing them shrinks your indexing footprint significantly.

A common case is HTTP metadata or container fields that appear on every log line but add no debugging value in search.

Here’s how you’d strip those fields using the OpenTelemetry Collector:

    processors:
      attributes:
        actions:
          - key: http.user_agent
            action: delete
          - key: container.id
            action: delete

You reduce both storage and query overhead while keeping the parts of the payload you actually rely on.
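The same trimming can be done in application code before a record is serialized. A minimal Python sketch, assuming flat dictionary records and the two field names used above:

```python
import json

DROP_FIELDS = frozenset({"http.user_agent", "container.id"})


def trim(record: dict, drop=DROP_FIELDS) -> dict:
    """Return a copy of the record without the fields nobody queries."""
    return {key: value for key, value in record.items() if key not in drop}


record = {
    "message": "db timeout",
    "level": "error",
    "http.user_agent": "Mozilla/5.0 (X11; Linux x86_64)",
    "container.id": "3f4e9a1c0b7d",
}
trimmed = trim(record)
print(trimmed)  # {'message': 'db timeout', 'level': 'error'}
print(len(json.dumps(record)) - len(json.dumps(trimmed)), "bytes saved on this line")
```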

5. Tier Logs by Purpose (Hot vs. Cold)

Not all logs need full indexing. You can route them to different storage tiers depending on how you use them.

| Log Type | Storage Tier | Purpose |

|---|---|---|

| Errors, warnings | Hot, indexed | Fast troubleshooting |

| Debug logs | Cold object storage (S3/GCS) | Occasional deep dives |

| Infra & audit logs | Cold | Compliance / long-term reference |

| High-volume access logs | Hot for 24h → cold | Short-term analysis |

A simple routing rule in Fluent Bit might look like:

    [OUTPUT]
        Name   s3
        Match  access.*
        bucket my-cold-logs

This keeps recent logs queryable, pushes older or low-value logs to cheaper storage, and keeps your index lean.
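The table above can also be written down as an explicit routing function, which is handy for testing rules before you encode them in an agent. This Python sketch assumes each record carries a level and a type field (both hypothetical names), and the fallback tier for unclassified logs is an assumption, not something the table specifies.

```python
def storage_tier(record: dict) -> str:
    """Map a log record to a storage tier, following the table above."""
    level = str(record.get("level", "")).upper()
    kind = record.get("type", "")
    if level in {"ERROR", "WARN", "WARNING"}:
        return "hot"                # fast troubleshooting
    if level == "DEBUG":
        return "cold"               # occasional deep dives
    if kind in {"infra", "audit"}:
        return "cold"               # compliance / long-term reference
    if kind == "access":
        return "hot-24h-then-cold"  # short-term analysis
    return "hot"                    # assumed default for anything unclassified


print(storage_tier({"level": "error", "type": "app"}))    # hot
print(storage_tier({"level": "info", "type": "access"}))  # hot-24h-then-cold
```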

6. Use an Observability Pipeline to Enforce Rules Consistently

Once you have more than a handful of services, maintaining per-service log rules becomes difficult. An observability pipeline enforces consistency and prevents the drift that happens when each service configures logging separately.

A pipeline can:

- Apply the same filtering rules everywhere

- Enrich logs with service metadata

- Route logs based on type or volume

- Collapse duplicate patterns

- Push older data to cold storage

- Attach trace context for correlation

Here’s a typical OpenTelemetry Collector setup that does this:

    service:
      pipelines:
        logs:
          receivers: [otlp, filelog]
          # filter first so dropped logs are never batched; batch last
          processors: [filter, attributes, batch]
          # the contrib S3 exporter is named awss3
          exporters: [loki, awss3]

If you're using Last9, our platform centralizes filtering and routing across all your services and stores high-cardinality logs without index-based cost penalties. You keep full fidelity, and you avoid the per-field indexing blowups that make logs expensive in traditional systems.

Production Notes & Best Practices

- Keep logging levels environment-specific (DEBUG in dev, WARN/ERROR in prod).

- Track your top log sources using simple queries (Loki, Elastic, or ClickHouse).

- Review field-level usage monthly; remove unused fields.

- Enforce log standards across services (JSON, fixed schema).

- Monitor ingest cost per service; alert on abnormal spikes.

- Make routing rules explicit: “These logs stay hot; these go cold.”

- For Kubernetes: exclude container runtime noise and probe logs early.

Last9 is helping us handle log analytics at scale without the cost overhead. With Last9, we can ingest, store, and query logs from large, distributed systems efficiently. The ability to scale log analytics seamlessly while keeping performance consistent has been a big win.

- Prashant, DevOps Lead

How Last9 Helps You Pay Less for Logs (Without Sampling)

All the techniques above work well on their own, but they can be hard to coordinate across multiple services and teams. Last9 helps when you want the same benefits (lower log cost, full visibility, predictable behaviour) without stitching everything together manually.

Here’s how Last9 keeps your log bill under control while preserving every log line.

1. Full-fidelity storage

We don't sample logs or traces. You get the complete timeline of what happened in your system, which is especially important during rare or edge-case failures that sampling would otherwise hide. You avoid the common tradeoff where saving money means losing visibility.

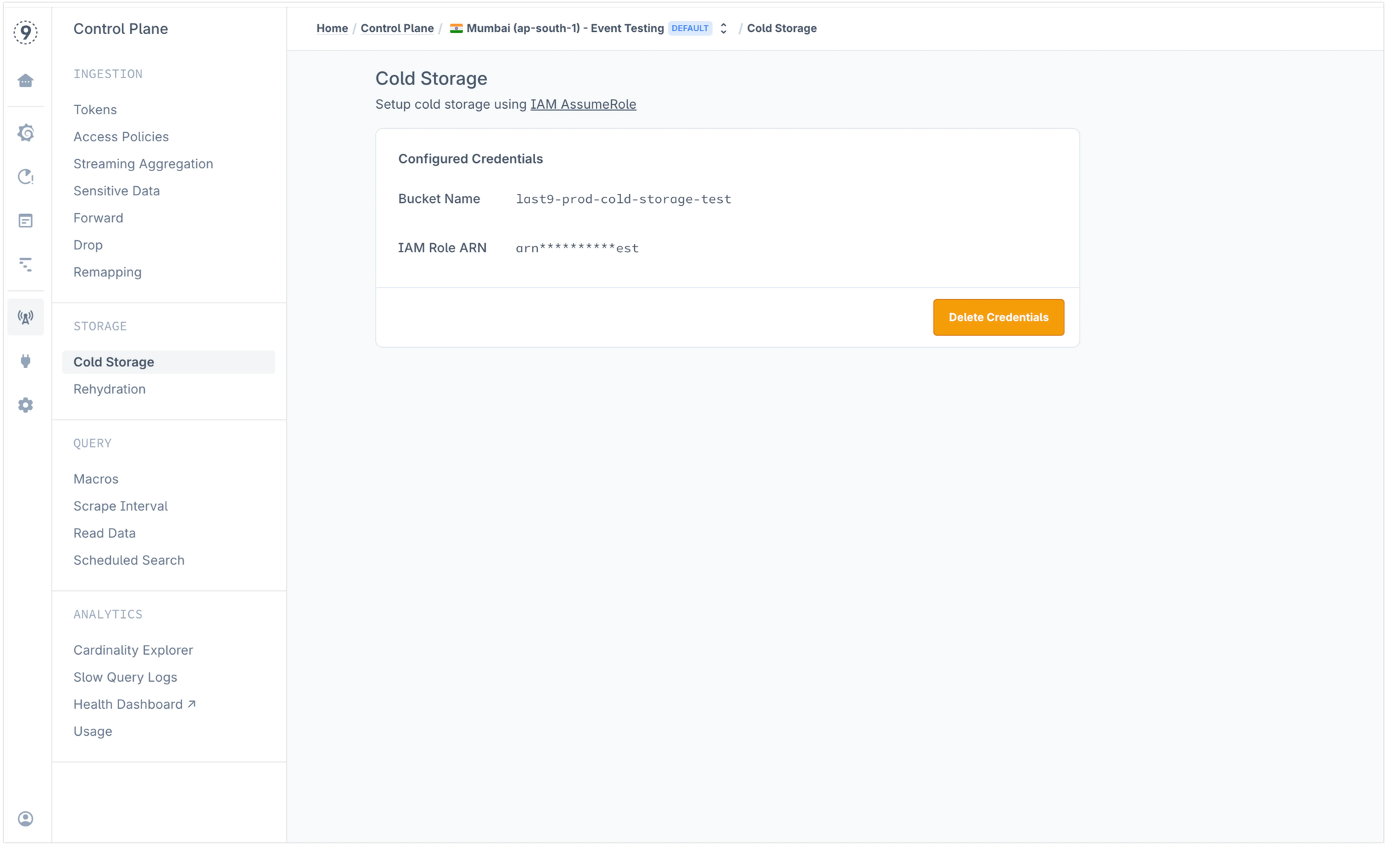

2. Short hot retention, long cold retention

The platform keeps logs searchable for 14 days in a fast, hot tier. After that, older data automatically moves to your S3 bucket as cold storage.

- You query recent data quickly.

- You pay object-storage prices for everything older.

You’re effectively reducing your hot footprint without giving up access to historical logs.

3. On-demand rehydration for historical analysis

If you run a query that reaches beyond the 14-day hot window, Last9 pulls the relevant logs from your S3 bucket and rehydrates them automatically, up to 10M lines.

You only pay for historical access when you actually need it, instead of keeping everything indexed indefinitely.

4. LogMetrics for High-Volume Patterns

LogMetrics lets you turn high-volume, repetitive log streams into metrics. You define a rule in the UI. For example: filter on level=error, group by service, and aggregate with a count over a 5-minute window. Last9 processes the stream continuously and emits a metric while keeping the underlying logs available.

You rely on compact metric series for trend analysis and alerting, which reduces how many repetitive log lines you store or index. Aggregation logic lives in the platform, so changes don’t require collector updates or redeploys.
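To make the filter, group, and aggregate steps concrete, here is a generic Python sketch of a rule like the one described above. It is not Last9's implementation; the ts (Unix seconds), level, and service field names are assumptions about the record shape.

```python
from collections import Counter

WINDOW_SECONDS = 300  # 5-minute window


def error_counts(records):
    """Count level=error records per (window start, service)."""
    counts = Counter()
    for record in records:
        if record.get("level") != "error":
            continue  # filter step
        window_start = int(record["ts"]) // WINDOW_SECONDS * WINDOW_SECONDS
        counts[(window_start, record["service"])] += 1  # group + aggregate
    return counts


records = [
    {"ts": 0, "level": "error", "service": "api"},
    {"ts": 299, "level": "error", "service": "api"},
    {"ts": 300, "level": "error", "service": "api"},
    {"ts": 10, "level": "info", "service": "api"},
]
print(dict(error_counts(records)))  # {(0, 'api'): 2, (300, 'api'): 1}
```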

5. Built-in tiering across all telemetry

Metrics and traces follow the same tiering model, so long-term trends remain visible even as older data moves to cold storage. You keep historical context without carrying the cost of a large, always-hot index.

You keep full signal, reduce the amount of log data held in hot storage, avoid index-driven cost spikes, and keep bulk logs in low-cost object storage. You can still investigate older issues, but you’re no longer paying for 24/7 hot indexing of your entire log history.

Getting started with Last9 is easy and just takes a few minutes, and if you'd like a detailed walkthrough, book some time with us!

FAQs

How can I tell which logs are noise versus a useful signal?

Start with usage data. Check your log backend for:

- Top talkers: streams producing the most GB/day

- Query heatmaps: streams that haven't been queried in weeks or months

- Fields with zero variance: metadata that never changes across thousands of lines (good candidate for removal)

- High-frequency endpoints: health checks, load-balancer probes, retry loops

You can also run simple technical checks:

- Use count_over_time() (Loki's LogQL, which borrows the function from PromQL) or a count grouped by message (for example, STATS ... BY in Elastic's ES|QL) to spot duplicate patterns

- Look at per-service logging levels: if PROD still uses INFO or DEBUG, volume will explode

- Inspect structured logs for fields that aren't used in search or dashboards; drop them at the logger/agent level

Noise usually reveals itself as high volume + low query rate + repeated payloads. Those are the best candidates for filtering, sampling, or summarizing.
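If you can export a sample of records, two of the checks above (repeated payloads and zero-variance fields) take only a few lines of Python. This is a rough sketch that assumes flat dictionary records with a message field.

```python
from collections import Counter, defaultdict


def top_messages(records, n=3):
    """Most frequently repeated messages in the sample."""
    return Counter(record["message"] for record in records).most_common(n)


def constant_fields(records):
    """Fields whose observed value never varies across the sample."""
    seen = defaultdict(set)
    for record in records:
        for key, value in record.items():
            seen[key].add(repr(value))
    return sorted(key for key, values in seen.items() if len(values) == 1)


sample = [
    {"message": "processing job queue", "env": "prod", "job": 1},
    {"message": "processing job queue", "env": "prod", "job": 2},
    {"message": "db timeout", "env": "prod", "job": 3},
]
print(top_messages(sample, 1))  # [('processing job queue', 2)]
print(constant_fields(sample))  # ['env']
```

Pair the output with query statistics from your backend: a field or stream is only safe to drop if nobody searches on it.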

Will dropping or sampling logs hide important information later?

Not if you target the right patterns. Engineers usually keep full fidelity for:

- Error logs

- Security-relevant entries

- Payment/transaction paths

- Anything tied to compliance

They then trim volume where the structure never changes. For example:

- Sample identical warnings: rate_limit_exceeded fired on every request → keep 1 in 50

- Collapse duplicates with Vector's reduce or dedupe transform, or with a custom script in Fluent Bit's lua filter (the modify filter only edits fields; it does not collapse records)

- Drop verbose logs in PROD using environment-based logging configs (LOG_LEVEL=warn)

If you need a safety net, archive everything to S3/GCS using your agent or pipeline, but only index what you need. That gives full coverage without the high index cost.

What is an observability pipeline, technically?

It's a processing layer that receives raw telemetry, transforms it, and routes it to different backends.

In practice, a pipeline can:

- Filter entries (drop_when(message =~ "health.*"))

- Sample (probabilistic_sampler: sampling_percentage: 5)

- Normalize fields (rename("req_id", "request_id"))

- Batch + compress logs before shipping

- Split routing: send logs to S3, metrics to Prometheus remote_write, traces to Tempo/Last9
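Conceptually, each of those steps is a small function applied to every record in order. The Python sketch below shows the filter and normalize steps chained together; the stage names and record shape are illustrative and do not correspond to any specific tool's API.

```python
import re
from typing import Callable, Iterable, Iterator, Optional

Record = dict
Stage = Callable[[Record], Optional[Record]]  # a stage returns None to drop the record

HEALTH = re.compile(r"health.*")


def drop_health(record: Record) -> Optional[Record]:
    """Filter: drop anything whose message matches the health-check pattern."""
    return None if HEALTH.search(record.get("message", "")) else record


def normalize(record: Record) -> Optional[Record]:
    """Normalize: rename req_id to request_id without mutating the input."""
    if "req_id" in record:
        record = dict(record)
        record["request_id"] = record.pop("req_id")
    return record


def run_pipeline(records: Iterable[Record], stages: list) -> Iterator[Record]:
    """Apply each stage in order; stop early when a stage drops the record."""
    for record in records:
        for stage in stages:
            record = stage(record)
            if record is None:
                break
        else:
            yield record


raw = [
    {"message": "GET /health ok"},
    {"message": "db timeout", "req_id": "r1"},
]
print(list(run_pipeline(raw, [drop_health, normalize])))
# [{'message': 'db timeout', 'request_id': 'r1'}]
```

Putting drop stages first means later stages never spend work on records that will be discarded.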

Examples:

- OpenTelemetry Collector: processors like filter, attributes, transform, batch, routing

- Vector: remap, reduce, sample, route transforms

- Fluent Bit: grep, rewrite_tag, modify, lua filters

A managed platform (like Last9) wraps this with automatic filtering of noisy components, routing rules for high-cardinality data, and built-in storage/retention controls.

You don't need a pipeline for a small setup, but once you run dozens of services, it becomes the easiest way to enforce consistency and cost control.

How much can we realistically save with log optimization?

The savings depend on your data shape. A few technical examples:

- Turning off debug logs in PROD often reduces per-service volume by an order of magnitude

- Sampling repeated warnings (1%) keeps the trendline visible but cuts about 99% of that stream's ingest

- Collapsing duplicates using an aggregator (Vector reduce) can shrink CloudTrail-style logs by large percentages

- Tiered storage (hot → S3 → Glacier) immediately lowers retention cost, because hot index storage is the expensive part

Realistically, logs that are repetitive or never queried tend to dominate storage. Removing or down-sampling those logs is where most teams see the largest drop.

Should you build your own optimization system or use a managed service?

Both approaches work; the choice depends on what you need and how much operational complexity you want to manage. If you have strict compliance rules or very specific pipeline behaviour, building your own setup may give you the control you want. If you prefer predictable costs and less operational overhead, a managed platform usually fits better.

Here’s the technical tradeoff:

If you build your own:

- You use tools like OTel Collector, Vector, or Fluent Bit.

- You control transforms, routing rules, PII handling, and sampling logic.

- You also own the configuration drift, scaling, upgrades, on-call, and integration with every backend you use.

If you use a managed platform:

- You get one place to define filtering and routing rules that apply consistently across services.

- High-cardinality attributes (IDs, customer labels, request metadata) are handled by the platform without you tuning index behaviour.

- Hot/cold routing happens automatically, without glue code or per-service config changes.

- You also get a single view of volume growth, top log sources, and retention pressure across your entire system.

Both paths are valid; the right one depends on how much control you want to maintain versus how much operational load you want to avoid.