Modern application architectures have evolved from simple monoliths to complex distributed systems spanning multiple environments. This evolution has transformed how we approach monitoring and troubleshooting.

Traditional monitoring methods that focus solely on uptime and basic health checks are no longer sufficient for understanding system behavior in cloud-native environments.

This is where APM observability comes in—providing the depth and context needed to maintain reliability in today's complex systems.

Understanding APM Observability Fundamentals

Application Performance Monitoring (APM) observability extends beyond traditional monitoring by focusing on system introspection and the ability to understand internal states through external outputs. While traditional monitoring answers "what's happening," observability answers "why it's happening."

The concept stems from control theory in engineering, where observability refers to how well a system's internal states can be understood by examining its outputs. In software terms, this translates to gaining insights through telemetry data without modifying the code each time you have a new question about system behavior.

The Technical Foundation of Observability

Observability is built on three core types of telemetry data:

| Data Type | Description | Primary Use Cases | Data Volume Characteristics |
|---|---|---|---|
| Metrics | Numeric measurements collected at regular intervals | Performance monitoring, trend analysis, capacity planning | Low volume, highly structured |
| Logs | Time-stamped records of discrete events | Debugging, audit trails, security analysis | High volume, semi-structured |
| Traces | Records of request flows through distributed services | Performance bottleneck detection, dependency mapping | Medium volume, highly structured |

Effective observability requires all three types to work together:

- Metrics provide an early warning system—alerting you to abnormal patterns and potential issues

- Logs supply the contextual details—helping you understand what was happening when problems occurred

- Traces deliver the causal relationships—showing exactly how requests flow through your system and where they slow down
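As a rough illustration of how the three signals connect, the sketch below follows a metric-style count of slow requests to the spans and log lines that share a trace ID. The in-memory arrays and field names (`traceId`, `durationMs`, `message`) are assumptions made for this example; real backends expose the same join through their query layers.

```javascript
// Hypothetical telemetry records; field names are illustrative only.
const spans = [
  { traceId: 'a1', service: 'checkout', name: 'POST /orders', durationMs: 820 },
  { traceId: 'a1', service: 'payments', name: 'charge-card', durationMs: 760 },
  { traceId: 'b2', service: 'checkout', name: 'POST /orders', durationMs: 95 },
];

const logs = [
  { traceId: 'a1', level: 'warn', message: 'payment gateway retry 2 of 3' },
  { traceId: 'b2', level: 'info', message: 'order created' },
];

// Metric-style view: how many requests to an operation breached a threshold?
function countSlowRequests(allSpans, operationName, thresholdMs) {
  return allSpans.filter(
    (s) => s.name === operationName && s.durationMs > thresholdMs
  ).length;
}

// Trace and log view: everything recorded for one request.
function explainTrace(traceId, allSpans, allLogs) {
  return {
    spans: allSpans.filter((s) => s.traceId === traceId),
    logs: allLogs.filter((l) => l.traceId === traceId),
  };
}

const slowCount = countSlowRequests(spans, 'POST /orders', 500);
const detail = explainTrace('a1', spans, logs);
```

Here the count plays the role of the alert, the spans show which services took part in the slow request, and the log line supplies the detail (a gateway retry) that explains it.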

The technical implementation typically involves:

- Instrumentation libraries for generating telemetry data

- Collection agents or pipelines for aggregating and forwarding data

- Storage systems optimized for time-series data

- Query engines for analysis and correlation

- Visualization tools for dashboards and exploration

Implementation Strategies for Different Organization Sizes

Implementing APM observability looks different depending on your organization's scale and maturity. Here's how to approach it based on your team size and resources.

Small Teams (1-5 Engineers)

For small teams, simplicity and efficiency are paramount:

- Focus on critical paths: Instrument only your most important user journeys

- Leverage managed services: Choose solutions that minimize operational overhead

- Standardize instrumentation: Use OpenTelemetry to future-proof your approach

- Start with metrics and logs: Add distributed tracing only when you've mastered the basics

Small teams benefit most from:

- Auto-instrumentation for common frameworks and libraries

- Pre-built dashboards and alerts

- Integrated solutions that handle multiple telemetry types

- Solutions with predictable pricing, such as Last9, so costs don't spike unexpectedly as data grows

Mid-size Organizations (5-20 Engineers)

With more resources available, mid-size teams can:

- Implement comprehensive coverage: Instrument all services, prioritizing by business impact

- Establish observability standards: Create team guidelines for instrumentation and tagging

- Build custom dashboards: Create role-specific views for different stakeholders

- Integrate with CI/CD: Automatically validate observability as part of deployment

Useful approaches include:

- Observability champions within each team

- Shared libraries for consistent instrumentation

- Cross-service correlation capabilities

- Cost attribution to specific teams or projects

Large Enterprises (20+ Engineers)

Large organizations require a more structured approach:

- Create an observability center of excellence: Establish a team responsible for best practices

- Implement multi-environment visibility: Ensure consistent observability across dev, test, and production

- Develop advanced correlation capabilities: Connect technical metrics to business outcomes

- Implement governance processes: Manage cardinality and retention to control costs

Enterprise-specific considerations include:

- Multi-team access controls and visibility boundaries

- Compliance and audit capabilities

- Cross-region and cross-cloud aggregation

- Custom integrations with existing enterprise tools

Technical Components of APM Observability

Understanding the technical building blocks of observability helps you make informed architectural decisions.

Instrumentation Approaches

There are three primary methods for instrumenting your applications:

- Automatic instrumentation: Requires minimal code changes but offers limited customization

  - Example: Java agents that use bytecode manipulation to insert monitoring code

  - Best for: Getting started quickly, monitoring third-party dependencies

  - Limitations: Less control over what's captured, potential performance impact

- Semi-automatic instrumentation: Uses libraries and SDKs with higher-level abstractions

  - Example: OpenTelemetry SDKs that provide standard instrumentation for common operations

  - Best for: Most production applications, balancing ease and customization

  - Limitations: Requires some code changes, learning curve for developers

- Manual instrumentation: Complete control through direct API calls

  - Example: Custom span creation and attribute tagging for business-specific operations

  - Best for: Capturing domain-specific metrics and custom business logic

  - Limitations: Higher development overhead, risk of inconsistent implementation

Code example for creating a custom span with the OpenTelemetry API in Node.js:

```javascript
const { trace, SpanStatusCode } = require('@opentelemetry/api');

// Returns a no-op tracer unless an OpenTelemetry SDK has been registered.
const tracer = trace.getTracer('my-service-name');

async function processOrder(orderId) {
  // Create a span for this operation
  const span = tracer.startSpan('process-order');
  try {
    // Add business context as span attributes
    span.setAttribute('order.id', orderId);
    span.setAttribute('order.type', 'standard');

    // Perform the actual work (doOrderProcessing is defined elsewhere)
    const result = await doOrderProcessing(orderId);

    // Record the successful outcome
    span.setAttribute('order.status', 'completed');
    return result;
  } catch (error) {
    // Record error information and mark the span as failed
    span.setAttribute('order.status', 'failed');
    span.recordException(error);
    span.setStatus({ code: SpanStatusCode.ERROR, message: error.message });
    throw error;
  } finally {
    // End the span exactly once, on both success and failure
    span.end();
  }
}
```

Data Collection and Processing

Once generated, telemetry data follows a pipeline:

- Collection: Gathering data from multiple sources

  - For metrics: Pull-based (Prometheus) vs. push-based (StatsD)

  - For logs: File-based agents, syslog receivers, direct API ingestion

  - For traces: Agent-based collection, gateway proxies, direct export

- Processing: Transforming and enriching raw data

  - Filtering to reduce volume

  - Aggregation for statistical analysis

  - Enrichment with metadata (environment, service version, etc.)

  - Sampling to control data volume (especially for traces)

- Storage: Specialized databases optimized for telemetry types

  - Time-series databases for metrics (InfluxDB, TimescaleDB)

  - Document stores or search engines for logs (Elasticsearch)

  - Dedicated trace backends for traces (Jaeger, Tempo), which store spans in an underlying database or object store

- Analysis: Query engines and correlation capabilities

  - Query languages (PromQL, LogQL)

  - Pattern detection algorithms

  - Anomaly detection systems

  - Cross-telemetry correlation engines

Each layer involves technical tradeoffs around latency, throughput, and storage efficiency.
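The processing stage can be pictured as a small function over a batch of records. The sketch below is a minimal illustration, not any particular collector's implementation: it filters out debug records, enriches the rest with deployment metadata, and aggregates a count per level. The record shape and metadata keys are assumptions.

```javascript
// Minimal processing stage: filter, enrich, then aggregate.
// Record shape and metadata keys are assumptions for illustration.
function processBatch(records, metadata) {
  const kept = records
    // Filtering: drop debug-level records to reduce volume.
    .filter((r) => r.level !== 'debug')
    // Enrichment: attach deployment metadata to every remaining record.
    .map((r) => ({ ...r, ...metadata }));

  // Aggregation: count the remaining records per level.
  const countsByLevel = {};
  for (const r of kept) {
    countsByLevel[r.level] = (countsByLevel[r.level] || 0) + 1;
  }
  return { records: kept, countsByLevel };
}

const batch = [
  { level: 'debug', message: 'cache lookup' },
  { level: 'info', message: 'request served' },
  { level: 'error', message: 'upstream timeout' },
  { level: 'info', message: 'request served' },
];

const processed = processBatch(batch, {
  environment: 'production',
  serviceVersion: '1.4.2',
});
```

Running filtering before enrichment keeps the cheaper step first, so metadata is only attached to records that will actually be stored.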

Cost Management Techniques for APM Observability

One of the biggest challenges with observability is managing costs as data volumes grow.

Here are detailed strategies to control expenses without sacrificing visibility.

Intelligent Data Sampling

Not all transactions need to be captured at the same fidelity:

- Head-based sampling: Randomly samples a percentage of transactions from the start

  - Simplest to implement but may miss important outliers

  - Typically configured as a simple percentage (e.g., sample 10% of all requests)

- Tail-based sampling: Makes sampling decisions after transactions complete

  - Preserves complete information for interesting transactions

  - Requires buffering complete traces before deciding what to keep

  - Example rule set:

    - Keep 100% of errors

    - Keep 100% of transactions >500ms duration

    - Keep 100% of transactions from high-value customers

    - Keep 5% of remaining normal transactions

- Priority-based sampling: Dynamically adjusts sampling rates based on context

  - Services or endpoints can request higher sampling rates for important flows

  - Sampling budget can be distributed across services based on criticality

  - Requires coordination between services for consistent sampling decisions
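The example rule set above can be read as an ordered list in which the first matching rule decides whether a completed trace is kept. The sketch below shows one way to express that, assuming hypothetical trace fields (`hasError`, `durationMs`, `customerTier`). It hashes the trace ID instead of using a random number so that every component evaluating the same trace reaches the same decision.

```javascript
// FNV-1a style 32-bit hash, so the same trace ID always gets the same decision.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Keep roughly `percentage` percent of traces, chosen deterministically by ID.
function keepByPercentage(traceId, percentage) {
  return fnv1a(traceId) % 100 < percentage;
}

// Rules are checked in order; the first matching rule decides.
// The trace fields used here are hypothetical.
const rules = [
  { name: 'errors', matches: (t) => t.hasError, percentage: 100 },
  { name: 'slow_transactions', matches: (t) => t.durationMs > 500, percentage: 100 },
  { name: 'high_value_customers', matches: (t) => t.customerTier === 'premium', percentage: 100 },
  { name: 'default', matches: () => true, percentage: 5 },
];

// Called once a trace is complete, which is what makes this tail-based.
function decide(completedTrace) {
  const rule = rules.find((r) => r.matches(completedTrace));
  return {
    rule: rule.name,
    keep: keepByPercentage(completedTrace.traceId, rule.percentage),
  };
}

const errorDecision = decide({
  traceId: '4bf92f3577b34da6',
  hasError: true,
  durationMs: 42,
  customerTier: 'standard',
});
```

Because the decision needs the finished trace, whatever runs this logic has to buffer spans until the trace completes, which is the main operational cost of tail-based sampling.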

Implementation example for tail-based sampling configuration:

```yaml
sampling_rules:
  - name: errors
    condition: "span.status_code != OK"
    sampling_percentage: 100
  - name: slow_transactions
    condition: "span.duration_ms > 500"
    sampling_percentage: 100
  - name: high_value_customers
    condition: "span.attributes['customer.tier'] == 'premium'"
    sampling_percentage: 100
  - name: default
    condition: "true"
    sampling_percentage: 5
```

Cardinality Control

Cardinality—the number of unique time series or tag combinations—is a primary cost driver:

- Tag standardization: Limit the allowed values for certain dimensions

  - Example: Use standardized error codes instead of free-form error messages

  - Example: Group similar API endpoints under common patterns

- Label filtering: Drop high-cardinality labels before storage

  - Common candidates: session IDs, request IDs, user IDs

  - Instead of dropping entirely, consider moving to logs or sample storage

- Metric aggregation: Pre-aggregate metrics before storage

  - Example: Store p50/p90/p99 percentiles instead of raw histograms (note that stored percentiles cannot be accurately re-aggregated across instances)

  - Example: Roll up per-pod metrics to per-deployment metrics for long-term storage
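Grouping endpoints under common patterns usually comes down to normalizing the path before it is used as a label. The function below is a minimal sketch of that idea. The placeholder names (`:id`, `:uuid`) and the patterns it matches are assumptions to adapt to the ID formats your routes actually use.

```javascript
const UUID_PATTERN =
  /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

// Replace identifier-like path segments with placeholders before the path
// is used as a metric label.
function normalizePath(rawPath) {
  // Query strings are unbounded too, so drop them first.
  const pathOnly = rawPath.split('?')[0];
  return pathOnly
    .split('/')
    .map((segment) => {
      if (/^\d+$/.test(segment)) return ':id';
      if (UUID_PATTERN.test(segment)) return ':uuid';
      return segment;
    })
    .join('/');
}

// Count distinct label values before and after normalization.
const observed = ['/user/123456', '/user/123457', '/user/123458?tab=orders'];
const seriesBefore = new Set(observed).size;
const seriesAfter = new Set(observed.map(normalizePath)).size;
```

Where the web framework exposes the matched route template, using that value directly is generally more reliable than pattern matching on the raw path.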

A real-world example of cardinality explosion and solution:

Poor design:

```
http_requests_total{path="/user/123456", method="GET", status="200"}
http_requests_total{path="/user/123457", method="GET", status="200"}
# One time series per user ID, thousands in total
```

Better design:

```
http_requests_total{path="/user/:id", method="GET", status="200"}
# Single time series for all user requests
```

Data Lifecycle Management

Not all data needs to be kept at full resolution forever:

- Tiered storage: Move data through storage tiers based on age

  - Hot tier (recent data): Full resolution, fast access, expensive storage

  - Warm tier (medium-term): Downsampled, slower access, less expensive

  - Cold tier (long-term): Highly aggregated, batch access, cheap storage

- Resolution downsampling: Reduce the granularity of older data

  - Example storage policy:

    - 0-14 days: 10-second resolution

    - 15-60 days: 1-minute resolution

    - 61-180 days: 10-minute resolution

    - 181+ days: 1-hour resolution

- Selective retention: Keep different data types for different periods

  - Critical business metrics: 1+ years

  - Service-level indicators: 6 months

  - Detailed technical metrics: 30 days

  - Raw traces: 7 days

  - Debug logs: 3 days

This tiered approach can reduce storage costs by 70-90% compared to keeping everything at full resolution.
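To make resolution downsampling concrete, the sketch below averages 10-second points into 1-minute buckets. It assumes points shaped as `{ t, v }` with `t` in seconds, and it uses a plain average; time-series databases typically offer this as a built-in rollup with a choice of aggregation functions.

```javascript
// Average raw points into fixed-width time buckets.
// Points are { t: seconds, v: value }; this shape is an assumption.
function downsample(points, bucketSeconds) {
  const buckets = new Map();
  for (const { t, v } of points) {
    // Align each point to the start of the bucket it falls into.
    const start = Math.floor(t / bucketSeconds) * bucketSeconds;
    const bucket = buckets.get(start) || { sum: 0, count: 0 };
    bucket.sum += v;
    bucket.count += 1;
    buckets.set(start, bucket);
  }
  return [...buckets.entries()]
    .sort(([a], [b]) => a - b)
    .map(([t, { sum, count }]) => ({ t, v: sum / count }));
}

const tenSecondPoints = [
  { t: 0, v: 10 },
  { t: 10, v: 20 },
  { t: 20, v: 30 },
  { t: 60, v: 40 },
  { t: 70, v: 60 },
];

// Five raw points collapse into two one-minute points.
const oneMinutePoints = downsample(tenSecondPoints, 60);
```

Averaging smooths out short spikes, so for latency-style metrics it can be worth keeping a maximum alongside the mean in each bucket.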

Advanced Observability Practices

Once you've established the basics, these advanced techniques will elevate your observability practice.

Implementation of SLOs and Error Budgets

Service Level Objectives provide a data-driven framework for reliability:

- Defining SLIs (Service Level Indicators):

  - Availability: Successful requests / total requests

  - Latency: Percentage of requests faster than threshold

  - Throughput: Requests handled per second

  - Error rate: Percentage of requests resulting in errors

- Setting SLOs (Service Level Objectives):

  - Target performance for each SLI (e.g., 99.9% availability)

  - Must be measurable through your observability platform

  - Should align with actual user experience, not just technical metrics

- Managing error budgets:

  - Remaining acceptable failures within the measurement period

  - Used to balance feature development vs. reliability work

  - Calculated as: (100% - SLO%) × total requests
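The error budget formula above can be worked through with a few lines of code. The request counts in this sketch are made-up numbers chosen for easy arithmetic; the function names are illustrative.

```javascript
// Availability SLI: successful requests as a percentage of all requests.
function availabilitySli(successfulRequests, totalRequests) {
  return (successfulRequests / totalRequests) * 100;
}

// Error budget: (100% - SLO%) x total requests, compared with actual failures.
function errorBudget(sloPercent, totalRequests, failedRequests) {
  // Rounded to whole requests to absorb floating-point error.
  const allowedFailures = Math.round(((100 - sloPercent) / 100) * totalRequests);
  return {
    allowedFailures,
    remaining: allowedFailures - failedRequests,
    consumedFraction: failedRequests / allowedFailures,
  };
}

// Hypothetical window: 1,000,000 requests, 250 failures, 99.9% SLO.
const sli = availabilitySli(999750, 1000000);
const budget = errorBudget(99.9, 1000000, 250);
```

With these inputs the budget allows 1,000 failed requests, 250 have been used, and a quarter of the budget is consumed. A 100% SLO leaves no budget at all, so the consumed fraction is undefined in that case.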

Example SLO definition for an API service:

```yaml
service: payment-api
slos:
  - name: availability
    target: 99.95%
    measurement: success_rate
    window: 28d
  - name: latency
    target: 99.9%
    measurement: requests_under_300ms
    window: 28d
```

This approach creates a shared language between engineering and business stakeholders about reliability goals and tradeoffs.

Contextual Intelligence

Adding business context to technical metrics enhances their value:

- Customer impact correlation:

  - Tag metrics with customer tiers

  - Associate traces with specific user journeys

  - Link technical metrics to business outcomes (conversions, revenue)

- Business transaction tracking:

  - Instrument key business flows end-to-end

  - Track completion rates and latencies for critical transactions

  - Map technical dependencies for business processes

- Revenue impact analysis:

  - Quantify the monetary impact of performance issues

  - Calculate the opportunity cost of downtime

  - Prioritize fixes based on business impact

Implementation typically involves:

- Custom instrumentation with business-specific attributes

- Integration with business intelligence systems

- Creation of executive dashboards showing technical-business correlation
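As a small sketch of what business-specific attributes make possible, the function below sums the order value attached to failed spans, grouped by customer tier. The attribute names (`customer.tier`, `order.value`) and the sample figures are assumptions for illustration only.

```javascript
// Failed checkout spans carrying business attributes (hypothetical data).
const failedSpans = [
  { 'customer.tier': 'premium', 'order.value': 250 },
  { 'customer.tier': 'standard', 'order.value': 40 },
  { 'customer.tier': 'premium', 'order.value': 150 },
];

// Sum the order value at risk for each customer tier.
function valueAtRiskByTier(spans) {
  const totals = {};
  for (const span of spans) {
    const tier = span['customer.tier'];
    totals[tier] = (totals[tier] || 0) + span['order.value'];
  }
  return totals;
}

const atRisk = valueAtRiskByTier(failedSpans);
```

A breakdown like this gives a first-order way to rank incidents by business impact, though it counts every failed order as lost and ignores customers who retry successfully.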

Automated Remediation

The ultimate evolution of observability is automatic problem resolution:

- Automated diagnosis:

  - Pattern recognition for common failure modes

  - Anomaly detection to identify emerging issues

  - Root cause analysis based on service dependencies

- Self-healing systems:

  - Automatic scaling based on load metrics

  - Circuit breakers for failing dependencies

  - Pod or VM replacements for unhealthy instances

- Feedback loops:

  - Continuous analysis of incident patterns

  - Automated generation of SRE tickets for systemic issues

  - Performance regression detection in CI/CD pipelines

While full automation is the goal, most organizations implement this gradually, starting with automated diagnosis and simple remediation actions before progressing to more complex healing.
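Circuit breakers are one of the simpler remediation actions to start with. The class below is a minimal sketch of the state machine (closed, open, half-open), not a production implementation. The threshold and cool-down defaults are arbitrary, and the injectable `now` function is an assumption added so the behavior can be exercised without waiting.

```javascript
class CircuitBreaker {
  constructor({ failureThreshold = 3, resetAfterMs = 30000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold;
    this.resetAfterMs = resetAfterMs;
    this.now = now;
    this.failures = 0;
    this.openedAt = null; // null means the circuit is closed
  }

  get state() {
    if (this.openedAt === null) return 'closed';
    // After the cool-down, allow a trial request through (half-open).
    return this.now() - this.openedAt >= this.resetAfterMs ? 'half-open' : 'open';
  }

  // Ask before calling the dependency; false means fail fast.
  allowRequest() {
    return this.state !== 'open';
  }

  recordSuccess() {
    this.failures = 0;
    this.openedAt = null;
  }

  recordFailure() {
    const wasHalfOpen = this.state === 'half-open';
    this.failures += 1;
    if (wasHalfOpen || this.failures >= this.failureThreshold) {
      this.openedAt = this.now(); // open, or re-open and restart the cool-down
    }
  }
}

// Walk through the states with a fake clock.
let clock = 0;
const breaker = new CircuitBreaker({
  failureThreshold: 2,
  resetAfterMs: 1000,
  now: () => clock,
});
breaker.recordFailure();
breaker.recordFailure(); // threshold reached: circuit opens
const blockedWhileOpen = !breaker.allowRequest();
clock = 1000; // cool-down elapsed
const trialAllowed = breaker.allowRequest();
breaker.recordSuccess(); // trial succeeded: circuit closes
```

State transitions are worth exporting as metrics or span events, so the breaker's behavior is itself visible in the telemetry described earlier.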

Tool Selection and Integration

Choosing the right tools is critical for observability success. Here's how to evaluate options.

Open Source vs. Commercial Solutions

Understanding the tradeoffs helps you make appropriate selections:

| Aspect | Open Source | Commercial | Managed Services |
|---|---|---|---|
| Initial cost | Low | High | Medium |
| Operational burden | High | Medium | Low |
| Customization | Extensive | Limited | Variable |
| Support | Community | Vendor | Vendor |
| Integration | Manual | Pre-built | Pre-built |
| Scaling complexity | High | Medium | Low |

Many organizations adopt a hybrid approach:

- Open source for core collection and instrumentation

- Managed services for storage and analysis

- Commercial tools for specialized use cases

Key Integration Points

Observability should connect with your existing systems:

- CI/CD integration:

  - Auto-generate dashboards for new services

  - Validate instrumentation coverage before deployment

  - Test SLO compliance in pre-production

- Incident management:

  - Bidirectional integration with ticketing systems

  - Attach relevant metrics and traces to incidents

  - Track MTTR and other incident metrics

- Infrastructure automation:

  - Correlate infrastructure changes with performance impacts

  - Tag telemetry data with deployment markers

  - Connect infrastructure metrics with application metrics

Look for tools with robust APIs and webhooks to enable these integrations.

Recommended Tool Stack

A balanced observability stack often includes:

- Instrumentation framework:

- OpenTelemetry for standardized instrumentation across languages

- Telemetry pipeline:

- Collection: OpenTelemetry Collector

- Processing: Vector or Fluentd for logs, OpenTelemetry Collector for metrics and traces

- Storage and analysis:

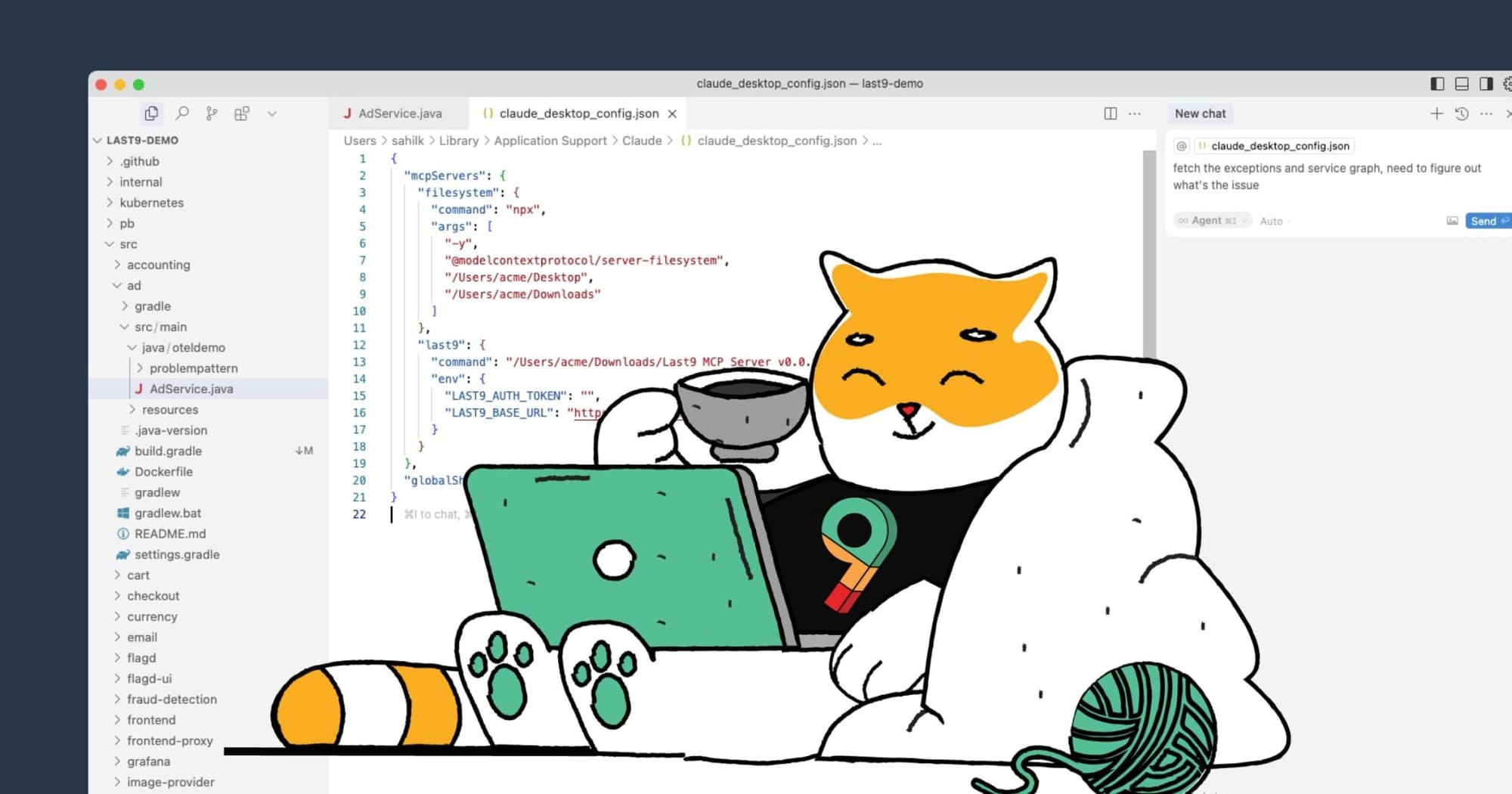

- Last9 for unified telemetry management with predictable costs

- Alternatives include open-source options like Prometheus (metrics), Loki (logs), and Jaeger (traces)

- Grafana for visualization across data sources

- Specialized components:

- Continuous profiling: Pyroscope or Parca

- Synthetic monitoring: Grafana Synthetic Monitoring

- Real user monitoring: Open source RUM libraries

Remember that tool selection should follow your strategy, not drive it. Define your observability goals first, then choose tools that support them.

Conclusion

APM observability has evolved from a nice-to-have into a business necessity for organizations running modern distributed applications. The ability to understand complex systems through connected telemetry data directly impacts user experience, engineering efficiency, and ultimately, business outcomes.

FAQs

What's the difference between monitoring and observability?

Monitoring focuses on known issues and predefined metrics. Observability extends this by enabling the exploration of unknown issues through rich, connected telemetry data. While monitoring tells you when something is wrong, observability helps you understand why it's wrong without having to deploy new instrumentation.

How do I calculate the ROI of an observability implementation?

Measure quantifiable benefits like:

- Reduced mean time to detection (MTTD) and resolution (MTTR)

- Decrease in customer-impacting incidents

- Engineering time saved in troubleshooting

- Prevention of revenue loss from outages

- Improved developer productivity

Most organizations see ROI through incident reduction and faster resolution times. A 15-minute reduction in average resolution time can translate to hundreds of engineering hours saved annually.
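The hours-saved figure is straightforward arithmetic once you have your own incident data. The inputs in the sketch below are hypothetical and chosen only to show the calculation.

```javascript
// Hypothetical inputs; substitute figures from your own incident history.
function engineeringHoursSaved({
  incidentsPerYear,
  minutesSavedPerIncident,
  respondersPerIncident,
}) {
  return (incidentsPerYear * minutesSavedPerIncident * respondersPerIncident) / 60;
}

const hoursSaved = engineeringHoursSaved({
  incidentsPerYear: 200,
  minutesSavedPerIncident: 15,
  respondersPerIncident: 4,
});
```

With 200 incidents a year and four responders each, a 15-minute improvement works out to 200 engineering hours. Smaller teams or fewer incidents scale the result down proportionally.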

Do I need specialized staff for an observability implementation?

While having expertise is valuable, you don't necessarily need dedicated specialists. Start by training existing team members on observability principles and tools. Many organizations find success by identifying "observability champions" within each team who receive additional training and help implement best practices.

How do I handle observability across multiple clouds or hybrid environments?

Use these approaches for multi-environment observability:

- Adopt cloud-agnostic instrumentation standards like OpenTelemetry

- Implement consistent tagging/labeling strategies across environments

- Use a centralized observability platform that supports multi-cloud data collection

- Create unified dashboards that normalize data from different sources

The key is consistency in how you collect, label, and analyze data across environments.

How much data retention do I need for effective observability?

Retention requirements vary by data type and use case:

- Metrics: 13 months for year-over-year comparison

- Traces: 7-30 days for detailed troubleshooting

- Logs: Tiered approach with 3-7 days for verbose logs, 30-90 days for error logs

Consider regulatory requirements that may necessitate longer retention for certain data types. Using tiered storage and downsampling can make longer retention more cost-effective.