APIs (Application Programming Interfaces) are critical in enabling communication between software systems.

They form the backbone of modern applications, facilitating integrations, data sharing, and functionality across diverse platforms.

However, as APIs grow in complexity and scale, ensuring their performance, reliability, and availability becomes a key challenge. This is where API monitoring becomes indispensable.

In this guide, we’ll explore API monitoring in-depth, breaking it down for developers from beginner to intermediate levels. We’ll cover the fundamentals, explain its importance, outline best practices, and introduce a selection of tools to help you get started.

What is API Monitoring?

API monitoring is the process of observing and analyzing the performance, availability, and correctness of APIs in real-time. It ensures that APIs deliver consistent responses, perform within acceptable thresholds, and remain accessible to users and systems.

Key Aspects of API Monitoring

- Uptime Monitoring: Ensures that the API is reachable and operational.

- Performance Tracking: Measures response times, latency, and throughput.

- Functional Testing: Validates that API endpoints return expected results.

- Error Tracking: Detects and logs failed requests or unexpected responses.

Why is API Monitoring Important?

APIs are often the backbone of applications, and their failure can lead to cascading issues across connected services. Here are the key reasons to prioritize API monitoring:

- User Experience: API downtime or slow response times directly affect users, leading to dissatisfaction.

- Operational Continuity: Monitoring helps identify and resolve issues before they escalate into major outages.

- Revenue Protection: For APIs handling transactions or payments, monitoring ensures revenue streams remain unaffected.

- Troubleshooting Efficiency: Comprehensive monitoring reduces time spent diagnosing problems, allowing teams to focus on resolving them.

Key Metrics to Monitor

Effective API monitoring involves tracking specific metrics that provide actionable insights. These include:

- Availability/Uptime: The percentage of time an API is operational.

- Response Time: The time taken for an API to process a request and return a response.

- Error Rates: The percentage of failed API calls, such as 4xx and 5xx HTTP errors.

- Latency: The delay between the client request and server response.

- Throughput: The number of requests processed per second.

Types of API Monitoring

API monitoring can be divided into several categories, each serving a unique purpose:

1. Synthetic Monitoring

Simulates API requests at regular intervals to test functionality, performance, and availability.

- Use Case: Detecting issues in APIs before users encounter them.

2. Real User Monitoring (RUM)

Tracks actual user interactions with your API in real-time.

- Use Case: Understanding real-world performance experienced by users.

3. Functional Monitoring

Ensures that API endpoints perform as expected and return correct results.

- Use Case: Validating the integrity of responses.

4. Security Monitoring

Focuses on identifying vulnerabilities and ensuring compliance with security protocols.

- Use Case: Protecting sensitive data and preventing unauthorized access.

Best Practices for API Monitoring

To achieve effective API monitoring, follow these industry best practices:

1. Establish Key Performance Indicators (KPIs)

Define the metrics that matter most to your use case, such as latency, error rates, or uptime.

2. Set Thresholds and Baselines

Establish normal performance baselines to detect anomalies. For example, if an API typically responds in under 200ms, set an alert for deviations beyond that range.

3. Monitor Critical Paths

Prioritize monitoring APIs that are essential to user workflows or business processes.

4. Use Alerts Judiciously

Configure alerts for meaningful deviations to avoid noise. Group related alerts to provide actionable insights instead of overwhelming notifications.

5. Integrate Monitoring with CI/CD Pipelines

Incorporate API testing and monitoring into your development lifecycle to catch issues early.

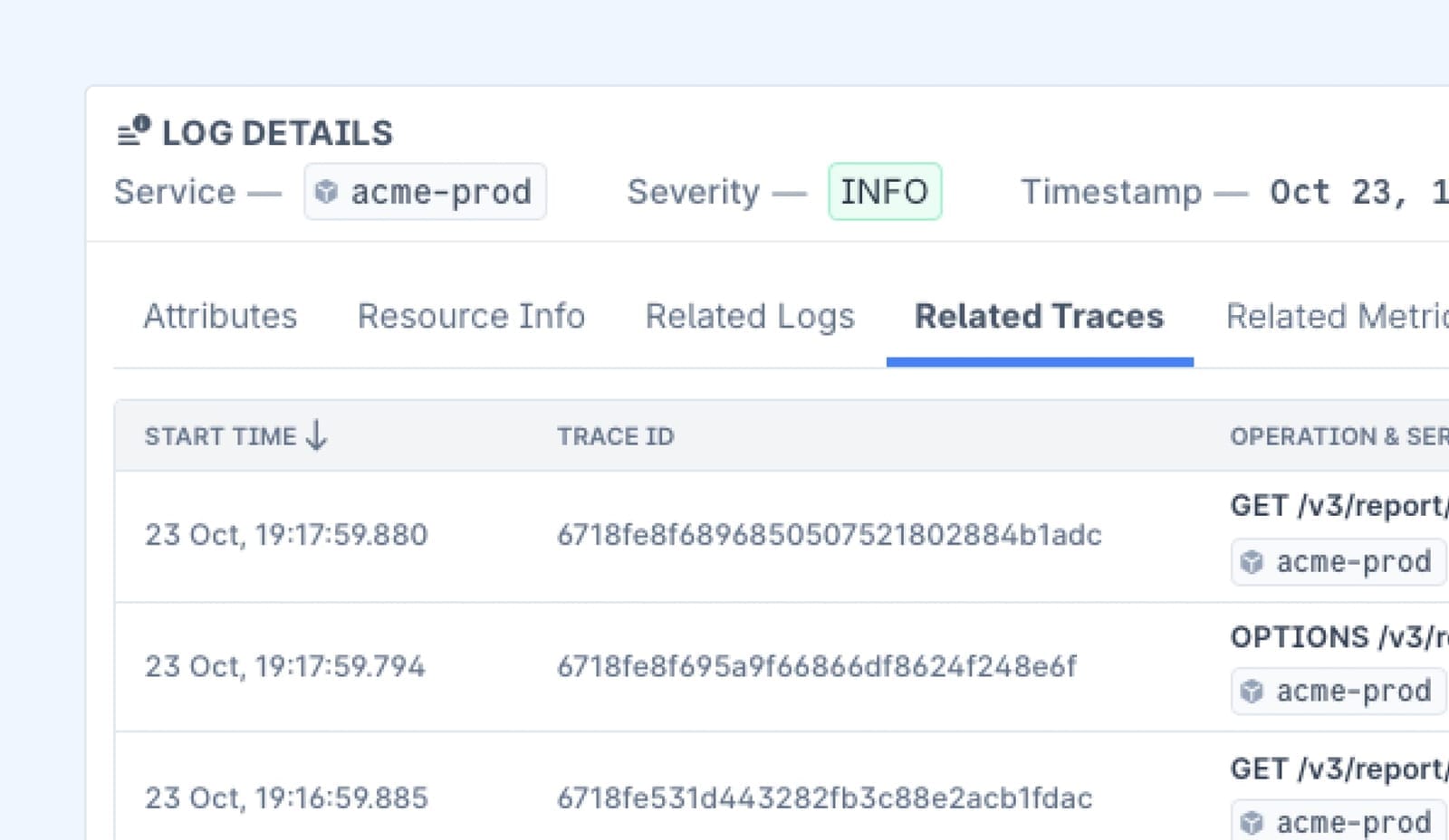

6. Enable Logging and Traceability

Store detailed logs of API requests and responses to aid in diagnosing issues and identifying trends.

API Monitoring Tools

Here are some tools to help you implement API monitoring effectively:

1. Postman

- Features: Offers API testing and monitoring with scheduled requests and detailed reports.

- Best For: Teams focusing on API development and testing.

2. Last9

- Features: A high-cardinality telemetry warehouse that integrates metrics, logs, and traces into a unified platform.

- Best For: Managing high-cardinality data while achieving comprehensive observability across applications and APIs.

3. Dynatrace

- Features: AI-driven insights into API dependencies, performance bottlenecks, and user impact.

- Best For: Large-scale applications with complex dependencies.

4. SolarWinds API Monitoring

- Features: Focuses on uptime, response times, and availability with real-time dashboards.

- Best For: IT teams managing enterprise-grade APIs.

5. Assertible

- Features: Automated API testing and monitoring integrated with CI/CD workflows.

- Best For: Developers looking for automation-friendly solutions.

Challenges in API Monitoring

Despite its benefits, API monitoring has its challenges:

- Handling Complex Microservices Architectures

APIs often interact with multiple microservices, making it difficult to isolate issues. - Scalability

Monitoring high-traffic APIs can generate massive amounts of data, requiring robust tools to manage. - False Positives and Negatives

Poorly configured thresholds can either miss critical issues or trigger unnecessary alerts. - Security Concerns

Monitoring often involves sensitive data, necessitating stringent security measures.

Last9 has been crucial for us. We’ve been able to find interesting bugs, that were not possible for us with New Relic. — Shekhar Patil, Founder & CEO, Tacitbase

Complete API Monitoring Implementation Guide

Effective API monitoring is more than just checking for uptime—it’s about ensuring robust performance, timely alerts, and insightful analytics.

Here’s a structured implementation guide to help you build a comprehensive API monitoring framework:

1. Basic API Health Monitoring Setup

Start by capturing basic metrics like request counts and latency using Prometheus metrics in a FastAPI application.

from fastapi import FastAPI, HTTPException

from prometheus_client import Counter, Histogram, generate_latest

import time

import logging

# Initialize FastAPI

app = FastAPI()

# Define Prometheus metrics

REQUEST_COUNT = Counter(

'api_requests_total',

'Total API requests',

['method', 'endpoint', 'status']

)

REQUEST_LATENCY = Histogram(

'api_request_latency_seconds',

'Request latency',

buckets=[0.1, 0.5, 1.0, 2.0, 5.0] # Define latency buckets in seconds

)

# Configure logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

@app.middleware("http")

async def monitor_requests(request, call_next):

start_time = time.time()

try:

# Process the request

response = await call_next(request)

# Record metrics

REQUEST_COUNT.labels(

method=request.method,

endpoint=request.url.path,

status=response.status_code

).inc()

logger.info(f"Request completed: {request.method} {request.url.path}")

return response

except Exception as e:

REQUEST_COUNT.labels(

method=request.method,

endpoint=request.url.path,

status=500

).inc()

logger.error(f"Request failed: {request.method} {request.url.path} - {str(e)}")

raise e

finally:

duration = time.time() - start_time

REQUEST_LATENCY.observe(duration)Explanation:

- Metric Definition:

REQUEST_COUNT: Tracks total requests with labels for method, endpoint, and statusREQUEST_LATENCY: Measures request duration using histogram buckets

- Middleware Function:

- Captures timing information

- Records successful and failed requests

- Logs request details

- Measures request latency

2. Advanced Error Handling and Response Monitoring

Enhance monitoring by tracking errors and response sizes.

from typing import Dict, Any

from datetime import datetime

import json

class APIMonitor:

def __init__(self):

self.error_counter = Counter(

'api_errors_total',

'Total API errors',

['error_type', 'endpoint']

)

self.response_size = Histogram(

'api_response_size_bytes',

'Response size in bytes',

buckets=[100, 1000, 10000, 100000]

)

async def monitor_response(self, response: Dict[str, Any], endpoint: str) -> None:

response_size = len(json.dumps(response))

self.response_size.observe(response_size)

self.record_timestamp(endpoint)

def record_error(self, error_type: str, endpoint: str) -> None:

self.error_counter.labels(

error_type=error_type,

endpoint=endpoint

).inc()

def record_timestamp(self, endpoint: str) -> None:

timestamp = datetime.utcnow().timestamp()

Gauge(

f'api_last_success_{endpoint}',

f'Last successful response timestamp for {endpoint}'

).set(timestamp)Explanation:

- Error Tracking:

- Counts errors by type and endpoint

- Provides error metrics for alerting

- Response Monitoring:

- Measures response size distribution

- Records timestamps for availability tracking

3. API Performance Tracking

Monitor endpoint performance using a rolling window of metrics.

from dataclasses import dataclass

from collections import defaultdict

import statistics

@dataclass

class PerformanceMetrics:

endpoint: str

latency_ms: float

status_code: int

timestamp: float

class PerformanceTracker:

def __init__(self):

self.metrics_buffer = defaultdict(list)

self.window_size = 100 # Track last 100 requests

def add_metric(self, metric: PerformanceMetrics) -> None:

endpoint_metrics = self.metrics_buffer[metric.endpoint]

endpoint_metrics.append(metric)

if len(endpoint_metrics) > self.window_size:

endpoint_metrics.pop(0)

def get_endpoint_stats(self, endpoint: str) -> Dict[str, float]:

metrics = self.metrics_buffer[endpoint]

latencies = [m.latency_ms for m in metrics]

return {

'avg_latency': statistics.mean(latencies),

'p95_latency': statistics.quantiles(latencies, n=20)[18],

'error_rate': sum(1 for m in metrics if m.status_code >= 400) / len(metrics)

}Explanation:

- Metric Collection:

- Uses dataclass for structured metric storage

- Maintains rolling window of recent requests

- Statistical Analysis:

- Calculates average latency

- Computes 95th percentile latency

- Determines error rate

4. Alert Configuration

Set up alerts based on thresholds for critical metrics.

from abc import ABC, abstractmethod

from typing import List

class AlertCondition(ABC):

@abstractmethod

def check(self, metrics: Dict[str, float]) -> bool:

pass

class LatencyAlert(AlertCondition):

def __init__(self, threshold_ms: float):

self.threshold = threshold_ms

def check(self, metrics: Dict[str, float]) -> bool:

return metrics['p95_latency'] > self.threshold

class AlertManager:

def __init__(self):

self.conditions: List[AlertCondition] = []

self.notifications = []

def add_condition(self, condition: AlertCondition) -> None:

self.conditions.append(condition)

def check_alerts(self, metrics: Dict[str, float]) -> List[str]:

alerts = []

for condition in self.conditions:

if condition.check(metrics):

alerts.append(f"Alert: Threshold exceeded - {metrics}")

return alertsExplanation:

- Alert Definition:

- Abstract base class for alert conditions

- Specific implementations for different metrics

- Alert Management:

- Manages multiple alert conditions

- Checks metrics against thresholds

- Generates alert messages

5. Dashboard Integration

Create time-series data for real-time dashboards.

interface DashboardMetrics {

timestamp: number;

endpoint: string;

metrics: {

latency: number;

errorRate: number;

requestCount: number;

};

}

class DashboardUpdater {

private metrics: DashboardMetrics[] = [];

private readonly maxDataPoints = 1000;

public addMetric(metric: DashboardMetrics): void {

this.metrics.push(metric);

if (this.metrics.length > this.maxDataPoints) {

this.metrics.shift();

}

}

public getTimeSeriesData(): DashboardMetrics[] {

return this.metrics;

}

}Explanation:

- Data Structure:

- Defines interface for dashboard metrics

- Maintains time-series data

- Data Management:

- Rolling window of data points

- Methods for data access and updates

Conclusion

API monitoring is key to delivering reliable performance and ensuring a smooth user experience for modern applications. At Last9, we are more than just an observability platform—we're about redefining how observability should be approached.

While many platforms offer fragmented solutions, we focus on achieving the perfect balance between performance, user experience, and thoughtful insights. Our approach ensures that you not only track your APIs but truly understand their impact on your systems and users.

Schedule a demo with us to know or even try it for free to experience the features!

FAQs

1. How often should APIs be monitored?

Ideally, APIs should be monitored continuously, with synthetic tests running regularly.

2. Can API monitoring improve security?

Yes, by identifying unauthorized access attempts, tracking unusual request patterns, and enforcing compliance standards.

3. What’s the difference between API monitoring and testing?

API testing is a pre-deployment activity to validate functionality, while monitoring is an ongoing process to ensure reliability and performance in production.

4. How do I secure API monitoring data?

Encrypt sensitive information, implement access controls, and anonymize logs where necessary.