Search plays a key role in accessing information efficiently. Whether you're navigating an online store, querying enterprise data, or exploring a large content repository, a well-designed search engine helps retrieve relevant results quickly.

Apache Solr is an open-source search platform built on Apache Lucene. It is widely used for handling large-scale search and analytics, powering platforms like eBay, Netflix, and Twitter. This guide explores Solr’s architecture, features, and use cases to help you understand its capabilities and how it can be applied effectively.

What is Solr?

Apache Solr is a powerful, enterprise-grade search platform designed for full-text search, faceted navigation, and real-time indexing.

It provides a flexible and customizable framework to efficiently handle both structured and unstructured data. Thanks to its speed, scalability, and extensibility, Solr is widely used across industries.

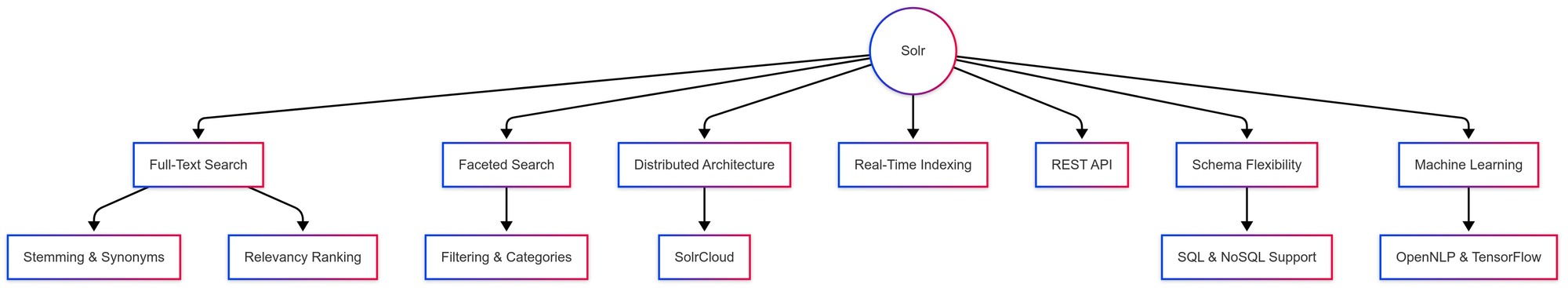

Key Features of Solr

- Full-Text Search – Advanced text search with features like stemming, synonyms, and relevancy ranking.

- Faceted Search – Allows users to filter and categorize results, making it ideal for e-commerce and large datasets.

- Distributed Architecture – Supports SolrCloud for seamless scalability across multiple nodes.

- Near-Real-Time Indexing – Newly indexed documents become searchable shortly after they are committed, so results stay close to up to date.

- REST API Support – Easily integrates with other applications and platforms.

- Schema Flexibility – Supports an explicitly defined schema as well as a schemaless mode that guesses field types, so it can index structured, semi-structured, and unstructured data.

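- Machine Learning Integration – Supports relevance tuning through its Learning to Rank module and language-aware text analysis through Apache OpenNLP integration. Models trained in external frameworks such as TensorFlow can feed into ranking.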

How Solr is Built: Architecture and Key Components

At its core, Solr runs on Apache Lucene and supports distributed indexing and searching through SolrCloud mode. Let’s break down the key architectural components that make Solr robust and scalable.

Nodes and Clusters

A Solr node is a single running instance of Solr on a server. When multiple nodes work together, they form a Solr cluster, which distributes indexing and query processing across multiple machines.

Clusters allow horizontal scaling, meaning you can add more nodes to handle increasing workloads. This setup ensures:

- Better performance by distributing searches across multiple servers.

- Redundancy and fault tolerance in case a node fails.

- Efficient load balancing to manage high search traffic.

For example, an e-commerce website with millions of products might use a Solr cluster to ensure fast searches, even during peak shopping times.

ZooKeeper Management

SolrCloud relies on Apache ZooKeeper, which acts as a cluster coordinator to manage configurations, node communication, and leader elections.

ZooKeeper ensures that:

- Nodes stay synchronized and are aware of each other.

- Shard assignments and replicas are properly distributed.

- If a node fails, a new leader is elected automatically.

A typical SolrCloud deployment includes a separate ZooKeeper ensemble (a group of ZooKeeper servers) to maintain high availability and reliability.
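When sizing the ensemble, it helps to remember that ZooKeeper stays available only while a majority of its servers are up. The sketch below is a rough illustration of that arithmetic, plus a helper that joins hosts into a comma-separated connection string. The host names `zk1` to `zk3` and the `/solr` chroot are placeholders, not values from this guide.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest majority of an ensemble with the given number of servers."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be at least 1")
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many servers can fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


def zk_connection_string(hosts, port=2181, chroot=""):
    """Join host:port pairs with commas and append an optional chroot path."""
    return ",".join(f"{host}:{port}" for host in hosts) + chroot


if __name__ == "__main__":
    for size in (1, 3, 4, 5):
        print(size, "servers -> quorum", quorum_size(size),
              "- tolerates", tolerated_failures(size), "failure(s)")
    # Hypothetical host names and chroot, for illustration only.
    print(zk_connection_string(["zk1", "zk2", "zk3"], chroot="/solr"))
```

A four-server ensemble tolerates no more failures than a three-server one, which is why odd ensemble sizes are the usual choice.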

Sharding and Replication

To manage large-scale data, Solr uses sharding, which splits an index into smaller parts distributed across different nodes. Each shard contains a portion of the data, making it easier to scale.
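To make the idea concrete, here is a toy sketch of hash-based routing: each document ID is hashed and mapped to one shard, so the same ID always lands in the same place. This is not Solr's actual router. It uses CRC32 with a modulo as a stand-in purely for illustration, and the function name `pick_shard` is made up for this example.

```python
import zlib
from collections import Counter


def pick_shard(doc_id: str, num_shards: int) -> int:
    """Map a document ID to a shard index in [0, num_shards).

    Stand-in hashing (CRC32 + modulo) for illustration only; Solr's own
    routing logic is not reproduced here.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    return zlib.crc32(doc_id.encode("utf-8")) % num_shards


if __name__ == "__main__":
    # Count how many of 10,000 synthetic IDs land on each of 4 shards.
    counts = Counter(pick_shard(f"doc-{i}", 4) for i in range(10_000))
    print(sorted(counts.items()))
```

Because the mapping depends only on the ID, any node can work out which shard owns a document without consulting the others.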

Each shard can have multiple replicas for fault tolerance and performance. Solr has three replica types. Within each shard, one eligible replica (NRT or Tlog) is elected leader and coordinates updates for that shard:

- NRT (Near-Real-Time) Replica – The default type. It indexes documents locally and keeps a transaction log, so it handles both indexing and querying and can become leader.

- Tlog (Transaction Log) Replica – Records updates in its transaction log and copies index segments from the leader instead of indexing locally. It can become leader if needed.

- Pull Replica – Only copies the index from the leader and serves queries. It never becomes leader, which suits read-heavy workloads with minimal impact on write performance.

For example, a news website indexing millions of articles can use sharding to distribute data while replicas ensure that searches remain fast and reliable.
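As a sketch of how such a layout might be requested, the snippet below builds a Collections API `CREATE` URL with a shard count and per-type replica counts. The base URL, the collection name `articles`, and the specific counts are assumptions chosen for illustration; check the parameters against the reference guide for your Solr version before relying on them.

```python
from urllib.parse import urlencode


def create_collection_url(base_url, name, num_shards, nrt=1, tlog=0, pull=0):
    """Build a Collections API CREATE URL for a SolrCloud cluster."""
    params = {
        "action": "CREATE",
        "name": name,
        "numShards": num_shards,
        "nrtReplicas": nrt,    # replicas that index locally
        "tlogReplicas": tlog,  # replicas that copy segments from the leader
        "pullReplicas": pull,  # query-only replicas
    }
    return f"{base_url}/admin/collections?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical layout: 4 shards, each with 2 NRT replicas and 1 pull replica.
    print(create_collection_url("http://localhost:8983/solr", "articles", 4, nrt=2, pull=1))
```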

Multi-Region Setups – Scaling Across the Globe

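For global-scale applications, Solr can be deployed across multiple regions, with copies of the index kept in data centers around the world. A SolrCloud cluster is normally confined to one region, so keeping regions in sync usually relies on additional tooling or on indexing into each region separately.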

This setup helps:

- Reduce query response times by serving users from the closest data center.

- Improve redundancy with data mirrored across regions.

- Ensure failover protection in case of regional outages.

Multi-region deployments typically follow two models:

- Active-Active Replication – Data is available for both reading and writing in multiple regions.

- Active-Passive Replication – One region handles writes while others serve as read replicas for failover support.

For example, a global travel website can store indexes in North America, Europe, and Asia, ensuring that users get fast results no matter where they are.

Why Use Solr Over Other Search Engines?

Several search platforms, including Elasticsearch, Algolia, and Meilisearch, compete with Solr. However, Solr stands out in key areas:

| Feature | Apache Solr | Elasticsearch | Algolia |
|---|---|---|---|
| Open Source | Yes (Apache 2.0) | Yes (license terms have changed since 2021) | No |
| Enterprise-Grade Scalability | Yes | Yes | Hosted service (scaling managed by the vendor) |
| Full-Text Search | Yes | Yes | Yes |
| Native Faceted Search | Yes | Yes (via aggregations) | Yes |
| Built-in Clustering | Yes (SolrCloud, requires ZooKeeper) | Yes (built in) | N/A (hosted service) |
| SQL-Like Queries | Yes (Parallel SQL) | Yes (Elasticsearch SQL) | No |
| Community Support | Large | Large | Smaller |

Solr is an excellent choice for businesses that need flexibility, customization, and enterprise-level support without vendor lock-in. Its ability to scale, handle complex queries, and offer native faceting makes it a strong contender in the search space.

How to Set Up Apache Solr

Getting started with Solr is straightforward. Follow these steps:

Step 1: Install Solr

Solr runs on Java, so make sure you have Java 11+ installed. Then, download and extract Solr:

wget https://archive.apache.org/dist/solr/solr/9.3.0/solr-9.3.0.tgz

tar -xvzf solr-9.3.0.tgz

cd solr-9.3.0/bin

./solr start

This starts Solr on its default port, 8983. You can then open the admin UI at http://localhost:8983/solr/.
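To confirm from a script that the server is reachable, one option is to request the system-info endpoint and check for an HTTP 200. The sketch below assumes the default base URL used in this guide. The `opener` parameter is an addition for this example so the check can be exercised without a running server.

```python
from urllib.request import urlopen


def system_info_url(base_url: str) -> str:
    """URL of Solr's system-info endpoint for the given base URL."""
    return f"{base_url.rstrip('/')}/admin/info/system?wt=json"


def solr_is_up(base_url: str, opener=urlopen, timeout: float = 5.0) -> bool:
    """Return True if the system-info endpoint answers with HTTP 200."""
    try:
        with opener(system_info_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # covers refused connections, timeouts, and HTTP errors
        return False


# Usage, with Solr running locally:
#   solr_is_up("http://localhost:8983/solr")
```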

Step 2: Create a Core

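In standalone mode, Solr organizes data into cores, each holding one index with its own schema and configuration (loosely comparable to a database in a relational system). In SolrCloud mode, the equivalent unit is a collection. To create a new core, run:

./solr create -c mycollection

This sets up a new core named mycollection, ready to store and index your data.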
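Once the core exists, you may want to define fields explicitly rather than rely on guessed types. The sketch below builds a Schema API request that adds a `title` field. The `text_general` field type is assumed to be present in the configset in use, and the helper name `add_field_request` is made up for this example.

```python
import json
from urllib.request import Request


def add_field_request(base_url, collection, name, field_type, stored=True):
    """Build a POST request that adds one field through the Schema API."""
    body = {"add-field": {"name": name, "type": field_type, "stored": stored}}
    return Request(
        f"{base_url}/{collection}/schema",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage, with Solr running locally:
#   from urllib.request import urlopen
#   req = add_field_request("http://localhost:8983/solr", "mycollection",
#                           "title", "text_general")
#   urlopen(req, timeout=10).close()
```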

Step 3: Index Documents

You can index data in formats like JSON, CSV, or XML. Here’s an example of indexing a simple JSON document:

curl -X POST -H "Content-Type: application/json" --data '[{"id":"1","title":"Hello Solr"}]' "http://localhost:8983/solr/mycollection/update?commit=true"

This command adds a document with an ID of 1 and a title of "Hello Solr" to the collection. The commit=true parameter makes the document visible to searches right away.
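The same request can be sent from application code. The following is a minimal sketch using only the Python standard library; it assumes the base URL and collection name from this guide, and the helper names are made up for illustration.

```python
import json
from urllib.request import Request, urlopen


def build_update_request(base_url, collection, docs, commit=True):
    """Build a POST request that sends a list of documents to /update."""
    url = f"{base_url}/{collection}/update"
    if commit:
        url += "?commit=true"
    return Request(
        url,
        data=json.dumps(docs).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def index_documents(base_url, collection, docs):
    """Send the documents and return Solr's parsed JSON response."""
    request = build_update_request(base_url, collection, docs)
    with urlopen(request, timeout=10) as resp:
        return json.load(resp)


# Usage, with Solr running locally:
#   index_documents("http://localhost:8983/solr", "mycollection",
#                   [{"id": "1", "title": "Hello Solr"}])
```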

Step 4: Search Data

To query Solr, use the following URL in a browser or command line:

http://localhost:8983/solr/mycollection/select?q=title:Hello

This retrieves all documents whose title field matches the term "Hello".
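Query URLs get harder to write by hand once you add more parameters. The sketch below assembles a `/select` URL with optional field lists and facet fields, taking care of URL encoding. The `category` field in the usage example is hypothetical and does not appear in the documents indexed above.

```python
from urllib.parse import urlencode


def select_url(base_url, collection, q, rows=10, fields=None, facet_fields=()):
    """Build a /select URL with optional returned fields and facet fields."""
    params = [("q", q), ("rows", rows)]
    if fields:
        params.append(("fl", ",".join(fields)))
    if facet_fields:
        params.append(("facet", "true"))
        params.extend(("facet.field", name) for name in facet_fields)
    return f"{base_url}/{collection}/select?{urlencode(params)}"


if __name__ == "__main__":
    base = "http://localhost:8983/solr"
    print(select_url(base, "mycollection", "title:Hello"))
    # Hypothetical facet field, for illustration only.
    print(select_url(base, "mycollection", "title:Hello",
                     fields=["id", "title"], facet_fields=["category"]))
```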

How Solr Ensures High Availability and Fault Tolerance

Solr is designed to stay online even when failures occur. It achieves high availability through automatic failover: if a node or a shard leader goes down, a new leader is elected through ZooKeeper and queries are routed to the remaining replicas.

To maintain consistent performance and avoid downtime, Solr uses:

- Replica distribution to ensure that no single failure disrupts the search.

- Load balancing through Solr’s built-in routing or external tools.

- Query rerouting to available nodes when failures occur.

With these mechanisms, Solr can keep serving searches through node failures, provided at least one replica of every shard stays available.
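On the client side, the rerouting idea can be approximated by trying several nodes in turn. The sketch below is a simplified illustration, not how Solr's own clients work internally. The node URLs are hypothetical, and `fetch` is a caller-supplied function so the logic can be tested without a cluster.

```python
from typing import Callable, Iterable


def query_with_failover(node_urls: Iterable[str], fetch: Callable[[str], str]) -> str:
    """Try each node in order and return the first successful response."""
    last_error = None
    for url in node_urls:
        try:
            return fetch(url)
        except OSError as exc:  # refused connection, timeout, HTTP error
            last_error = exc
    raise ConnectionError("no Solr node answered") from last_error


# Usage with the standard library (hypothetical node addresses):
#   from urllib.request import urlopen
#   nodes = ["http://solr1:8983/solr/mycollection/select?q=*:*",
#            "http://solr2:8983/solr/mycollection/select?q=*:*"]
#   body = query_with_failover(
#       nodes, lambda u: urlopen(u, timeout=5).read().decode("utf-8"))
```

A ZooKeeper-aware client such as SolrJ's CloudSolrClient discovers live nodes itself, so a hand-rolled loop like this is mainly useful for simple scripts.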

Infrastructure Considerations

Deploying Solr at scale requires careful planning to ensure performance, reliability, and efficiency. Key factors to consider include:

- Storage – Fast, scalable storage is essential for handling large indexes efficiently.

- Memory and CPU – JVM settings must be optimized to balance query execution and indexing speed.

- Networking – Proper inter-node communication and data replication are crucial, especially in multi-region setups.

- Monitoring and Logging – Using Last9 for real-time observability and performance tuning helps detect and fix issues quickly.

With the right infrastructure strategy, organizations can build scalable and resilient search solutions.

Solr Pain Points: Issues You Might Face

Despite its strengths, Solr comes with challenges that users must navigate.

1. Complex Setup and Configuration

- Requires deep knowledge of indexing, sharding, and replication.

- Large-scale deployments often involve complex configurations.

2. Scaling Challenges

- While SolrCloud enables distributed search, managing clusters can be tricky.

- Balancing shards and replicas efficiently requires careful tuning.

3. Resource-Intensive Operations

- Solr can be memory- and CPU-intensive, especially for large indexes and frequent queries.

- Performance tuning is essential to prevent bottlenecks.

4. Indexing Latency

- Documents become searchable only after a commit, so near-real-time updates still carry some delay. This makes Solr less suitable for use cases where every write must be visible to searches instantly.
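One common way to bound this delay without committing on every request is the `commitWithin` parameter, which asks Solr to make the update visible within a given number of milliseconds. The sketch below only builds the update URL; the 1000 ms value in the usage example is an arbitrary illustration, not a recommendation.

```python
from urllib.parse import urlencode


def update_url(base_url, collection, commit_within_ms=None, soft_commit=False):
    """Build an /update URL with optional commit-related parameters."""
    params = {}
    if commit_within_ms is not None:
        params["commitWithin"] = commit_within_ms  # upper bound on visibility delay
    if soft_commit:
        params["softCommit"] = "true"  # make changes visible without a hard commit
    url = f"{base_url}/{collection}/update"
    return f"{url}?{urlencode(params)}" if params else url


if __name__ == "__main__":
    print(update_url("http://localhost:8983/solr", "mycollection", commit_within_ms=1000))
```

Shorter windows make documents visible sooner but cause more frequent commits, which adds load during heavy indexing.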

5. Limited Native Support for Structured Queries

- Unlike relational databases, Solr is optimized for full-text search rather than structured queries.

- Complex joins and aggregations may require workarounds or external processing.

6. Learning Curve

- Requires an understanding of query syntax, schema design, and relevancy tuning.

- Documentation can be dense, and best practices continue to evolve.

7. Security Considerations

- Authentication and authorization are not enabled out of the box; additional configuration is needed to secure endpoints.

- Authorization rules apply to collections and API endpoints. Document-level access control is not built in and has to be implemented separately.
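Once basic authentication is enabled on the server, clients need to send credentials with each request. The sketch below shows how a standard HTTP Basic `Authorization` header can be attached using the Python standard library. The user name and password are placeholders, and enabling authentication on the Solr side is assumed to be done separately.

```python
import base64
from urllib.request import Request


def basic_auth_header(user: str, password: str) -> str:
    """Encode credentials as an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def authed_request(url: str, user: str, password: str) -> Request:
    """Build a request that carries Basic credentials."""
    return Request(url, headers={"Authorization": basic_auth_header(user, password)})


# Usage (placeholder credentials; always use HTTPS so they are not sent in clear text):
#   from urllib.request import urlopen
#   req = authed_request("https://solr.example.com/solr/mycollection/select?q=*:*",
#                        "search_user", "change-me")
#   urlopen(req, timeout=10)
```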

Solr vs. Relational Databases: Key Differences and Use Cases

Solr and relational databases (RDBMS) serve different purposes, and understanding their strengths helps in choosing the right tool for the job.

1. Data Structure and Storage

| Feature | Solr (Search Engine) | Relational Databases (PostgreSQL, MySQL, etc.) |
|---|---|---|
| Data Model | Schema-based but optimized for documents (JSON, XML) | Structured, table-based with rows and columns |
| Indexing | Optimized for full-text search and inverted indexing | Uses B-Trees and hash indexes for quick lookups |
| Joins | Limited support, not as efficient as SQL joins | Strong support for complex joins |

Use Case: Solr is better for unstructured or semi-structured data, while RDBMS is better for structured data with relationships.

2. Querying Capabilities

| Feature | Solr | Relational Databases |
|---|---|---|
| Query Language | Solr Query Parser (or JSON API) | SQL |
| Full-Text Search | Highly optimized, with ranking, stemming, and faceting | Basic, with support for LIKE and full-text indexes |
| Aggregations | Supports facets and stats but lacks advanced SQL-style aggregations | Strong support for GROUP BY, HAVING, and window functions |

Use Case: If you need fast text search with ranking and faceting, Solr is superior. If you need transactional operations and structured queries, an RDBMS is a better choice.
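To show how a facet can stand in for a simple aggregation, the sketch below builds a JSON Facet API request body that groups documents by a field and computes a metric per bucket. It is roughly comparable to `SELECT category, COUNT(*), AVG(price) ... GROUP BY category`. The `category` and `price` fields are hypothetical, and the body would be sent as a POST to the collection's `/query` endpoint.

```python
import json


def group_by_facet(field, metric_name, metric_expr, limit=10):
    """Build a JSON request body with a terms facet and one nested metric."""
    return {
        "query": "*:*",
        "limit": 0,  # return only facet buckets, no documents
        "facet": {
            f"by_{field}": {
                "type": "terms",
                "field": field,
                "limit": limit,
                "facet": {metric_name: metric_expr},
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical fields, for illustration only.
    print(json.dumps(group_by_facet("category", "avg_price", "avg(price)"), indent=2))
```

Each bucket in the response carries a document count alongside the requested metric, which covers the common "count and average per group" case without a join or an external database.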

3. Scalability and Performance

| Feature | Solr | Relational Databases |
|---|---|---|
| Read Performance | Fast due to indexing and distributed search | Slower for full-text queries, but optimized for structured data |
| Write Performance | Slower due to indexing overhead | Faster for transactional writes (ACID compliance) |
| Scaling | Horizontal scaling with SolrCloud | Typically scales vertically, but sharding is possible |

Use Case: Solr is better for high-speed search across large datasets, while databases handle frequent writes and updates efficiently.

4. Transaction Support

| Feature | Solr | Relational Databases |
|---|---|---|
| ACID Compliance | No, uses optimistic concurrency | Yes, ensures atomicity, consistency, isolation, and durability |
| Real-Time Updates | Near real-time, but with some latency | Immediate, strong consistency |

Use Case: If strict consistency and transactions are required (e.g., financial applications), a relational database is the right choice. If search speed and relevance are the priority, Solr is better.
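Solr's optimistic concurrency works through the `_version_` field: a client sends back the version it last read, and the update is rejected with a conflict if the stored document has changed since. The sketch below only prepares such documents; the helper names and the sample version number are made up for illustration, and the conflict handling on the HTTP side is left out.

```python
# Special _version_ values understood by Solr's optimistic concurrency check.
MUST_EXIST = 1       # update only if the document already exists
MUST_NOT_EXIST = -1  # add only if the document does not exist yet


def with_version_check(doc: dict, expected_version: int) -> dict:
    """Return a copy of doc that carries the version constraint."""
    constrained = dict(doc)
    constrained["_version_"] = expected_version
    return constrained


def create_only(doc: dict) -> dict:
    """Return a copy of doc that Solr should reject if the ID already exists."""
    return with_version_check(doc, MUST_NOT_EXIST)


if __name__ == "__main__":
    original = {"id": "1", "title": "Hello Solr"}
    # Hypothetical version number previously read from a query response.
    print(with_version_check(original, 1632740120218042368))
    print(create_only(original))
```

This protects a single document from lost updates, but it is not a substitute for multi-row transactions in a relational database.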

5. Best Use Cases for Each

| Use Case | Best Tool |
|---|---|
| Full-text search (e.g., product search, document search) | Solr |
| Structured data with strong relationships (e.g., financial records) | RDBMS |
| E-commerce catalogs with faceted filtering | Solr |
| Complex analytical queries with joins and aggregations | RDBMS |
| Log search and analysis (e.g., ELK stack alternative) | Solr |
| High-concurrency transactional applications | RDBMS |

Conclusion

Apache Solr is a powerful and flexible search platform, well-suited for enterprise applications that require scalability and robust search capabilities. For deeper insights into its advanced features and integrations, the official Apache Solr documentation is a great place to start.