This piece is part of a series on OpenTelemetry. We’ll walk you through OpenTelemetry from the ground up: what it solves, how it works, and how you can start using it today.

Traditionally, teams had to use different tools for each type of signal:

- Logs might go to Elasticsearch

- Metrics to Prometheus

- Traces to Jaeger or Zipkin

Each tool had its own setup, query language, and data format. Nothing was connected. So when you needed to troubleshoot, you were stuck flipping between dashboards, trying to match timestamps, and hoping something lined up.

Today, systems span many services, often running in containers across multiple cloud environments. Troubleshooting means digging through all these layers to find where something went wrong. And with AI-native workflows adding to the mix, telemetry data and complexity grow even further. More agents, more data points, and more interactions make it harder to get a clear view.

It’s messy — and that’s exactly the problem OpenTelemetry was built to solve.

But why now? The amount of telemetry data generated by modern systems has skyrocketed in recent years. According to Grand View Research, the global data observability market hit $2.14 billion in 2024 and is projected to grow 12.2% annually through 2030. This reflects organizations’ growing need for unified visibility and analysis across increasingly complex environments, especially as AI-native workflows add new layers of telemetry and complexity.

OpenTelemetry: A Unified Way to Understand Your Systems

OpenTelemetry (or OTel) is an open-source observability framework that helps you understand what’s happening inside your applications, without having to set up a separate tool for every signal.

Instead of managing different pipelines for logs, metrics, and traces, OpenTelemetry provides one consistent way to collect them all. It acts like a common language for observability data that works across all your services, regardless of the programming language.

The project came about by merging two earlier efforts — OpenCensus (from Google) and OpenTracing — into a single, unified framework. This means OpenTelemetry replaces both and is quickly becoming the standard way to collect telemetry data. Many established monitoring tools now support OpenTelemetry, reflecting how widely it’s been adopted.

Today, OpenTelemetry is the second most active project in the Cloud Native Computing Foundation (CNCF), the same organization behind Kubernetes.

But what’s the difference between tools that support OpenTelemetry and those built to be OpenTelemetry-native?

Tools that support OpenTelemetry can accept data collected using OTel standards, but their internal systems may not be designed specifically around those standards. They often add OpenTelemetry compatibility alongside existing methods.

On the other hand, OpenTelemetry-native tools are built from the ground up with OTel in mind. Their core architecture is designed to work smoothly with OTel data formats and protocols. This usually means easier integration, better performance, and fewer gaps in data collection.

At its core, OpenTelemetry:

- Uses a standard format to collect telemetry data

- Works consistently across services and languages

- Sends data to the tools you already use — like Prometheus, Jaeger, or Last9

What is OpenTelemetry not?

OpenTelemetry is not a monitoring or alerting tool. While it provides the means to collect telemetry data from applications and systems, it does not offer built-in monitoring capabilities or the ability to configure alerts based on that data.

OpenTelemetry focuses on the collection, processing, and export of telemetry data, leaving the monitoring and alerting aspects to other observability tools like Last9 that can consume the exported telemetry data.

The Three Signals OpenTelemetry Focuses on

OpenTelemetry collects three key types of signals: traces, metrics, and logs. Each offers a different perspective on your system — and together, they help you understand not just what broke, but why.

This unified view is becoming especially important as AI-driven tools increasingly rely on connected telemetry data to speed up debugging and root cause analysis.

Metrics

Metrics track things like traffic, errors, and response times over time. They help you spot issues before users feel them.

Example:

Your checkout service usually responds in 200ms. One day, it jumps to 600ms. That’s your cue to investigate.

Common metrics include:

- Requests per minute

- Error counts

- Response times

- CPU and memory usage

Logs

Logs are time-stamped messages — things like errors, warnings, and debug info. They help explain why something happened when your other signals point to what and where.

Example:

Your payment service fails. Traces show it broke during the payment step. Metrics show the failure rate. The log gives you the reason: “Payment gateway timeout after 3 retries.”

OpenTelemetry links logs with traces and metrics, so you get the full picture, without needing to manually piece things together.
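
One common way this linking works is to stamp every log line with the IDs of the trace and span that were active when it was written. The sketch below shows the idea using only Python’s standard logging module. The `current_trace` variable and `TraceContextFilter` class are hypothetical names for illustration; an OpenTelemetry SDK tracks the active context for you.

```python
import contextvars
import io
import logging

# Hypothetical holder for the active trace context; a real SDK manages this.
current_trace = contextvars.ContextVar("current_trace", default=None)


class TraceContextFilter(logging.Filter):
    """Copy the active trace and span IDs onto every log record."""

    def filter(self, record):
        ctx = current_trace.get() or {}
        record.trace_id = ctx.get("trace_id", "-")
        record.span_id = ctx.get("span_id", "-")
        return True  # never drop the record, only annotate it


def build_logger(stream):
    logger = logging.getLogger("payment")
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(
        "%(levelname)s trace_id=%(trace_id)s span_id=%(span_id)s %(message)s"
    ))
    handler.addFilter(TraceContextFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


stream = io.StringIO()
logger = build_logger(stream)

# Pretend a span is active while the payment call fails.
current_trace.set({
    "trace_id": "4bf92f3577b34da6a3ce929d0e0e4736",
    "span_id": "00f067aa0ba902b7",
})
logger.error("Payment gateway timeout after 3 retries")
print(stream.getvalue(), end="")
```

With the IDs on the log line, a backend can jump from a failed span straight to the messages written during it.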

Traces

Traces help you see how a request flows through your system — which services it touches and how long each step takes.

Example:

A “Place Order” request might go through the frontend, order API, inventory check, payment, and email confirmation. A trace captures this path from start to finish.

Each step is a span — a small unit of work with timing and context. With traces, you get a clear picture of how your system behaves under the hood.
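
To make the span idea concrete, here is a minimal model of the “Place Order” trace as plain data. It is a sketch, not the OpenTelemetry data model: the `Span` class, the short IDs, and the timings are invented for illustration. What it does show is the core structure: every span shares a trace ID and points at its parent, which is enough to rebuild the call tree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in one request
    span_id: str              # unique per unit of work
    parent_id: Optional[str]  # None marks the root span
    name: str
    start_ms: int
    end_ms: int

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def render(spans, parent_id=None, depth=0):
    """Return indented lines for the call tree, children ordered by start time."""
    lines = []
    children = sorted(
        (s for s in spans if s.parent_id == parent_id),
        key=lambda s: s.start_ms,
    )
    for span in children:
        lines.append(f"{'  ' * depth}{span.name} ({span.duration_ms} ms)")
        lines.extend(render(spans, span.span_id, depth + 1))
    return lines


TRACE = "t1"  # placeholder; real trace IDs are 16 random bytes
spans = [
    Span(TRACE, "a", None, "frontend: place order", 0, 480),
    Span(TRACE, "b", "a", "order-api: create order", 10, 470),
    Span(TRACE, "c", "b", "inventory: check stock", 20, 60),
    Span(TRACE, "d", "b", "payment: charge card", 70, 400),
    Span(TRACE, "e", "b", "email: send confirmation", 410, 460),
]
print("\n".join(render(spans)))
```

In this made-up trace, the payment span accounts for 330 ms of the 480 ms request, which is the kind of observation a trace view makes obvious at a glance.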

Each of these three signals is powerful on its own, but the real advantage comes when they’re connected and correlated, especially as AI tools start playing a bigger role in speeding up debugging and root cause analysis.

Why Linking Traces, Metrics, and Logs Matters for AI-Powered Debugging

When traces, metrics, and logs are connected, AI tools can put the pieces together more easily. Instead of looking at each signal separately, these tools can match a spike in metrics with the exact trace where the problem happened. Then they pull up the related logs to show what caused it.

For example, if your metrics show a sudden increase in error rates for a payment service, the AI tool can find the trace that follows a failed payment request through your system and show the logs from that trace explaining the cause, like a “timeout connecting to payment gateway” message.

This means engineers don’t have to spend time jumping between different tools and dashboards to find answers. AI can quickly point to the likely cause, spot patterns, and suggest what to check next.
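
The correlation step itself is simple once the signals share identifiers. The sketch below walks the same path by hand: find the minute where errors spike, collect the failed traces from that minute, then pull the logs that carry those trace IDs. All data, field names, and the spike threshold are assumptions made up for this example; a real tool would query a backend and use a more careful anomaly check.

```python
from collections import Counter
from statistics import median

# Hypothetical, hand-written telemetry; a backend would query this from storage.
error_counts = {"12:00": 2, "12:01": 3, "12:02": 41, "12:03": 4}  # errors per minute
traces = [
    {"trace_id": "t-100", "minute": "12:01", "service": "payment", "error": False},
    {"trace_id": "t-101", "minute": "12:02", "service": "payment", "error": True},
    {"trace_id": "t-102", "minute": "12:02", "service": "payment", "error": True},
    {"trace_id": "t-103", "minute": "12:02", "service": "inventory", "error": False},
]
logs = [
    {"trace_id": "t-101", "message": "timeout connecting to payment gateway"},
    {"trace_id": "t-102", "message": "timeout connecting to payment gateway"},
    {"trace_id": "t-103", "message": "stock check ok"},
]


def find_spike(counts, factor=3.0):
    """Return the first minute whose count exceeds factor times the median."""
    baseline = median(counts.values())
    for minute, count in counts.items():
        if count > factor * baseline:
            return minute
    return None


def explain(minute):
    """Join metrics to traces by time, then traces to logs by trace ID."""
    failed = {t["trace_id"] for t in traces if t["minute"] == minute and t["error"]}
    messages = Counter(l["message"] for l in logs if l["trace_id"] in failed)
    return sorted(failed), messages.most_common(1)


spike = find_spike(error_counts)
failed_traces, top_message = explain(spike)
print(spike, failed_traces, top_message)
```

The join from traces to logs only works because both carry the same trace ID, which is the practical reason correlated telemetry matters.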

Now, let’s look at what OpenTelemetry is built of.

How OpenTelemetry Is Built

OpenTelemetry is made up of a few key components that work together to collect and export telemetry data. Let’s break them down.

API & SDK: The Core Building Blocks

Every language supported by OpenTelemetry has two main parts:

- The API: what you write code against

- The SDK: what handles processing, batching, and exporting

The API: One Interface, No Lock-In

This is what you use in your application code to create spans, set attributes, or define metrics. It’s designed to stay stable, so if you switch backends or upgrade your SDK later, your code doesn’t need to change.
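
The split is easier to see in miniature. In the sketch below, application code calls a small tracing interface that does nothing by default, and a recording implementation is swapped in at startup without touching the application function. Every class and function name here is hypothetical and stands in for the real thing; in the Python API the corresponding calls are `trace.get_tracer` and `start_as_current_span`.

```python
from contextlib import contextmanager


class NoOpSpan:
    """Handed out when no SDK is installed: calls succeed, nothing is recorded."""

    def set_attribute(self, key, value):
        pass


class NoOpTracer:
    @contextmanager
    def start_span(self, name):
        yield NoOpSpan()


_tracer = NoOpTracer()  # the API's default


def get_tracer():
    return _tracer


def set_tracer(tracer):
    """Called once at startup by whoever configures the SDK."""
    global _tracer
    _tracer = tracer


# --- application code: depends on the API only ---
def place_order(order_id):
    with get_tracer().start_span("place_order") as span:
        span.set_attribute("order.id", order_id)
        return f"order {order_id} placed"


# --- hypothetical SDK: swapped in without touching place_order ---
class RecordingSpan:
    def __init__(self, name):
        self.name = name
        self.attributes = {}

    def set_attribute(self, key, value):
        self.attributes[key] = value


class RecordingTracer:
    def __init__(self):
        self.finished = []

    @contextmanager
    def start_span(self, name):
        span = RecordingSpan(name)
        try:
            yield span
        finally:
            self.finished.append(span)  # a real SDK would hand this to a processor


place_order(1)           # runs fine with the no-op default
sdk = RecordingTracer()
set_tracer(sdk)
place_order(2)           # same code, now recorded
print([(s.name, s.attributes) for s in sdk.finished])
```

Because `place_order` only ever sees the interface, libraries can ship with instrumentation built in and pay almost nothing when no SDK is configured.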

The SDK: Processing, Sampling, Exporting

The SDK handles the behind-the-scenes work:

- Chooses what data to keep (sampling)

- Maintains context across services

- Batches and formats telemetry

- Sends data to your Collector or backend (such as Prometheus, Jaeger, Zipkin, or Last9), typically over OTLP

Exporters are pluggable — you can wire up different destinations without rewriting your code.
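
Here is a rough sketch of three of those responsibilities working together: a sampling decision keyed on the trace ID, a buffer that groups spans into batches, and an exporter interface that can be swapped out. The class names and the `max_batch` parameter are assumptions for illustration, and the sampling check is written in the spirit of trace-ID ratio sampling rather than copied from any SDK. Real SDKs also make the sampling decision when a span starts; it is collapsed into one step here for brevity.

```python
from typing import Protocol

TRACE_ID_MASK = (1 << 64) - 1  # compare on the low 64 bits of a 128-bit trace ID


def should_sample(trace_id: int, ratio: float) -> bool:
    """Ratio sampling keyed on the trace ID: same ID and ratio, same decision."""
    return (trace_id & TRACE_ID_MASK) < round(ratio * (1 << 64))


class Exporter(Protocol):
    def export(self, batch: list) -> None: ...


class InMemoryExporter:
    """Stand-in destination; a real exporter would send the batch over the network."""

    def __init__(self):
        self.batches = []

    def export(self, batch):
        self.batches.append(list(batch))


class MiniSdk:
    """Sample, buffer, and export spans through whichever exporter was plugged in."""

    def __init__(self, exporter: Exporter, ratio: float = 1.0, max_batch: int = 2):
        self.exporter = exporter
        self.ratio = ratio
        self.max_batch = max_batch
        self.buffer = []

    def record(self, span: dict) -> None:
        if not should_sample(span["trace_id"], self.ratio):
            return
        self.buffer.append(span)
        if len(self.buffer) >= self.max_batch:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.exporter.export(self.buffer)
            self.buffer = []


exporter = InMemoryExporter()
mini_sdk = MiniSdk(exporter, ratio=1.0, max_batch=2)
for trace_id in range(5):
    mini_sdk.record({"trace_id": trace_id, "name": "checkout"})
mini_sdk.flush()  # push the final partial batch, as an SDK does on shutdown
print([len(batch) for batch in exporter.batches])
```

Swapping `InMemoryExporter` for another class with the same `export` method changes the destination without changing anything upstream, which is the point of pluggable exporters.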

Auto-Instrumentation: Less Boilerplate, More Signal

Writing instrumentation by hand works, but it’s a slog. OpenTelemetry supports auto-instrumentation for common libraries and frameworks (like HTTP servers or DB clients), so you can get telemetry with little to no code changes. Some languages also offer agents that inject this at runtime — no need to touch your code at all.
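
Under the hood, this kind of instrumentation usually comes down to wrapping library functions at startup so that every call is timed and recorded. The sketch below shows that wrapping technique on a made-up `DbClient` class. The `instrument` helper and the shape of the recorded dictionary are hypothetical; real instrumentation packages create proper spans and follow the semantic conventions.

```python
import functools
import time


def instrument(owner, method_name, sink):
    """Replace owner.method_name with a wrapper that records one entry per call."""
    original = getattr(owner, method_name)

    @functools.wraps(original)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        error = None
        try:
            return original(*args, **kwargs)
        except Exception as exc:
            error = type(exc).__name__
            raise  # instrumentation must not swallow the caller's exception
        finally:
            sink.append({
                "name": f"{owner.__name__}.{method_name}",
                "duration_s": time.perf_counter() - start,
                "error": error,
            })

    setattr(owner, method_name, wrapper)


class DbClient:  # hypothetical third-party library class
    def query(self, sql):
        if "DROP" in sql:
            raise PermissionError("read-only connection")
        return [("row",)]


recorded = []
instrument(DbClient, "query", recorded)  # done once at startup

rows = DbClient().query("SELECT 1")      # call sites are unchanged
print(rows, recorded[0]["name"], recorded[0]["error"])
```

The call site never mentions tracing, yet each query leaves a timing record behind, including when it fails.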

A Shared Spec Across Languages

Everything — APIs, SDKs, exporters, instrumentation — follows a common spec. That means consistent behavior, whether your service is in Go, Java, Python, or something else. You get a shared language for observability across your stack, and fewer surprises when things span multiple languages.
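
One place the shared spec pays off is context propagation. By default, OpenTelemetry SDKs pass trace context between services in the W3C Trace Context `traceparent` header, so a Go service and a Python service can join the same trace. The sketch below builds and parses that header by hand. The function names are made up for illustration, and the parser is simplified: it skips some rules from the W3C spec, such as rejecting version `ff`.

```python
import re
import secrets

# version - 32 hex trace ID - 16 hex parent span ID - 2 hex flags
TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def inject(headers, trace_id, span_id, sampled=True):
    """Write the caller's context into outgoing request headers."""
    flags = "01" if sampled else "00"
    headers["traceparent"] = f"00-{trace_id}-{span_id}-{flags}"
    return headers


def extract(headers):
    """Read the caller's context from incoming headers, or None if absent or invalid."""
    match = TRACEPARENT.match(headers.get("traceparent", ""))
    if not match:
        return None
    _version, trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return {
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 1),
    }


def start_child(headers):
    """Continue the caller's trace if there is one, otherwise start a new trace."""
    parent = extract(headers)
    return {
        "trace_id": parent["trace_id"] if parent else secrets.token_hex(16),
        "span_id": secrets.token_hex(8),
        "parent_id": parent["parent_id"] if parent else None,
    }


outgoing = inject({}, "4bf92f3577b34da6a3ce929d0e0e4736", "00f067aa0ba902b7")
child = start_child(outgoing)  # what the receiving service would do
print(outgoing["traceparent"])
print(child["trace_id"] == "4bf92f3577b34da6a3ce929d0e0e4736", child["parent_id"])
```

The receiving service keeps the trace ID and records the caller’s span ID as its parent, which is how spans from different services end up in one tree.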

Note on semantic conventions: This guide uses OpenTelemetry semantic conventions as of version 1.27. Some attribute names have evolved over time (for example, http.status_code became http.response.status_code in version 1.21+). Always check the latest semantic conventions for your OpenTelemetry version to ensure compatibility.
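
If you have dashboards or queries keyed on the older names, a small translation step can help during a migration. The sketch below renames a few legacy HTTP attributes to their current equivalents. The mapping covers only three renames and is not an exhaustive list, and the `upgrade_attributes` helper is hypothetical, so check the semantic conventions for your version before relying on it.

```python
# A few renames from the HTTP semantic-convention stabilization; not exhaustive.
HTTP_RENAMES = {
    "http.method": "http.request.method",
    "http.status_code": "http.response.status_code",
    "http.url": "url.full",
}


def upgrade_attributes(attributes):
    """Return a copy with legacy keys renamed; an existing new-style key wins."""
    upgraded = {k: v for k, v in attributes.items() if k not in HTTP_RENAMES}
    for old, new in HTTP_RENAMES.items():
        if old in attributes:
            upgraded.setdefault(new, attributes[old])
    return upgraded


legacy = {"http.method": "GET", "http.status_code": 200, "service.name": "checkout"}
print(upgrade_attributes(legacy))
```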

Before you start wiring everything to a backend, there’s one more piece worth knowing: the OpenTelemetry Collector.

The OpenTelemetry Collector: Flexible and Pluggable

The Collector acts as a central hub. It takes in telemetry from different sources — not just OpenTelemetry SDKs, but also tools like Jaeger, Zipkin, or Prometheus — processes it, and sends it wherever you want: Prometheus, Last9, Jaeger, or Kafka. You don’t need to hardwire your app to any backend. The Collector handles routing, formatting, and cleanup.

Where Does It Run?

The Collector can run in different modes. In agent mode, it runs on the same machine as your app and filters or enriches data before sending it out. In gateway mode, it runs as a central service that collects telemetry from multiple apps or instances. You can also use both — collect locally, then forward centrally.

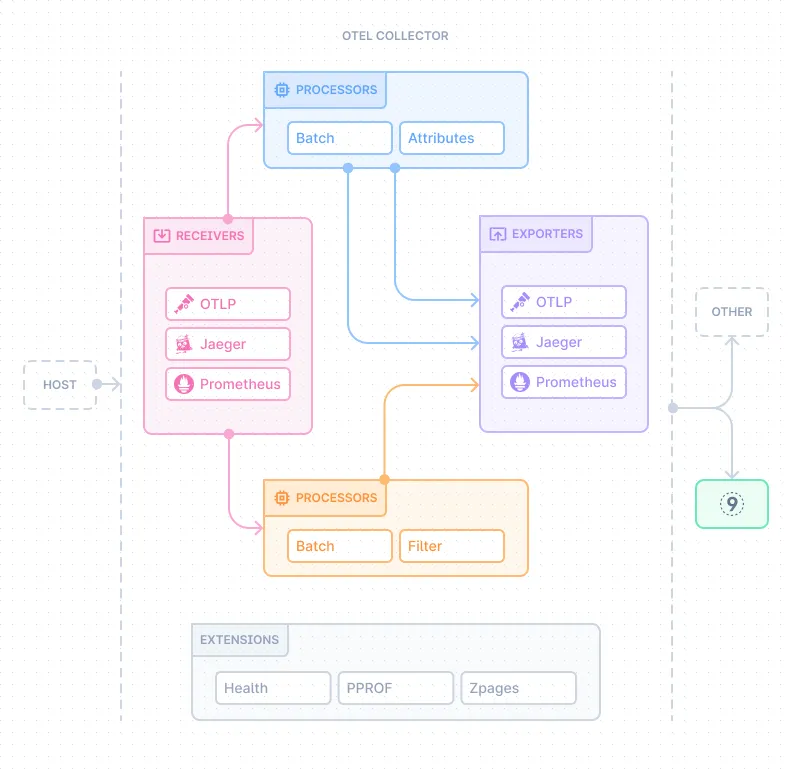

The Collector works as a modular pipeline with four key components:

- Receivers that handle incoming data from various protocols,

- Processors that modify, filter, or batch data,

- Exporters that send processed data to different backends, and

- Connectors that pass data between pipelines or generate new telemetry types.
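
A Collector configuration declares each component once and then wires components into per-signal pipelines. The sketch below mirrors that shape as a Python dictionary (the real file is YAML) and checks that every pipeline only references components that were declared. The `otlp` receiver, `batch` processor, and `otlp` and `debug` exporters are real component names; the endpoint is a placeholder, and `undefined_components` is a hypothetical helper, not part of the Collector.

```python
config = {
    "receivers": {"otlp": {"protocols": {"grpc": {}, "http": {}}}},
    "processors": {"batch": {}},
    "exporters": {
        "otlp": {"endpoint": "backend.example.com:4317"},  # placeholder endpoint
        "debug": {},
    },
    "service": {
        "pipelines": {
            "traces": {
                "receivers": ["otlp"],
                "processors": ["batch"],
                "exporters": ["otlp", "debug"],
            },
            "metrics": {
                "receivers": ["otlp"],
                "processors": ["batch"],
                "exporters": ["otlp"],
            },
        }
    },
}


def undefined_components(cfg):
    """List (pipeline, kind, name) for each pipeline entry with no matching definition."""
    connectors = set(cfg.get("connectors", {}))
    missing = []
    for pipeline, stages in cfg["service"]["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            defined = set(cfg.get(kind, {}))
            if kind != "processors":
                # A connector acts as the exporter of one pipeline
                # and the receiver of another.
                defined |= connectors
            for name in stages.get(kind, []):
                if name not in defined:
                    missing.append((pipeline, kind, name))
    return missing


print(undefined_components(config))
```

Declaring a component without adding it to a pipeline leaves it inactive, so the pipeline section is where a setup actually takes effect.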

OTLP: The OpenTelemetry Protocol That Moves the Data

With the Collector handling routing and processing, the next question is: how does telemetry data move between components?

That’s where OTLP, the OpenTelemetry Protocol, comes in.

OTLP is a vendor-neutral, tool-agnostic protocol designed specifically for telemetry. It defines how data is encoded and reliably delivered between OpenTelemetry components.

Because OTLP standardizes data movement, you’re not tied to a specific backend or vendor. If you switch tools later, you don’t have to change your instrumentation — just update your Collector configuration.

Where OTLP Is Used

You’ll typically see OTLP in two key places:

- From SDK to Collector — your application sends telemetry to a local or remote Collector using OTLP.

- From Collector to backend — the Collector forwards that data using OTLP or converts it to another supported format, depending on the backend, like Prometheus, Last9, Jaeger, or Elastic.

Since OTLP supports traces, metrics, and logs, you don’t need separate protocols for each. It simplifies your setup and keeps things consistent.
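
To give a feel for what travels over the wire, here is a sketch that builds a minimal OTLP trace payload in its JSON encoding. The nesting of resource, scope, and spans follows the OTLP data model; the service name, scope name, IDs, and timestamps are made-up values. The code only builds the request body and does not send it. With OTLP over HTTP, a client would typically POST this to the `/v1/traces` path on port 4318.

```python
import json


def kv(key, value):
    """One OTLP attribute with a string value."""
    return {"key": key, "value": {"stringValue": value}}


def build_trace_request(service_name, span):
    """Wrap one span in the resource and scope envelopes OTLP expects."""
    return {
        "resourceSpans": [{
            "resource": {"attributes": [kv("service.name", service_name)]},
            "scopeSpans": [{
                "scope": {"name": "manual-example"},  # hypothetical scope name
                "spans": [span],
            }],
        }]
    }


span = {
    "traceId": "4bf92f3577b34da6a3ce929d0e0e4736",  # hex string in the JSON encoding
    "spanId": "00f067aa0ba902b7",
    "name": "POST /checkout",
    "kind": 2,  # SPAN_KIND_SERVER
    "startTimeUnixNano": "1700000000000000000",  # 64-bit integers travel as strings
    "endTimeUnixNano": "1700000000200000000",    # 200 ms later
    "attributes": [kv("http.request.method", "POST")],
}

body = json.dumps(build_trace_request("checkout", span), indent=2)
print(body)
```

Metrics and logs use the same envelope pattern with their own top-level keys and paths, which is why one protocol can carry all three signals.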

What If You’re Using Another Receiver?

If you’re already using formats like Zipkin, Jaeger, or Prometheus, the Collector can work with those too. It supports multiple receivers, so even if your app doesn’t emit OTLP data directly, the Collector can still ingest and convert it into the OpenTelemetry data model.

Recap: How OpenTelemetry Fits Together

Here’s the TL;DR of how all the parts come together in a typical setup:

Collection

Your application emits traces, metrics, and logs using the OpenTelemetry API — either manually or through auto-instrumentation.

SDK Processing

The SDK handles sampling, batching, enrichment, and formatting to prepare data for export.

Transmission

Telemetry is sent over the wire using OTLP (via gRPC or HTTP).

Aggregation (Collector)

The OpenTelemetry Collector (running as an agent or gateway) can receive, process, filter, and route the data to one or more backends.

Analysis

Your backend tools — like Prometheus, Jaeger, or Last9 — ingest the data. You can now trace requests, graph metrics, and search logs.

How to Get Started with OpenTelemetry

Want a backend that speaks native OTLP and lets you control telemetry costs while boosting developer productivity? Start with Last9 — an OpenTelemetry-native observability platform.

You can send telemetry directly from your application using the OpenTelemetry SDKs or run the OpenTelemetry Collector. Last9 supports both OTLP over gRPC and HTTP, so you can choose what fits your stack best.

Last9 is built from the ground up for OpenTelemetry, which means:

- Unified observability: One platform for traces, metrics, and logs with automatic correlations across services, so you can jump from a metric spike to the exact trace and log without switching tools

- Full semantic fidelity: Every custom attribute you emit stays queryable. Unlike platforms that convert OTLP to proprietary formats, Last9 preserves your semantic conventions end-to-end

- High-cardinality support: Send detailed fields like user_id or transaction_id without the usual cost penalties or performance degradation

- No vendor lock-in: Your instrumentation stays portable because Last9 doesn’t transform OTLP into proprietary schemas

Simple to set up. Powerful when you need it. And built to scale cost-effectively as your telemetry grows.

Our next piece will focus on instrumentation in OpenTelemetry—how auto vs. manual instrumentation works, and when to choose one over the other.