Logs are crucial for understanding what's happening in your system, but they can often be hard to make sense of. Log parsing is the key to turning raw, unstructured data into something useful.

In this post, we'll cover the basics of log parsing, why it matters, and how it helps you extract valuable insights from your logs without the clutter.

What is Log Parsing?

Log parsing is the process of transforming raw log data into a structured, readable format that reveals important insights. Logs are generated by systems, applications, and devices to track events, errors, and performance metrics.

Without parsing, these logs remain a jumble of unstructured data that's tough to analyze. Log parsing helps you organize and interpret this data—whether you're troubleshooting issues, monitoring for security threats, or optimizing system performance.

Features of Log Parsers You Should Know

A good log parser goes beyond simple text processing—it structures raw logs into meaningful data for easier analysis.

Here are some key features to look for:

- Automation – Automatically extracts, normalizes, and categorizes log data, reducing manual effort and improving efficiency.

- Customization – Supports custom parsing rules, allowing teams to tailor log processing to specific formats and needs.

- Real-Time Processing – Enables instant log analysis, helping detect anomalies and trigger alerts without delay.

- Visualization Capabilities – Integrates with dashboards to present log data in graphs, charts, and tables for better insights.

- Multi-Format Support – Handles diverse log formats (JSON, syslog, CSV, etc.), making it adaptable across different systems.

- Filtering and Search – Allows quick querying and filtering, so teams can find relevant logs without sifting through mountains of data.

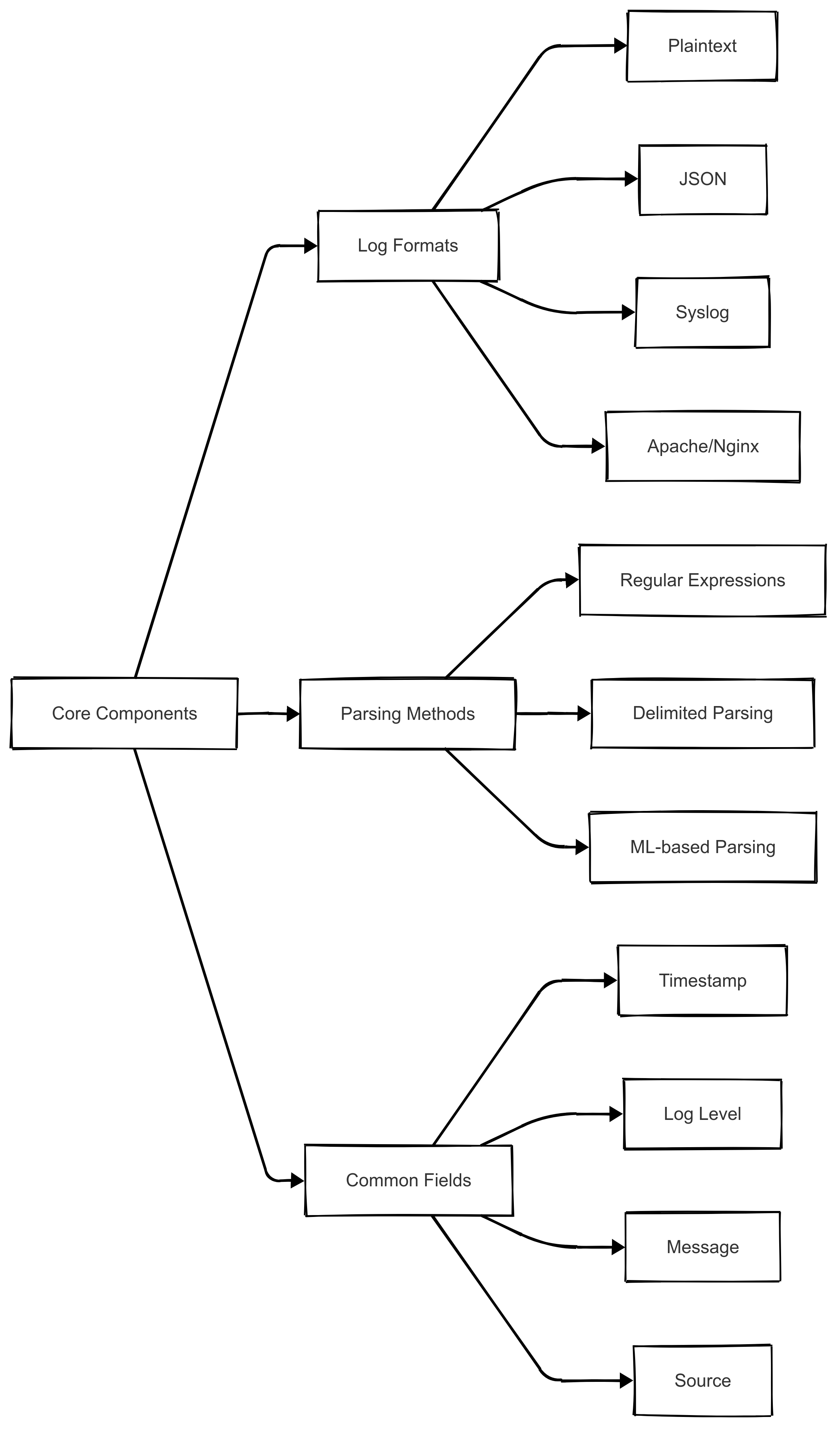

The Core Components of Log Parsing

Understanding log parsing requires familiarity with key components:

1. Log Formats

Logs can be structured, semi-structured, or unstructured. Common formats include:

- Plaintext logs – Raw, human-readable messages.

- JSON logs – Key-value structured format used in modern applications.

- Syslog – Standard format used in Unix/Linux systems.

- Apache/Nginx logs – Web server logs tracking HTTP requests.

2. Parsing Methods

- Regular Expressions (Regex): Useful for extracting patterns in unstructured logs.

- Delimited Parsing: Splitting log entries based on a known delimiter (e.g., commas, spaces, or tabs).

- Machine Learning-based Parsing: Advanced methods that detect log structures dynamically without predefined rules.
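As a rough illustration of delimited parsing, the sketch below splits comma-separated log lines into named fields with Python's standard csv module. The column order (timestamp, level, source, message) and the sample lines are assumptions made for this example, not a standard format.

```python
import csv
import io

# Hypothetical comma-delimited log lines: timestamp,level,source,message
RAW_LINES = """\
2024-02-01T12:45:00,ERROR,auth-service,"Login failed, invalid password"
2024-02-01T12:45:03,INFO,auth-service,Login succeeded
"""

# Assumed column order for this example
FIELDNAMES = ["timestamp", "level", "source", "message"]


def parse_delimited(text, delimiter=","):
    """Split each line on the delimiter and label the resulting columns."""
    reader = csv.DictReader(
        io.StringIO(text), fieldnames=FIELDNAMES, delimiter=delimiter
    )
    return list(reader)


records = parse_delimited(RAW_LINES)
for record in records:
    print(record["level"], "-", record["message"])
```

Using csv rather than a bare str.split means a quoted message that itself contains the delimiter stays in one field.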

3. Common Log Fields

Parsed logs typically contain fields such as:

- Timestamp – When the event occurred.

- Log Level – Severity (INFO, WARN, ERROR, etc.).

- Message – Description of the event.

- Source – The origin (application, service, server, etc.).
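To make these fields concrete, here is a minimal sketch that pulls the four of them out of a JSON log line. The key names (timestamp, level, message, source) and the sample event are assumptions; real applications often use different keys.

```python
import json

# Assumed key names for the four common fields
COMMON_FIELDS = ("timestamp", "level", "message", "source")


def parse_json_log(line):
    """Return the common fields from a JSON log line, or None if it is unusable."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError:
        return None
    if not isinstance(event, dict):
        return None
    # Missing keys come back as None instead of raising
    return {field: event.get(field) for field in COMMON_FIELDS}


# Hypothetical log line
sample = (
    '{"timestamp": "2024-02-01T12:45:00", "level": "ERROR", '
    '"message": "Something went wrong", "source": "checkout-api"}'
)
parsed = parse_json_log(sample)
print(parsed)
```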

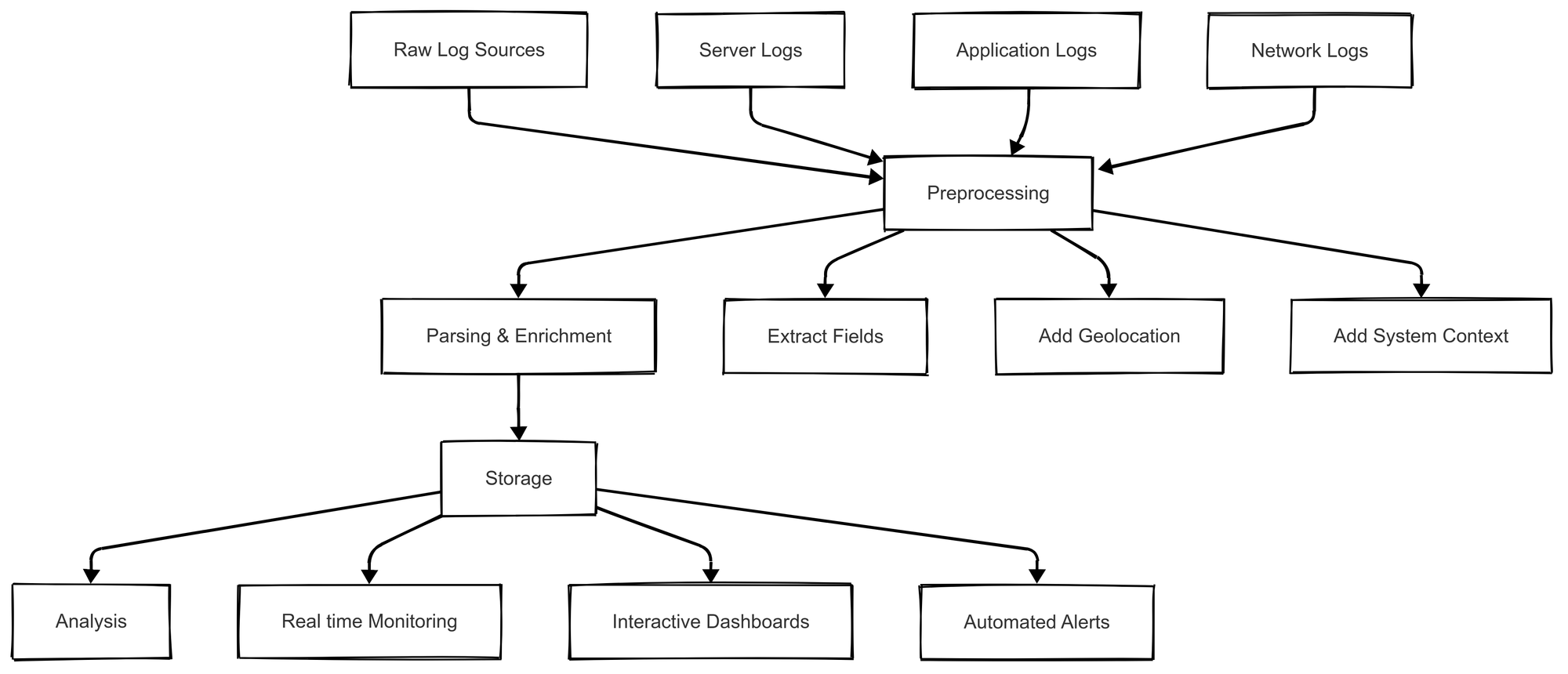

How Log Parsers Work

Log parsers transform unstructured logs into structured data, making them easier to analyze. Here’s how they operate:

- Preprocessing – Raw logs are collected from various sources (servers, applications, network devices) and normalized into a consistent format. This step may involve deduplication, timestamp correction, and log classification.

- Parsing and Enrichment – The parser extracts key fields (timestamps, error codes, user IDs) and enriches data by adding context, such as geo-location or system metadata.

- Storage and Indexing – Parsed logs are stored in databases or log management systems, often indexed for quick retrieval and searchability.

- Analysis and Visualization – Structured logs are fed into monitoring tools and dashboards, enabling real-time alerts, trend analysis, and visual insights.
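The four stages above can be sketched as a small in-memory pipeline. This is only an illustration under simplifying assumptions: the log format, the source name web-01, and the dictionary used as an "index" are all stand-ins for what a real log management system would do.

```python
import re
from collections import defaultdict

LINE_PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) (?P<message>.*)"
)


def preprocess(raw_lines):
    """Preprocessing: strip whitespace, drop blank and exact-duplicate lines."""
    seen = set()
    for line in raw_lines:
        line = line.strip()
        if line and line not in seen:
            seen.add(line)
            yield line


def parse_and_enrich(lines, source):
    """Parsing and enrichment: extract fields, attach the source as context."""
    for line in lines:
        match = LINE_PATTERN.match(line)
        if match:
            yield {**match.groupdict(), "source": source}


def index_by_level(events):
    """Storage and indexing: a tiny in-memory index of level -> events."""
    index = defaultdict(list)
    for event in events:
        index[event["level"]].append(event)
    return index


# Hypothetical raw input
raw = [
    "2024-02-01T12:45:00 ERROR Something went wrong\n",
    "2024-02-01T12:45:00 ERROR Something went wrong\n",  # duplicate
    "2024-02-01T12:45:02 INFO Request completed\n",
    "\n",
]

index = index_by_level(parse_and_enrich(preprocess(raw), source="web-01"))

# Analysis: count events per level
level_counts = {level: len(events) for level, events in index.items()}
print(level_counts)
```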

Applications of Log Parsing

Log parsing plays a crucial role in various IT environments, helping teams extract valuable insights from raw logs.

Here are some key applications:

- DevOps and SRE Support – Engineers use log parsing to troubleshoot issues, detect anomalies, and optimize system performance. Parsed logs help pinpoint failures, track deployments, and ensure smooth CI/CD operations.

- Real-Time Monitoring – Log parsing enables real-time alerting and incident response. By structuring logs, monitoring tools can detect unusual patterns and trigger alerts before minor issues escalate.

- Security and Compliance – Security teams analyze parsed logs to identify threats, track access logs, and ensure compliance with regulations like GDPR and HIPAA.

- Application Performance Monitoring (APM) – Parsed logs help diagnose slow queries, memory leaks, and API failures, improving user experience.

- Cloud and Microservices Observability – Distributed systems generate vast logs. Parsing helps correlate logs across microservices, making debugging more efficient.

Why Log Parsing Matters

1. Faster Troubleshooting

When issues arise, well-parsed logs help you quickly pinpoint the problem. Instead of sifting through thousands of lines of raw data, structured logs highlight key information.

2. Security & Compliance

Log parsing plays a crucial role in identifying unauthorized access, anomalies, and compliance violations. Many organizations must store and analyze logs to meet regulatory requirements (e.g., GDPR, HIPAA, PCI-DSS).

3. Performance Optimization

Efficiently parsed logs provide insights into system performance, helping engineers detect bottlenecks, optimize resources, and improve uptime.

3 Important Log Parsing Techniques

1. Using Command-Line Tools

If you're comfortable working in a terminal, command-line tools are a fast and effective way to parse logs. Some of the most commonly used tools include:

- grep: This tool helps you search for specific patterns or keywords in your log files. For example, if you want to find all instances of an error, you can use grep to search for words like "error" or "exception."

- awk/sed: These are powerful text-processing tools that allow you to extract specific pieces of data from logs and manipulate the text. For instance, you could use awk to pull out specific columns or sed to replace certain patterns in your logs.

- cut/sort/uniq: These tools help you filter and organize log data. cut can be used to separate log fields (such as dates or log levels), sort helps you arrange log entries in a specific order (e.g., by timestamp), and uniq collapses adjacent duplicate lines (which is why it is usually run after sort) to make logs easier to analyze.
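To show what a typical grep, cut, sort, and uniq chain does, here is roughly the same logic written in Python. It is a sketch for illustration, not a replacement for the shell tools; the log lines and their 'TIMESTAMP LEVEL MESSAGE' layout are assumed.

```python
from collections import Counter

# Hypothetical log lines in 'TIMESTAMP LEVEL MESSAGE' form
LOG_LINES = [
    "2024-02-01T12:45:00 ERROR Database timeout",
    "2024-02-01T12:45:01 INFO Request completed",
    "2024-02-01T12:45:02 ERROR Database timeout",
    "2024-02-01T12:45:03 ERROR Disk full",
]


def count_error_messages(lines):
    """Count distinct ERROR messages, most frequent first."""
    messages = []
    for line in lines:
        # cut-like step: split into timestamp, level, and the rest
        parts = line.split(" ", 2)
        # grep-like step: keep only well-formed ERROR lines
        if len(parts) == 3 and parts[1] == "ERROR":
            messages.append(parts[2])
    # sort and uniq-like step: count duplicates and order by frequency
    return Counter(messages).most_common()


error_summary = count_error_messages(LOG_LINES)
for message, count in error_summary:
    print(count, message)
```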

2. Using Log Management Tools

If you need a more robust solution, log management tools can help automate the process of collecting, parsing, and analyzing logs. These tools provide user-friendly interfaces and advanced features to make parsing easier:

- Logstash (Elastic Stack): This is a popular tool for ingesting and parsing log data. It can collect logs from various sources, parse them, and send the structured data to a search engine like Elasticsearch for easy querying and visualization. It's a great option if you're already using the Elastic Stack for monitoring or analytics.

- Fluentd: Fluentd is a lightweight alternative to Logstash that’s used for collecting and parsing logs. It’s flexible and can handle large volumes of data, making it suitable for complex environments.

- GoAccess: This tool specializes in real-time log analysis, particularly for web server logs. It’s easy to set up and can give you instant insights into web traffic, errors, and performance.

3. Custom Parsing with Python

If you need more control over how logs are parsed, or you want to handle specific use cases, you can create custom parsing solutions using a programming language like Python.

- Using Python libraries:

- re (Regex): Python’s built-in re module allows you to use regular expressions (regex) to search for and extract patterns in log files. For example, you could use regex to pull out timestamps, log levels (like "ERROR" or "INFO"), or error messages.

Here’s an example of how to use Python and re to parse a log entry:

    import re

    def parse_log(log_line):
        """
        Parses a log entry and extracts timestamp, log level, and message.

        Args:
            log_line (str): A single log entry in the format 'YYYY-MM-DDTHH:MM:SS LEVEL MESSAGE'

        Returns:
            dict: A dictionary with extracted fields (timestamp, level, message) or None if no match.
        """
        pattern = r'(?P<timestamp>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}) (?P<level>[A-Z]+) (?P<message>.*)'
        match = re.match(pattern, log_line)
        return match.groupdict() if match else None

    # Example log entry
    log_entry = "2024-02-01T12:45:00 ERROR Something went wrong"

    # Parse and print result
    print(parse_log(log_entry))

In this example, we define a function parse_log that uses a regular expression pattern to match a log line. It extracts the timestamp, log level, and message into a dictionary. If the log line doesn't match the pattern, it returns None.

- pandas: If your logs are more structured, like CSV files or JSON, you can use the third-party pandas library to load the data into a DataFrame and easily analyze or manipulate it. For example, you could parse logs into columns for date, time, error codes, and more.

Example: Let's say you have a log file where each line contains a timestamp and an error message. You could write a Python script using re to search for specific error messages and pandas to organize the results into a table for easier analysis.
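A minimal sketch of that idea follows, assuming pandas is installed and that lines use the 'YYYY-MM-DDTHH:MM:SS LEVEL MESSAGE' layout from the earlier example. The sample lines and the helper name logs_to_dataframe are made up for illustration.

```python
import re

import pandas as pd

PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) (?P<message>.*)"
)

# Hypothetical log lines, including one that does not match the pattern
LOG_LINES = [
    "2024-02-01T12:45:00 ERROR Database timeout",
    "2024-02-01T12:45:01 INFO Request completed",
    "this line is malformed",
    "2024-02-01T12:45:02 ERROR Database timeout",
]


def logs_to_dataframe(lines):
    """Parse matching lines with re and collect them into a DataFrame."""
    rows = [m.groupdict() for m in map(PATTERN.match, lines) if m]
    df = pd.DataFrame(rows, columns=["timestamp", "level", "message"])
    # Convert the timestamp strings into real datetime values
    df["timestamp"] = pd.to_datetime(df["timestamp"])
    return df


df = logs_to_dataframe(LOG_LINES)
errors = df[df["level"] == "ERROR"]
error_counts = errors["message"].value_counts()
print(error_counts)
```

Lines that do not match the pattern are silently skipped here; in practice you would probably want to count or log them so parsing gaps stay visible.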

Advanced Log Parsing Strategies

Once you're comfortable with basic log parsing, you can dive into more advanced strategies that enhance your ability to analyze and gain insights from log data.

Here are three key strategies to consider:

1. Normalization

Normalization involves standardizing log formats across multiple systems and services. Logs often come in different formats depending on the source, making it difficult to analyze them effectively.

Standardizing log data ensures that logs from various sources are consistent and can be processed efficiently. This improves searchability, integration with log management systems, and overall analysis.

- Example: One system might log timestamps as MM-DD-YYYY HH:MM:SS, while another uses YYYY-MM-DDTHH:MM:SS.

- Benefit: Normalized logs make it easier to correlate data, visualize patterns, and spot issues across different services.

Tools like Last9 or Fluentd help automate the normalization process by converting logs into a consistent format as they are collected.
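For a sense of what timestamp normalization involves, the sketch below converts the two formats from the example into a single ISO 8601 form using the standard datetime module. The list of known formats is an assumption; a real deployment would extend it and would also need to handle time zones, which this sketch ignores.

```python
from datetime import datetime

# Formats this sketch knows about; extend the tuple for other sources.
KNOWN_FORMATS = (
    "%Y-%m-%dT%H:%M:%S",  # YYYY-MM-DDTHH:MM:SS
    "%m-%d-%Y %H:%M:%S",  # MM-DD-YYYY HH:MM:SS
)


def normalize_timestamp(raw):
    """Return the timestamp as YYYY-MM-DDTHH:MM:SS, or None if unrecognized."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw, fmt).isoformat()
        except ValueError:
            continue  # try the next known format
    return None


print(normalize_timestamp("02-01-2024 12:45:00"))
print(normalize_timestamp("2024-02-01T12:45:00"))
```

Both calls print the same normalized value, which is what makes later sorting and correlation across sources straightforward.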

2. Anomaly Detection

Anomaly detection helps you identify unusual patterns in log data that could indicate security threats or system performance issues. By using statistical models or machine learning algorithms, you can automatically detect abnormalities in log entries before they escalate into bigger problems.

- Security Threats: Abnormal spikes in failed login attempts or access requests from unusual IPs.

- Performance Issues: Sudden spikes in error rates or slow response times.
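As a simple statistical example, the sketch below flags a minute whose error count is far above the average of the preceding few minutes. The window size, threshold, minimum deviation, and sample counts are all assumed values chosen for illustration; production systems usually rely on more robust models.

```python
from statistics import mean, stdev


def find_spikes(counts, window=5, threshold=3.0, min_sigma=1.0):
    """Return indexes where a count exceeds the rolling baseline.

    A point is flagged when it is more than `threshold` standard deviations
    above the mean of the previous `window` points. `min_sigma` keeps a
    perfectly flat baseline from flagging tiny changes. `window` must be
    at least 2 so a standard deviation can be computed.
    """
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        sigma = max(stdev(baseline), min_sigma)
        if counts[i] > mu + threshold * sigma:
            spikes.append(i)
    return spikes


# Hypothetical number of ERROR entries per minute
errors_per_minute = [2, 3, 2, 4, 3, 2, 40, 3]
spike_indexes = find_spikes(errors_per_minute)
print(spike_indexes)
```

Comparing each point against the preceding window, rather than against the whole series, keeps one large spike from inflating the baseline used to detect it.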

3. Correlation Across Multiple Logs

In complex systems, especially microservices architectures, logs are often spread across multiple services. Each service logs different parts of the user journey, making it hard to see the full picture.

Correlating logs from various services can help you trace an event across the entire system, making troubleshooting and performance optimization much easier.

- Example: A single user request might generate logs in several microservices, each with its own entry.

- Benefit: By correlating logs (using shared identifiers like request or session IDs), you can track the full path of the request, find failures, and pinpoint the root cause of issues.

Observability tools like Last9 can help automate this process, and pairing correlated logs with metrics from a system such as Prometheus gives you a more complete view of your system's behavior.
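The core of correlation by a shared identifier can be sketched in a few lines. The field name request_id, the service names, and the events below are assumptions for this example; the events are already parsed into dictionaries.

```python
from collections import defaultdict

# Hypothetical parsed events from three services, deliberately out of order
EVENTS = [
    {"timestamp": "2024-02-01T12:45:00", "service": "gateway",
     "request_id": "req-1", "level": "INFO", "message": "Received POST /checkout"},
    {"timestamp": "2024-02-01T12:45:01", "service": "gateway",
     "request_id": "req-2", "level": "INFO", "message": "Received GET /cart"},
    {"timestamp": "2024-02-01T12:45:02", "service": "payments",
     "request_id": "req-1", "level": "ERROR", "message": "Card processor timeout"},
    {"timestamp": "2024-02-01T12:45:01", "service": "orders",
     "request_id": "req-1", "level": "INFO", "message": "Order created"},
]


def correlate(events, key="request_id"):
    """Group events by the shared identifier and order each group by time."""
    traces = defaultdict(list)
    for event in events:
        traces[event[key]].append(event)
    for trace in traces.values():
        # ISO 8601 timestamps in one format sort correctly as plain strings
        trace.sort(key=lambda e: e["timestamp"])
    return dict(traces)


def first_error(trace):
    """Return the earliest ERROR event in a trace, or None."""
    return next((e for e in trace if e["level"] == "ERROR"), None)


traces = correlate(EVENTS)
path = [event["service"] for event in traces["req-1"]]
failure = first_error(traces["req-1"])
print(path, "failed in:", failure["service"])
```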

3 Main Challenges in Log Parsing and How to Deal with Them

Here’s a breakdown of three main obstacles you might face and how to tackle them:

1. Handling Large Log Volumes

As systems grow, so do the log volumes. Logs can quickly grow into the gigabyte or terabyte range, especially in large-scale environments. Processing and analyzing these massive logs in real time can overwhelm your system.

- Solution:

- Indexing: Use indexing to make searching and filtering logs faster. Indexing helps break logs down into searchable chunks, speeding up queries.

- Distributed Processing: Tools like Apache Kafka (for buffering and streaming log events) and Elasticsearch (for sharded indexing and search) spread the work across multiple machines, allowing you to scale and handle larger volumes.

- Efficient Storage Solutions: Utilize cost-effective storage like cloud-based log storage (e.g., AWS S3 or Google Cloud Storage) to store logs and access them only when needed.
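On a single machine, one complementary habit is to stream logs line by line instead of loading whole files into memory. The sketch below uses a generator for that; io.StringIO stands in for a large file opened with open(), and the 'TIMESTAMP LEVEL MESSAGE' layout is assumed.

```python
import io
from collections import Counter


def iter_events(stream):
    """Yield one parsed event at a time so memory use stays flat."""
    for line in stream:
        parts = line.rstrip("\n").split(" ", 2)
        if len(parts) == 3:
            yield {"timestamp": parts[0], "level": parts[1], "message": parts[2]}


def count_levels(stream):
    """Aggregate while streaming; only the counters are kept in memory."""
    counts = Counter()
    for event in iter_events(stream):
        counts[event["level"]] += 1
    return counts


# Stand-in for a large log file; a real script would pass an open file object
fake_file = io.StringIO(
    "2024-02-01T12:45:00 ERROR Database timeout\n"
    "2024-02-01T12:45:01 INFO Request completed\n"
    "2024-02-01T12:45:02 INFO Request completed\n"
)
level_counts = count_levels(fake_file)
print(level_counts)
```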

2. Dealing with Inconsistent Log Formats

Legacy systems or diverse platforms may produce logs in different formats, making it harder to parse and analyze them effectively. One service might log timestamps as DD/MM/YYYY, while another uses YYYY-MM-DDTHH:MM:SS.

- Solution:

- Standardized Formats: Adopt a standardized log format, like JSON, across all services. JSON is widely supported, easy to parse, and allows you to include structured data, making it easier to analyze.

- Log Parsers: Use tools like Last9 or Fluentd, which can handle different log formats and convert them into a unified structure.

- Pre-processing: Before ingestion, preprocess the logs to convert them into a common format, reducing inconsistencies down the line.
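A pre-processing step along these lines might look like the sketch below, which accepts either JSON or plaintext lines and emits one common structure. The alternate JSON keys (time, msg) and the plaintext layout are assumptions; each real source would need its own mapping.

```python
import json
import re

# Assumed plaintext layout: 'TIMESTAMP LEVEL MESSAGE'
PLAINTEXT = re.compile(r"(?P<timestamp>\S+) (?P<level>[A-Z]+) (?P<message>.*)")


def to_common_format(line):
    """Convert a JSON or plaintext log line into one common dict, or None."""
    line = line.strip()
    if line.startswith("{"):
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            event = None
        if isinstance(event, dict):
            return {
                # Hypothetical key aliases used by different services
                "timestamp": event.get("timestamp") or event.get("time"),
                "level": str(event.get("level", "")).upper(),
                "message": event.get("message") or event.get("msg"),
            }
    match = PLAINTEXT.match(line)
    return match.groupdict() if match else None


from_json = to_common_format(
    '{"time": "2024-02-01T12:45:00", "level": "error", "msg": "Disk full"}'
)
from_text = to_common_format("2024-02-01T12:45:05 WARN Disk usage at 91%")
print(from_json)
print(from_text)
```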

3. Balancing Performance and Accuracy

When you're parsing large logs, accuracy is important, but overly detailed parsing can slow down your system. Parsing rules that are too strict or too comprehensive can become performance bottlenecks.

- Solution:

- Optimize Parsing Rules: Focus on parsing only the most relevant data and avoid unnecessary fields that don’t add value.

- Indexing: Efficiently index your logs to ensure fast search and retrieval. Properly indexing key fields, such as timestamps or log levels, can drastically improve performance.

- Parallel Processing: Break up the parsing process into smaller tasks and process them in parallel across multiple nodes or servers to maintain high performance without compromising accuracy.
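One way to apply the "optimize parsing rules" advice is to compile the pattern once and run a cheap substring check before the regex. The sketch below assumes you only care about ERROR lines in a 'TIMESTAMP LEVEL MESSAGE' layout; whether the pre-filter actually helps depends on your data, so it is worth measuring.

```python
import re

# Compiled once at import time instead of on every call
ERROR_PATTERN = re.compile(r"(?P<timestamp>\S+) ERROR (?P<message>.*)")


def extract_errors(lines):
    """Yield parsed ERROR events, skipping irrelevant lines cheaply."""
    for line in lines:
        # Cheap substring test: most non-error lines never reach the regex
        if " ERROR " not in line:
            continue
        # The regex still decides; the pre-filter is only a hint
        match = ERROR_PATTERN.match(line)
        if match:
            yield match.groupdict()


# Hypothetical log lines
LOG_LINES = [
    "2024-02-01T12:45:00 INFO Request completed",
    "2024-02-01T12:45:01 ERROR Database timeout",
    "2024-02-01T12:45:02 INFO User typed ERROR in search box",
    "2024-02-01T12:45:03 DEBUG Cache hit",
]
error_events = list(extract_errors(LOG_LINES))
print(error_events)
```

The third line passes the substring check but is rejected by the regex, which shows why the pre-filter can trade speed without giving up accuracy.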

Tools for Log Parsing: A Comparison

| Tool | Best For | Pros | Cons |

|---|---|---|---|

| Logstash | Large-scale log ingestion | Highly extensible, integrates with Elasticsearch | Heavy resource usage |

| Fluentd | Cloud-native environments | Lightweight, flexible | Can be complex to configure |

| GoAccess | Real-time web log analysis | Fast, simple setup | Limited to web server logs |

| Python | Custom log parsing | Highly customizable | Requires coding expertise |

| Last9 | Distributed system observability | Real-time insights, simplified log aggregation and correlation | May require specific setup based on environment |

When to Use Log Parsing Tools vs. Custom Solutions

Use Pre-Built Tools When:

- Logs are structured and follow a predictable format.

- You need real-time log analysis and dashboards.

- Integration with existing monitoring tools is required.

Use Custom Parsing When:

- Log formats are highly inconsistent.

- You need tailored parsing logic beyond regex and standard tools.

- Performance and efficiency are critical in resource-constrained environments.

Best Practices for Log Parsing

To get the most out of log parsing, follow these best practices:

- Standardize Log Formats – Ensure consistency across logs by using structured formats like JSON or key-value pairs. This makes parsing easier and reduces errors.

- Filter Noisy Logs – Avoid clutter by excluding unnecessary log entries. Focus on logs that provide real value for monitoring and troubleshooting.

- Use Enrichment Wisely – Enhance logs with metadata (such as request origins or user details) to add context, but avoid excessive enrichment that bloats storage.

- Use Automation – Automate log parsing workflows to reduce manual effort and minimize the risk of human error.

- Implement Indexing and Search – Store parsed logs in a searchable format to enable quick retrieval and analysis.

- Monitor and Validate Regularly – Continuously check parsing accuracy to ensure logs are correctly structured and useful for analysis.
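For the last practice, a lightweight check is to track what fraction of lines your parser actually matches. The sketch below is one hypothetical way to do that; the pattern, the sample lines, and the idea of alerting when the rate drops are assumptions you would adapt to your own setup.

```python
import re

# Assumed layout: 'YYYY-MM-DDTHH:MM:SS LEVEL MESSAGE'
PATTERN = re.compile(
    r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2} [A-Z]+ .*"
)


def parse_success_rate(lines):
    """Return the fraction of lines the pattern matches (1.0 for no input)."""
    total = 0
    matched = 0
    for line in lines:
        total += 1
        if PATTERN.match(line):
            matched += 1
    return matched / total if total else 1.0


# Hypothetical sample with one line in an unexpected format
SAMPLE = [
    "2024-02-01T12:45:00 ERROR Database timeout",
    "2024-02-01T12:45:01 INFO Request completed",
    "02/01/2024 12:45:02 INFO Request completed",
    "2024-02-01T12:45:03 WARN Disk usage high",
]
rate = parse_success_rate(SAMPLE)
print(f"{rate:.0%} of lines parsed")
```

A sudden drop in this rate is often the first sign that a service changed its log format.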

Final Thoughts

Log parsing is all about finding the right tools and strategies for your needs—whether it’s command-line utilities, log management platforms, or custom scripts. The important part is picking what works best for you.

If you’re on the lookout for a managed observability platform to handle all your logging needs, Last9 could be a great fit. Book a demo to see it in action, or if you’d rather explore at your own pace, we’ve got a free trial for you.