In software development, making sure your apps perform well is key. Performance issues, hidden delays, and wasted resources can quickly hurt user experience and increase costs. That’s where OpenTelemetry profiling steps in to help.

In this blog, we’ll break down what OpenTelemetry profiling is, why it’s important, and how you can use it to optimize your applications.

What is OpenTelemetry Profiling?

Profiling is the newest OpenTelemetry signal, joining traces, metrics, and logs. Where those signals tell you which service or request is slow, profiles show which functions and lines of code are consuming CPU, memory, or other resources, so you can pinpoint the exact areas causing slowdowns.

What is Continuous Profiling?

Imagine being able to check your app’s performance anytime—not just when something breaks or when you're deep in debugging. Continuous profiling makes this possible.

It gives you a constant, 24/7 view of your code’s behavior by collecting profiling data—like CPU, memory, and I/O usage—over time, rather than just in isolated snapshots.
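
To make the idea of sampling over time concrete, here is a minimal sketch of a sampling profiler built only on the Python standard library. It is not how OpenTelemetry collects profiles; the function names `sample_stacks` and `busy_work` and the chosen interval are assumptions made for illustration.

```python
import collections
import sys
import threading
import time


def sample_stacks(thread_id, duration_s=0.3, interval_s=0.005):
    """Periodically record which function the target thread is executing."""
    counts = collections.Counter()
    deadline = time.monotonic() + duration_s
    while time.monotonic() < deadline:
        # sys._current_frames() maps thread IDs to their current stack frame
        frame = sys._current_frames().get(thread_id)
        if frame is not None:
            counts[frame.f_code.co_name] += 1
        time.sleep(interval_s)
    return counts


def busy_work(stop_event):
    # Placeholder workload that keeps the thread busy until asked to stop
    while not stop_event.is_set():
        sum(i * i for i in range(10_000))


stop = threading.Event()
worker = threading.Thread(target=busy_work, args=(stop,))
worker.start()
samples = sample_stacks(worker.ident)
stop.set()
worker.join()

# Functions seen most often are where the thread spent most of its time
print(samples.most_common(3))
```

A continuous profiler runs a loop like this all the time at a low sampling rate and ships the aggregated counts to a backend instead of printing them.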

Why does this matter?

Because today’s systems are complex, workloads change, and issues can pop up unexpectedly. Continuous profiling helps you track resource usage trends and spot performance bottlenecks as they develop. It’s like a time-lapse video of your app’s health, letting you rewind and figure out exactly what went wrong.

Why Profiling Matters in the Software Landscape

Software systems today are more complicated than ever.

Microservices, distributed architectures, and cloud-native apps create layers of abstraction that make it harder to troubleshoot performance problems.

OpenTelemetry profiling steps in here, filling the gaps left by traditional tools:

- Deeper Insights: See exactly how your resources are being used at a detailed level.

- Actionable Data: Pinpoint where performance bottlenecks are happening in real time.

- Cost Optimization: Save on costs by identifying inefficient code and avoiding over-provisioning.

How OpenTelemetry Profiling Works

Step 1: Instrumenting Your Application for Profiling

Before you can start profiling, you need to instrument your application. This means adding the necessary code to collect performance data.

Fortunately, OpenTelemetry SDKs and agents make the tracing, metrics, and logging side of this pretty straightforward, and a profiler can run alongside them so that profiles line up with your existing telemetry.

Example:

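    from opentelemetry import trace
    from opentelemetry.sdk.resources import Resource
    from opentelemetry.sdk.trace import TracerProvider

    # Library auto-instrumentation is usually enabled by launching the app
    # with the opentelemetry-instrument command rather than in code.

    # Register a tracer provider. "payment-service" is a placeholder name that
    # lets you correlate this service's traces with profiles collected separately.
    resource = Resource.create({"service.name": "payment-service"})
    trace.set_tracer_provider(TracerProvider(resource=resource))

Step 2: Collecting Key Performance Data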

Once your app is instrumented, profiling will start collecting data about key performance metrics like CPU usage, memory consumption, and thread activity.

This data is stored as "profiles," which you can analyze to find performance bottlenecks (like memory hogs or slow-running functions).

Example:

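    import cProfile
    import pstats

    # The opentelemetry Python packages do not ship a profiling module at the
    # time of writing, so the standard library's cProfile stands in here.
    profiler = cProfile.Profile()

    # Collect CPU timing data while the workload runs
    profiler.enable()
    total = sum(i * i for i in range(1_000_000))  # placeholder workload
    profiler.disable()

    # Print the ten most expensive functions by cumulative time
    pstats.Stats(profiler).sort_stats("cumulative").print_stats(10)

Step 3: Exporting and Analyzing Profiling Insights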

Once the profiling data is collected, it's exported to a backend system for storage and further analysis.

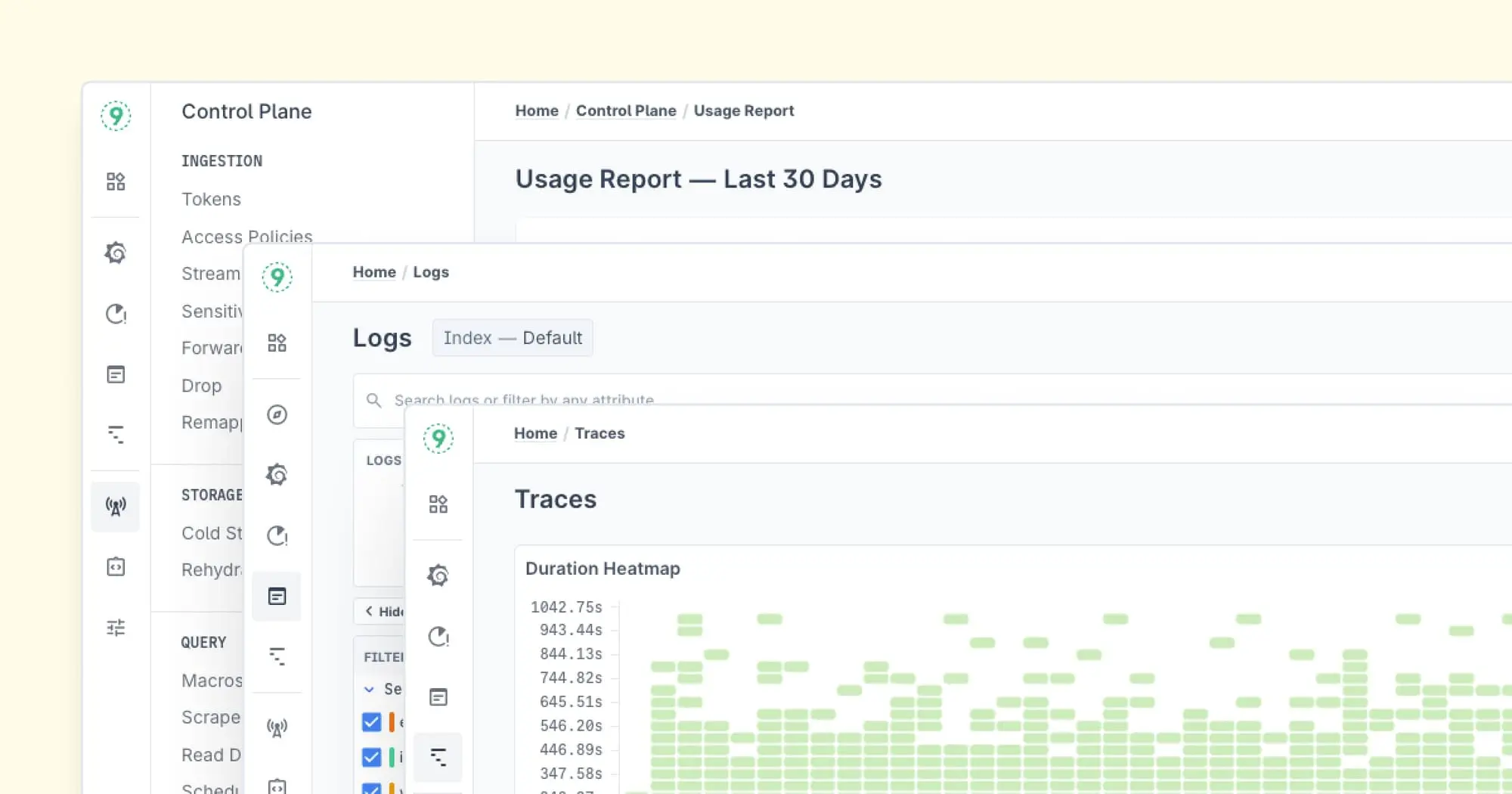

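Profile-aware backends such as Parca or Grafana Pyroscope can store and visualize this data, often as flame graphs, while tools like Prometheus and Jaeger cover the metrics and traces you view alongside it. This makes it easier for you to spot issues and gain actionable insights.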

Example:

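    from prometheus_client import start_http_server

    from opentelemetry import metrics
    from opentelemetry.exporter.prometheus import PrometheusMetricReader
    from opentelemetry.sdk.metrics import MeterProvider

    # Serve a /metrics endpoint for Prometheus to scrape (the port is an example)
    start_http_server(port=9464)

    # Prometheus pulls data through the reader, so there is no explicit export call.
    # This covers metrics; profiles need a profile-aware backend.
    metrics.set_meter_provider(MeterProvider(metric_readers=[PrometheusMetricReader()]))

What Are Different Profiling Techniques?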

Understanding your app’s performance is crucial, but how you collect the data is just as important as why you need it.

Let’s jump into two key profiling techniques that help uncover what’s going on behind the scenes:

1. Runtime Profiling

Runtime profiling gives you real-time performance data directly from your app. It’s like having a built-in performance meter that tracks things like CPU usage, memory allocation, and garbage collection.

Tools like pprof in Go or Java’s Flight Recorder hook into your application during runtime, giving you detailed insights into your code.

Key Points:

- Ideal for getting close-up insights into your app’s performance.

- Tools like pprof and Flight Recorder provide data on CPU, memory, and garbage collection.

- Can introduce overhead, so it’s best used in development, staging, or low-traffic production windows.
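
For a feel of what runtime profiling looks like from inside a process, the sketch below uses Python's built-in `tracemalloc` module, which plays a role loosely similar to the memory views in pprof or Flight Recorder. The `build_cache` function is a hypothetical allocation-heavy workload invented for this example.

```python
import tracemalloc


def build_cache(n):
    # Hypothetical allocation-heavy function
    return {i: str(i) * 10 for i in range(n)}


tracemalloc.start()
before = tracemalloc.take_snapshot()
cache = build_cache(50_000)
after = tracemalloc.take_snapshot()
tracemalloc.stop()

# Rank source lines by how much their allocated memory changed
top = after.compare_to(before, "lineno")
for stat in top[:3]:
    print(stat)
```

The first entry should point at the dictionary comprehension inside `build_cache`, since that is where nearly all of the new memory is allocated.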

2. eBPF-Based Profiling

eBPF (extended Berkeley Packet Filter) takes things up a notch by attaching performance profiling to the operating system itself. This allows you to monitor multiple processes or containers across a node without modifying your applications.

With minimal performance impact, eBPF-based tools like Parca and Pixie provide continuous profiling and deeper system-level insights.

Key Points:

- Provides a host-wide view of performance across multiple processes or containers.

- Doesn’t require changes to the applications themselves.

- Tools like Parca or Pixie offer continuous profiling with low overhead.

3 Key Challenges and How OpenTelemetry Profiling Addresses Them

1. Noise in Distributed Systems

With microservices and distributed systems, pinpointing the source of a performance issue can feel like searching for a needle in a haystack. The problem could be anywhere in the stack.

This is where profiling shines—OpenTelemetry helps narrow down the search, letting you focus on the specific service or function that's causing the issue. No more sifting through irrelevant data!

2. Overhead Concerns

Performance monitoring tools can often slow things down with their overhead. This can be especially tricky when you’re trying to monitor a high-performance system.

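OpenTelemetry profiling keeps this in check through sampling: stack traces are captured at a fixed interval instead of on every function call, so the cost scales with the sampling rate rather than with your traffic. You get the insights you need without dragging down performance.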
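
A rough back-of-the-envelope estimate shows why sampling stays cheap. The numbers below (20 samples per second, 100 microseconds per sample) are illustrative assumptions, not measurements of any particular profiler.

```python
def sampling_overhead(sample_hz: float, cost_per_sample_us: float) -> float:
    """Return the fraction of one CPU core spent taking samples."""
    return sample_hz * cost_per_sample_us / 1_000_000


# Illustrative inputs only; measure your own profiler before relying on them
overhead = sampling_overhead(sample_hz=20, cost_per_sample_us=100)
print(f"{overhead:.2%} of one core")
```

With these assumed inputs the estimate comes to 0.2% of one core. Doubling the sampling rate doubles the cost, which is the main knob you trade against detail.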

3. Integration with Existing Tools

One of OpenTelemetry’s biggest strengths is its open standard. This ensures that it works well with a wide range of existing observability tools—whether it’s Prometheus, Jaeger, or custom backends.

This makes it much easier to integrate profiling into your current monitoring stack without needing to completely overhaul your setup.

How to Choose the Right Tool for the Job

Choosing the right profiling tool depends on the level of detail you need. Runtime profiling is perfect when you want to dive deep into a specific application’s performance. It’s great for focusing on individual code-level issues.

On the other hand, eBPF-based profiling offers a broad, system-wide view, giving you insights into everything happening across your host or containers.

The two techniques complement each other well. Use runtime profiling for pinpointing specific app performance issues, and eBPF when you need to look at the bigger picture, including system-wide resource allocation.

How Do You Turn Profiling Data into Real Performance Gains?

Collecting profiling data is just the first step. The real magic happens when you turn that data into actionable insights.

Profiling gives you raw metrics—things like CPU time, memory usage, and disk I/O—but it’s the patterns and context within that data that make a difference when optimizing performance.

What Patterns and Bottlenecks Should You Watch For?

When you look at profiling data over time, you start to spot trends and potential bottlenecks. Here are some examples:

- CPU Spikes: Did a particular function start eating up more CPU after a recent update?

- Memory Leaks: Is memory usage steadily growing, hinting at a problem with object lifecycles?

By pairing profiling data with other telemetry like logs or traces, you can figure out the who, what, and when of performance issues, making troubleshooting a lot easier.
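
The CPU spike question above can be answered by comparing two profiles. The sketch below assumes each profile has already been reduced to sample counts per function; the function names and counts are hypothetical.

```python
def cpu_share(profile):
    """Convert raw sample counts into each function's share of all samples."""
    total = sum(profile.values())
    return {name: count / total for name, count in profile.items()}


def biggest_regressions(before, after, min_delta=0.05):
    """List functions whose CPU share grew by at least min_delta."""
    old, new = cpu_share(before), cpu_share(after)
    deltas = {name: new[name] - old.get(name, 0.0) for name in new}
    grown = [(name, delta) for name, delta in deltas.items() if delta >= min_delta]
    return sorted(grown, key=lambda item: item[1], reverse=True)


# Hypothetical sample counts per function, before and after a release
before_release = {"parse_request": 300, "validate_card": 200, "write_audit_log": 500}
after_release = {"parse_request": 300, "validate_card": 600, "write_audit_log": 500}

regressions = biggest_regressions(before_release, after_release)
for name, delta in regressions:
    print(f"{name}: +{delta:.1%} of CPU samples")
```

Comparing shares rather than raw counts keeps the result meaningful when the two profiles cover different amounts of traffic.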

Performance Issues You Should Fix First

Not all inefficiencies need to be fixed immediately. Profiling helps you identify which issues are worth optimizing.

Flame graphs, for example, show you exactly which functions are consuming the most resources, helping you prioritize the ones that’ll have the biggest impact.
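
Under the hood, a flame graph is built from aggregated stack samples. The sketch below folds a handful of hypothetical samples into the semicolon-separated "folded stack" text format that common flame graph tools accept, and ranks functions by self time.

```python
from collections import Counter

# Hypothetical stack samples, outermost frame first
samples = [
    ("main", "handle_payment", "encrypt_payload"),
    ("main", "handle_payment", "encrypt_payload"),
    ("main", "handle_payment", "query_ledger"),
    ("main", "render_receipt"),
]

# Folded format: frames joined by ';' followed by the sample count
folded = Counter(";".join(stack) for stack in samples)
folded_lines = [f"{stack} {count}" for stack, count in sorted(folded.items())]
print("\n".join(folded_lines))

# Self time: samples where the function was the innermost frame
self_time = Counter(stack[-1] for stack in samples)
hottest, hits = self_time.most_common(1)[0]
print(f"hottest function: {hottest} ({hits} of {len(samples)} samples)")
```

In this made-up data `encrypt_payload` would be the widest box at the top of the graph, which makes it the first candidate for optimization.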

How Can Profiling Help You Continuously Improve?

When profiling data feeds into your observability platform, it creates a continuous feedback loop. This helps your team monitor the effectiveness of optimizations, catch regressions early, and get a better understanding of how the app performs in real-world conditions.

It’s not just about fixing problems—it’s about making smarter, data-driven decisions. Profiling is more than just a troubleshooting tool; it enables proactive performance improvements.

Use Case: How Can OpenTelemetry Profiling Optimize a Payment Gateway?

A payment gateway is a critical component of online transactions, responsible for securely processing payments between customers, merchants, and banks. Given the high volume of transactions—sometimes thousands per second—any inefficiency in the system can lead to slow processing, transaction failures, or increased operational costs. OpenTelemetry profiling provides deep insights into system performance, helping engineering teams identify and resolve these inefficiencies.

Key Challenges in Payment Gateway Performance

- High Latency Due to Inefficient Database Queries

  - Payment gateways rely on multiple database interactions to validate transactions, check fraud indicators, and store records.

  - Poorly optimized SQL queries, missing indexes, or redundant calls can cause high latency, delaying transaction approvals.

  - How OpenTelemetry Profiling Helps:

    - Shows how much time the application spends in database calls, helping engineers pinpoint slow queries.

    - Highlights code paths that issue redundant or unusually slow queries, which often point to inefficient table scans.

    - Guides optimizations like caching, indexing, and query restructuring to reduce latency.

- CPU-Intensive Encryption Algorithms Affecting Performance

  - Payment transactions require secure encryption for data protection (e.g., AES, RSA, TLS encryption).

  - Some encryption algorithms are CPU-intensive, leading to slow transaction processing under heavy loads.

  - How OpenTelemetry Profiling Helps:

    - Identifies CPU bottlenecks caused by encryption tasks.

    - Points toward alternatives such as hardware acceleration (e.g., AES-NI instructions) or more efficient algorithms.

    - Helps balance encryption workloads across multiple processors for better efficiency.

- High Resource Consumption Increasing Operational Costs

  - Payment gateways operate in cloud or on-premises environments where auto-scaling costs are tied to resource usage.

  - Suboptimal resource allocation leads either to over-provisioning and higher infrastructure costs or to under-provisioning and transaction delays.

  - How OpenTelemetry Profiling Helps:

    - Tracks resource usage continuously, identifying inefficient resource consumption.

    - Helps optimize thread and memory management, reducing CPU and RAM overhead.

    - Informs autoscaling strategies that allocate resources dynamically based on demand.
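
The encryption bottleneck described above can be reproduced in miniature. This sketch uses the standard library's `cProfile` rather than an OpenTelemetry profiler, and `hashlib.pbkdf2_hmac` stands in for CPU-heavy cryptographic work; `process_payment`, `validate_fields`, and `derive_key` are hypothetical names, and the card number is a dummy value.

```python
import cProfile
import hashlib
import pstats


def validate_fields(payment):
    # Cheap structural check
    return payment["amount"] > 0 and len(payment["card"]) == 16


def derive_key(payment):
    # Stand-in for CPU-heavy cryptographic work
    return hashlib.pbkdf2_hmac("sha256", payment["card"].encode(), b"demo-salt", 200_000)


def process_payment(payment):
    if not validate_fields(payment):
        raise ValueError("invalid payment")
    return derive_key(payment)


profiler = cProfile.Profile()
profiler.enable()
process_payment({"amount": 42, "card": "4" * 16})
profiler.disable()

stats = pstats.Stats(profiler)
# Keys are (file, line, function name); index 2 of each value is the time
# spent inside the function itself, excluding the functions it calls
hottest = max(stats.stats.items(), key=lambda item: item[1][2])
hottest_name = hottest[0][2]
print(hottest_name)
```

The profile should attribute almost all of the time to the key derivation call and very little to validation, which is the kind of evidence that justifies looking at hardware acceleration or a different algorithm.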

The Result

Using OpenTelemetry profiling, engineering teams can:

- Reduce transaction latency, ensuring smoother payments.

- Optimize resource usage, cutting down operational expenses.

- Keep strong encryption and compliance controls in place without sacrificing performance.

Ultimately, a well-optimized payment gateway ensures a faster, more reliable payment experience for users, leading to increased customer satisfaction and trust.

Best Practices for OpenTelemetry Profiling

1. Start with Key Services

It’s tempting to profile everything, but that can quickly become overwhelming and resource-heavy. Instead, focus on the services that are most critical to your app’s performance. Prioritizing the high-impact areas first can make the biggest improvements without getting lost in the details.

2. Monitor Regularly

Profiling isn’t something you set and forget. To stay on top of performance issues as your app evolves, make regular monitoring a habit. This helps you spot problems before they become bigger headaches.

3. Combine with Tracing and Metrics

Profiling is powerful, but it works best when paired with tracing and metrics. Rather than treating profiling as a separate tool, use it alongside other observability data for a complete picture of how your system is performing. Together, they give you the full story—so you can tackle issues more effectively.
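
One way to picture the combination is to tag each profile sample with the ID of the trace span that was active when it was taken. The sketch below uses plain Python data structures with made-up span IDs, durations, and function names; it does not use any OpenTelemetry API.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProfileSample:
    span_id: str   # ID of the trace span active when the sample was taken
    function: str  # Innermost function on the sampled stack


# Hypothetical data: span durations from tracing, samples from a profiler
span_durations_ms = {"span-a": 40, "span-b": 900}
samples = [
    ProfileSample("span-a", "parse_request"),
    ProfileSample("span-b", "encrypt_payload"),
    ProfileSample("span-b", "encrypt_payload"),
    ProfileSample("span-b", "query_ledger"),
]

# Group samples by the span they belong to
by_span = defaultdict(Counter)
for sample in samples:
    by_span[sample.span_id][sample.function] += 1

# Start from the slowest span, then ask the profile what it was doing
slowest_span = max(span_durations_ms, key=span_durations_ms.get)
top_function, _ = by_span[slowest_span].most_common(1)[0]
print(f"{slowest_span} spent most of its samples in {top_function}")
```

Tracing answers which request was slow, and the profile samples attached to that request answer which code made it slow.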

Community and Development Resources

OpenTelemetry is a movement powered by a growing group of developers, contributors, and organizations.

In recent years, it’s taken off, and with the introduction of profiling, we can see how the community is always pushing for better, more accessible ways to understand complex systems.

The Power of Collaboration

What makes OpenTelemetry stand out is the people behind it. Developers from all over the world are constantly contributing code, offering feedback, and sharing their experiences.

Take the profiling features, for example—they’re a direct result of the community listening to real-world needs. Now, teams can track CPU and memory usage alongside the other telemetry data, making the tool even more useful.

A Focus on Interoperability

One thing OpenTelemetry nails is working well with other tools. Its profiling capabilities are designed to fit right in with your existing setup—whether that’s traces, metrics, or logs.

It also plays nice with platforms like Grafana, Jaeger, and others, so if you’re already using them, adding OpenTelemetry to the mix is simple.

Ongoing Development

OpenTelemetry’s profiling is still a work in progress, and that’s a good thing. The team is constantly making it better—improving data collection, supporting more languages, and tackling any issues the community brings up.

The focus isn’t just on adding features, but on making sure those features are practical, lightweight, and easy to use.

Conclusion

OpenTelemetry profiling gives you a clearer picture of how your app is performing. It’s not just about fixing problems—it’s about understanding what’s going on, improving over time, and making sure your app stays reliable and efficient.

Start small, get it into your workflow, and see how it helps you work smarter.