Effective microservices logging requires standardized formats, centralized storage, correlation IDs, and strong security measures. This guide walks you through setup strategies, best practices, and tool recommendations that won't break your budget.

Why Traditional Logging Fails in Microservices Environments

You've split your monolith into microservices—congrats on the upgrade. But now instead of checking one log file, you're hunting through dozens. Sound familiar?

Microservices logging isn't just about scaling your old logging practices. It's a whole new game with its own rules.

In a traditional app, logs live in one place. With microservices, they're scattered across multiple services, containers, and sometimes even data centers. What used to be a simple grep command now feels like solving a mystery without most of the clues.

How to Create a Robust Microservices Logging Strategy in 5 Steps

Before you start implementing, you need a plan. Here's how to build one:

Identify Critical Logging Objectives for Your Architecture

What are you trying to achieve with your logs? Common goals include:

- Troubleshooting issues

- Monitoring system health

- Tracking user journeys

- Meeting compliance requirements

Each goal affects what you log and how you structure it. For troubleshooting, you need detailed error information. For user journeys, you need consistent IDs to track requests across services.

Select the Right Centralized Logging Platform for Your Scale

You need a central place where all logs come together. Options include:

| Solution Type | Pros | Cons |
|---|---|---|
| Self-hosted (like ELK Stack) | Full control, customizable | Requires maintenance, scaling challenges |
| Cloud-native (like AWS CloudWatch) | Integrates with cloud services | Potential vendor lock-in |
| Managed services (like Last9) | Low maintenance, built-in analytics | Monthly subscription costs |

At Last9, we’ve built our platform to handle high-cardinality data without the usual performance trade-offs. We bring metrics, logs, and traces together in one place—exactly what’s needed for debugging and operating modern microservices.

Our platform has supported massive scale, including 11 of the 20 largest livestreaming events in history. So whether you're running a growing startup or a sprawling enterprise setup, we're built to keep up.

How to Transform Your Logs with Structured Data Formats

For microservices, you need structured logging.

Convert Plain Text to JSON for Powerful Search Capabilities

JSON logs give you searchable, parsable data instead of text blobs:

Instead of this:

```text
User logged in at 2025-05-19 10:23:45
```

Do this:

```json
{
  "event": "user_login",
  "timestamp": "2025-05-19T10:23:45Z",
  "user_id": "usr_123456",
  "service": "auth-service"
}
```

This structure lets you filter, search, and analyze without complex regex patterns.
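To make the "no regex" point concrete, here is a minimal sketch in plain Node.js (no libraries) that reads newline-delimited JSON log lines and counts events per service by reading fields directly. The sample lines and the `countEvents` helper are hypothetical.

```javascript
// Hypothetical sample: one JSON object per line (newline-delimited JSON)
const rawLogs = [
  '{"event":"user_login","timestamp":"2025-05-19T10:23:45Z","user_id":"usr_123456","service":"auth-service"}',
  '{"event":"user_login","timestamp":"2025-05-19T10:24:02Z","user_id":"usr_789012","service":"auth-service"}',
  '{"event":"cart_updated","timestamp":"2025-05-19T10:24:10Z","user_id":"usr_123456","service":"cart-service"}',
].join('\n');

// Count entries per "service:event" by reading fields instead of matching text
function countEvents(ndjson) {
  const counts = {};
  for (const line of ndjson.split('\n')) {
    if (!line.trim()) continue; // skip blank lines
    const entry = JSON.parse(line);
    const key = `${entry.service}:${entry.event}`;
    counts[key] = (counts[key] || 0) + 1;
  }
  return counts;
}

console.log(JSON.stringify(countEvents(rawLogs)));
// prints {"auth-service:user_login":2,"cart-service:cart_updated":1}
```

With plain-text lines, the same question would need a regex per message format, and each format change would break it.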

Add These 6 Essential Context Fields to Every Log

Every log entry should contain:

- Timestamp (in ISO 8601 format with timezone)

- Service name

- Log level (INFO, WARN, ERROR, etc.)

- Request ID (for tracking across services)

- User ID (when applicable)

- Container/instance ID

This context helps you trace issues across your distributed system.
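One way to make those fields non-optional is a small shared helper that every service calls. This is a minimal sketch using only Node built-ins; `createContextLogger`, its options, and the use of `os.hostname()` as the instance ID are assumptions (inside a Kubernetes pod the hostname is usually the pod name).

```javascript
const os = require('os');

const LEVELS = ['DEBUG', 'INFO', 'WARN', 'ERROR'];

// Hypothetical helper: every entry it writes carries the six context fields
function createContextLogger({ service, write = (line) => process.stdout.write(line + '\n') }) {
  const instanceId = os.hostname(); // container/instance ID

  return function log(level, message, { requestId, userId, ...extra } = {}) {
    if (!LEVELS.includes(level)) throw new Error(`Unknown log level: ${level}`);
    const entry = {
      ...extra, // caller fields first, so they cannot overwrite the context fields below
      timestamp: new Date().toISOString(), // ISO 8601 in UTC ("Z" suffix)
      service,
      level,
      request_id: requestId,
      user_id: userId, // omitted from the JSON output when undefined
      instance_id: instanceId,
      message,
    };
    write(JSON.stringify(entry));
    return entry;
  };
}

// Usage
const log = createContextLogger({ service: 'auth-service' });
log('INFO', 'User logged in', { requestId: 'req-42', userId: 'usr_123456' });
```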

Request Tracing with Correlation IDs Across Services

The secret weapon of microservices logging is correlation IDs (closely related to the trace IDs used in distributed tracing).

The Mechanics Behind Effective Correlation ID Systems

When a request first hits your system, generate a unique ID. Then pass this ID to every service that handles the request. Include it in all log entries related to that request.

Later, when you need to debug an issue, you can search for this ID and see the complete journey of a request across all your services.
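The "generate once, then reuse" rule fits in a few lines. This sketch uses Node's built-in `crypto.randomUUID()` (Node 14.17+); the `getCorrelationId` name is hypothetical, and the validation pattern is an added assumption that keeps clients from injecting arbitrary text into your logs through the header.

```javascript
const { randomUUID } = require('crypto');

// Assumed policy: accept only reasonably sized IDs made of safe characters
const VALID_ID = /^[A-Za-z0-9._-]{8,128}$/;

// Reuse the incoming correlation ID if present and well-formed; otherwise start a new one.
// Node lowercases incoming HTTP header names, so the lookup key is lowercase.
function getCorrelationId(headers) {
  const incoming = headers['x-correlation-id'];
  if (typeof incoming === 'string' && VALID_ID.test(incoming)) {
    return incoming; // downstream hop: keep the caller's ID
  }
  return randomUUID(); // entry point of the system: generate a new ID
}

console.log(getCorrelationId({ 'x-correlation-id': '550e8400-e29b-41d4-a716-446655440000' }));
// prints 550e8400-e29b-41d4-a716-446655440000
console.log(getCorrelationId({})); // prints a freshly generated UUID
```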

Add Correlation IDs to HTTP Headers and Message Queues

In HTTP-based communications, pass the ID in a header:

```http
X-Correlation-ID: 550e8400-e29b-41d4-a716-446655440000
```

For message queues, include it in the message metadata.
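Propagation means copying the same ID onto every outgoing call. A minimal sketch with two hypothetical helpers, `withCorrelationHeaders` and `buildMessage`; the message envelope (`headers` plus `body`) is an assumed shape, so map it onto your queue client's own metadata field (message attributes, record headers, and so on).

```javascript
// For HTTP: merge the ID into the headers of an outgoing request.
// HTTP header names are case-insensitive; lowercase matches what Node reports on receipt.
function withCorrelationHeaders(correlationId, headers = {}) {
  return { ...headers, 'x-correlation-id': correlationId };
}

// For queues: carry the ID in message metadata, not inside the business payload.
// Message header keys may be case-sensitive, so use one spelling everywhere.
function buildMessage(correlationId, payload) {
  return {
    headers: { 'x-correlation-id': correlationId },
    body: JSON.stringify(payload),
  };
}

const id = '550e8400-e29b-41d4-a716-446655440000';
// e.g. pass httpHeaders to fetch() or http.request() for a downstream call
const httpHeaders = withCorrelationHeaders(id, { 'content-type': 'application/json' });
const message = buildMessage(id, { orderId: 'ord_1001' });
console.log(httpHeaders['x-correlation-id'] === message.headers['x-correlation-id']); // true
```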

Most logging frameworks support adding these IDs automatically, so you don't have to modify every logging statement in your code.
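In Node.js, one way to get that automatic behavior without a framework is `AsyncLocalStorage` from the built-in `async_hooks` module: store the ID once per request and let the logging function read it. A minimal sketch; `withCorrelationId`, `log`, and `handleOrder` are hypothetical names.

```javascript
const { AsyncLocalStorage } = require('async_hooks');

const requestContext = new AsyncLocalStorage();

// Run one unit of work (e.g., one HTTP request) with its correlation ID in context
function withCorrelationId(correlationId, fn) {
  return requestContext.run({ correlationId }, fn);
}

// Every log call picks up the ID from the current async context
function log(level, message, fields = {}) {
  const store = requestContext.getStore();
  const entry = { level, message, ...fields, correlation_id: store?.correlationId };
  console.log(JSON.stringify(entry));
  return entry;
}

// The ID survives timers and await boundaries without being passed as an argument
async function handleOrder() {
  log('INFO', 'Order received');
  await new Promise((resolve) => setTimeout(resolve, 10));
  return log('INFO', 'Order stored');
}

withCorrelationId('req-abc-123', handleOrder).then((entry) => {
  console.log(entry.correlation_id); // prints req-abc-123
});
```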

Optimize Log Levels for Maximum Troubleshooting Value

Not all logs are created equal. Use log levels strategically:
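Levels are ordered by severity: a service configured at a given threshold emits that level and every more severe one, and drops the rest. A minimal sketch of that check; the numeric values and the `LOG_LEVEL` environment variable are assumptions for illustration.

```javascript
// Higher number = more severe; the values are arbitrary, only their order matters
const SEVERITY = { DEBUG: 10, INFO: 20, WARN: 30, ERROR: 40 };
const isLevel = (name) => Object.prototype.hasOwnProperty.call(SEVERITY, name);

// Emit an entry only if its level is at or above the configured threshold.
// Reading the threshold from LOG_LEVEL is an assumption; default is INFO.
function shouldLog(level, threshold = process.env.LOG_LEVEL || 'INFO') {
  if (!isLevel(level) || !isLevel(threshold)) {
    throw new Error(`Unknown level "${level}" or threshold "${threshold}"`);
  }
  return SEVERITY[level] >= SEVERITY[threshold];
}

console.log(shouldLog('DEBUG', 'INFO'));  // false: filtered out at an INFO threshold
console.log(shouldLog('ERROR', 'INFO'));  // true
console.log(shouldLog('DEBUG', 'DEBUG')); // true: lower the threshold to troubleshoot
```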

ERROR

Use for exceptions and failures that need attention. These should trigger alerts.

```javascript
logger.error("Payment processing failed", {
  error: err.message,
  order_id: order.id,
  payment_provider: "stripe"
});
```

WARN

Use for unusual but non-critical issues that might need investigation later.

```javascript
logger.warn("API rate limit at 80%", {
  service: "recommendation-engine",
  current_rate: 480,
  limit: 600
});
```

INFO

Use for normal operational events that show what's happening.

```javascript
logger.info("User checkout completed", {
  user_id: user.id,
  cart_value: order.total,
  items_count: order.items.length
});
```

DEBUG

Use for detailed information helpful during development or deep troubleshooting.

```javascript
logger.debug("Cache miss for product data", {
  product_id: product.id,
  cache_key: cacheKey,
  fetch_time_ms: timing
});
```

Construct an End-to-End Logging Pipeline for Microservices

A solid microservices logging setup needs a pipeline to collect, process, and store logs.

Collection

Each service should:

- Generate structured logs

- Write to stdout/stderr (for containerized apps)

- Include correlation IDs

Aggregation

Use agents like Fluentd, Logstash, or Vector to:

- Collect logs from all services

- Add metadata (hostname, container info, etc.)

- Buffer logs to handle spikes
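Agents such as Fluentd, Logstash, and Vector do enrichment and buffering for you, so in practice you configure them rather than write this. The idea is still simple enough to sketch: a bounded in-memory buffer that adds host metadata, sends entries in batches, and drops the oldest entries under sustained overload instead of exhausting memory. `LogBuffer`, its options, and the `send` callback are hypothetical.

```javascript
const os = require('os');

class LogBuffer {
  // send: async function that receives an array of entries (e.g., a POST to your platform)
  constructor({ send, batchSize = 100, maxEntries = 10000 }) {
    this.send = send;
    this.batchSize = batchSize;
    this.maxEntries = maxEntries;
    this.entries = [];
    this.dropped = 0;
  }

  add(entry) {
    // Enrich with metadata the service itself may not add
    this.entries.push({ ...entry, hostname: os.hostname() });
    // During a spike, keep memory bounded by discarding the oldest entries
    while (this.entries.length > this.maxEntries) {
      this.entries.shift();
      this.dropped += 1;
    }
  }

  // Send everything in batches; on failure, put the unsent batch back at the front
  async flush() {
    while (this.entries.length > 0) {
      const batch = this.entries.splice(0, this.batchSize);
      try {
        await this.send(batch);
      } catch (err) {
        this.entries.unshift(...batch);
        throw err;
      }
    }
  }
}

// Usage with a stand-in sender that only records batch sizes
const sent = [];
const buffer = new LogBuffer({ send: async (batch) => { sent.push(batch.length); }, batchSize: 2, maxEntries: 5 });
for (let i = 0; i < 7; i++) buffer.add({ level: 'INFO', message: `event ${i}` });
buffer.flush().then(() => console.log(sent, buffer.dropped)); // prints [ 2, 2, 1 ] 2
```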

Storage and Analysis

Send logs to your central platform for:

- Indexing and search

- Visualization

- Alerting on patterns

Last9 excels here by correlating your metrics, logs, and traces in one place. We give you the full picture when troubleshooting, not just isolated data points.

4 Critical Microservices Logging Mistakes and How to Avoid Them

Learn from others' mistakes to save yourself some headaches:

Inconsistent Formats

When each team uses different logging formats, searching across services becomes nearly impossible. Create logging standards and shared libraries to ensure consistency.

Logging Too Much (or Too Little)

Too much data drowns out important signals and costs more. Too little leaves you in the dark when problems happen. Find the right balance and adjust based on experience.

Missing Correlation IDs

Without correlation IDs, tracing requests across services is like finding a needle in a haystack while blindfolded. Make them non-negotiable in your architecture.

Logging Security Best Practices

Security isn't optional when it comes to microservices logging. With services communicating across networks, logs containing sensitive data can become a security liability if not properly protected.

Encrypt Log Data at Rest and in Transit

- Use TLS/HTTPS for all log transfers between services and your central logging platform

- Encrypt stored logs using industry-standard encryption methods

- Rotate encryption keys regularly, following security best practices

Implement Strong Access Controls

- Restrict log access based on role and need-to-know principles

- Use multi-factor authentication for access to logging systems

- Create audit trails of who accesses log data and when

Sanitize Sensitive Data Before Logging

Create a clear policy about what should never appear in logs:

- Passwords and authentication tokens

- Credit card numbers and financial details

- Personally identifiable information (PII) like social security numbers

- Session tokens or cookies

- API keys and encryption keys

Use automated tools to redact or mask sensitive data that might slip through. Many logging libraries and log shippers offer redaction features, typically configured with field names or regex patterns that match sensitive data formats.
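A minimal sketch of that safety net: replace values under known-sensitive keys, then mask card-number-shaped digit runs (confirmed with a Luhn check) and bearer tokens inside remaining strings. The key list and both patterns are illustrative assumptions, not a complete PII detector; roughly 1 in 10 random digit strings pass a Luhn check, so some false positives remain.

```javascript
// Keys whose values are always replaced (matched case-insensitively); extend for your data
const SENSITIVE_KEYS = new Set([
  'password', 'token', 'access_token', 'authorization', 'cookie',
  'api_key', 'apikey', 'secret', 'ssn', 'card_number',
]);

// 13-19 digits, optionally separated by spaces or dashes (card-number shaped)
const CARD_LIKE = /\b\d(?:[ -]?\d){12,18}\b/g;
// "Bearer <token>", as in Authorization headers pasted into messages
const BEARER = /\bBearer\s+[A-Za-z0-9._~+\/-]+=*/g;

// Luhn checksum, so long IDs and timestamps are mostly left alone
function passesLuhn(digits) {
  let sum = 0;
  let double = false;
  for (let i = digits.length - 1; i >= 0; i--) {
    let d = Number(digits[i]);
    if (double) {
      d *= 2;
      if (d > 9) d -= 9;
    }
    sum += d;
    double = !double;
  }
  return sum % 10 === 0;
}

function redactString(text) {
  return text
    .replace(CARD_LIKE, (match) => (passesLuhn(match.replace(/\D/g, '')) ? '[REDACTED_CARD]' : match))
    .replace(BEARER, 'Bearer [REDACTED]');
}

// Return a redacted deep copy of a log entry before it is written
function redact(value) {
  if (typeof value === 'string') return redactString(value);
  if (Array.isArray(value)) return value.map(redact);
  if (value && typeof value === 'object') {
    const out = {};
    for (const [key, v] of Object.entries(value)) {
      out[key] = SENSITIVE_KEYS.has(key.toLowerCase()) ? '[REDACTED]' : redact(v);
    }
    return out;
  }
  return value; // numbers, booleans, null, undefined
}

console.log(JSON.stringify(redact({ password: 'hunter2', note: 'card 4111 1111 1111 1111' })));
// prints {"password":"[REDACTED]","note":"card [REDACTED_CARD]"}
```

Redaction like this is a backstop; the primary rule is still not to pass these values to the logger at all.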

Create a Backup and Disaster Recovery Plan

- Regularly back up your log data to secure locations

- Encrypt all backups

- Test restoration procedures to ensure you can recover when needed

- Define retention policies based on regulatory requirements

These security measures aren't just good practice—they're increasingly required by regulations like GDPR, HIPAA, and others that mandate the protection of sensitive data.

Step-by-Step Example: Building a Node.js Microservices Logging System

Let's walk through a practical example using Node.js with Express:

First, set up a logging library:

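```javascript
// Using Winston for structured logging
const winston = require('winston');

const logger = winston.createLogger({
  level: 'info',
  // Add an ISO 8601 timestamp to each entry, then serialize it as JSON
  format: winston.format.combine(
    winston.format.timestamp(),
    winston.format.json()
  ),
  defaultMeta: { service: 'user-service' },
  // Write to stdout so the container runtime or a log shipper can collect it
  transports: [new winston.transports.Console()]
});
```

Next, add middleware to create and track correlation IDs: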

app.use((req, res, next) => {

// Generate or use existing correlation ID

req.correlationId = req.headers['x-correlation-id'] || uuidv4();

// Add it to response headers

res.setHeader('x-correlation-id', req.correlationId);

// Add it to all logs in this request

logger.defaultMeta.correlationId = req.correlationId;

next();

});Then use the logger in your routes:

app.post('/users', async (req, res) => {

logger.info('Creating new user', {

email: req.body.email.substring(0, 3) + '***' // Partial email for privacy

});

try {

const user = await createUser(req.body);

logger.info('User created successfully', { userId: user.id });

res.status(201).json(user);

} catch (err) {

logger.error('Failed to create user', {

error: err.message,

stack: process.env.NODE_ENV === 'development' ? err.stack : undefined

});

res.status(400).json({ error: 'User creation failed' });

}

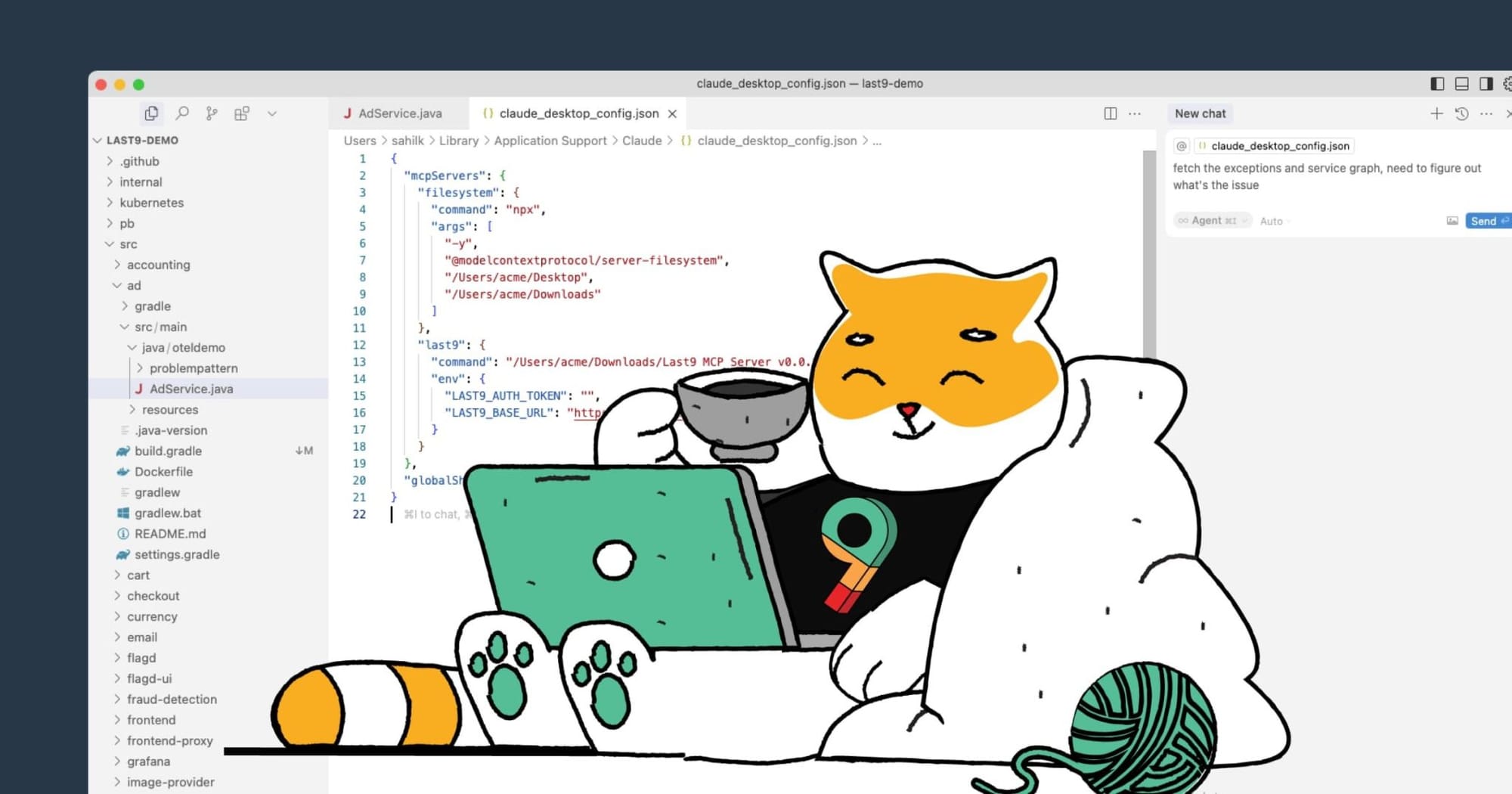

});Finally, configure log shipping to your centralized logging platform. With Last9, you could use OpenTelemetry to unify your logs with metrics and traces:

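```javascript
const { trace } = require('@opentelemetry/api');
const { NodeTracerProvider } = require('@opentelemetry/sdk-trace-node');
const { BatchSpanProcessor } = require('@opentelemetry/sdk-trace-base');
const { OTLPTraceExporter } = require('@opentelemetry/exporter-trace-otlp-http');
const { registerInstrumentations } = require('@opentelemetry/instrumentation');
const { getNodeAutoInstrumentations } = require('@opentelemetry/auto-instrumentations-node');
const winston = require('winston');

// Start the SDK before express/http are required so auto-instrumentation can patch them.
// The OTLP exporter reads OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS
// from the environment; set them to the OTLP endpoint and credentials from Last9.
const provider = new NodeTracerProvider({
  spanProcessors: [new BatchSpanProcessor(new OTLPTraceExporter())],
});
provider.register();

registerInstrumentations({
  instrumentations: [getNodeAutoInstrumentations()],
  tracerProvider: provider,
});

// Connect logs to traces: add the active span's IDs to every entry from the step-one logger
const addTraceContext = winston.format((info) => {
  const span = trace.getActiveSpan();
  if (span) {
    const { traceId, spanId } = span.spanContext();
    info.trace_id = traceId;
    info.span_id = spanId;
  }
  return info;
});
logger.format = winston.format.combine(addTraceContext(), logger.format);
```

This setup gives you structured logs with correlation IDs and trace IDs that link each entry to its trace. A log shipper or OpenTelemetry Collector reading your services' stdout then forwards those logs to your central platform alongside the traces.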

Essential Tools for Professional Microservices Logging Setups

The right tools make all the difference. Here are some worth checking out:

Logging Libraries

- Winston/Bunyan (Node.js): Great for structured JSON logging

- Logback/SLF4J (Java): Flexible, powerful logging for JVM languages

- Zap (Go): Super fast, structured logging for Go services

Log Shippers

- Fluentd: Lightweight log collector with hundreds of plugins

- Vector: High-performance observability data pipeline

- Filebeat: Simple, reliable log shipping from the Elastic stack

Observability Platforms

- Last9: Purpose-built for high-cardinality microservices environments, with unified metrics, logs, and traces

- Grafana Loki: Horizontally scalable log aggregation system

- Jaeger: Open-source distributed tracing system

Wrapping Up

As your system grows, your logging needs will evolve. Keep refining your approach based on real troubleshooting experiences. The best microservices logging setup isn't the most complex—it's the one that helps you solve problems faster.

FAQs

How many logs should I keep and for how long?

Balance retention needs with costs. Most teams follow a tiered approach:

- 7-14 days of all logs for immediate troubleshooting

- 30-90 days of ERROR and WARN logs for recent issue investigation

- 6-12 months of security and audit logs for compliance purposes

Consider implementing log sampling for high-volume, low-value logs to reduce storage costs while maintaining visibility into system behavior.

Should I log in development the same way as in production?

Yes! Use the same logging setup everywhere to avoid "it works on my machine" problems. The main differences should be:

- More DEBUG level logs enabled in development environments

- Local storage instead of central platform in development

- Less sensitive data redaction during early development phases (but still no passwords or tokens!)

Having consistent logging across environments means developers can troubleshoot issues in their local environment just like they would in production.
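One way to keep a single setup and vary only those settings is to derive them from the environment. A minimal sketch; the `NODE_ENV` values, the setting names, and the `loggingConfigFor` helper are assumptions.

```javascript
// Same logging code everywhere; only these settings differ per environment
function loggingConfigFor(env = process.env.NODE_ENV || 'development') {
  const isDev = env === 'development';
  return {
    level: isDev ? 'DEBUG' : 'INFO',          // more DEBUG output locally
    destination: isDev ? 'local' : 'central', // local console vs. your logging platform
    redactPII: !isDev,                        // relaxed PII masking only in development
    redactSecrets: true,                      // passwords and tokens are always redacted
    format: 'json',                           // identical structure in every environment
  };
}

console.log(loggingConfigFor('development').level); // prints DEBUG
console.log(loggingConfigFor('production').level);  // prints INFO
```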

How do I debug logs across multiple services?

Use these techniques in order of increasing complexity:

- Filter by correlation ID in your centralized logging platform to see all logs for a specific request

- If using distributed tracing, find the trace for the relevant request and follow it through each service

- Look for ERROR logs around the time of the incident, then expand your search to related services

- Create dashboards that visualize log patterns across multiple services

Remember that the power of centralized logging isn't just in collecting logs—it's in being able to query and correlate them effectively.
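The first technique, filtering by correlation ID, boils down to the query below, shown as a minimal sketch over already-parsed entries from several services. The field names (`correlation_id`, `timestamp`, `service`) and the `requestTimeline` helper are assumptions; your platform's query language does the same thing at scale.

```javascript
// Hypothetical entries collected from three services, in arrival order
const entries = [
  { service: 'payment-service', timestamp: '2025-05-19T10:23:46.120Z', level: 'ERROR', correlation_id: 'req-7', message: 'Card declined' },
  { service: 'api-gateway', timestamp: '2025-05-19T10:23:45.900Z', level: 'INFO', correlation_id: 'req-7', message: 'POST /checkout' },
  { service: 'api-gateway', timestamp: '2025-05-19T10:23:45.950Z', level: 'INFO', correlation_id: 'req-8', message: 'GET /cart' },
  { service: 'order-service', timestamp: '2025-05-19T10:23:46.010Z', level: 'INFO', correlation_id: 'req-7', message: 'Order created' },
];

// Keep one request's entries and order them by time to see its path through the system
function requestTimeline(logs, correlationId) {
  return logs
    .filter((e) => e.correlation_id === correlationId) // filter() copies, so input order is untouched
    .sort((a, b) => Date.parse(a.timestamp) - Date.parse(b.timestamp))
    .map((e) => `${e.timestamp} ${e.service} ${e.level} ${e.message}`);
}

console.log(requestTimeline(entries, 'req-7').join('\n'));
// 2025-05-19T10:23:45.900Z api-gateway INFO POST /checkout
// 2025-05-19T10:23:46.010Z order-service INFO Order created
// 2025-05-19T10:23:46.120Z payment-service ERROR Card declined
```

Sorting by timestamp assumes reasonably synchronized clocks across hosts; when clocks drift, the parent/child links in distributed traces give a more reliable order.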

How can I reduce logging costs without losing visibility?

- Sample high-volume, low-value logs (like routine health checks) at a percentage (e.g., log only 1 in 100)

- Use dynamic log levels to increase detail only when issues arise

- Compress logs during transmission and storage (formats such as GELF support compressed payloads)

- Set appropriate retention policies by log level (keep ERROR logs longer than INFO logs)

- Filter out noisy log sources that provide little value

- Use structured logging to make searches more efficient and reduce the need to log excessive context
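The first technique, keeping 1 in N of a high-volume message, can be as simple as a counter per message type. A minimal sketch; `createSampler`, the per-key counting, and the rule that WARN and ERROR always pass are assumptions. A counter gives exactly 1 in N per process; random sampling (`Math.random() < 1 / N`) is a common stateless alternative.

```javascript
// Hypothetical sampler: keep every Nth entry per key, but never drop WARN or ERROR.
// Keys come from service + message, so use it for low-cardinality messages like health checks.
function createSampler(rate = 100) {
  const counters = new Map();
  return function shouldKeep(entry) {
    if (entry.level === 'WARN' || entry.level === 'ERROR') return true;
    const key = `${entry.service}:${entry.message}`;
    const seen = (counters.get(key) || 0) + 1;
    counters.set(key, seen);
    return (seen - 1) % rate === 0; // keep the 1st, (rate+1)th, (2*rate+1)th, ...
  };
}

const keep = createSampler(100);
let kept = 0;
for (let i = 0; i < 1000; i++) {
  if (keep({ service: 'api', level: 'INFO', message: 'GET /healthz 200' })) kept++;
}
console.log(kept); // prints 10
```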

What should I never put in logs?

- Passwords or authentication tokens

- Credit card numbers or financial data

- Personally identifiable information (PII) like social security numbers, full names, or addresses

- Session tokens or cookies

- Encryption keys or security certificates

- Database connection strings with credentials

- API keys or other service credentials

- JWT tokens (which can contain sensitive claims)

When in doubt, err on the side of caution. It's better to log too little sensitive data than too much.

How do I handle logging in containers and Kubernetes?

In containerized environments, follow these best practices:

- Always write logs to stdout/stderr rather than to files

- Let the container runtime handle log collection

- Use a DaemonSet to run log collectors on each node

- Consider using a sidecar pattern for applications with special logging requirements

- Add Kubernetes metadata (pod name, namespace, container) to your logs

- Use Kubernetes annotations to configure logging behavior

- Keep log files outside your container image to avoid increasing image size

In Kubernetes specifically, tools like Fluentd or Vector can be deployed as DaemonSets to collect logs from all nodes and forward them to your centralized logging platform.
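For the "add Kubernetes metadata" practice, a service can read pod details that you expose as environment variables in the pod spec, typically through the Downward API. A minimal sketch; the variable names `POD_NAME`, `POD_NAMESPACE`, and `CONTAINER_NAME` and both helpers are hypothetical and must match your own pod spec. Collectors such as Fluentd or Vector can usually attach this metadata themselves, so doing it in the app is optional.

```javascript
// Pod details exposed as environment variables in the pod spec (names are hypothetical), e.g.:
//   env:
//     - name: POD_NAME
//       valueFrom: { fieldRef: { fieldPath: metadata.name } }
//     - name: POD_NAMESPACE
//       valueFrom: { fieldRef: { fieldPath: metadata.namespace } }
function kubernetesMetadata(env = process.env) {
  const meta = {
    pod_name: env.POD_NAME,
    namespace: env.POD_NAMESPACE,
    container: env.CONTAINER_NAME, // set as a plain value in the pod spec
  };
  // Drop unset fields (e.g., when running locally outside Kubernetes)
  return Object.fromEntries(Object.entries(meta).filter(([, value]) => value !== undefined));
}

const K8S_METADATA = kubernetesMetadata(); // read once at startup

// One JSON line per entry on stdout; the container runtime captures it for the node-level collector
function logToStdout(level, message, fields = {}) {
  const line = JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    ...K8S_METADATA,
    ...fields,
  });
  process.stdout.write(line + '\n');
  return line;
}

logToStdout('INFO', 'Service started');
```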

How do I transition from monolith to microservices logging?

Follow this step-by-step approach:

- Start by implementing structured logging in your monolith before breaking it apart

- Add correlation IDs to track requests through your monolith

- Set up a centralized logging platform that can scale with your future architecture

- As you extract services, ensure they follow your logging standards from day one

- Implement a consistent approach to error handling and logging across all new services

- Set up monitoring and alerting based on your logs early in the transition

- Consider implementing distributed tracing from the beginning of your transition

The key is to establish good logging practices before you decompose your monolith, making the transition to microservices logging much smoother.

How do I monitor logs effectively across many microservices?

Raw logs are valuable, but for effective monitoring:

- Create alerts for critical error patterns that require immediate attention

- Build dashboards showing error rates across services

- Set up anomaly detection to catch unusual log patterns

- Monitor the absence of expected logs (sometimes the problem is what you don't see)

- Create synthetic transactions that test your entire system and generate logs

- Establish baseline metrics for normal log volumes and alert on deviations

- Use log-based metrics to track business KPIs
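Two of those checks, per-service error rates and the absence of expected logs, reduce to counting over a time window. A minimal sketch over already-parsed entries; the 5% threshold, the expected-services list, and the `checkWindow` helper are assumptions, and in practice your platform's alerting rules would run the equivalent queries.

```javascript
// Evaluate one time window of parsed log entries and return alert messages
function checkWindow(entries, { expectedServices, maxErrorRate = 0.05 }) {
  const stats = new Map(); // service -> { total, errors }
  for (const e of entries) {
    const s = stats.get(e.service) || { total: 0, errors: 0 };
    s.total += 1;
    if (e.level === 'ERROR') s.errors += 1;
    stats.set(e.service, s);
  }

  const alerts = [];
  for (const [service, { total, errors }] of stats) {
    const rate = errors / total;
    if (rate > maxErrorRate) {
      alerts.push(`${service}: error rate ${(rate * 100).toFixed(1)}% exceeds ${(maxErrorRate * 100).toFixed(1)}%`);
    }
  }
  // Silence can be the problem: flag expected services that logged nothing
  for (const service of expectedServices) {
    if (!stats.has(service)) alerts.push(`${service}: no logs in this window`);
  }
  return alerts;
}

const windowEntries = [
  ...Array.from({ length: 18 }, () => ({ service: 'checkout', level: 'INFO' })),
  ...Array.from({ length: 2 }, () => ({ service: 'checkout', level: 'ERROR' })),
  { service: 'search', level: 'INFO' },
];
for (const alert of checkWindow(windowEntries, { expectedServices: ['checkout', 'search', 'payments'] })) {
  console.log(alert);
}
// checkout: error rate 10.0% exceeds 5.0%
// payments: no logs in this window
```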

Last9's platform excels at transforming your logs into actionable metrics and alerts without requiring you to pre-define what's important—it can help identify patterns that weren't obvious at first.

How do I handle multi-language logging in microservices?

When your microservices are built with different programming languages:

- Create a logging standards document that all teams follow regardless of language

- Focus on output format (JSON) rather than specific libraries

- Provide example configurations for popular logging libraries in each language

- Consider building thin wrapper libraries that enforce your standards

- Use a log collector that can normalize different formats if needed

- Ensure timestamp formats and timezone handling are consistent across languages

- Test that correlation IDs are properly passed between services written in different languages

The goal is consistent log output regardless of the underlying implementation details.
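When services in different languages emit slightly different fields, a normalization step (in a thin wrapper library or in the log collector) can map them onto one schema. A minimal sketch; the accepted inputs (epoch seconds or milliseconds, ISO strings with offsets, level spellings such as `warn`/`WARNING`) and the alternate field names (`ts`, `time`, `severity`, `msg`) are assumptions about what your services might produce, not the defaults of any particular library.

```javascript
// Level spellings seen across languages, collapsed onto the four levels used in this guide
const LEVEL_ALIASES = new Map([
  ['trace', 'DEBUG'], ['debug', 'DEBUG'],
  ['info', 'INFO'], ['information', 'INFO'],
  ['warn', 'WARN'], ['warning', 'WARN'],
  ['error', 'ERROR'], ['err', 'ERROR'], ['critical', 'ERROR'], ['fatal', 'ERROR'],
]);

// Convert epoch seconds, epoch milliseconds, or date strings to ISO 8601 in UTC
function toIsoUtc(value) {
  if (typeof value === 'number') {
    // Heuristic: values below 1e12 are treated as seconds, larger ones as milliseconds
    return new Date(value < 1e12 ? value * 1000 : value).toISOString();
  }
  const parsed = new Date(value);
  if (Number.isNaN(parsed.getTime())) throw new Error(`Unparseable timestamp: ${value}`);
  return parsed.toISOString();
}

// Map one raw entry onto the shared schema; unknown levels are kept, uppercased
function normalize(raw) {
  const { ts, time, timestamp, level, severity, msg, message, ...rest } = raw;
  const rawLevel = String(level ?? severity ?? 'info').toLowerCase();
  return {
    ...rest,
    timestamp: toIsoUtc(timestamp ?? ts ?? time),
    level: LEVEL_ALIASES.get(rawLevel) ?? rawLevel.toUpperCase(),
    message: message ?? msg ?? '',
  };
}

console.log(JSON.stringify(normalize({ ts: 1747650225.5, level: 'warn', msg: 'slow query', service: 'search-go' })));
// prints {"service":"search-go","timestamp":"2025-05-19T10:23:45.500Z","level":"WARN","message":"slow query"}
console.log(JSON.stringify(normalize({ time: '2025-05-19T12:23:45+02:00', severity: 'WARNING', message: 'retrying', service: 'billing-py' })));
// prints {"service":"billing-py","timestamp":"2025-05-19T10:23:45.000Z","level":"WARN","message":"retrying"}
```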