Kubernetes has revolutionized how we manage containerized applications, bringing scalability, reliability, and flexibility to the forefront.

Two fundamental components of Kubernetes are Pods and Nodes, and understanding their differences is crucial for anyone working with Kubernetes clusters.

While most people are familiar with these terms, a deeper dive into the specifics can help you optimize your Kubernetes setup and avoid common pitfalls. So, let's break it down: What exactly are Pods and Nodes, and how do they differ?

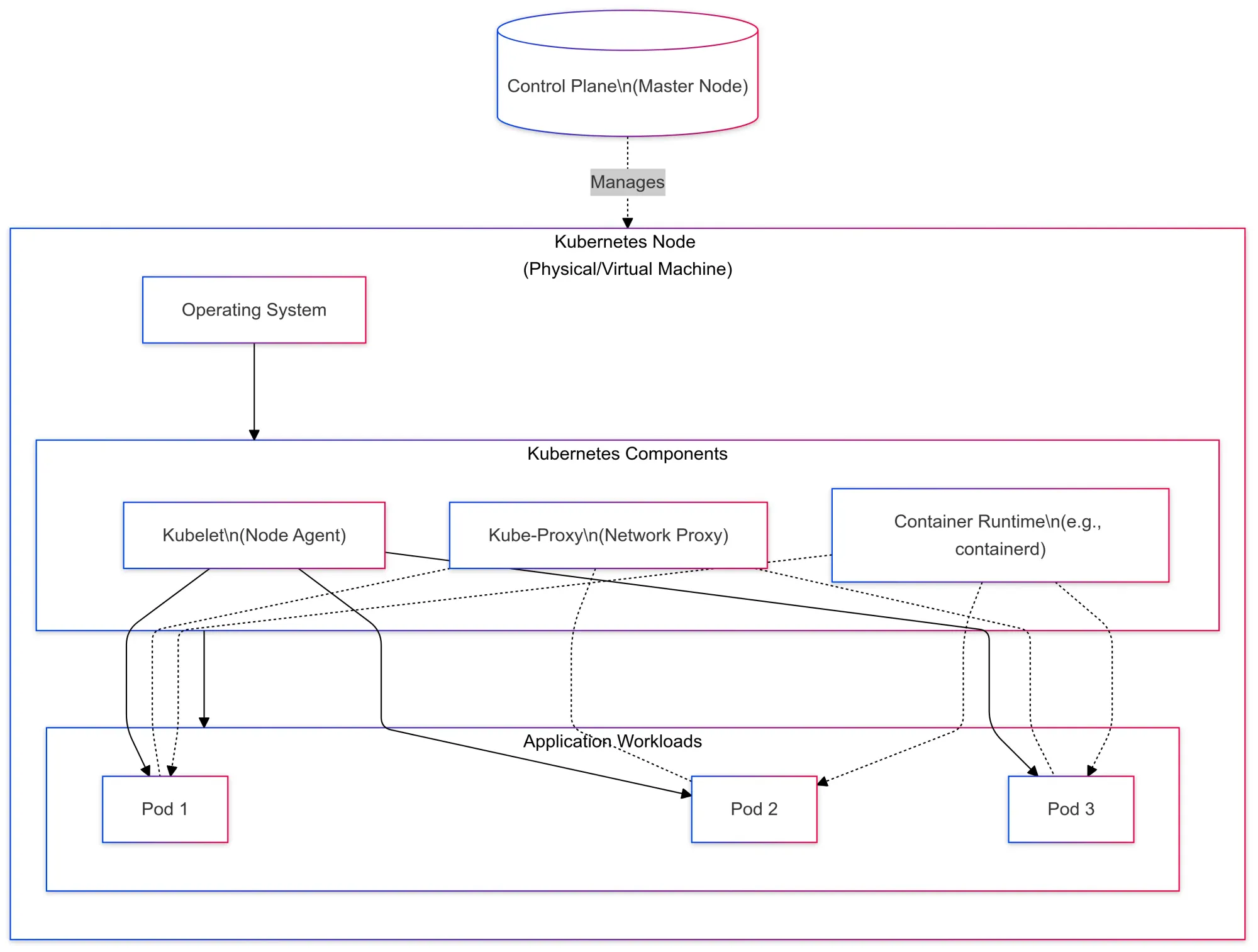

What is a Kubernetes Node?

A Kubernetes Node is a physical or virtual machine that runs your application's containers. It serves as the computational resource within a Kubernetes cluster.

A Node includes everything required to run containers: an operating system plus the Kubernetes node components, namely the kubelet, kube-proxy, and a container runtime.

Key Features of a Kubernetes Node:

- Hardware: Each Node represents a machine, whether it's on-premises or cloud-based.

- Multiple Pods: A Node can host one or more Pods, depending on its resources.

- Resource Management: Nodes are responsible for providing CPU, memory, and networking capabilities to Pods.

- Control: Nodes are managed by the Kubernetes control plane, which tracks their health and stops scheduling new Pods onto Nodes that become unhealthy.

A Node acts as the worker machine within the cluster, and each Node is part of a larger ecosystem designed to handle the deployment, scaling, and operations of containerized applications.
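To make the "Node provides resources to multiple Pods" relationship concrete, here is a minimal Python sketch that models a Node as a fixed pool of allocatable CPU (in millicores) and memory (in MiB), from which each hosted Pod's requests are subtracted. The `Node` and `Pod` classes and their fields are hypothetical stand-ins, not the Kubernetes API objects; real Nodes also set aside capacity for system daemons before reporting what is allocatable.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical Pod: a name plus the CPU (millicores) and memory (MiB) it requests."""
    name: str
    cpu_m: int
    mem_mib: int


@dataclass
class Node:
    """Hypothetical worker Node: allocatable capacity plus the Pods placed on it."""
    name: str
    cpu_m: int
    mem_mib: int
    pods: list[Pod] = field(default_factory=list)

    def free(self) -> tuple[int, int]:
        # Remaining capacity = allocatable minus the requests of Pods already here.
        used_cpu = sum(p.cpu_m for p in self.pods)
        used_mem = sum(p.mem_mib for p in self.pods)
        return self.cpu_m - used_cpu, self.mem_mib - used_mem

    def can_host(self, pod: Pod) -> bool:
        free_cpu, free_mem = self.free()
        return pod.cpu_m <= free_cpu and pod.mem_mib <= free_mem

    def add(self, pod: Pod) -> None:
        if not self.can_host(pod):
            raise ValueError(f"{self.name} lacks room for {pod.name}")
        self.pods.append(pod)


node = Node("worker-1", cpu_m=2000, mem_mib=4096)
node.add(Pod("web-1", cpu_m=500, mem_mib=512))
node.add(Pod("web-2", cpu_m=500, mem_mib=512))
print(node.free())                                          # (1000, 3072)
print(node.can_host(Pod("db", cpu_m=1500, mem_mib=1024)))   # False: not enough CPU left
```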

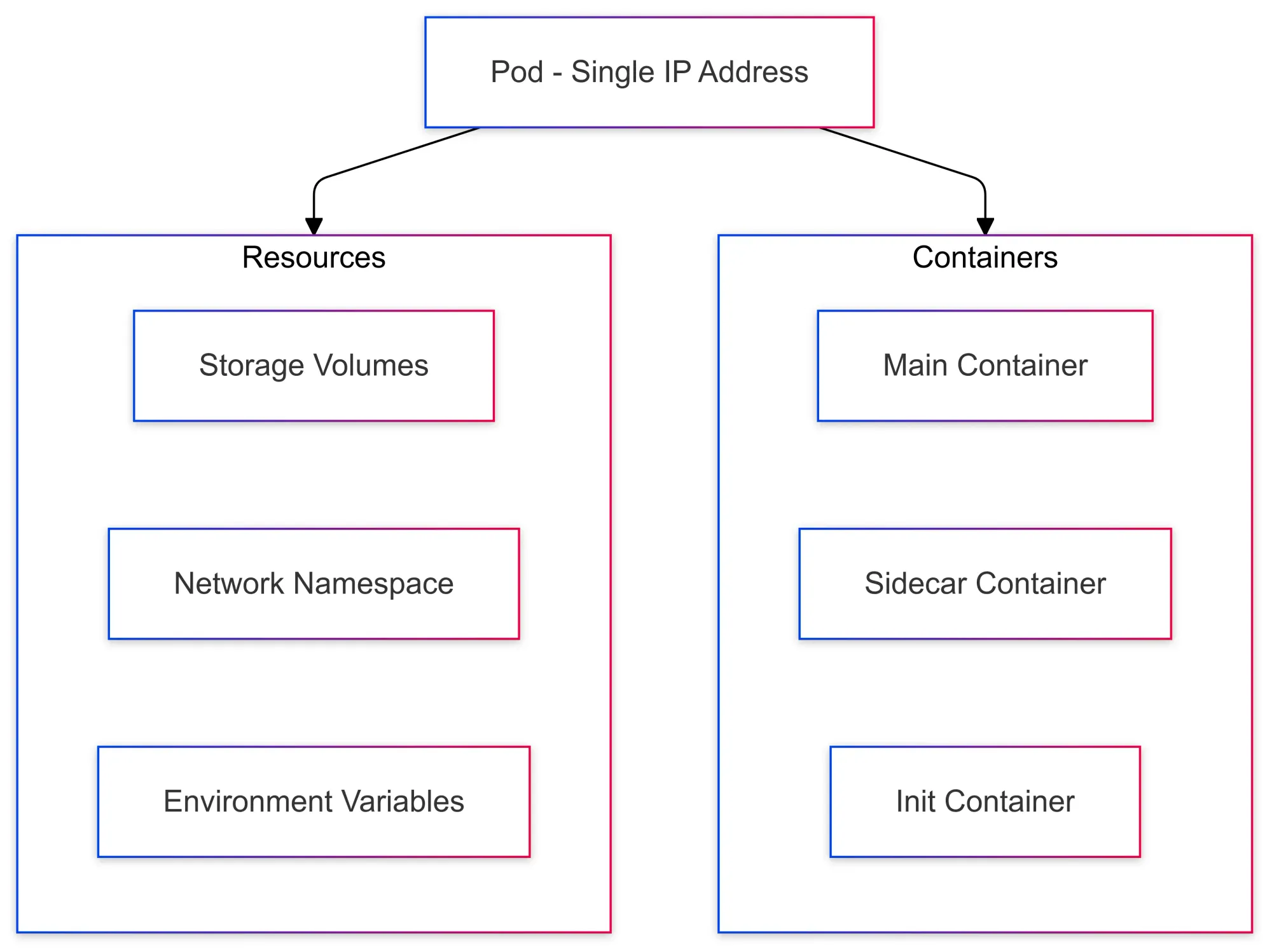

What is a Kubernetes Pod?

A Kubernetes Pod is the smallest deployable unit within Kubernetes. It’s essentially a group of one or more containers that share the same network namespace and storage.

Pods ensure that containers that need to communicate closely are co-located on the same machine (or Node), sharing resources such as the Pod's IP address and its storage volumes.

Key Features of a Kubernetes Pod:

- Container Grouping: A Pod can hold multiple containers, though it's typically one container per Pod for simplicity.

- Shared Resources: Containers within the same Pod share networking and storage resources, making them highly effective for tightly coupled applications.

- Ephemeral Nature: Pods are ephemeral. A Pod that is deleted, or whose Node fails, is not brought back; a controller such as a Deployment creates a new Pod, with a new name and IP address, to replace it.

- Replicas: Kubernetes allows you to scale Pods by creating multiple replicas, which can be distributed across multiple Nodes.

Pods are where your actual applications and workloads reside. They encapsulate everything the container needs to run and ensure consistency in how applications are deployed across a Kubernetes cluster.
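Replicas and ephemerality meet in the controllers: a Deployment manages a ReplicaSet, which keeps comparing the desired replica count with the Pods that actually exist and makes up the difference. The Python sketch below shows that reconciliation idea only; the `reconcile` function and the `web-N` naming are hypothetical, and a real ReplicaSet chooses which surplus Pods to delete using more criteria than this sketch does.

```python
from itertools import count

# Hypothetical name generator; real controllers append a random suffix instead.
_next_id = count(1)


def reconcile(desired: int, running: list[str]) -> tuple[list[str], list[str]]:
    """Return (to_create, to_delete) so the running Pod count converges on `desired`."""
    diff = desired - len(running)
    if diff > 0:
        # Too few Pods: create brand-new ones. A lost Pod is replaced, not revived.
        return [f"web-{next(_next_id)}" for _ in range(diff)], []
    if diff < 0:
        # Too many Pods: delete the surplus (this sketch simply picks the last ones).
        return [], running[diff:]
    return [], []


running = ["web-a", "web-b", "web-c"]
running.remove("web-b")               # the Node hosting web-b fails
to_create, to_delete = reconcile(3, running)
print(to_create, to_delete)           # ['web-1'] []
```

Because the controller only reasons about counts, the same loop handles crashes, Node failures, and manual scaling alike.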

Kubernetes Pod vs Node: Key Differences

Here’s a breakdown of the most important distinctions:

| Feature | Kubernetes Pod | Kubernetes Node |
|---|---|---|
| Definition | A group of one or more containers | A machine (physical or virtual) in the cluster |
| Scope | A logical host for its containers | The underlying compute infrastructure |
| Resources | Consumes CPU and memory from its Node; its containers share the Pod's network and volumes | Provides CPU, memory, and storage to multiple Pods |
| Life Cycle | Ephemeral; replaced by a controller rather than repaired | Long-lived; registered with and monitored by the control plane |
| Scaling | Scaled by adding replicas (more Pods) | Scaled by adding more Nodes to the cluster |
| Role | Runs the application containers | Runs Pods and provides the underlying compute resources |
| Location | Runs on exactly one Node; replicas can be spread across Nodes | Is a single physical or virtual machine |

When to Use Pods and Nodes

Because Pods and Nodes serve different purposes, knowing when to focus on each, and how to manage them, can greatly improve the performance of your Kubernetes applications.

- When to Focus on Nodes: Nodes are the foundational resource in Kubernetes. You need to monitor and manage the health of Nodes to ensure your cluster remains stable. Consider adding more Nodes if your applications require more CPU, memory, or storage.

- When to Focus on Pods: Pods are the building blocks of your application. You’ll need to optimize Pod placement and replication for better load balancing, scaling, and redundancy. Pay attention to the resource requirements of your Pods to ensure they’re properly allocated.

How Does Scaling in Kubernetes Happen? Pods vs. Nodes

Scaling in Kubernetes can be tricky if you don’t understand the differences between Pods and Nodes.

Pods are typically scaled horizontally, by running more replicas of the same Pod. But what happens when your Nodes run out of resources? You scale the Nodes themselves by adding more machines to the cluster.

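This distinction is crucial because adding Pod replicas won't help if your Nodes lack the capacity to run them: the scheduler leaves any Pod it cannot place in the Pending state until resources become available.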

Horizontal Scaling: Pod Replicas

One of the key advantages of Kubernetes is the ability to scale Pods horizontally by increasing or decreasing the number of Pods running in your cluster based on demand.

- If a HorizontalPodAutoscaler is configured and your web application experiences a traffic spike, Kubernetes automatically adds Pods to handle the increased load.

- When traffic decreases, the autoscaler reduces the number of Pods to free up resources.

This dynamic scaling ensures efficient resource utilization without manual intervention.
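The HorizontalPodAutoscaler documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below implements just that rule plus min/max clamping; the function and parameter names are hypothetical, and it deliberately leaves out details the real autoscaler handles, such as stabilization windows, Pod readiness, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(current_replicas * current_metric / target_metric), clamped."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Stay within the autoscaler's configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6: traffic spike
print(desired_replicas(6, current_metric=30, target_metric=60))  # 3: traffic drops
```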

Node Scaling

If scaling Pods isn’t enough to handle your workloads, you need to scale your Nodes by adding more physical or virtual machines to your Kubernetes cluster.

- Node scaling is a more complex operation because it involves adding infrastructure to your environment.

- In managed Kubernetes environments (e.g., AWS EKS, GCP GKE), this process is often automated, making it easier to manage.

- However, understanding how Node scaling works is still essential for optimizing cluster performance.
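One way to see why Node scaling is the harder operation: an autoscaler has to estimate how many new machines the Pending Pods actually need. The Python sketch below makes that estimate with simple first-fit-decreasing bin packing over CPU and memory requests, assuming every new Node has the same capacity. The `nodes_needed` function is hypothetical; real node autoscalers such as the Kubernetes Cluster Autoscaler run a much more detailed scheduling simulation.

```python
def nodes_needed(pending: list[tuple[int, int]], node_cpu_m: int, node_mem_mib: int) -> int:
    """Estimate how many identical new Nodes the pending Pods need (first-fit decreasing).

    Each pending Pod is (cpu_millicores, memory_mib); each new Node offers the same capacity.
    """
    free: list[list[int]] = []  # remaining [cpu, mem] on each new Node opened so far
    # Placing the biggest requests first usually packs Nodes more tightly.
    for cpu, mem in sorted(pending, reverse=True):
        if cpu > node_cpu_m or mem > node_mem_mib:
            raise ValueError("a Pod requests more than an empty Node offers")
        for slot in free:
            if cpu <= slot[0] and mem <= slot[1]:
                slot[0] -= cpu
                slot[1] -= mem
                break
        else:
            # No new Node has room left: open another one.
            free.append([node_cpu_m - cpu, node_mem_mib - mem])
    return len(free)


# Five Pending Pods, each requesting 1500m CPU and 2 GiB, on Nodes with 4 CPUs and 8 GiB.
print(nodes_needed([(1500, 2048)] * 5, node_cpu_m=4000, node_mem_mib=8192))  # 3
```

CPU is the binding constraint here: each Node fits only two such Pods, so five Pods need three Nodes even though memory would allow more.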

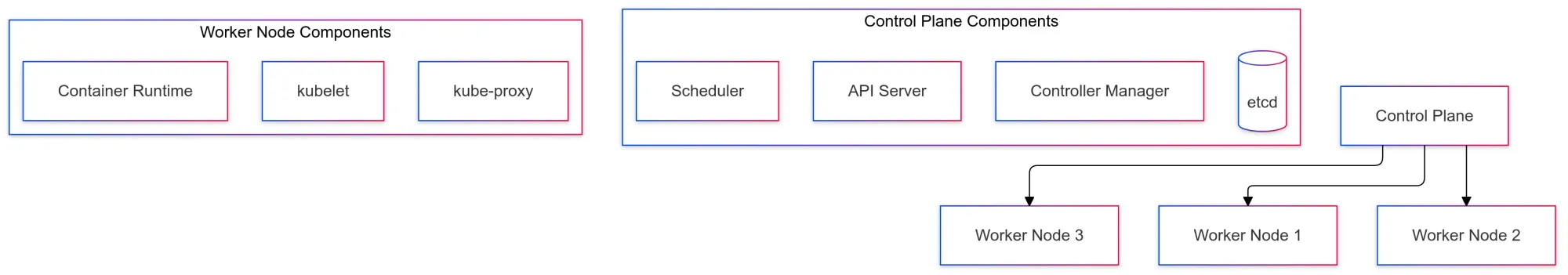

What Are Kubernetes Clusters?

A Kubernetes Cluster is the foundation of any Kubernetes deployment. It consists of physical or virtual machines (Nodes) that work together to run containerized applications efficiently. A cluster has two main components:

1. Control Plane (The Brain of Kubernetes)

The Control Plane manages the cluster’s state, schedules workloads, and ensures everything is running smoothly. It includes:

- API Server – The gateway for all Kubernetes operations.

- Controller Manager – Runs the controllers that regulate cluster behavior (e.g., ensuring desired Pod counts).

- Scheduler – Assigns newly created Pods to Nodes that have enough resources for them.

- etcd – A distributed key-value store that keeps track of the cluster’s configuration and state.
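The Scheduler's job can be pictured in two phases: filter out Nodes that cannot run the Pod, then score the remaining ones and bind the Pod to the best. The Python sketch below follows that shape, assuming a "least allocated" style score (prefer the Node left with the most free capacity). `NodeState` and `schedule` are hypothetical names, and the real kube-scheduler applies many more filter and score plugins than resource fit and readiness.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeState:
    """Hypothetical view of a Node as the scheduler sees it."""
    name: str
    alloc_cpu_m: int       # allocatable CPU, millicores
    alloc_mem_mib: int     # allocatable memory, MiB
    req_cpu_m: int = 0     # CPU already requested by Pods bound here
    req_mem_mib: int = 0   # memory already requested by Pods bound here
    ready: bool = True


def schedule(pod_cpu_m: int, pod_mem_mib: int, nodes: list[NodeState]) -> Optional[str]:
    """Choose a Node for one Pod: filter infeasible Nodes, then score the survivors."""
    # Filter: drop Nodes that are not Ready or lack unrequested capacity for the Pod.
    feasible = [
        n for n in nodes
        if n.ready
        and n.req_cpu_m + pod_cpu_m <= n.alloc_cpu_m
        and n.req_mem_mib + pod_mem_mib <= n.alloc_mem_mib
    ]
    if not feasible:
        return None  # no fit: the Pod would remain Pending

    def score(n: NodeState) -> float:
        # Average fraction of CPU and memory left on the Node after placement.
        cpu_left = 1 - (n.req_cpu_m + pod_cpu_m) / n.alloc_cpu_m
        mem_left = 1 - (n.req_mem_mib + pod_mem_mib) / n.alloc_mem_mib
        return (cpu_left + mem_left) / 2

    best = max(feasible, key=score)
    # Bind: account for the new Pod's requests on the chosen Node.
    best.req_cpu_m += pod_cpu_m
    best.req_mem_mib += pod_mem_mib
    return best.name


nodes = [
    NodeState("node-a", 4000, 8192, req_cpu_m=3000, req_mem_mib=2048),
    NodeState("node-b", 4000, 8192, req_cpu_m=1000, req_mem_mib=2048),
    NodeState("node-c", 8000, 16384, ready=False),
]
first = schedule(1000, 1024, nodes)   # node-a fits but would be full; node-b keeps headroom
second = schedule(4000, 1024, nodes)  # no Ready Node has 4000m unrequested
print(first, second)                  # node-b None
```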

2. Worker Nodes (Where Applications Run)

Worker Nodes are the machines that host Pods, the smallest deployable units in Kubernetes. Each Node includes:

- kubelet – Ensures containers inside Pods are running properly.

- kube-proxy – Maintains network rules on the Node so that traffic sent to a Service reaches one of its Pods.

- Container Runtime – Runs the actual containers (e.g., containerd, CRI-O).
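Part of the kubelet's job of keeping containers running is restarting them when they exit, as the Pod's restart policy allows. The Kubernetes documentation describes the default restart delay as an exponential back-off (10 s, 20 s, 40 s, ...) capped at five minutes, which is what a CrashLoopBackOff status reflects. The hypothetical Python helper below only computes that delay sequence; it ignores details such as the timer resetting after a container runs cleanly for a while.

```python
def restart_delays(restarts: int, base_s: int = 10, cap_s: int = 300) -> list[int]:
    """Seconds the kubelet waits before each successive restart of a crashing container.

    Models the documented default: start at 10 s, double each time, cap at 300 s.
    """
    return [min(base_s * 2 ** i, cap_s) for i in range(restarts)]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```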

Why Are Kubernetes Clusters Important?

A Kubernetes cluster ensures:

- Workload orchestration – Automatically manages containerized applications.

- Scaling – Adjusts resources based on demand.

- Self-healing – Detects and recovers from failures.

- Load balancing – Distributes traffic efficiently across running instances.

What Is the Relationship Between Pods and Containers?

In Kubernetes, Pods act as the wrapper for one or more containers, providing the necessary environment for them to operate. While containers run the actual applications, Pods serve as the smallest deployable unit in Kubernetes.

How Pods and Containers Work Together

- Pods group containers that need to work closely together.

- Containers within the same Pod share:

  - Network namespace – They can communicate via `localhost`, as if they were processes on the same machine.

  - Storage volumes – They can access shared data easily.
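The shared network namespace is what makes `localhost` work between containers of one Pod. As a rough analogy, the Python sketch below uses two threads of a single process, which likewise share one network namespace, as stand-ins for a main container and a sidecar: the "sidecar" reaches the "app" at 127.0.0.1 without any Service or Pod IP. Nothing here uses Kubernetes itself; the names `app_container` and `sidecar` are purely illustrative.

```python
import socket
import threading

# One process stands in for one Pod: its threads share a single network namespace,
# much as the containers of a Pod do, so 127.0.0.1 reaches a peer "container".
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
server.listen(1)
port = server.getsockname()[1]


def app_container() -> None:
    """Stand-in for the main container: read one request, answer it, then exit."""
    conn, _ = server.accept()
    with conn:
        request = b""
        while chunk := conn.recv(1024):
            request += chunk
        conn.sendall(b"pong:" + request)


app = threading.Thread(target=app_container)
app.start()

# Stand-in for a sidecar: reach the app over localhost, with no Service or Pod IP involved.
with socket.create_connection(("127.0.0.1", port)) as sidecar:
    sidecar.sendall(b"ping")
    sidecar.shutdown(socket.SHUT_WR)  # tell the app the request is complete
    reply = b""
    while chunk := sidecar.recv(1024):
        reply += chunk

app.join()
server.close()
print(reply)  # b'pong:ping'
```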

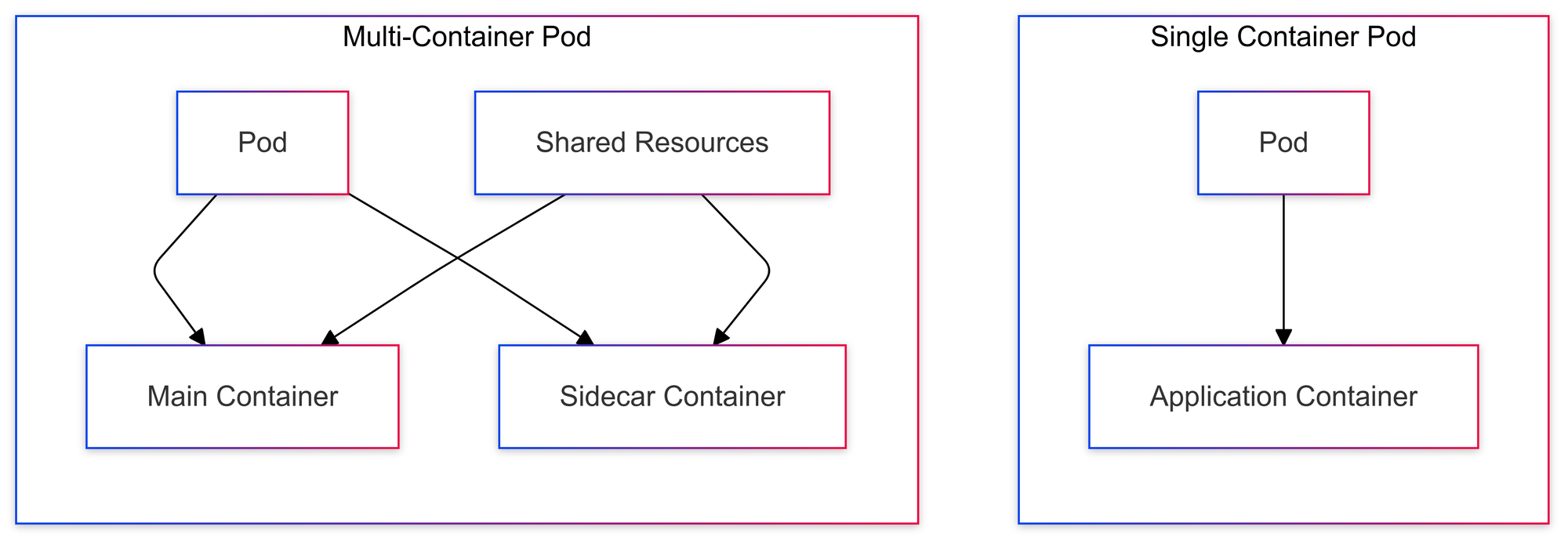

Single vs. Multi-Container Pods

- Single-container Pods (most common) – Each Pod hosts one application, simplifying deployment and management.

- Multi-container Pods – Used for tightly coupled applications, such as:

  - A web server container with a logging agent container.

  - A main application container with a sidecar for monitoring or security.

Even though containers in the same Pod share networking and storage, they remain individual entities with their own root filesystems and, by default, their own process namespaces. This allows for flexibility while maintaining isolation where needed.

Advanced Concepts: Pods, Nodes, and Resource Requests

A key aspect of managing Kubernetes workloads is resource allocation, which helps Pods get the CPU and memory they need without starving other Pods or overwhelming Nodes. Kubernetes lets you define resource requests and limits to manage this efficiently.

Resource Requests and Limits in Pods

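- Requests – The amount of CPU and memory reserved for a container; the scheduler places a Pod only on a Node with enough unrequested capacity to cover the Pod's requests.

- Limits – The maximum amount of CPU and memory a container can use. A container that exceeds its CPU limit is throttled; one that exceeds its memory limit may be terminated (OOM-killed).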

By setting these values, Kubernetes can:

✔ Schedule Pods efficiently across Nodes.

✔ Prevent resource starvation by ensuring fair allocation.

✔ Avoid overloading Nodes, keeping the cluster stable.

Properly configuring resource requests and limits ensures better performance, reliability, and cost efficiency in Kubernetes environments.
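Kubernetes also uses the requests and limits you set to assign each Pod a quality-of-service (QoS) class, which influences which Pods are evicted first when a Node runs short of resources. Per the documented rules, a Pod is Guaranteed when every container has CPU and memory limits equal to its requests, BestEffort when no container sets any CPU or memory request or limit, and Burstable otherwise; a limit given without a request is used as the request. The Python sketch below applies those rules to plain dicts loosely shaped like a container's `resources` field. `parse_qty` and `qos_class` are hypothetical helpers, and `parse_qty` understands only a subset of Kubernetes quantity suffixes.

```python
from decimal import Decimal

# A subset of Kubernetes quantity suffixes: binary IEC first, then milli and decimal SI.
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
    "m": Decimal("0.001"), "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
}


def parse_qty(q: str) -> Decimal:
    """Turn strings such as '500m', '2', '128Mi' or '1Gi' into plain numbers."""
    for suffix, factor in _SUFFIXES.items():  # two-letter suffixes are checked first
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    return Decimal(q)


def qos_class(containers: list[dict]) -> str:
    """Classify a Pod as Guaranteed, Burstable or BestEffort from its containers."""
    any_set = False
    guaranteed = True
    for c in containers:
        limits = {r: parse_qty(v) for r, v in c.get("limits", {}).items() if r in ("cpu", "memory")}
        requests = {r: parse_qty(v) for r, v in c.get("requests", {}).items() if r in ("cpu", "memory")}
        # A limit with no matching request makes Kubernetes use the limit as the request.
        requests = {**limits, **requests}
        any_set = any_set or bool(limits or requests)
        # Guaranteed needs CPU and memory limits, each equal to its request, in every container.
        if any(r not in limits or requests[r] != limits[r] for r in ("cpu", "memory")):
            guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


print(qos_class([{"limits": {"cpu": "1", "memory": "1Gi"}}]))        # Guaranteed
print(qos_class([{"requests": {"cpu": "250m", "memory": "128Mi"}}]))  # Burstable
print(qos_class([{}]))                                                # BestEffort
```

Comparing parsed quantities rather than raw strings matters: `"1"` and `"1000m"` describe the same CPU amount.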

Conclusion

Kubernetes Pods and Nodes are both essential, but they play very different roles. Nodes provide the infrastructure and computing power, while Pods serve as the smallest deployable unit, housing one or more containers.

The key to running Kubernetes effectively is balancing these two components—ensuring you have the right number of Pods for your workloads and enough Nodes to support them.