Kubernetes has become a standard platform for running complex applications that need to be scalable, resilient, and portable across clouds. But what makes it such a common choice for microservices?

In this guide, we'll talk about how Kubernetes makes managing microservices easier, with features like load balancing, service discovery, and container orchestration.

What is Microservices Architecture and Why Use Kubernetes?

In a microservices architecture, applications are split into smaller, self-contained units (microservices), each focusing on a specific functionality.

Unlike monolithic applications, which have all components tightly packed together, microservices allow each unit to be independently deployed, scaled, and updated. This modular setup lets teams work in parallel and ship a change to one service without redeploying the whole application.

Why Kubernetes for Microservices?

Kubernetes automates tasks that become hard to manage by hand once you run many services: rolling out new versions, scaling, scheduling workloads across machines, and recovering from failures (self-healing).

When a container crashes, Kubernetes restarts it automatically, which reduces downtime. It also provides built-in load balancing and service discovery, so microservices can find and reach each other without hard-coded addresses.
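Automatic restarts do not happen in a tight loop. According to the Kubernetes documentation, the kubelet restarts a repeatedly crashing container with an exponential back-off (10s, 20s, 40s, and so on) capped at five minutes, and resets that timer once the container has run for 10 minutes without problems; this is the CrashLoopBackOff status you see in kubectl get pods. The minimal Python sketch below models only that default schedule. The function name is our own, and the 10-minute reset is not modeled.

```python
def crashloop_delays(restarts: int, initial: int = 10, cap: int = 300) -> list[int]:
    """Seconds waited before each of the first `restarts` restarts.

    Models the documented default back-off: start at `initial` seconds,
    double after every crash, and never exceed `cap` (five minutes).
    The reset after 10 healthy minutes is deliberately not modeled.
    """
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)  # exponential growth, capped
    return delays


if __name__ == "__main__":
    print(crashloop_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

The cap is what keeps a permanently broken container from hammering the node while still letting a transient failure recover quickly.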

Simply put, Kubernetes brings the agility and resilience that a microservices architecture needs to thrive.

Key Components of Kubernetes Microservices Architecture

Let’s break down some core components that power microservices on Kubernetes:

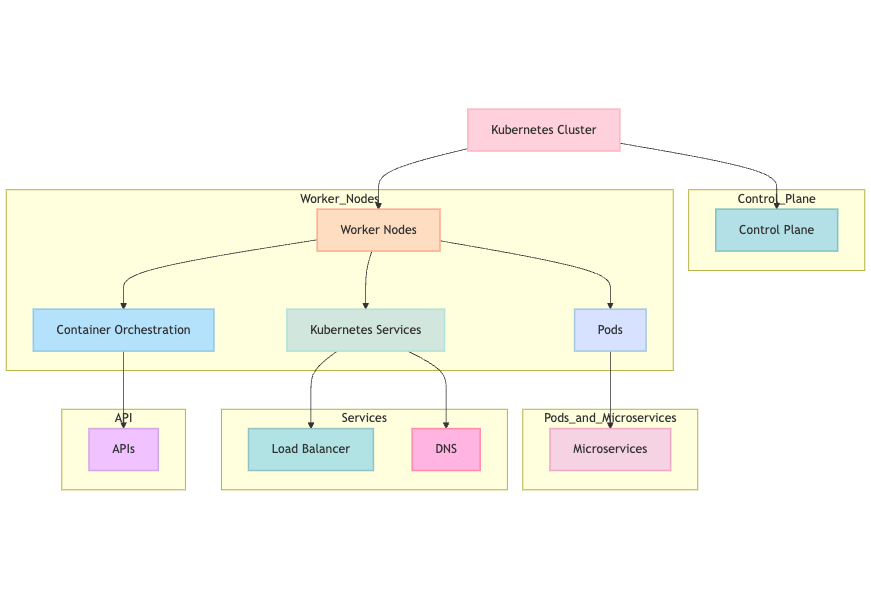

Kubernetes Cluster

At the heart of Kubernetes is the cluster: a group of nodes (physical or virtual machines) that run your applications. A Kubernetes cluster has two main parts:

- Control Plane: Runs the components (API server, scheduler, controllers, and the etcd datastore) that decide where workloads run and keep the cluster in its desired state.

- Worker Nodes: Where your application containers run. Each node can host many pods. Every pod gets its own IP address and runs isolated from the others, yet pods can still reach each other over the cluster network when needed.

Pods and Microservices

You might be thinking, “Is a pod a microservice?”

Not quite. Pods are the smallest deployable units in Kubernetes. A pod usually runs a single instance of a microservice in one main container, sometimes alongside helper (sidecar) containers that share its network and storage.

You can think of a pod as a wrapper that keeps your microservice running and connected to the rest of the app. To run a service as multiple instances, you create a Deployment, which keeps the number of pod replicas you ask for running and replaces any that fail.
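As a minimal sketch of that idea, the Python function below builds a Deployment manifest as a plain dictionary and prints it as JSON, which kubectl accepts alongside YAML. The service name orders, the image registry.example.com/orders:1.0, and port 8080 are hypothetical placeholders. The detail worth noticing is that spec.selector.matchLabels must match the pod template's labels: that is how the Deployment recognizes the replicas it owns.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Deployment that keeps `replicas` identical pods of one microservice running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                  # desired number of pods
            "selector": {"matchLabels": labels},   # must match the template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


# Hypothetical service, image, and port.
manifest = deployment("orders", "registry.example.com/orders:1.0", replicas=3, port=8080)
print(json.dumps(manifest, indent=2))
```

Scaling the service later is a matter of changing replicas and re-applying the manifest; the Deployment adds or removes pods to match.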

Kubernetes Services

Kubernetes services are critical for ensuring that pods (and, by extension, your microservices) can talk to each other.

A Service gives a group of pods a stable virtual IP address and DNS name, so other parts of your application can locate and connect to them even as individual pods are created and destroyed. Services also load-balance, distributing requests among the ready pods behind them for higher availability and reliability.
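Here is a hedged sketch of a Service for a hypothetical orders microservice in a hypothetical shop namespace. Its selector picks up every pod labeled app: orders, and port 80 on the Service forwards to port 8080 in the pods (both port numbers are assumptions). The helper shows the DNS name that cluster DNS assigns to the Service, assuming the default cluster.local domain.

```python
import json


def service(name: str, namespace: str, port: int, target_port: int) -> dict:
    """ClusterIP Service (the default type) for pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"app": name},  # must match the pods' labels
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def cluster_dns_name(name: str, namespace: str, domain: str = "cluster.local") -> str:
    """Fully qualified DNS name for a Service: <name>.<namespace>.svc.<domain>."""
    return f"{name}.{namespace}.svc.{domain}"


print(json.dumps(service("orders", "shop", port=80, target_port=8080), indent=2))
print(cluster_dns_name("orders", "shop"))  # orders.shop.svc.cluster.local
```

Pods in the same namespace can usually use the short name orders; pods elsewhere use the fully qualified form.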

Container Orchestration and APIs

As a container orchestration platform, Kubernetes manages the deployment, scaling, and operation of containers across a cluster.

APIs matter at two levels. Between microservices, well-defined APIs let services interact without needing to know about each other’s internals. Inside Kubernetes, every object (Deployments, ReplicaSets, Services, and so on) is a resource exposed through the Kubernetes API, which kubectl and other tools call to manage your cluster.
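To illustrate the Kubernetes API side: every resource lives at a predictable REST path. Core resources such as Services sit under /api/v1, while resources in named groups, such as apps/v1 Deployments, sit under /apis/<group>/<version>. The helper below (its name is our own) builds namespaced paths; against a live cluster you could fetch one with kubectl get --raw <path>.

```python
def resource_path(api_version: str, plural: str, namespace: str, name: str = "") -> str:
    """REST path for a namespaced Kubernetes resource (collection or single object)."""
    if "/" in api_version:
        # Named API group, e.g. "apps/v1" -> /apis/apps/v1
        group, version = api_version.split("/", 1)
        base = f"/apis/{group}/{version}"
    else:
        # Core ("legacy") group, e.g. "v1" -> /api/v1
        base = f"/api/{api_version}"
    path = f"{base}/namespaces/{namespace}/{plural}"
    return f"{path}/{name}" if name else path


# Hypothetical namespace "shop" and Deployment "orders".
print(resource_path("apps/v1", "deployments", "shop", "orders"))
print(resource_path("v1", "services", "shop"))
```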

How to Deploy Microservices in Kubernetes

Deploying microservices in Kubernetes starts with describing each component (Deployments, Services, ConfigMaps, and so on) in a YAML manifest. Each manifest declares the desired state: which container image to run, how many replicas, and which ports to expose.

Once the manifests are ready, apply them with kubectl apply -f <filename.yaml>. Kubernetes then works continuously to make the cluster match what the files describe.
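Because kubectl apply -f accepts JSON as well as YAML, a small script can generate manifests and bundle several objects into one file using the v1 List kind. This is a minimal sketch: the ConfigMap name orders-config and its LOG_LEVEL key are hypothetical, and the file is written to a temporary directory.

```python
import json
import tempfile
from pathlib import Path


def write_manifests(path: Path, objects: list[dict]) -> None:
    """Bundle objects into one v1 List so a single `kubectl apply -f` covers them all."""
    bundle = {"apiVersion": "v1", "kind": "List", "items": objects}
    path.write_text(json.dumps(bundle, indent=2))


# Hypothetical ConfigMap for an "orders" microservice.
config = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "orders-config"},
    "data": {"LOG_LEVEL": "info"},
}

out = Path(tempfile.mkdtemp()) / "orders.json"
write_manifests(out, [config])
print(f"kubectl apply -f {out}")
```

Generating manifests this way is optional; many teams keep hand-written YAML in version control or use a templating tool instead.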

Kubernetes Deployment Strategies

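Kubernetes supports several ways to roll out a new version:

- Rolling Updates: The default strategy for Deployments. New pods are started gradually and old ones are removed as replacements become ready, so users keep access throughout the rollout.

- Blue-Green Deployments: Not a built-in Deployment strategy, but a common pattern. Run the old (blue) and new (green) versions side by side, then point the Service at the new version by changing its label selector. Rolling back means pointing it back.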

Kubernetes also keeps a revision history for each Deployment. If a new version misbehaves, kubectl rollout undo deployment/<name> returns it to the previous revision, and a rollout whose new pods never become ready stops progressing instead of replacing all of the old, healthy pods. Together with self-healing, this limits the disruption a bad release can cause.
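The pace of a rolling update is governed by two Deployment fields, maxSurge and maxUnavailable, both 25% by default. Per the Deployment documentation, a percentage maxSurge rounds up and a percentage maxUnavailable rounds down. This sketch (our own helper, not a Kubernetes API) computes the resulting bounds on total pods and available pods during a rollout.

```python
import math


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple[int, int]:
    """Return (max total pods, min available pods) during a RollingUpdate.

    Percentages are resolved against `replicas`: maxSurge rounds up,
    maxUnavailable rounds down. Plain integers are used as-is.
    """
    def resolve(value, round_up: bool) -> int:
        if isinstance(value, str) and value.endswith("%"):
            raw = replicas * int(value[:-1]) / 100
            return math.ceil(raw) if round_up else math.floor(raw)
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(4))   # (5, 3)
print(rolling_update_bounds(10))  # (13, 8)
```

Setting maxUnavailable to 0 keeps full capacity during the rollout at the cost of needing room for the surge pods.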

Best Practices for Managing Microservices

- Namespace Segmentation: Group related microservices into namespaces so resource names don’t collide and so access policies and resource quotas can be scoped per team or environment.

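- ConfigMaps and Secrets: Keep configuration and credentials out of container images. Secrets are only base64-encoded by default, so restrict who can read them with RBAC and enable encryption at rest for sensitive data.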

- Load Balancing and Autoscaling: Use Kubernetes’ built-in load balancing and autoscaling to handle changes in traffic automatically.
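A minimal sketch of the ConfigMaps and Secrets practice: configuration and credentials live in separate objects, and the container loads them as environment variables through envFrom. The object names, the LOG_LEVEL and DB_PASSWORD keys, and the image are hypothetical. Note that the Secret's data field only base64-encodes values, which is why access control and encryption at rest still matter.

```python
import base64
import json


def config_map(name: str, values: dict[str, str]) -> dict:
    return {"apiVersion": "v1", "kind": "ConfigMap", "metadata": {"name": name}, "data": dict(values)}


def secret(name: str, values: dict[str, str]) -> dict:
    # Secret `data` values must be base64-encoded. This is encoding, not encryption.
    encoded = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "metadata": {"name": name},
            "type": "Opaque", "data": encoded}


# Container snippet (hypothetical image) that imports every key of both objects as env vars.
container = {
    "name": "orders",
    "image": "registry.example.com/orders:1.0",
    "envFrom": [
        {"configMapRef": {"name": "orders-config"}},
        {"secretRef": {"name": "orders-secrets"}},
    ],
}

cm = config_map("orders-config", {"LOG_LEVEL": "info"})
sec = secret("orders-secrets", {"DB_PASSWORD": "change-me"})
print(json.dumps([cm, sec], indent=2))
```

Because the image never contains these values, the same image can run in every environment with different ConfigMaps and Secrets.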

Common Architectures in Kubernetes

3-Tier Architecture in Kubernetes

One popular architecture for microservices in Kubernetes is the 3-tier model, which splits the application into three parts:

- Frontend (UI): This tier is user-facing and handles requests.

- Backend (Business Logic): Handles data processing and core functions.

- Database (Storage): Manages data storage and retrieval.

Each tier can run as a separate microservice within Kubernetes, allowing independent scaling and management.
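One way to express the three tiers is sketched below under illustrative assumptions: the frontend and backend run as Deployments behind LoadBalancer and ClusterIP Services, and the database runs as a StatefulSet behind a headless Service (clusterIP: None), which gives each database pod a stable DNS name. The app name, images, ports, and replica counts are hypothetical. The point is that each tier gets its own workload and Service, so each scales independently.

```python
import json

# tier: (workload kind, replicas, service port, how the Service is exposed)
# These choices are illustrative assumptions, not fixed rules.
TIERS = {
    "frontend": ("Deployment", 3, 80, "LoadBalancer"),  # reachable from outside
    "backend": ("Deployment", 2, 8080, "ClusterIP"),    # cluster-internal only
    "database": ("StatefulSet", 1, 5432, "Headless"),   # stable per-pod DNS
}


def tier_objects(app: str) -> list[dict]:
    """One workload plus one Service per tier, linked by app/tier labels."""
    objects = []
    for tier, (kind, replicas, port, exposure) in TIERS.items():
        name = f"{app}-{tier}"
        labels = {"app": app, "tier": tier}
        workload_spec = {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": tier,
                    "image": f"registry.example.com/{name}:1.0",  # hypothetical image
                    "ports": [{"containerPort": port}],
                }]},
            },
        }
        if kind == "StatefulSet":
            workload_spec["serviceName"] = name  # the StatefulSet's governing Service
        service_spec = {"selector": labels, "ports": [{"port": port}]}
        if exposure == "Headless":
            service_spec["clusterIP"] = "None"   # headless: DNS records per pod
        else:
            service_spec["type"] = exposure
        objects.append({"apiVersion": "apps/v1", "kind": kind,
                        "metadata": {"name": name}, "spec": workload_spec})
        objects.append({"apiVersion": "v1", "kind": "Service",
                        "metadata": {"name": name}, "spec": service_spec})
    return objects


print(json.dumps(tier_objects("shop"), indent=2))
```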

Docker and Kubernetes: Understanding the Connection

Are Docker and Kubernetes Microservices?

This is a common question. Docker and Kubernetes aren’t microservices themselves but widely used tools for running microservices.

Docker handles containerization: it packages an app’s code and dependencies into a container image. Kubernetes then runs containers from those images (through a container runtime such as containerd) and manages their deployment, scaling, and lifecycle, creating an environment where microservices can thrive.

What is a Docker Image?

A Docker image is a blueprint for creating containers. It includes the application code, libraries, dependencies, and runtime needed for your microservices.

You can think of it as a pre-packaged setup that makes sure your app runs the same way, no matter where it’s deployed. Images are stored in registries like Docker Hub, making them easy to pull into Kubernetes clusters.

Building and Deploying Docker Images in Kubernetes

To deploy microservices, you first build Docker images with docker build, then push them to a registry with docker push. You can then create Kubernetes Deployments that pull these images into your pods.
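The build, push, and deploy flow is easy to script. The sketch below assembles three commands: docker build, docker push, and kubectl set image (which updates a Deployment's container image and triggers a rolling update). By default it only prints them. The registry, repository, tag, and Deployment and container names are hypothetical; passing dry_run=False actually runs the commands, which requires Docker, kubectl, and registry credentials.

```python
import shlex
import subprocess


def release_commands(registry: str, repo: str, tag: str,
                     deployment: str, container: str) -> list[list[str]]:
    """Commands to build an image, push it, and roll it out to a Deployment."""
    image = f"{registry}/{repo}:{tag}"
    return [
        ["docker", "build", "-t", image, "."],  # build from ./Dockerfile
        ["docker", "push", image],              # upload to the registry
        ["kubectl", "set", "image", f"deployment/{deployment}", f"{container}={image}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


# Hypothetical names; prints the commands without executing them.
run(release_commands("registry.example.com", "orders", "1.4.2", "orders", "orders"))
```

Using a unique tag per build, rather than reusing latest, makes each rollout traceable and lets kubectl rollout undo return to a known image.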

Advanced Management and Best Practices for Kubernetes Microservices

Database Management in Kubernetes Microservices Architecture

Managing databases in a microservices setup can be challenging, especially when it comes to data consistency and storage. Kubernetes offers tools like StatefulSets for managing stateful applications that need stable storage and unique network identifiers.

Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) let a database’s data outlive any individual pod: when a StatefulSet pod is rescheduled, it reattaches to the same volume, so its data stays intact and accessible.
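A StatefulSet's identities are predictable, which is what makes it suitable for databases. Per the StatefulSet documentation, pods are named <statefulset>-<ordinal>, each gets a DNS name through the governing headless Service, and each volumeClaimTemplate yields a PVC named <template>-<statefulset>-<ordinal>. The sketch below builds a manifest and lists those names; the image, mount path, storage size, and the names db and shop are hypothetical.

```python
def statefulset(name: str, service: str, replicas: int,
                storage: str = "10Gi", claim: str = "data") -> dict:
    """StatefulSet whose pods each get their own PVC from a volumeClaimTemplate."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": service,  # governing headless Service
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": "registry.example.com/db:1.0",  # hypothetical image
                    "volumeMounts": [{"name": claim, "mountPath": "/var/lib/data"}],
                }]},
            },
            "volumeClaimTemplates": [{
                "metadata": {"name": claim},
                "spec": {"accessModes": ["ReadWriteOnce"],
                         "resources": {"requests": {"storage": storage}}},
            }],
        },
    }


def identities(name: str, service: str, namespace: str, replicas: int,
               claim: str = "data", domain: str = "cluster.local") -> list[tuple[str, str, str]]:
    """(pod name, per-pod DNS name, PVC name) for each ordinal."""
    return [
        (f"{name}-{i}", f"{name}-{i}.{service}.{namespace}.svc.{domain}", f"{claim}-{name}-{i}")
        for i in range(replicas)
    ]


for pod, dns, pvc in identities("db", "db", "shop", replicas=2):
    print(pod, dns, pvc)
```

If pod db-1 is rescheduled, it comes back with the same name and DNS entry and binds the same claim, data-db-1.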

Scaling and Load Balancing Microservices

Kubernetes lets you set how many replicas each microservice runs and, with a HorizontalPodAutoscaler, adjust that number automatically based on observed metrics such as CPU utilization.

Services then spread traffic across however many replicas are running, which keeps your application responsive as demand changes.
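The HorizontalPodAutoscaler's core rule, as documented, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when that ratio is within a tolerance of 1.0 (0.1 by default), and the result is clamped to the configured minimum and maximum. The Python sketch below reproduces only that arithmetic; the real controller also applies a scale-down stabilization window and other behaviors not modeled here.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """desired = ceil(current * metric / target), clamped to [min, max].

    If metric/target is within `tolerance` of 1.0, keep the current count
    to avoid flapping on small fluctuations.
    """
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, metric=90, target=60))   # 6: 4 * 1.5
print(desired_replicas(4, metric=63, target=60))   # 4: within tolerance
print(desired_replicas(2, metric=400, target=60))  # 10: capped at max_replicas
```

On a cluster with metrics-server installed, kubectl autoscale deployment orders --cpu-percent=60 --min=2 --max=10 would create an HPA with settings like these for a hypothetical orders Deployment.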

Access Control and Security

Namespaces support access control by giving you a boundary to scope permissions to. Use Role-Based Access Control (RBAC) Roles and RoleBindings to grant each user or service account only the permissions it needs within a namespace.

Kubernetes also supports secrets management, allowing you to keep sensitive data, like API keys and passwords, out of container images.
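As a hedged example of least-privilege RBAC, the sketch below builds a Role that can only read ConfigMaps in a hypothetical shop namespace, plus a RoleBinding that grants it to a hypothetical orders service account. The empty string in apiGroups denotes the core API group.

```python
import json

RBAC_API = "rbac.authorization.k8s.io/v1"


def role(name: str, namespace: str, resources: list[str], verbs: list[str]) -> dict:
    return {
        "apiVersion": RBAC_API,
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        # "" is the core API group (pods, services, configmaps, secrets, ...)
        "rules": [{"apiGroups": [""], "resources": resources, "verbs": verbs}],
    }


def bind_service_account(role_obj: dict, service_account: str) -> dict:
    """RoleBinding granting `role_obj` to a service account in the same namespace."""
    ns = role_obj["metadata"]["namespace"]
    role_name = role_obj["metadata"]["name"]
    return {
        "apiVersion": RBAC_API,
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{service_account}", "namespace": ns},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": ns}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": role_name},
    }


reader = role("config-reader", "shop", ["configmaps"], ["get", "list", "watch"])
binding = bind_service_account(reader, "orders")
print(json.dumps([reader, binding], indent=2))
```

After both are applied, kubectl auth can-i list configmaps --as=system:serviceaccount:shop:orders -n shop should answer yes, and the same check for secrets should answer no, assuming no other bindings grant that account extra permissions.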

Observability and Monitoring

Observability tools like Prometheus, Grafana, and Last9 are invaluable for monitoring Kubernetes microservices.

You can track key metrics, such as CPU usage, memory, and container restarts, and get real-time insight into your system’s health.

Monitoring your microservices gives you visibility, so if a microservice fails, you can quickly diagnose the issue and keep downtime minimal.
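For concrete queries, the sketch below builds Prometheus instant-query URLs (GET /api/v1/query) for CPU, memory, and restarts. It assumes Prometheus scrapes the cAdvisor container metrics (container_cpu_usage_seconds_total, container_memory_working_set_bytes) from the kubelets and that kube-state-metrics is installed for kube_pod_container_status_restarts_total; the namespace shop and the Prometheus address are hypothetical. The same PromQL expressions can be pasted into a Grafana panel.

```python
from urllib.parse import urlencode

NS = "shop"  # hypothetical namespace

QUERIES = {
    # CPU cores used per pod; container!="" drops the pod-level aggregate series
    "cpu": f'sum by (pod) (rate(container_cpu_usage_seconds_total{{namespace="{NS}", container!=""}}[5m]))',
    # Working-set memory per pod, in bytes
    "memory": f'sum by (pod) (container_memory_working_set_bytes{{namespace="{NS}", container!=""}})',
    # Container restarts per pod over the last hour (kube-state-metrics)
    "restarts": f'sum by (pod) (increase(kube_pod_container_status_restarts_total{{namespace="{NS}"}}[1h]))',
}


def instant_query_url(base_url: str, promql: str) -> str:
    """URL for Prometheus's instant-query endpoint with the PromQL URL-encoded."""
    return f"{base_url}/api/v1/query?{urlencode({'query': promql})}"


for label, promql in QUERIES.items():
    print(label, instant_query_url("http://prometheus.example:9090", promql))
```

A rising restarts value is often the first visible sign of a crash-looping microservice.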

Cloud-Native Microservices with Kubernetes

Managing Microservices Across Providers (AWS, Azure, GCP)

Kubernetes is cloud-agnostic, which means it works across AWS, Azure, GCP, and other providers. With services like EKS (Elastic Kubernetes Service on AWS), GKE (Google Kubernetes Engine), and AKS (Azure Kubernetes Service), you can run Kubernetes clusters on any cloud while integrating with their native tools.

Continuous Integration and Continuous Delivery (CI/CD) Pipelines

CI/CD pipelines speed up development while maintaining quality. By integrating CI/CD tools, you can automatically build, test, and deploy every change pushed to version control, making it easy for development teams to release new features. Platforms like GitHub Actions, Jenkins, and CircleCI help automate the entire process, from code to production.

Integrating Service Meshes and Observability Tools

Service meshes enhance traffic management between microservices, while observability tools provide in-depth metrics and logs. Using tools like Istio for service mesh and Prometheus for monitoring helps you handle complex architectures more effectively, ensuring stability and scalability.
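As one illustration of mesh traffic management, this sketch builds an Istio VirtualService that sends a weighted share of requests to each version (subset) of a hypothetical orders service, a common way to run a canary release. It assumes a DestinationRule elsewhere defines the v1 and v2 subsets by pod labels; the helper name and the check that weights sum to 100 are our own choices.

```python
import json


def weighted_virtual_service(host: str, weights: dict[str, int]) -> dict:
    """Split traffic for `host` across subsets, e.g. {"v1": 90, "v2": 10}.

    The subsets themselves must be defined in a matching DestinationRule.
    """
    if sum(weights.values()) != 100:
        raise ValueError("route weights must add up to 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": subset}, "weight": weight}
                    for subset, weight in weights.items()
                ]
            }],
        },
    }


# Hypothetical canary: 90% of traffic to v1, 10% to v2.
print(json.dumps(weighted_virtual_service("orders", {"v1": 90, "v2": 10}), indent=2))
```

Shifting the weights step by step, while watching error rates in your monitoring, moves traffic to the new version without redeploying anything.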

Conclusion

Kubernetes has become the backbone of many cloud-native applications, giving development teams the tools they need to build, scale, and manage microservices. Its self-healing, load balancing, and orchestration features make it well suited to the complex needs of microservices architectures.

FAQs

Is Kubernetes a microservice architecture?

No, Kubernetes itself isn’t a microservice architecture; rather, it’s a container orchestration platform that supports deploying, managing, and scaling microservices. It provides the infrastructure and tools to run microservices-based applications in a distributed environment.

How do I deploy microservices in Kubernetes?

Deploying microservices in Kubernetes involves defining each service as a deployment in YAML files and using kubectl commands to apply configurations. Kubernetes handles scaling, load balancing, and communication between microservices.

What is the 3-tier architecture in Kubernetes?

The 3-tier architecture splits an application into three layers: frontend (UI), backend (business logic), and database. In Kubernetes, each tier can be run as separate microservices with dedicated pods and services to manage communication and scaling.

Is a pod considered a microservice?

Not exactly. A pod is the smallest deployable unit in Kubernetes and can contain one or more containers. Each pod often hosts one instance of a microservice, but it can also contain supporting containers as needed.

Why is Kubernetes a good fit for microservices?

Kubernetes simplifies complex tasks like scaling, load balancing, and service discovery, making it easier to manage microservices. Its self-healing and automation features help applications stay resilient and adapt to changes in demand.

What is microservices architecture used for?

Microservices architecture is used to build flexible, scalable applications by breaking down large applications into smaller, independently deployable services. It’s commonly used in cloud-native applications to improve development speed and resilience.

How are APIs used in microservices architecture?

APIs serve as communication bridges between microservices, enabling each service to function independently while remaining connected. They define how services interact, share data, and trigger operations.

Are Docker and Kubernetes the same as microservices?

No, Docker is a containerization platform, and Kubernetes is an orchestration tool. Both support microservices by packaging and managing individual service components, but neither is a microservice itself.

What is a Docker image?

A Docker image is a pre-packaged setup that includes an application, its dependencies, and the configuration needed to create containers. Kubernetes uses these images to deploy services within its cluster.

Who can create or update resources within a namespace?

Permissions within a Kubernetes namespace are managed through Role-Based Access Control (RBAC). Only users or service accounts with the right roles and permissions can create or update resources within a namespace.

How do Kubernetes and microservices work together?

Kubernetes provides the infrastructure to deploy and manage microservices, handling container orchestration, scaling, and inter-service communication. It makes managing a distributed microservices application much simpler.

What are the best practices for managing microservices in Kubernetes?

Use namespaces for segmentation, ConfigMaps and Secrets for secure configuration management, autoscaling for handling traffic changes, and service meshes to manage traffic between services. Monitoring tools like Prometheus and Grafana are also essential for observability.

How do you handle database management in Kubernetes microservices architecture?

For databases, Kubernetes uses StatefulSets to maintain stable network identities and persistent volumes for data storage. These tools ensure database persistence and availability even if pods restart or move within the cluster.