After years of working with monitoring solutions at both startups and big-name companies, I've realized something important: knowing why you’re doing something is just as crucial as knowing how to do it.

When we talk about host metrics monitoring, it’s not just about gathering data; it’s about figuring out what that data means and why it’s important for you.

In this guide, I want to help you make sense of host metrics monitoring with OpenTelemetry Collector. We'll explore how these metrics can give you valuable insights into your systems and help you keep everything running smoothly.

What are Host Metrics?

Host metrics represent essential performance indicators of a server’s operation. These metrics help monitor resource utilization and system behavior, allowing for effective infrastructure management.

Key host metrics include:

- CPU Utilization: The percentage of CPU capacity being used.

- Memory Usage: The amount of RAM consumed by the system.

- Filesystem: Disk space availability and I/O operations.

- Network Interface: Data transfer rates and network connectivity.

- Process CPU: CPU usage of individual system processes.

These metrics provide critical insights into the performance and operational status of a host.
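As a rough orientation, the list above maps onto the scrapers of the Collector's hostmetrics receiver (introduced in the next section). This is a sketch rather than a complete configuration; the metric names in the comments are the ones I'd expect each scraper to emit by default, and they can vary between Collector versions.

```yaml
# Sketch: which hostmetrics scraper covers which metric family
receivers:
  hostmetrics:
    scrapers:
      cpu: {}         # CPU: system.cpu.time (system.cpu.utilization is opt-in)
      memory: {}      # Memory: system.memory.usage
      filesystem: {}  # Disk space: system.filesystem.usage
      disk: {}        # Disk I/O: system.disk.io, system.disk.operations
      network: {}     # Network: system.network.io
      process: {}     # Per-process: process.cpu.time, process.memory.usage
```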

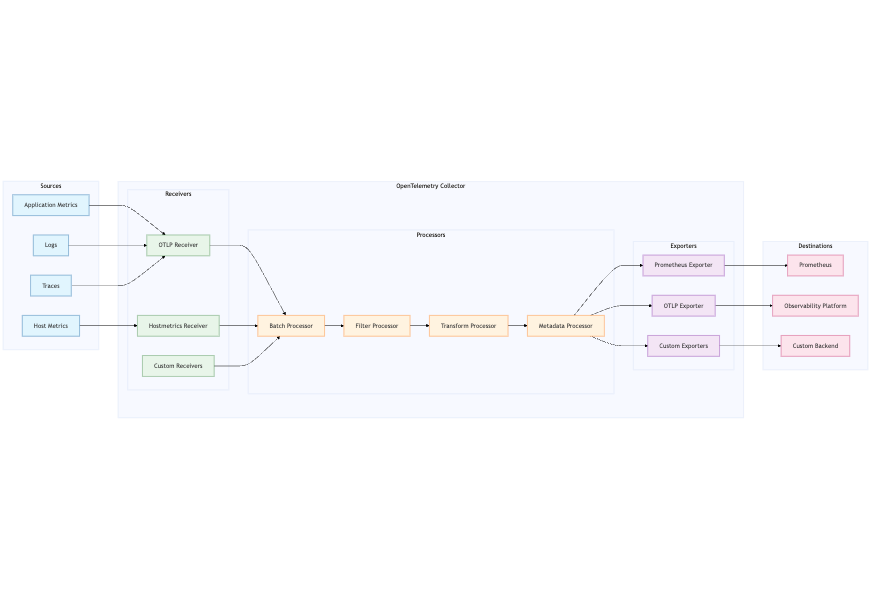

The OpenTelemetry Collector Architecture

The OpenTelemetry Collector operates on a pipeline architecture, designed to collect, process, and export telemetry data efficiently.

Key Components

- Receivers: These are the entry points for data collection, responsible for gathering metrics, logs, and traces from various sources. For host metrics, we use the hostmetrics receiver.

- Processors: These components handle data transformation, enrichment, or aggregation. They can apply modifications such as batching, filtering, or adding metadata before forwarding the data to the exporters.

- Exporters: These send the processed telemetry data to a destination, such as Prometheus, OTLP (OpenTelemetry Protocol), or other monitoring and observability platforms.

Data flows through the pipeline in one direction: from receivers, through processors, to exporters.
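A minimal skeleton of how that wiring appears in a config file is sketched below. The specific components (hostmetrics, batch, prometheus) are only placeholders to show the shape. A component that is defined but not referenced in a pipeline under service does not run.

```yaml
# Skeleton only: shows how component definitions are wired into a pipeline
receivers:
  hostmetrics:            # entry point: scrapes metrics from the host
    scrapers:
      cpu: {}

processors:
  batch: {}               # groups data points before export

exporters:
  prometheus:             # destination: exposes metrics for scraping
    endpoint: "0.0.0.0:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]   # data enters here
      processors: [batch]        # applied in the order listed
      exporters: [prometheus]    # data leaves here
```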

Implementing OpenTelemetry in Your Environment

1. Basic Setup (Development Environment)

To start with a simple local setup, here’s a minimal configuration that I use for development. It includes the hostmetrics receiver for collecting basic metrics like CPU and memory usage, and exposes them on an endpoint that Prometheus can scrape.

```yaml
# config.yaml - Development Setup
receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu: {}
      memory: {}

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: "host-monitor-dev"
      - action: insert
        key: env
        value: "development"

exporters:
  prometheus:
    endpoint: "0.0.0.0:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource]
      exporters: [prometheus]
```

Start the OpenTelemetry Collector with debug logging to ensure everything works correctly:

```shell
# Start the collector with debug logging
otelcol-contrib --config config.yaml --set=service.telemetry.logs.level=debug
```
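The prometheus exporter only exposes an endpoint; a Prometheus server still has to scrape it. A minimal scrape job might look like the sketch below. The job name is made up for illustration, and the target assumes Prometheus runs on the same machine as the collector.

```yaml
# prometheus.yml - hypothetical scrape job for the development collector
scrape_configs:
  - job_name: "otel-collector-hostmetrics"  # illustrative name
    scrape_interval: 30s                    # matches the 30s collection_interval
    static_configs:
      - targets: ["localhost:8889"]         # the prometheus exporter endpoint
```

Scraping more often than the collection interval only returns the same values again, so matching the two is a reasonable starting point.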

2. Production Configuration

For production, a more robust setup is recommended, incorporating multiple exporters and enhanced metadata for better observability. This example adds more detailed metrics and uses environment-specific variables.

```yaml
# config.yaml - Production Setup
receivers:
  hostmetrics:
    collection_interval: 10s
    scrapers:
      cpu:
        metrics:
          system.cpu.utilization:
            enabled: true
      memory:
        metrics:
          system.memory.utilization:
            enabled: true
      disk: {}
      filesystem: {}
      network:
        metrics:
          system.network.io:
            enabled: true

processors:
  resource:
    attributes:
      - action: insert
        key: service.name
        value: ${SERVICE_NAME}
      - action: insert
        key: environment
        value: ${ENV}
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s

exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
  prometheus:
    endpoint: "localhost:8889"

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [resource, resourcedetection]
      exporters: [otlp, prometheus]
```

This production configuration collects metrics every 10 seconds and exports them both to a Prometheus scrape endpoint and to an OTLP-compatible endpoint, which is useful for integrating with larger observability platforms. SERVICE_NAME, ENV, OTLP_ENDPOINT, and OTLP_INSECURE are read from the collector's environment, so set them before starting it.

3. Kubernetes Deployment

For Kubernetes environments, deploying the OpenTelemetry Collector as a DaemonSet ensures that metrics are gathered from every node in the cluster. Below is a configuration for deploying the collector on Kubernetes.

ConfigMap for Collector Configuration

The ConfigMap contains the collector configuration, defining how the metrics are scraped and where they are exported.

```yaml
# kubernetes/collector-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-collector-config
data:
  config.yaml: |
    receivers:
      hostmetrics:
        collection_interval: 30s
        scrapers:
          cpu: {}
          memory: {}
          disk: {}
          filesystem: {}
          network: {}
          process: {}

    processors:
      resource:
        attributes:
          - action: insert
            key: cluster.name
            value: ${CLUSTER_NAME}
      resourcedetection:
        # resourcedetection has no "kubernetes" detector; k8snode reads node
        # metadata and expects K8S_NODE_NAME plus RBAC permission to read nodes.
        detectors: [k8snode]
        timeout: 5s

    exporters:
      otlp:
        endpoint: ${OTLP_ENDPOINT}
        tls:
          insecure: false
          cert_file: /etc/certs/collector.crt
          key_file: /etc/certs/collector.key

    service:
      pipelines:
        metrics:
          receivers: [hostmetrics]
          processors: [resource, resourcedetection]
          exporters: [otlp]
```

This ConfigMap defines the receivers, processors, and exporters needed to collect host metrics from the nodes and send them to an OTLP endpoint. The resource processor adds the cluster name, while the resourcedetection processor uses the k8snode detector to gather node-specific metadata. The TLS settings assume a client certificate and key are mounted at /etc/certs.

DaemonSet for Collector Deployment

The DaemonSet ensures that one instance of the collector runs on every node in the cluster.

```yaml
# kubernetes/daemonset.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: otel-collector
spec:
  selector:
    matchLabels:
      app: otel-collector
  template:
    metadata:
      labels:
        app: otel-collector
    spec:
      containers:
        - name: otel-collector
          # Consider pinning a specific version instead of latest in production
          image: otel/opentelemetry-collector-contrib:latest
          # Point the collector at the mounted file explicitly
          args: ["--config=/etc/otelcol/config.yaml"]
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: config
              mountPath: /etc/otelcol/config.yaml
              subPath: config.yaml
          env:
            # The Downward API has no cluster-name field (metadata.name would
            # resolve to the pod name), so set the value explicitly.
            - name: CLUSTER_NAME
              value: "your-cluster-name"
            # Node name, for node-level resource detection
            - name: K8S_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: OTLP_ENDPOINT
              value: "your-otlp-endpoint"
      volumes:
        - name: config
          configMap:
            name: otel-collector-config
```

In this DaemonSet configuration:

- The otel-collector container is deployed on every node.

- The CLUSTER_NAME environment variable is set explicitly, because the Downward API exposes pod fields such as the pod name and node name but not the cluster name. K8S_NODE_NAME is taken from the node the pod is scheduled on.

- Metrics are exported to the specified OTLP endpoint, making it compatible with cloud-based or self-hosted observability backends.

This setup collects host metrics from every node in a Kubernetes cluster, which suits large-scale environments.
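One caveat worth sketching: inside a container, the hostmetrics receiver sees the container's own view of /proc and the filesystem unless the host's root is mounted in and the receiver is pointed at it. The fragments below are an assumption-laden sketch to merge into the manifests above. The /hostfs mount path and the hostfs volume name are conventions chosen here, not requirements, and the process scraper may additionally need access to the host PID namespace to see host processes.

```yaml
# Sketch only: fragments to merge into the DaemonSet and ConfigMap above.

# 1) DaemonSet pod spec additions: mount the host root read-only
spec:
  template:
    spec:
      containers:
        - name: otel-collector
          volumeMounts:
            - name: hostfs            # hypothetical volume name
              mountPath: /hostfs
              readOnly: true
              mountPropagation: HostToContainer
      volumes:
        - name: hostfs
          hostPath:
            path: /
---
# 2) Collector config addition (inside the ConfigMap's config.yaml)
receivers:
  hostmetrics:
    root_path: /hostfs   # read the host's /proc, /sys, etc. from the mount
```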

Common Pitfalls and Solutions

When implementing the OpenTelemetry Collector in a production environment, there are several common challenges you might encounter. Here’s a look at these pitfalls along with solutions to address them.

1. High CPU Usage in Production

Issue: If you notice high CPU utilization from the collector itself, it may be due to a short collection interval that generates excessive load.

Solution: Adjust the collection interval to reduce the frequency of data collection, allowing the collector to operate more efficiently.

```yaml
receivers:
  hostmetrics:
    collection_interval: 60s # Raised from the 10s in the production example; the receiver's default is 1m
```

2. Missing Permissions on Linux

Issue: When running the OpenTelemetry Collector without root privileges, you might encounter permission errors, particularly when trying to access disk metrics.

Solution: Grant the necessary capabilities to the collector binary to allow it to access the required system resources.

```shell
# Add capabilities for disk metrics
sudo setcap cap_dac_read_search=+ep /usr/local/bin/otelcol-contrib
```

3. Memory Leaks in Long-Running Instances

Issue: In some cases, long-running instances of the collector can show steadily growing memory usage that looks like a leak, especially with a long processor chain.

Solution: Add the memory_limiter processor to cap memory usage, and batch data so that it is exported in fewer, larger requests.

```yaml
processors:
  # List memory_limiter first in the pipeline's processors so it can
  # refuse data before other processors allocate memory for it.
  memory_limiter:
    check_interval: 5s
    limit_mib: 150
  batch:
    timeout: 10s
    send_batch_size: 1024
```

Best Practices from Production

When deploying the OpenTelemetry Collector, adhering to best practices can significantly improve the efficiency and reliability of your monitoring setup. Here are some recommendations based on production experience:

1. Resource Attribution

Always use the resource detection processor to automatically identify and enrich telemetry data with cloud metadata. This enhances the context of the metrics collected, making it easier to understand the performance and health of your applications within the infrastructure.

```yaml
processors:
  resourcedetection:
    detectors: [env, system, gcp, azure]
    timeout: 2s
```

2. Monitoring the Monitor

Set up alerting mechanisms on the collector's own metrics. By monitoring the health and performance of the collector itself, you can quickly identify issues before they affect your overall observability stack.
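As a starting point, here is a sketch of Prometheus alerting rules on the collector's self-reported metrics. It assumes Prometheus already scrapes the collector's internal metrics endpoint (port 8888 by default in many versions). The rule names, thresholds, and durations are illustrative, and the metric names are the ones I'd expect from many collector releases; some versions append suffixes such as _total, so check what your collector actually exposes.

```yaml
# collector-alerts.yml - hypothetical Prometheus alerting rules
groups:
  - name: otel-collector-health
    rules:
      - alert: CollectorExportFailures
        # Fires when the collector keeps failing to send metric points
        expr: rate(otelcol_exporter_send_failed_metric_points[5m]) > 0
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "Collector on {{ $labels.instance }} is failing to export metrics"

      - alert: CollectorHighMemory
        # 150 MiB threshold is illustrative; align it with your memory limits
        expr: otelcol_process_memory_rss > 150 * 1024 * 1024
        for: 15m
        labels:
          severity: warning
        annotations:
          summary: "Collector on {{ $labels.instance }} memory usage is high"
```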

3. Graceful Degradation

Configure timeout and retry policies for exporters to ensure that transient issues don’t lead to data loss. Implementing these policies allows the system to handle temporary failures gracefully without impacting the overall monitoring setup.

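```yaml
exporters:
  otlp:
    endpoint: ${OTLP_ENDPOINT}
    tls:
      insecure: ${OTLP_INSECURE}
    timeout: 10s
    retry_on_failure:
      enabled: true
      initial_interval: 5s    # wait before the first retry
      max_interval: 30s       # upper bound on the backoff between retries
      max_elapsed_time: 300s  # stop retrying a batch after this long
```

4. Versioning and Upgrades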

Regularly update the OpenTelemetry Collector to leverage the latest features, improvements, and bug fixes. Always test new versions in a staging environment before rolling them out to production to ensure compatibility with your existing setup.

5. Configuration Management

Maintain a version-controlled repository for your configuration files. This allows for easier tracking of changes, rollbacks when necessary, and collaboration across teams.

Conclusion

Getting a handle on host metrics monitoring with OpenTelemetry is like having a roadmap for your system’s performance. With the right setup, you’ll not only collect valuable data but also gain insights that can help you make smarter decisions about your infrastructure.

FAQs

What does an OpenTelemetry Collector do?

The OpenTelemetry Collector is like a central hub for telemetry data. It receives, processes, and exports logs, metrics, and traces from various sources, making it easier to monitor and analyze your systems without being tied to a specific vendor.

What are host metrics?

Host metrics are performance indicators that give you a glimpse into how your server is doing. They include things like CPU utilization, memory usage, disk space availability, network throughput, and the CPU usage of individual processes. These metrics are essential for keeping an eye on resource utilization and ensuring your infrastructure runs smoothly.

What is the difference between telemetry and OpenTelemetry?

Telemetry is the general term for collecting and sending data from remote sources to a system for monitoring and analysis. OpenTelemetry, on the other hand, is a specific framework with APIs designed for generating, collecting, and exporting telemetry data—logs, metrics, and traces—in a standardized way. It provides developers with the tools to instrument their applications effectively.

Is the OpenTelemetry Collector observable?

Absolutely! The OpenTelemetry Collector is observable itself. It generates its own telemetry data, such as metrics and logs, that you can monitor to evaluate its performance and health. This includes tracking things like resource usage, processing latency, and error rates, ensuring it operates effectively.

What is the difference between OpenTelemetry Collector and Prometheus?

While both are important in observability, they play different roles. The OpenTelemetry Collector acts as a data pipeline, collecting, processing, and exporting telemetry data. Prometheus is a monitoring and alerting toolkit specifically designed for storing and querying time-series data. Prometheus can scrape the metrics that the collector's prometheus exporter exposes, and the collector can also push metrics to Prometheus-compatible backends through its remote write exporter.

How do you configure the OpenTelemetry Collector to monitor host metrics?

To configure the OpenTelemetry Collector for host metrics monitoring, set up a hostmetrics receiver in the configuration file. Specify the collection interval and the types of metrics you want to collect (like CPU, memory, disk, and network). Then, configure processors and exporters to handle and send the collected data to your chosen monitoring platform.

How do you set up HostMetrics monitoring with the OpenTelemetry Collector?

Setting up HostMetrics monitoring is straightforward. Create a configuration file that includes the HostMetrics receiver, define the metrics you want to collect, and specify an exporter to send the data to your monitoring solution (like Prometheus). Once your configuration is ready, start the collector with the file you created.