This piece is part of a series on high cardinality — what it means, where it shows up in real systems, how it affects your infrastructure and database operations, and why it’s worth embracing rather than looking for ways to minimize it.

High cardinality data gives you the kind of detail that aggregated metrics often miss. It’s the difference between seeing an average response time of 200ms and knowing that VIP customers are timing out at 2 seconds while bulk operations are cruising at 50ms. That level of granularity helps you identify genuine issues and take action on them.

This is exactly why averages can be misleading. When you aggregate data, problems get buried in the overall numbers. A payment service might show healthy average performance, while critical customers in specific regions are experiencing failures.
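To see how an average can hide a failing segment, here is a minimal sketch. The request mix is hypothetical, chosen so the numbers line up with the 200ms, 2 second, and 50ms figures above.

```python
# Hypothetical request latencies in milliseconds, tagged by customer segment.
requests = [("bulk", 50)] * 12 + [("vip", 2000)]

latencies = [latency for _, latency in requests]
overall_avg = sum(latencies) / len(latencies)

# Group latencies by segment to see what the single average hides.
by_segment = {}
for segment, latency in requests:
    by_segment.setdefault(segment, []).append(latency)

segment_avg = {
    segment: sum(values) / len(values) for segment, values in by_segment.items()
}

print(f"overall: {overall_avg:.0f} ms")
for segment, avg in segment_avg.items():
    print(f"{segment}: {avg:.0f} ms")
```

The overall figure comes out at 200 ms, which looks healthy, while the per-segment view shows the VIP request at 2000 ms.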

You’ve probably seen the signs: slow dashboards, exploding label sets, rising storage costs, and queries that feel heavier than they should. These aren’t just isolated performance issues — they’re often symptoms of high cardinality creeping into your telemetry data.

So what exactly is cardinality, and why does it have such a big impact on your observability stack?

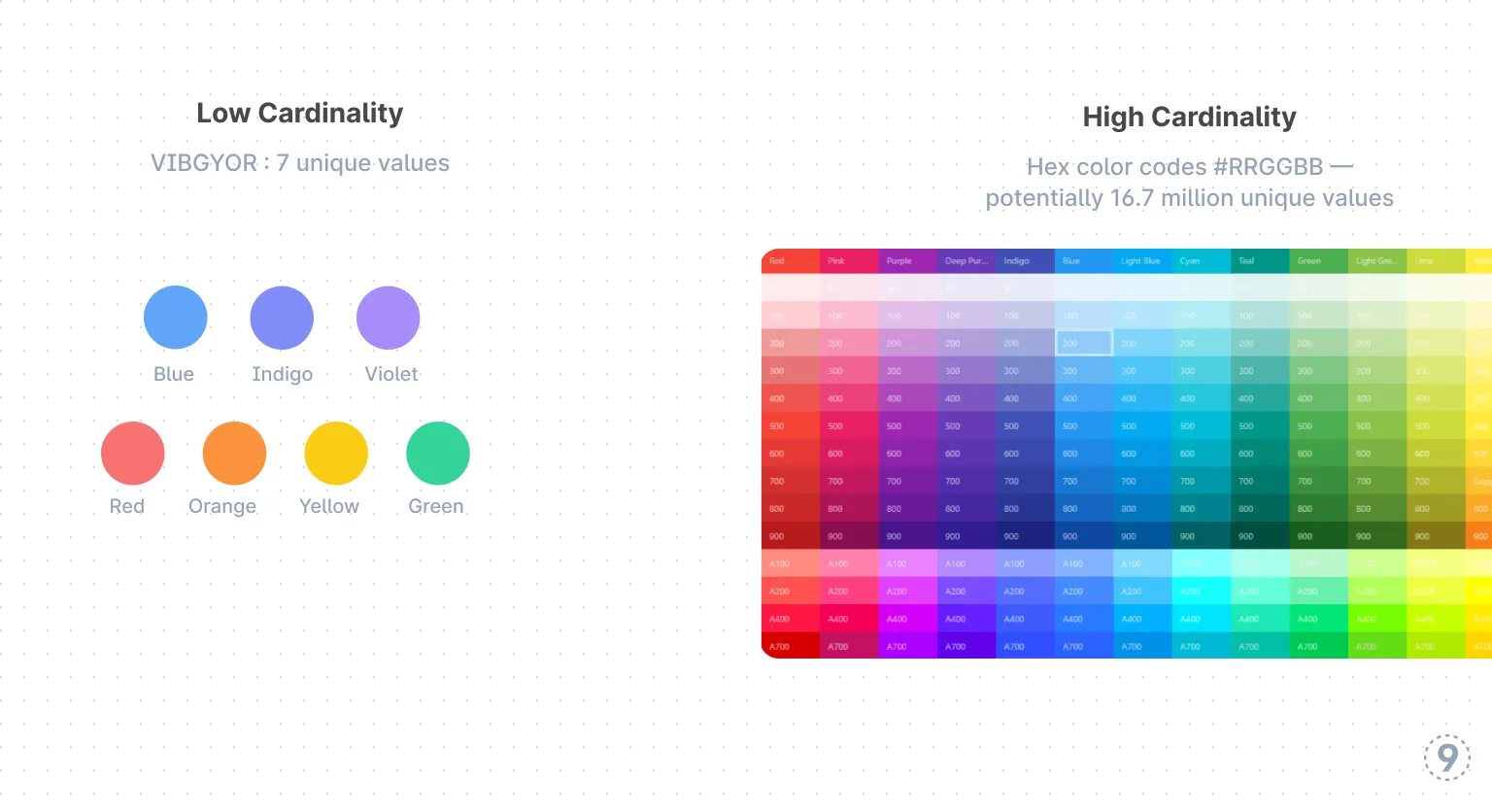

At its core, cardinality refers to the number of unique values or elements in a set. The higher the number of unique elements, the higher the cardinality.

In databases and monitoring systems, cardinality refers to the number of unique values in a specific field or column. Consider a user authentication system: tracking just success/failure gives you low cardinality (2 values), but tracking by user_type, region, device_type, and feature_flag creates thousands of unique combinations. That’s high cardinality.

The difference is between knowing “login works 99% of the time” versus “enterprise users in APAC are experiencing 15% failure rates on mobile during peak hours.”

Example:

- Low cardinality: The basic colors of the rainbow (VIBGYOR) — just 7 unique values.

- High cardinality: Hex color codes (#FFFFFF, #000000, etc.), potentially 16.7 million unique values.

In a database context:

- Low cardinality fields: Gender (typically few options), country codes, status indicators like “active/inactive.”

- Medium cardinality fields: Cities, product categories, age groups.

- High cardinality fields: Email addresses, user IDs, IP addresses, timestamps, session IDs.

For instance, in a user database, the “country” field might have at most 195 unique values, while the “user_id” field could have millions.
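Measuring cardinality is just counting distinct values per field, the same idea as `COUNT(DISTINCT column)` in SQL. A small sketch over made-up records (the rows and the `cardinality` helper are illustrative, not from any particular system):

```python
# Hypothetical user records; field names mirror the examples above.
users = [
    {"user_id": 1, "country": "IN", "status": "active"},
    {"user_id": 2, "country": "US", "status": "active"},
    {"user_id": 3, "country": "IN", "status": "inactive"},
    {"user_id": 4, "country": "DE", "status": "active"},
    {"user_id": 5, "country": "US", "status": "active"},
]

def cardinality(rows, field):
    """Number of distinct values observed for one field."""
    return len({row[field] for row in rows})

cardinalities = {field: cardinality(users, field) for field in users[0]}
print(cardinalities)  # {'user_id': 5, 'country': 3, 'status': 2}
```

Even in five rows, user_id is unique per row while status stays at two values. That gap widens as the table grows.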

In monitoring systems, high cardinality creates operational challenges:

- Prometheus handles metrics well until cardinality spikes. In environments like Kubernetes, exploding label combinations (user_id, pod_name, container_id) lead to slow queries, memory strain, and instability. Adding more hosts doesn’t fix it on its own.

- With Datadog, high cardinality increases costs, as each unique metric-tag combination counts as a separate time series. As cardinality grows, you may hit higher billing tiers, especially with custom metrics.
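Both points come down to the same rule: a time series is identified by its metric name plus its full set of labels or tags. The sketch below counts series from a handful of hypothetical samples. The metric name and the `count_series` helper are assumptions made for illustration.

```python
# Hypothetical samples: (metric name, labels). Label names follow the text above.
samples = [
    ("http_requests_total", {"pod_name": "api-1", "user_id": "u1"}),
    ("http_requests_total", {"pod_name": "api-1", "user_id": "u2"}),
    ("http_requests_total", {"pod_name": "api-2", "user_id": "u1"}),
    ("http_requests_total", {"pod_name": "api-1", "user_id": "u1"}),  # same series again
]

def series_key(metric, labels):
    """A series is identified by its metric name plus its full label set."""
    return (metric, tuple(sorted(labels.items())))

def count_series(samples, drop=()):
    """Count distinct series, optionally ignoring some labels."""
    keys = set()
    for metric, labels in samples:
        kept = {k: v for k, v in labels.items() if k not in drop}
        keys.add(series_key(metric, kept))
    return len(keys)

print(count_series(samples))                    # 3
print(count_series(samples, drop={"user_id"}))  # 2
```

Dropping user_id shrinks the series count, which is exactly the trade-off these tools push you toward: fewer series, less detail.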

Why Legacy Tools Struggle with Dimensional Data

Many legacy systems struggle with the granular telemetry that modern architectures naturally generate. This often creates a tension between having rich data and maintaining system performance.

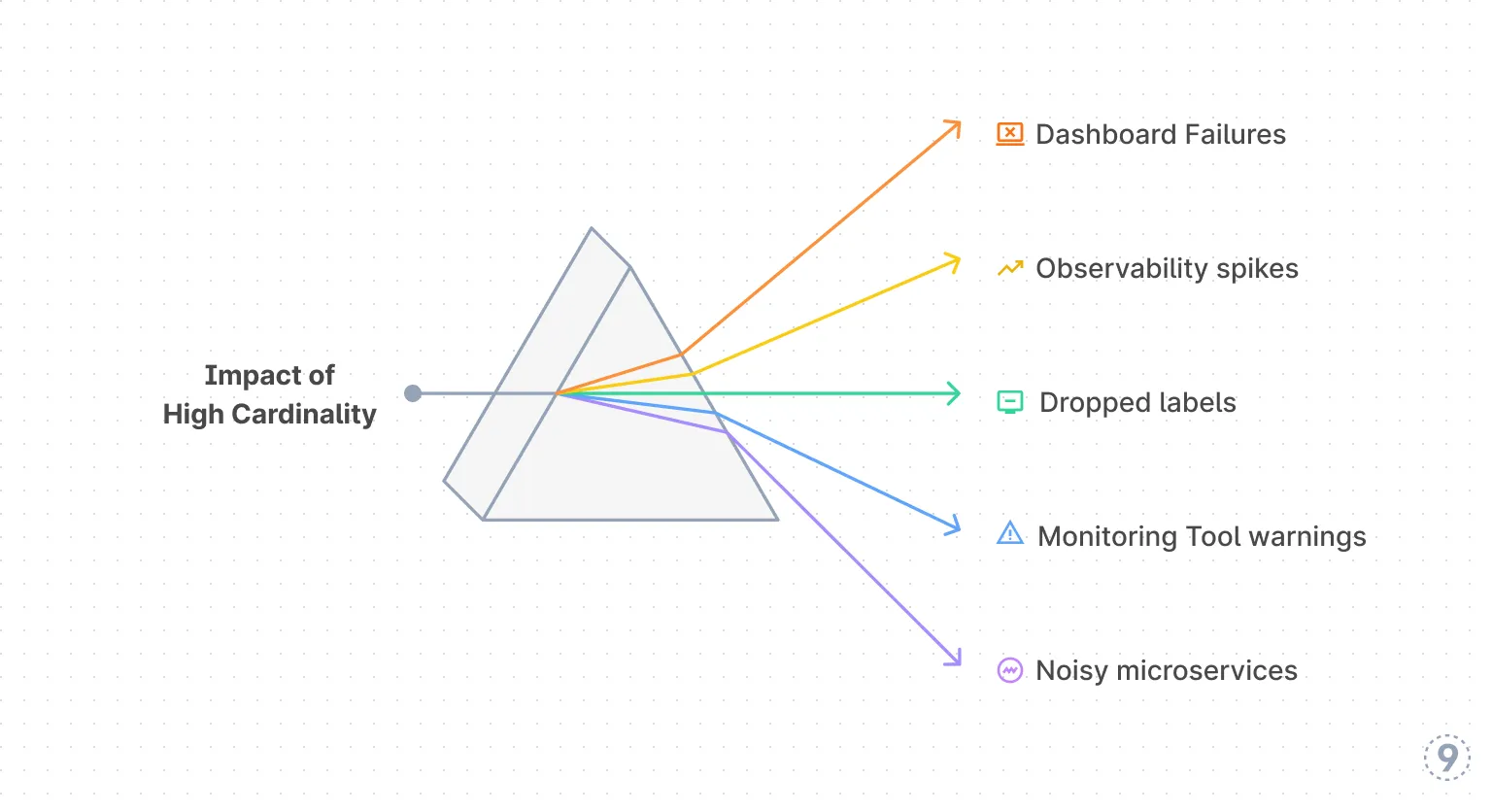

You might recognize these symptoms:

- Dashboards time out or fail to load when using filters like user_id, session_id, or feature_flag

- You see spikes in observability bills without any clear traffic increase

- You’ve had to drop labels in Prometheus or Datadog to avoid memory issues or hitting quotas

- Your monitoring tool warns you: “Too many time series.”

- You had to create a second monitoring system to manage noisy microservices

These challenges stem more from tooling limitations than from the nature of dimensional data.

Let’s understand them more closely.

Database Performance Challenges

When dealing with high cardinality data, databases can struggle with:

- Index size: More unique values mean larger indexes

- Query performance: Aggregations such as GROUP BY or DISTINCT over high cardinality fields produce many groups and gain less from indexes than single-value lookups do

- Storage requirements: More unique values often mean more storage space

A classic example is deciding whether to index a field like “gender” versus a field like “email_address”. An index on the low cardinality “gender” field has only a handful of distinct keys, but each value matches a large share of the table, so a B-tree index on it narrows results very little and the query planner will often skip it. An index on a high cardinality field like “email_address” is highly selective for point lookups, but it can grow almost as large as the table itself, which costs memory, storage, and write throughput.
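One way to put numbers on these two fields is selectivity: distinct values divided by total rows. This is a rough sketch over a hypothetical 1,000-row table, not how any specific database computes its statistics.

```python
def selectivity(values):
    """Distinct values divided by total rows; 1.0 means every row is unique."""
    return len(set(values)) / len(values)

# Hypothetical 1,000-row table.
gender = ["F", "M"] * 500
email = [f"user{i}@example.com" for i in range(1000)]

print(selectivity(gender))  # 0.002
print(selectivity(email))   # 1.0

# Average number of rows a single-value filter has to return.
print(len(gender) / len(set(gender)))  # 500.0
print(len(email) / len(set(email)))    # 1.0
```

A filter on one gender value still returns about half the table here, while a filter on one email address returns a single row from an index that has to hold 1,000 distinct keys.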

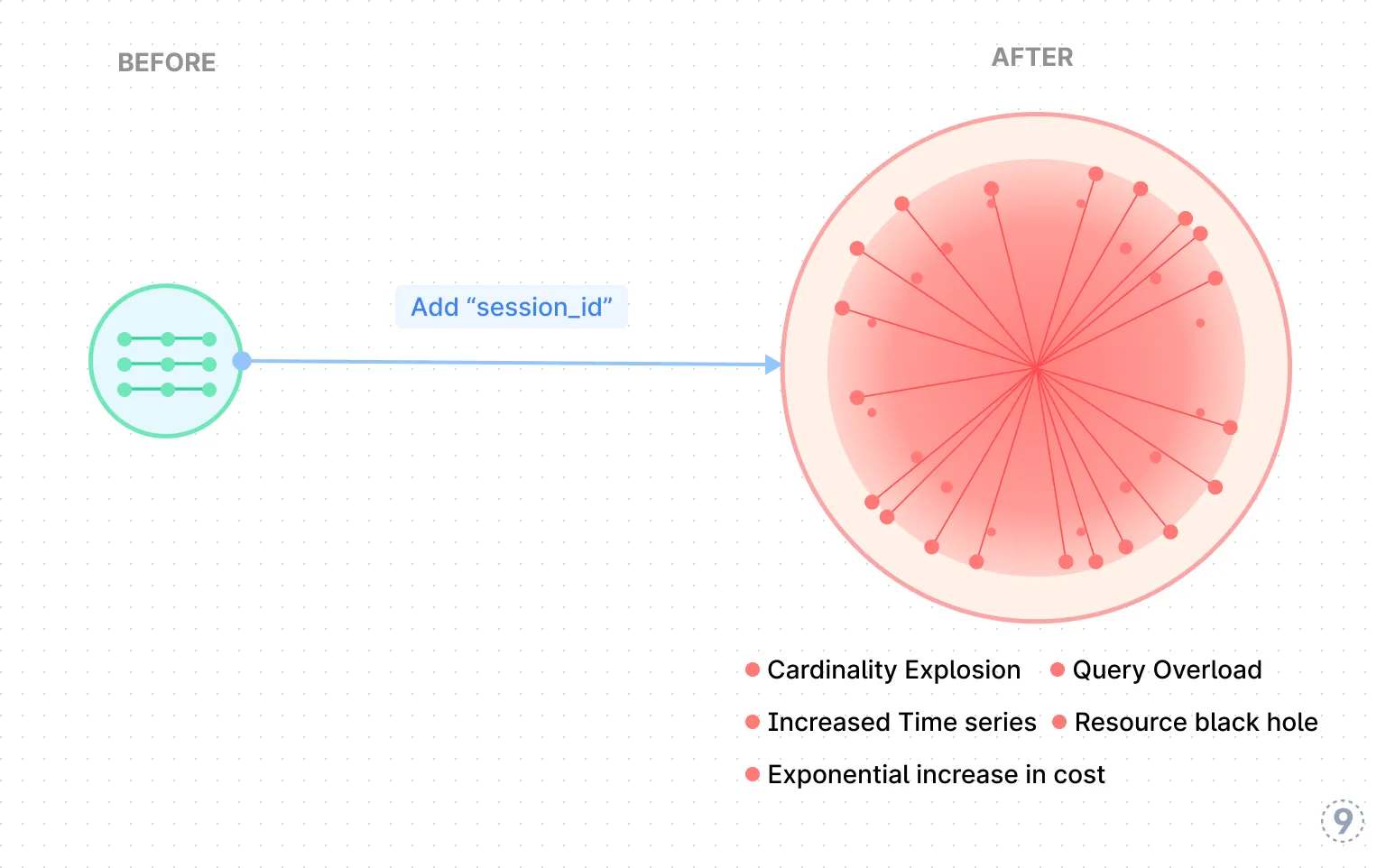

Monitoring System Challenges

High cardinality has enormous implications for monitoring systems. This is where things can get really painful if you’re not careful. When you tag or label metrics with high cardinality dimensions, you can end up with a combinatorial explosion of time series.

A typical e-commerce platform tracking user behavior across regions (50), device types (20), and product categories (100) could generate 100,000 unique combinations (50 × 20 × 100 = 100,000) just from those three dimensions alone. Add services, hosts, and user segments, and you’re quickly into millions of time series.

This can cause:

- Storage issues

- Query slowdowns

- Higher costs

- System crashes

This is why monitoring systems often limit the cardinality of tags or labels they accept.
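The e-commerce arithmetic above is a product of per-dimension cardinalities. Here is a quick sketch. The extra `service` dimension with 30 values is a made-up number to show how fast the total grows, and the result is an upper bound, since not every combination necessarily shows up in real traffic.

```python
from math import prod

# Distinct values per dimension, taken from the e-commerce example above.
dimensions = {"region": 50, "device_type": 20, "product_category": 100}

base_series = prod(dimensions.values())
print(base_series)  # 100000

# Hypothetical extra dimension: 30 services.
dimensions["service"] = 30
with_service = prod(dimensions.values())
print(with_service)  # 3000000
```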

Data Science and ML Implications

In data analysis and machine learning, high cardinality can complicate how you prepare and model data.

- Raw high cardinality fields are hard to use directly: For example, a “user_id” with millions of unique values doesn’t provide much value if fed directly into a model. To the model, it’s just noise. You need to transform it into something meaningful.

- One-hot encoding: This turns each unique value (like every city name) into its own column. So, 10,000 cities = 10,000 new columns, mostly filled with zeros. It works, but it’s inefficient and bloats your data.

- Feature engineering solutions:

  - Feature hashing: Reduces the number of columns by applying a hash function that maps values into a fixed number of buckets. It’s faster and more compact, at the cost of occasional collisions where distinct values share a column.

  - Embeddings: Turns high-cardinality fields into compact vectors, which are much more efficient for deep learning models.
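To make the column counts concrete, here is a minimal sketch comparing one-hot encoding with feature hashing. The city names, the bucket count of 256, and the choice of SHA-256 as the hash are all assumptions for illustration. ML libraries ship their own hashing implementations.

```python
import hashlib

def one_hot(value, vocabulary):
    """One column per known value; exactly one column is 1."""
    return [1 if value == v else 0 for v in vocabulary]

def hashed(value, n_buckets):
    """Feature hashing: map any value to one of n_buckets columns."""
    digest = hashlib.sha256(value.encode("utf-8")).hexdigest()
    vector = [0] * n_buckets
    vector[int(digest, 16) % n_buckets] = 1
    return vector

cities = [f"city_{i}" for i in range(10_000)]  # hypothetical city names

print(len(one_hot("city_42", cities)))  # 10000
print(len(hashed("city_42", 256)))      # 256
```

With 10,000 cities and only 256 buckets, many cities necessarily land in the same column. That is the collision trade-off of feature hashing.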

How Granular Data Improves Incident Response

High cardinality metrics don’t just change how incidents get investigated; they dramatically accelerate resolution times. Teams with dimensional observability typically resolve incidents 5-10x faster than those relying on averaged metrics.

When the data reflects real dimensions like user type, region, or feature flag, it becomes easier to ask precise questions and get useful answers fast.

1. Faster Root Cause Isolation

- Filter metrics by region, user_id, deployment_version, or feature_flag

- Compare behavior across segments to identify what’s broken and where

If a new deployment is slowing down only one region or impacting just enterprise users, high cardinality metrics help you spot it immediately, without digging through logs or jumping between tools. While teams with averaged metrics spend hours investigating “something’s wrong somewhere,” dimensional correlation pinpoints the exact problem in minutes.

2. Detect Problems Before They Escalate

- Track small cohorts that don’t affect global averages

- Surface issues earlier by watching the right dimensions

A spike in error rates for premium users won’t move the overall success rate, but it still matters. Granular metrics help teams catch these signals before they hit a wider audience.

3. Better SLA Coverage

- Break down latency and error budgets by user or region

- Monitor experience consistency, not just system-wide targets

SLOs often require more than just meeting a global P95. They depend on making sure specific users aren’t consistently degraded. High cardinality data lets you track that directly.
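Here is a small sketch of how a global P95 can pass while one region misses the target. The latencies, region names, and the 300 ms target are hypothetical, and the percentile uses the simple nearest-rank method rather than any particular tool's definition.

```python
def p95(values):
    """Nearest-rank 95th percentile, using integer arithmetic for the rank."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]

# Hypothetical latencies in ms: 95 fast requests in one region, 5 slow in another.
latencies = {
    "us-east": [100] * 95,
    "ap-south": [900] * 5,
}

SLO_MS = 300  # hypothetical latency target

all_values = [v for values in latencies.values() for v in values]
print(p95(all_values), p95(all_values) <= SLO_MS)  # 100 True

for region, values in latencies.items():
    print(region, p95(values), p95(values) <= SLO_MS)
# us-east 100 True
# ap-south 900 False
```

The system-wide number meets the target, yet every ap-south request is three times slower than the target allows. Only the per-region breakdown shows it.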

The business advantage is clear: fewer support tickets, happier customers, and the ability to offer stronger SLAs than your competitors.

The Far-Reaching Consequences of High Cardinality

System Observability

The observability challenge of high cardinality affects the very tools we rely on to understand system behavior.

When monitoring systems encounter high cardinality dimensions, they produce alert storms: thousands of similar notifications fire simultaneously, burying critical signals in noise. This leads to alert fatigue among operations teams, who begin ignoring potentially important alerts.

Visualization systems suffer equally:

- Dashboard limitations: Dashboards become slow or time out when rendering high cardinality metrics

- Complex troubleshooting: Root cause analysis becomes exponentially more difficult

- Limited historical data: High cardinality forces aggressive downsampling, restricting long-term analysis

This often leads teams to create separate monitoring systems when central tools can’t handle their cardinality needs.

Infrastructure Costs

High cardinality drives infrastructure costs up faster than linearly, because every new dimension multiplies the number of series to store and index. This is especially true in time-series databases, where high cardinality workloads often necessitate vertical scaling since adding more nodes doesn’t address the fundamental data structure problem.

Organizations frequently encounter:

- Unexpected billing spikes: New high cardinality dimensions can cause sudden cost increases

- Network saturation: High cardinality queries generate larger result sets, consuming more bandwidth

- License cost implications: Many enterprise products base licensing on data volume or node count

However, systems built specifically for high-dimensional data, like Last9, handle this growth differently. Instead of fighting cardinality, we use techniques like streaming aggregation that make rich data economically viable, often at significantly lower total cost than traditional approaches.

When you can isolate a payment processing issue to “mobile users in Germany using stored payment methods” in minutes instead of spending hours digging through logs, the 5-10x improvement in incident resolution time more than justifies the infrastructure investment.
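As a rough illustration of the general idea behind streaming aggregation (not a description of how Last9 or any other product implements it), the sketch below rolls events up as they arrive, keyed only by the labels you choose to keep. The event shape and the `aggregate_stream` helper are hypothetical.

```python
from collections import Counter

def aggregate_stream(events, keep_labels):
    """Sum request counts as events arrive, keyed only by the labels we keep."""
    totals = Counter()
    for labels, count in events:
        key = tuple((name, labels[name]) for name in keep_labels)
        totals[key] += count
    return totals

# Hypothetical incoming events: (labels, request count).
events = [
    ({"region": "de", "platform": "mobile", "user_id": "u1"}, 3),
    ({"region": "de", "platform": "mobile", "user_id": "u2"}, 2),
    ({"region": "us", "platform": "web", "user_id": "u3"}, 4),
]

totals = aggregate_stream(events, keep_labels=("region", "platform"))
print(len(totals))  # 2
print(totals[(("region", "de"), ("platform", "mobile"))])  # 5
```

Three incoming series collapse into two stored ones. The aggregation happens before storage, so the raw per-user detail never has to be written in this form.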

Database Operations

Databases with high cardinality face a cascade of operational challenges that go beyond simple storage concerns.

For example, it can cause query plan instability, meaning your database’s strategy for fetching data can change unexpectedly, leading to performance issues. Optimizers struggle to keep up with high cardinality because they rely on statistics that can quickly become inaccurate or outdated. What worked efficiently yesterday might slow down today if the optimizer makes the wrong decision.
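A simplified way to see why stale statistics hurt: for an equality filter, a textbook estimate of matching rows is table rows divided by distinct values, assuming values are evenly spread. The numbers below are hypothetical, and real optimizers use richer statistics than this single formula.

```python
def estimated_rows(table_rows, n_distinct):
    """Rows expected for `column = value`, assuming values are evenly spread."""
    return table_rows / n_distinct

# Hypothetical statistics snapshot vs. the table as it is now.
stale = {"table_rows": 1_000_000, "n_distinct": 1_000}
actual = {"table_rows": 1_000_000, "n_distinct": 500_000}

estimate = estimated_rows(**stale)   # what the optimizer believes
reality = estimated_rows(**actual)   # what the data looks like today

print(estimate)            # 1000.0
print(reality)             # 2.0
print(estimate / reality)  # 500.0
```

An estimate that is off by a factor of 500 is enough to push an optimizer toward a different join order or access path than the one the data calls for.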

Other key impacts include:

- Memory pressure: High-cardinality aggregations can eventually force operations to spill to disk

- Backup inefficiency: Incremental backups become less effective with frequently changing high-cardinality columns

- Uneven data distribution: In distributed databases, high cardinality can create unbalanced shards and hot spots

Testing and Development

The development lifecycle becomes increasingly complex as cardinality grows. Local environments cannot simulate production cardinality, resulting in code that works in development but fails in production. This leads to:

- Schema evolution constraints: Changes become increasingly risky

- Code complexity: Applications must implement sophisticated caching and data access patterns

- Technical debt acceleration: Quick fixes for high cardinality issues become entrenched

Over time, innovation slows as teams become cautious about changes that might worsen cardinality issues.

The Dual Nature of High Cardinality

Up until now, high cardinality has been viewed primarily as a problem due to challenges like:

- Storage and Processing: More unique values mean more data to store and process

- Indexing Issues: Traditional database indexes grow with cardinality, sometimes becoming almost as large as the table itself

- Memory Strain: In-memory operations can hit limits when dealing with too many unique values

- Slow Queries: Queries against high cardinality fields tend to take longer to execute

- Visualization Hurdles: Visualizing millions of unique values meaningfully is nearly impossible

However, this perspective misses the fundamental value of granular data in modern systems. High cardinality isn’t just noise — it’s what enables pinpoint troubleshooting, personalized user experiences, and meaningful insights in distributed systems.

The real question now isn’t “How do we reduce cardinality?” but rather, “How do we make the most of the rich detail it provides?”

Here’s why high cardinality is worth embracing:

- Granular analysis: Get deeper insights into individual users, transactions, or events

- Personalization: Drive smarter recommendations and tailored user experiences

- Precise monitoring: Track specific services or requests to pinpoint issues faster

- Rich insights: More unique values lead to more valuable data and patterns

This is especially crucial as we integrate AI into our workflows. AI systems thrive on detailed, unique data points to identify patterns and make predictions. High cardinality provides the granularity needed for training more accurate models, fine-grained personalization, anomaly detection, and predictive analytics.

Rethinking High Cardinality

High cardinality isn’t just noise or something to avoid — it’s a natural part of how modern systems behave.

As the team at Replit put it, “high cardinality can be a valuable signal when handled correctly.”

The question isn’t whether your data will contain high cardinality; it’s whether you have the right tools to turn that complexity into an operational advantage.

That’s exactly what Last9 is built for. Instead of forcing a trade-off between rich telemetry and system performance, Last9 treats high cardinality as a first-class citizen. Teams using our platform have seen significantly faster incident resolution while reducing their observability costs by 20 to 40% compared to legacy tools.

Think this will help your team? Let’s chat about your setup, or just try it for free and experience high-cardinality monitoring.

In the next piece, we’ll explore High-Cardinality Observability and how it strengthens the business case for faster, more accurate incident resolution.