gRPC is becoming the go-to choice for high-performance, low-latency communication between services in a distributed architecture. Combined with OpenTelemetry, it provides a powerful observability framework, ensuring developers can monitor, trace, and troubleshoot their microservices with ease.

In this guide, we’ll walk you through setting up gRPC with OpenTelemetry, highlight best practices, and explore how you can gain deeper insights into your systems.

The Significance of OpenTelemetry in Cloud-Native Applications

Cloud-native applications are designed to take full advantage of cloud environments, often involving microservices architectures, containers, and dynamic scaling.

This complexity, while beneficial for flexibility and scalability, also introduces significant challenges in monitoring, tracing, and debugging.

OpenTelemetry plays a crucial role in addressing these challenges by providing a unified, standard approach to observability across cloud-native systems.

Here’s a closer look at the significance of OpenTelemetry in the context of cloud-native applications:

1. Unified Observability Across Distributed Systems

In a cloud-native architecture, applications are typically composed of multiple microservices that interact with each other. These services might run across different environments—on-premises, in various cloud providers, or in hybrid cloud scenarios.

OpenTelemetry enables comprehensive observability by capturing metrics, logs, and traces from all parts of the application, providing a consistent view across services.

Why it matters: OpenTelemetry allows you to correlate traces and logs from different services in real-time, making it easier to detect performance bottlenecks, troubleshoot issues, and gain insights into application health.


2. End-to-End Tracing Across Service Boundaries

In cloud-native applications, a single user request often traverses multiple services. OpenTelemetry enables distributed tracing, which tracks the flow of requests across service boundaries.

This allows developers and operators to see the entire journey of a request, from the initial entry point to the final service that processes it.

Why it matters: Without tracing, identifying performance bottlenecks, failed requests or service interactions is like piecing together a puzzle without all the pieces. OpenTelemetry provides the pieces needed to see the full picture, making troubleshooting and optimization much easier.

3. Vendor-Agnostic Instrumentation

Cloud-native applications often use a variety of tools, frameworks, and services, many of which are provided by different vendors.

OpenTelemetry is an open-source, vendor-neutral standard that ensures you can integrate observability across multiple platforms without being locked into a specific vendor’s ecosystem.

Why it matters: OpenTelemetry gives teams the flexibility to choose the best tools for their needs and change them over time without significant re-instrumentation of the application. This is especially important in cloud-native environments where applications might evolve rapidly.

4. Scalability and High Availability

In cloud-native applications, services can scale up or down dynamically based on demand. OpenTelemetry is designed to handle high volumes of data generated by large-scale systems and provides flexible exporters to send trace and metric data to various observability backends (for example Prometheus, Grafana, or Last9).

Why it matters: As cloud-native applications scale, the need for observability grows. OpenTelemetry helps ensure that observability data is collected efficiently, even in large and highly dynamic environments, with low overhead on the application itself.


5. Standardization of Observability Data

OpenTelemetry provides a standardized way to collect and export telemetry data (such as traces, metrics, and logs) from applications. This standardization makes it easier for teams to analyze and compare data, regardless of the underlying infrastructure or technology stack used in the cloud-native application.

Why it matters: Standardization reduces the complexity of integrating and correlating data from multiple sources. Teams can easily combine insights from different parts of the system and focus on improving the user experience rather than managing disparate observability tools.

6. Integration with Modern Development and CI/CD Pipelines

OpenTelemetry’s instrumentation can be integrated into cloud-native CI/CD (Continuous Integration/Continuous Deployment) pipelines, enabling automated tracing and monitoring of services as they are deployed and updated.

This continuous feedback loop is invaluable for catching issues early in the development process.

Why it matters: With OpenTelemetry, teams can ensure that observability is built into the development lifecycle, leading to faster detection of regressions, easier debugging, and better quality assurance in cloud-native applications.

7. Improved Performance Monitoring and Optimization

With cloud-native applications, performance monitoring goes beyond checking server uptime and resource utilization. OpenTelemetry enables deeper insights into service performance, such as request latencies, service dependencies, and error rates.

Why it matters: Tracking detailed performance data across microservices allows teams to proactively identify and address performance issues before they impact end-users, making cloud-native systems more reliable and efficient.


8. Cloud-Native Compliance and Security

In cloud-native environments, maintaining compliance with security standards and regulations can be challenging due to the distributed nature of the application. OpenTelemetry's ability to capture detailed telemetry data can help organizations monitor security events, detect anomalies, and maintain compliance.

Why it matters: With OpenTelemetry, security teams can track unauthorized access, detect potential threats, and ensure that cloud-native applications adhere to regulatory requirements, all while providing developers with the insights they need to fix vulnerabilities quickly.

How to Set Up OpenTelemetry for gRPC in Go

Integrating OpenTelemetry into your gRPC-based application can be a game-changer in terms of observability. Here's a step-by-step process for adding OpenTelemetry support to your gRPC server and client in Go.

Step 1: Install Required Dependencies

Before we get started, you’ll need to install the OpenTelemetry Go SDK and related gRPC instrumentation packages.

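```shell
go get google.golang.org/grpc
go get go.opentelemetry.io/otel
go get go.opentelemetry.io/otel/sdk
go get go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc
go get go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc
```

Step 2: Configure OpenTelemetry in Your gRPC Server

To capture and export traces, configure OpenTelemetry in your gRPC server. This involves initializing the OpenTelemetry SDK, setting up a trace exporter, and registering a propagator so that trace context arriving with incoming calls is picked up. The example below exports spans over OTLP, which recent Jaeger versions accept natively (gRPC on port 4317); the dedicated Jaeger exporter for Go has been deprecated in favor of OTLP.

```go
package main

import (
	"context"
	"log"
	"net"

	"go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc"
	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc"
	"go.opentelemetry.io/otel/propagation"
	sdktrace "go.opentelemetry.io/otel/sdk/trace"
	"google.golang.org/grpc"
)

func main() {
	ctx := context.Background()

	// Export spans over OTLP/gRPC to a local Jaeger (or any OTLP receiver)
	exporter, err := otlptracegrpc.New(ctx,
		otlptracegrpc.WithEndpoint("localhost:4317"),
		otlptracegrpc.WithInsecure(),
	)
	if err != nil {
		log.Fatalf("failed to create exporter: %v", err)
	}

	// Set up the trace provider; spans are batched before export
	tp := sdktrace.NewTracerProvider(sdktrace.WithBatcher(exporter))
	defer func() { _ = tp.Shutdown(ctx) }() // flush buffered spans on exit
	otel.SetTracerProvider(tp)

	// Read W3C trace context from incoming gRPC metadata
	otel.SetTextMapPropagator(propagation.TraceContext{})

	// Create gRPC server; the stats handler records a span per incoming RPC
	server := grpc.NewServer(grpc.StatsHandler(otelgrpc.NewServerHandler()))

	// Register your gRPC services on server here

	lis, err := net.Listen("tcp", ":50051")
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}
	log.Println("gRPC server is running on :50051")
	if err := server.Serve(lis); err != nil {
		log.Fatalf("failed to serve: %v", err)
	}
}
```

Step 3: Instrument the gRPC Client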

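To propagate traces from client to server, instrument your gRPC client with the otelgrpc client stats handler. The client process needs the same tracer provider and propagator setup as the server.

```go
import (
	"log"

	"go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc"
	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials/insecure"
)

// createClient assumes a tracer provider and propagator were already
// registered globally, as in Step 2; otherwise no context is propagated.
func createClient() *grpc.ClientConn {
	// The stats handler starts a client span per RPC and injects the
	// trace context into the outgoing gRPC metadata.
	conn, err := grpc.NewClient(
		"localhost:50051",
		grpc.WithTransportCredentials(insecure.NewCredentials()),
		grpc.WithStatsHandler(otelgrpc.NewClientHandler()),
	)
	if err != nil {
		log.Fatalf("failed to create client: %v", err)
	}

	// Your client logic here; the caller should Close the connection when done
	log.Println("gRPC client created")
	return conn
}
```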


Key OpenTelemetry API Functions for Instrumenting gRPC

1. otelgrpc.NewServerHandler()

This function creates an OpenTelemetry handler for a gRPC server. It's used to instrument the server for tracing, allowing OpenTelemetry to capture spans for each incoming gRPC request.

Usage Example:

```go
s := grpc.NewServer(
	grpc.StatsHandler(otelgrpc.NewServerHandler()),
)
```

Explanation:

- otelgrpc.NewServerHandler() creates a gRPC stats handler that instruments the server for automatic tracing.
- Registered with grpc.StatsHandler, it is notified of every incoming call, which lets OpenTelemetry record a span for each one.

2. otelgrpc.NewClientHandler()

This function creates a client-side handler to instrument gRPC client calls for tracing. It allows the tracing of outgoing RPC requests from the client to the server.

Usage Example:

```go
conn, err := grpc.NewClient("localhost:50051",
	grpc.WithTransportCredentials(insecure.NewCredentials()),
	grpc.WithStatsHandler(otelgrpc.NewClientHandler()),
)
```

Explanation:

- otelgrpc.NewClientHandler() is used on the client side to collect spans for outgoing gRPC requests.
- It tracks the request lifecycle from the client, capturing the telemetry needed to create spans.

3. otel.SetTracerProvider()

This function sets the global tracer provider. Instrumentation such as otelgrpc uses the global provider by default, and until you call this function the global provider is a no-op, so no spans are recorded.

Usage Example:

```go
otel.SetTracerProvider(tp)
```

Explanation:

- SetTracerProvider() registers the tracer provider (tp) as the global provider returned by otel.GetTracerProvider().
- This is critical for ensuring that spans and trace data are correctly collected and exported.


4. sdktrace.NewTracerProvider()

This function creates a new trace provider, which is responsible for managing trace data and exporting it to a backend (such as Last9).

Usage Example:

```go
tp := sdktrace.NewTracerProvider(
	sdktrace.WithBatcher(exporter),
	sdktrace.WithResource(resources),
)
```

Explanation:

- NewTracerProvider() creates a new provider with the given configuration.
- The WithBatcher(exporter) option exports traces in batches for efficiency.
- The WithResource(resources) option adds metadata to the trace data (such as environment or service information).

5. otlptracegrpc.New()

This function creates a new OTLP exporter for traces, using gRPC to send trace data to an OpenTelemetry-compatible backend.

Usage Example:

```go
exporter, err := otlptracegrpc.New(context.Background())
if err != nil {
	log.Fatalf("failed to create exporter: %v", err)
}
```

Explanation:

- otlptracegrpc.New() sets up an exporter that sends trace data over gRPC in OTLP (OpenTelemetry Protocol) format.
- With no options, it reads its endpoint and headers from the standard OTEL_EXPORTER_OTLP_* environment variables. This is how OpenTelemetry communicates with Last9 or other observability platforms.

6. otel.SetTextMapPropagator()

This function sets the global text map propagator for the OpenTelemetry SDK. Propagators are used to manage trace context propagation across service boundaries (e.g., HTTP headers, gRPC metadata).

Usage Example:

```go
otel.SetTextMapPropagator(propagation.NewCompositeTextMapPropagator(
	propagation.TraceContext{},
	propagation.Baggage{},
))
```

Explanation:

- SetTextMapPropagator() defines how trace context (such as trace IDs) is carried between services.
- In this example, a composite propagator handles both W3C trace context and baggage (additional key-value metadata).

7. resource.New()

This function creates a new resource, which holds metadata about the service, host, and environment. This information is added to each trace, helping to identify the context of the trace data.

Usage Example:

```go
resources, err := resource.New(context.Background(),
	resource.WithFromEnv(),
	resource.WithTelemetrySDK(),
	resource.WithHost(),
)
if err != nil {
	log.Fatalf("failed to create resource: %v", err)
}
```

Explanation:

- resource.New() lets you describe the service, host, or environment; the resulting attributes are attached to every span the provider exports.
- In this example, WithFromEnv() reads OTEL_SERVICE_NAME and OTEL_RESOURCE_ATTRIBUTES, WithTelemetrySDK() adds SDK details, and WithHost() adds host-related metadata.

8. context.WithTimeout()

While not strictly a part of OpenTelemetry, context.WithTimeout() is commonly used in conjunction with tracing. It helps you set a timeout for operations like gRPC calls, and the context can propagate trace information to maintain consistency.

Usage Example:

```go
ctx, cancel := context.WithTimeout(context.Background(), time.Second)
defer cancel()
```

Explanation:

- context.WithTimeout() bounds how long a call may run, and gRPC also sends the deadline to the server.
- A context derived this way keeps any span stored in its parent. When the parent carries an active span, the otelgrpc client handler links the outgoing call to that trace; starting from context.Background(), as here, begins a new trace.

Advanced Observability Features with gRPC and OpenTelemetry

OpenTelemetry offers several features beyond basic tracing that improve observability in distributed systems, such as metrics export and log correlation.

1. Integration with Prometheus

Integrating OpenTelemetry with Prometheus helps you collect detailed metrics for your gRPC services. Prometheus can monitor key metrics like request rate, latency, and error counts.

Below is an example of how to set up OpenTelemetry to export metrics to Prometheus:

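```go
import (
	"log"
	"net/http"

	"github.com/prometheus/client_golang/prometheus/promhttp"
	"go.opentelemetry.io/otel"
	otelprom "go.opentelemetry.io/otel/exporters/prometheus"
	sdkmetric "go.opentelemetry.io/otel/sdk/metric"
)

func setupPrometheusExporter() (*sdkmetric.MeterProvider, error) {
	// The Prometheus exporter is a metric reader that registers its
	// collector with the default Prometheus registry.
	exporter, err := otelprom.New()
	if err != nil {
		return nil, err
	}

	// Set up the OpenTelemetry meter provider and make it global, so
	// instrumentation using the global provider reports through it
	meterProvider := sdkmetric.NewMeterProvider(sdkmetric.WithReader(exporter))
	otel.SetMeterProvider(meterProvider)

	// Serve the default registry for Prometheus to scrape
	// (the port is an arbitrary choice for this example)
	go func() {
		http.Handle("/metrics", promhttp.Handler())
		log.Println(http.ListenAndServe(":2223", nil))
	}()

	return meterProvider, nil
}
```

This setup exposes metrics on /metrics for Prometheus to scrape.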

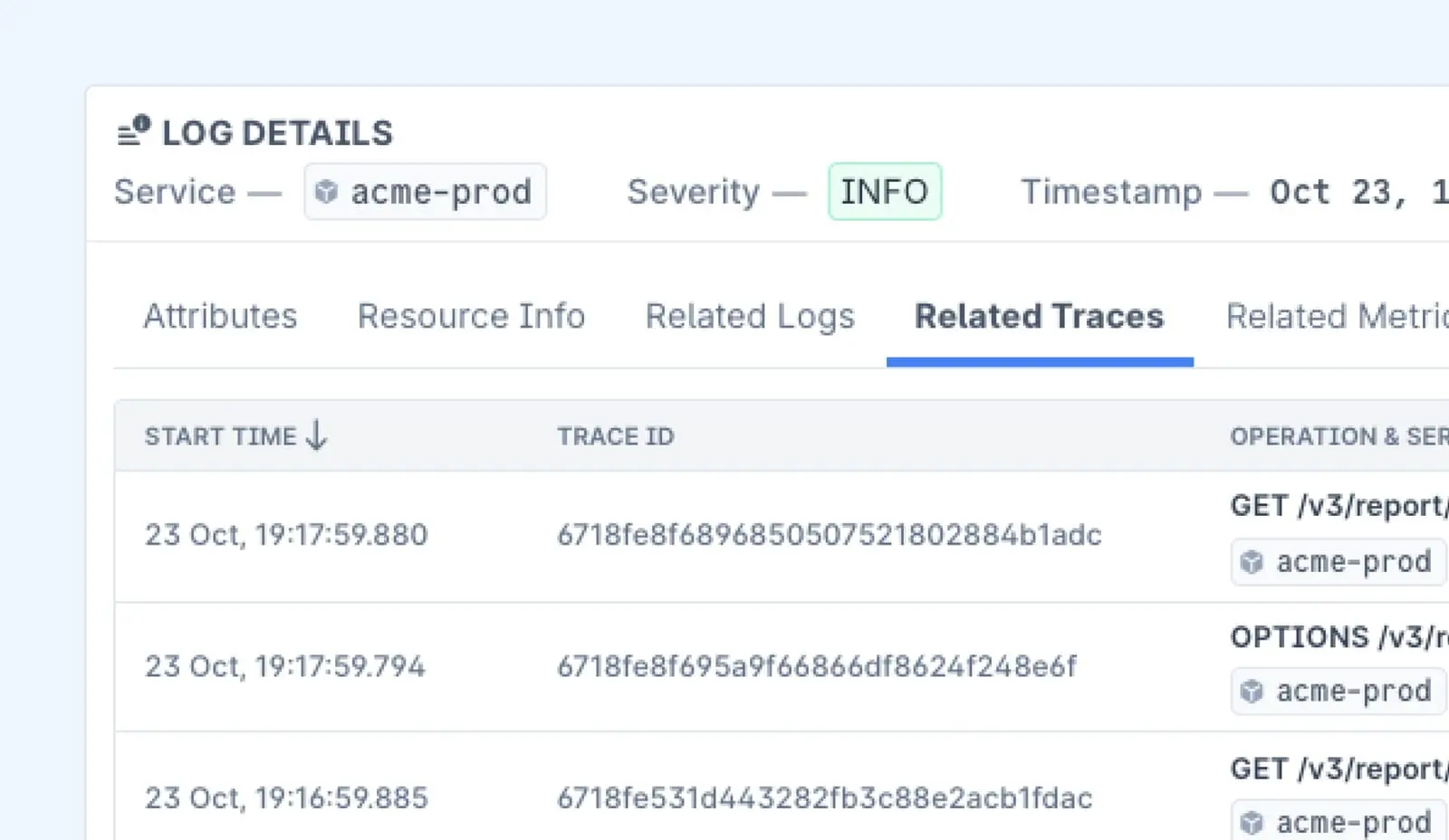

2. Log Correlation with Traces

To link logs with traces, include the active span's trace and span IDs in each log line. Here's a minimal example using the standard library logger:

```go
package main

import (
	"context"
	"log"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/trace"
)

func logWithTrace(ctx context.Context, tracer trace.Tracer) {
	ctx, span := tracer.Start(ctx, "operation-name")
	defer span.End()

	// Correlate the log line with the trace by stamping it with the span's IDs
	sc := trace.SpanContextFromContext(ctx)
	log.Printf("starting operation trace_id=%s span_id=%s", sc.TraceID(), sc.SpanID())
}

func main() {
	// Without a registered tracer provider this tracer is a no-op and the IDs are zeros
	tracer := otel.Tracer("example.com/trace")
	logWithTrace(context.Background(), tracer)
}
```

In this example, each log line carries the trace and span IDs, making it easier to move from a log line to its trace and back. Inside a gRPC handler instrumented with otelgrpc, the incoming ctx already carries the server span, so you can read the IDs from it directly.

How to Send gRPC Traces to Last9 with OpenTelemetry

Pre-requisites

Before you begin, ensure you have the following:

- gRPC-based application: A basic gRPC server-client setup (or any gRPC service).

- Last9 account: A Last9 account with a set-up cluster.

- OTLP credentials: Obtain the endpoint and auth_header values from the Integrations page.

1. Install OpenTelemetry Packages

Start by installing the necessary OpenTelemetry packages in your Go project:

```shell
go get -u go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc
go get -u go.opentelemetry.io/otel
go get -u go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc
go get -u go.opentelemetry.io/otel/sdk
```

2. Setting Up the OpenTelemetry SDK

To set up OpenTelemetry in your application, add the following initialization code:

```go
package instrumentation // You can use any name for this package

import (
	"context"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc"
	"go.opentelemetry.io/otel/propagation"
	"go.opentelemetry.io/otel/sdk/resource"
	sdktrace "go.opentelemetry.io/otel/sdk/trace"
	semconv "go.opentelemetry.io/otel/semconv/v1.17.0"
)

// InitTracer initializes the OpenTelemetry tracer for your service and
// returns a shutdown function that flushes any buffered spans
func InitTracer(serviceName string) func(context.Context) error {
	// Set up the OTLP trace exporter; with no options it reads
	// OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS
	exporter, err := otlptracegrpc.New(context.Background())
	if err != nil {
		panic(err)
	}

	// Create a resource. Detectors listed later win on conflicts, so
	// OTEL_SERVICE_NAME (read by WithFromEnv) overrides serviceName if set.
	resources, err := resource.New(context.Background(),
		resource.WithAttributes(
			semconv.ServiceNameKey.String(serviceName),
			semconv.DeploymentEnvironmentKey.String("production"),
		),
		resource.WithFromEnv(),
		resource.WithTelemetrySDK(),
		resource.WithProcess(),
		resource.WithOS(),
		resource.WithContainer(),
		resource.WithHost(),
	)
	if err != nil {
		panic(err)
	}

	// Set up the trace provider with a batch processor for exporting traces
	tp := sdktrace.NewTracerProvider(
		sdktrace.WithBatcher(exporter),
		sdktrace.WithResource(resources),
	)

	// Register the provider and propagator globally so otelgrpc uses them
	otel.SetTracerProvider(tp)
	otel.SetTextMapPropagator(propagation.NewCompositeTextMapPropagator(propagation.TraceContext{}, propagation.Baggage{}))

	return tp.Shutdown
}
```

3. Instrumenting the gRPC Server

To capture traces from incoming requests on your gRPC server, add instrumentation as shown below:

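```go
package main

import (
	"context"
	"log"
	"net"

	"go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc"
	"google.golang.org/grpc"
	pb "google.golang.org/grpc/examples/helloworld/helloworld"

	"example.com/yourapp/instrumentation" // hypothetical path to the package above
)

// server is a minimal Greeter implementation from the gRPC helloworld example
type server struct{ pb.UnimplementedGreeterServer }

func (s *server) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloReply, error) {
	return &pb.HelloReply{Message: "Hello " + in.GetName()}, nil
}

func main() {
	// Initialize the OpenTelemetry tracer; shutdown flushes spans before exit
	shutdown := instrumentation.InitTracer("grpc-server")
	defer shutdown(context.Background())

	// Set up the gRPC server
	lis, err := net.Listen("tcp", ":50051")
	if err != nil {
		log.Fatalf("failed to listen: %v", err)
	}
	// The stats handler records a server span for every incoming RPC
	s := grpc.NewServer(grpc.StatsHandler(otelgrpc.NewServerHandler()))

	// Register your gRPC services
	pb.RegisterGreeterServer(s, &server{})
	log.Printf("server listening at %v", lis.Addr())
	if err := s.Serve(lis); err != nil {
		log.Fatalf("failed to serve: %v", err)
	}
}
```

4. Instrumenting the gRPC Client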

For the client, use similar instrumentation to capture traces:

```go
package main

import (
	"context"
	"log"
	"os"
	"time"

	"go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc"
	"google.golang.org/grpc"
	"google.golang.org/grpc/credentials/insecure"
	pb "google.golang.org/grpc/examples/helloworld/helloworld"

	"example.com/yourapp/instrumentation" // hypothetical path to the package above
)

func main() {
	// Initialize the OpenTelemetry tracer; shutdown flushes spans before exit
	shutdown := instrumentation.InitTracer("grpc-client")
	defer shutdown(context.Background())

	// Set up the gRPC client connection with instrumentation
	conn, err := grpc.NewClient("localhost:50051",
		grpc.WithTransportCredentials(insecure.NewCredentials()),
		grpc.WithStatsHandler(otelgrpc.NewClientHandler()),
	)
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	defer conn.Close()

	// Create the client and make a request
	c := pb.NewGreeterClient(conn)
	name := "World"
	if len(os.Args) > 1 {
		name = os.Args[1]
	}

	ctx, cancel := context.WithTimeout(context.Background(), time.Second)
	defer cancel()

	r, err := c.SayHello(ctx, &pb.HelloRequest{Name: name})
	if err != nil {
		log.Fatalf("could not greet: %v", err)
	}
	log.Printf("Greeting: %s", r.GetMessage())
}
```

5. Running the Application

Next, set the environment variables for the OTLP exporter:

For the gRPC server:

```shell
export OTEL_SERVICE_NAME=grpc-server-app # Set the server-side application name
export OTEL_EXPORTER_OTLP_ENDPOINT=<endpoint>
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Basic <your-auth-token>"
```

Run the server:

```shell
go run server/main.go
```

For the gRPC client:

```shell
export OTEL_SERVICE_NAME=grpc-client-app # Set the client-side application name
export OTEL_EXPORTER_OTLP_ENDPOINT=<endpoint>
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Basic <your-auth-token>"
```

Run the client:

```shell
go run client/main.go
```

Note: Ensure the service names for the client and server are distinct to differentiate the traces.

6. Viewing Traces in Last9

Once both the gRPC server and client are running, you can check the APM dashboard in Last9 to visualize and analyze the traces captured by OpenTelemetry.

Best Practices for OpenTelemetry in gRPC

Implementing OpenTelemetry in a gRPC-based system offers great visibility into your microservices and distributed architecture.

However, to get the most out of OpenTelemetry, follow these best practices to ensure efficient, accurate, and reliable tracing.

1. Use Proper Service Names

Ensure that your gRPC server and client applications have distinct service names. This makes it easier to differentiate between client and server traces in your observability platform (e.g., Last9).

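Example:

```go
// Server side: set service.name on the resource given to the tracer provider.
// On the client side, use "grpc-client-app" instead.
res, err := resource.New(context.Background(),
	resource.WithAttributes(semconv.ServiceNameKey.String("grpc-server-app")),
)
if err != nil {
	log.Fatalf("failed to create resource: %v", err)
}
tp := sdktrace.NewTracerProvider(sdktrace.WithResource(res))
```

Alternatively, set OTEL_SERVICE_NAME in each process and include resource.WithFromEnv(), as in the Last9 setup above.

Why it matters: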

- Having different service names helps when analyzing distributed traces, as it clearly distinguishes between the server's and client's perspectives.


2. Enable Context Propagation Across Services

Propagating trace context between gRPC client and server is crucial for keeping traces consistent. The otelgrpc handlers inject and extract that context through the global propagator, which is a no-op until you register one, so set it in every service.

Best Practice: Use otel.SetTextMapPropagator() to enable context propagation and use the composite propagator for both trace context and baggage.

Example:

```go
otel.SetTextMapPropagator(propagation.NewCompositeTextMapPropagator(propagation.TraceContext{}, propagation.Baggage{}))
```

Why it matters:

- Context propagation ensures that your traces are accurate and include the full context across service calls.

3. Use Batch Exporting for Efficiency

When dealing with high-traffic gRPC services, exporting each span synchronously as it ends (for example with sdktrace.WithSyncer) puts export latency on the request path. Use batch exporting to send spans in larger chunks from a background goroutine, improving performance and reducing the load on the exporter and backend.

Example:

```go
tp := sdktrace.NewTracerProvider(
	sdktrace.WithBatcher(exporter),
	sdktrace.WithResource(resources),
)
```

Why it matters:

- Batch exporting helps manage the throughput of trace data, ensuring better performance while sending data to the backend.

4. Instrument gRPC Server and Client

Both the gRPC server and client should be instrumented with OpenTelemetry to track requests and responses at both ends of the communication. This gives you a complete picture of how requests move through your system.

Server-side Instrumentation:

```go
s := grpc.NewServer(
	grpc.StatsHandler(otelgrpc.NewServerHandler()),
)
```

Client-side Instrumentation:

```go
conn, err := grpc.NewClient("localhost:50051",
	grpc.WithTransportCredentials(insecure.NewCredentials()),
	grpc.WithStatsHandler(otelgrpc.NewClientHandler()),
)
```

Why it matters:

- Instrumenting both sides ensures that you can see the full lifecycle of gRPC calls, from the client initiating the request to the server handling it.


5. Attach Resource Attributes

Add meaningful attributes to your traces, such as environment, version, and host details. This helps with filtering and searching traces in your observability platform.

Example:

```go
attr := resource.WithAttributes(
	semconv.DeploymentEnvironmentKey.String("production"),
	semconv.ServiceVersionKey.String("v1.0.0"),
)
```

Why it matters:

- Adding resource attributes provides more context to your traces, making it easier to identify and filter relevant data.

6. Use Proper Sampling Strategies

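Sampling controls how much trace data is collected, which is important for performance and storage management. AlwaysSample() records every trace, which is fine in development. For production environments, consider recording only a fraction of traces, for example with a ratio-based sampler wrapped in ParentBased() so that services downstream follow the caller's decision and traces stay complete.

Example:

```go
tp := sdktrace.NewTracerProvider(
	// Sample about 10% of new traces (an illustrative value);
	// spans with a parent follow the parent's sampling decision
	sdktrace.WithSampler(sdktrace.ParentBased(sdktrace.TraceIDRatioBased(0.1))),
	sdktrace.WithBatcher(exporter),
)
```

Why it matters: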

- Sampling ensures that you don’t overload your system with too many traces. It allows you to focus on key traces and optimize performance.

7. Monitor Trace Latency and Errors

Always monitor latency and error rates for your gRPC calls. This helps in detecting performance bottlenecks and identifying failure points in your system.

Best Practice: Ensure that OpenTelemetry is configured to capture both success and failure spans, and capture important metrics like duration, status, and error codes.

Why it matters:

- Monitoring latency and errors helps quickly detect and resolve issues in your distributed system, improving overall reliability.

8. Integrate with Backend Observability Tools

To get the most out of OpenTelemetry, integrate it with an observability backend like Last9, which allows you to visualize and analyze your traces. Ensure that your OTLP exporter is properly set up and sends trace data to your chosen platform.

Example:

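```go
// With no options, the exporter reads OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS
exporter, err := otlptracegrpc.New(context.Background())
```

Why it matters: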

- An observability platform will provide a powerful interface for visualizing trace data, making it easier to diagnose issues and optimize performance.

9. Handle Errors Gracefully in Tracing Code

Ensure that you handle errors and exceptions properly in your OpenTelemetry setup, especially around trace creation and exporting. This helps ensure that your tracing setup doesn’t silently fail and lose important data.

Example:

```go
if err != nil {
	log.Fatalf("failed to create exporter: %v", err)
}
```

Why it matters:

- Proper error handling makes failures in trace creation and export visible, so problems are caught instead of data being lost silently.


Troubleshooting and Debugging with gRPC and OpenTelemetry

OpenTelemetry's observability tools are crucial for identifying and resolving performance issues in gRPC-based systems. Here are a few tips to help you debug effectively:

1. Trace Latency Across Services

By tracing requests across different services, you can pinpoint where latency issues are occurring. If a particular service is experiencing higher latency, you can use the trace data to investigate the root cause.

2. Monitor gRPC Errors

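OpenTelemetry simplifies error tracking during gRPC request execution: the otelgrpc handlers already record the gRPC status code on each RPC span (as the rpc.grpc.status_code attribute). For spans you create yourself, record errors explicitly so failures are identified quickly, before they impact end users.

```go
// Assumes span was started earlier in this function and err is a named
// return value, so the deferred closure sees its final value.
defer func() {
	if err != nil {
		span.RecordError(err)
		span.SetStatus(codes.Error, err.Error()) // codes is go.opentelemetry.io/otel/codes
	}
}()
```

3. Analyze Metrics in Real Time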

Set up OpenTelemetry to collect key metrics, such as the number of requests, request duration, and error rates. This allows you to monitor the health of your system in real-time.

Conclusion:

Integrating OpenTelemetry with gRPC brings huge benefits to the observability of your microservices architecture.

With OpenTelemetry in your toolset, your gRPC services become highly observable, performant, and reliable components, providing deeper insights into your system's behavior.

FAQs

1. What is OpenTelemetry, and why should I use it with gRPC?

OpenTelemetry is an open-source observability framework that provides APIs, libraries, agents, and instrumentation to capture, process, and export metrics, traces, and logs. Using OpenTelemetry with gRPC allows you to track the performance and health of your microservices, helping you detect issues early, improve troubleshooting, and optimize overall system performance.

2. How does OpenTelemetry integrate with gRPC?

OpenTelemetry integrates with gRPC through specific instrumentation libraries for both gRPC clients and servers. Adding OpenTelemetry's tracing and stats handlers to your gRPC server and client captures detailed traces, including request lifecycles, errors, and latency. These traces are then exported to an observability backend like Last9 for visualization and analysis.

3. Do I need to install any special libraries to instrument gRPC with OpenTelemetry?

Yes, you need to install the necessary OpenTelemetry packages to integrate with gRPC. For Go, these include otelgrpc (go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc) for gRPC instrumentation and otlptracegrpc (go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc) for exporting traces. You fetch them with go get commands.

4. What are the benefits of using OpenTelemetry with gRPC?

- End-to-end visibility: Track requests from the client to the server and vice versa.

- Error detection: Quickly pinpoint errors, latencies, and bottlenecks in the communication between services.

- Performance optimization: Use the insights gained from traces to fine-tune performance and resource utilization.

- Ease of troubleshooting: With detailed tracing, debugging and fixing issues become much faster.

5. How do I configure OpenTelemetry to send data to Last9?

To send trace data to Last9, you need to configure the OTLP (OpenTelemetry Protocol) exporter in your application. This involves setting the OTLP endpoint, authorization headers, and environment variables such as OTEL_SERVICE_NAME and OTEL_EXPORTER_OTLP_ENDPOINT to ensure proper communication between OpenTelemetry and Last9.

6. Can I instrument only the server or the client?

Ideally, you should instrument both the server and the client to gain full visibility of the request lifecycle. However, you can choose to instrument only the server or the client, depending on your use case. Instrumenting both sides ensures you capture traces for requests, responses, and any errors that occur.

7. What are some best practices for using OpenTelemetry with gRPC?

- Use distinct service names for the client and server for easy trace differentiation.

- Enable context propagation to ensure trace data is consistent across services.

- Use batch exporting for efficiency when dealing with high-traffic services.

- Attach meaningful resource attributes like environment, version, and host details for better filtering.

- Monitor latency and error rates to identify performance bottlenecks.

- Integrate OpenTelemetry with an observability tool like Last9 for easy trace visualization and analysis.

8. What is context propagation, and why is it important?

Context propagation ensures that trace data flows consistently across service boundaries. In gRPC, this means that trace context (like trace ID and span ID) is carried from the client to the server, enabling end-to-end tracing. This is crucial for maintaining trace consistency and ensuring that your traces represent the full lifecycle of a request.

9. Can OpenTelemetry trace non-gRPC communication?

Yes, OpenTelemetry is not limited to gRPC. It supports tracing for various protocols and frameworks, including HTTP, messaging systems, and custom protocols. You can integrate it with multiple communication methods in a microservices architecture for comprehensive observability.

10. What are some common challenges when using OpenTelemetry with gRPC?

- Performance overhead: Instrumentation can introduce some overhead, especially if not optimized. Using batch exporting and proper sampling strategies can help reduce this.

- Complexity in configuration: Setting up OpenTelemetry and ensuring proper configuration (e.g., OTLP endpoint, authorization tokens) may require some effort, especially in larger systems.

- Context propagation: Ensuring consistent trace context propagation between services can sometimes be tricky, but using OpenTelemetry’s built-in propagators helps mitigate this.

11. Can I use OpenTelemetry with other observability platforms besides Last9?

Yes, OpenTelemetry supports integration with various observability platforms, such as Jaeger and Zipkin for traces and Prometheus for metrics. You can send telemetry to whichever platform you choose by configuring the appropriate exporter and endpoint.

12. How do I monitor gRPC performance with OpenTelemetry?

OpenTelemetry provides automatic instrumentation for measuring key performance indicators (KPIs) such as request duration, error rates, and response status. Exporting this data to a platform like Last9 allows you to analyze these metrics in real-time, helping monitor the health of your gRPC-based services.