AWS Lambda functions are a popular choice for running serverless applications. They're scalable, cost-effective, and easy to manage.

However, as your serverless applications grow, monitoring your Lambda functions effectively becomes crucial. That’s where OpenTelemetry comes in, providing an open-source framework for collecting distributed traces and metrics.

In this guide, we’ll explore how to instrument your AWS Lambda functions with OpenTelemetry. We’ll cover the basics of OpenTelemetry, how it works with AWS Lambda, and walk you through the steps to set it up.

By the end, you’ll have a clear understanding of how to gain real-time insights into your Lambda functions' performance and troubleshoot issues with ease.

What is OpenTelemetry?

OpenTelemetry is a set of APIs, SDKs, agents, and instrumentation libraries that provide observability into your applications.

It enables you to collect, process, and export telemetry data (metrics, traces, and logs) from your application, regardless of the programming language or platform.

OpenTelemetry works with various backends, such as Prometheus, Jaeger, AWS X-Ray, and Amazon CloudWatch, allowing you to analyze and visualize data in near real time.

Why Use OpenTelemetry with AWS Lambda?

Lambda functions are short-lived, event-driven, and often chained with other services, which makes them harder to trace and monitor than long-running servers.

OpenTelemetry bridges this gap by providing distributed tracing and metrics for serverless applications, helping you monitor:

- Function execution times

- Errors and failures

- Cold start performance

- Invocation counts

- Latency issues

These metrics help you identify bottlenecks, troubleshoot issues, and optimize your serverless architecture.
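To make these metrics concrete, here is a minimal sketch in plain TypeScript of how a handler could detect cold starts and summarize invocation records. The names takeColdStartFlag, InvocationRecord, and summarize are hypothetical and not part of OpenTelemetry or AWS; in a real setup, the instrumentation and your backend compute these values for you.

```typescript
// Module scope is initialized once per execution environment, so this flag
// is true only for the first invocation after a cold start.
let coldStart = true;

// Returns true on the first call in this environment and false afterwards.
function takeColdStartFlag(): boolean {
  const wasCold = coldStart;
  coldStart = false;
  return wasCold;
}

// Hypothetical record of a single invocation.
interface InvocationRecord {
  durationMs: number;
  failed: boolean;
  coldStart: boolean;
}

interface InvocationSummary {
  invocations: number;
  errorRate: number;
  coldStarts: number;
  avgDurationMs: number;
}

// Aggregates the metrics listed above from a batch of invocation records.
function summarize(records: InvocationRecord[]): InvocationSummary {
  const invocations = records.length;
  if (invocations === 0) {
    return { invocations: 0, errorRate: 0, coldStarts: 0, avgDurationMs: 0 };
  }
  const failures = records.filter((r) => r.failed).length;
  const coldStarts = records.filter((r) => r.coldStart).length;
  const totalMs = records.reduce((sum, r) => sum + r.durationMs, 0);
  return {
    invocations,
    errorRate: failures / invocations,
    coldStarts,
    avgDurationMs: totalMs / invocations,
  };
}
```

Inside a handler, you would call takeColdStartFlag() once per invocation and attach the result to your span or log line.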

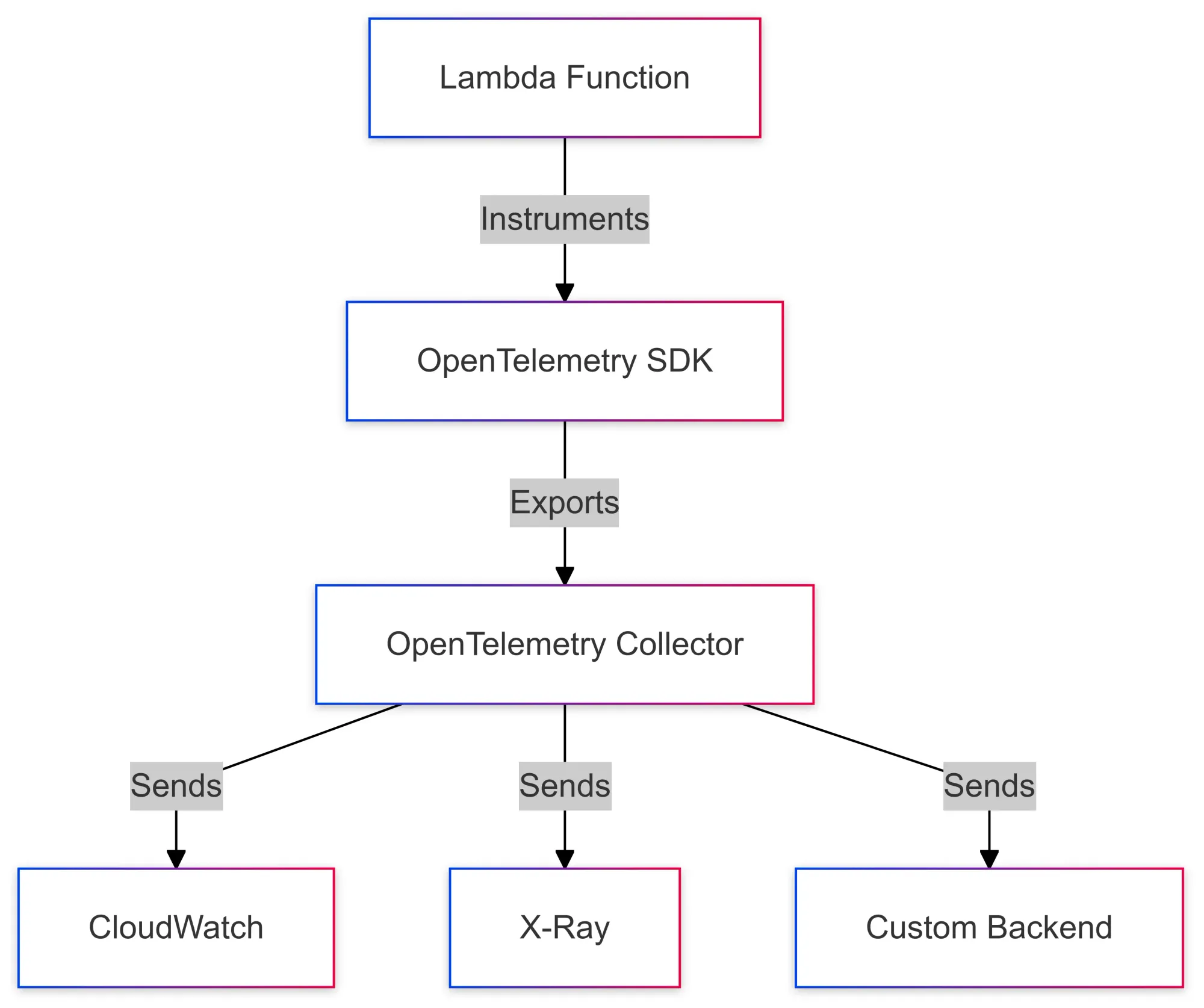

Architecture Overview

The architecture for instrumenting AWS Lambda functions with OpenTelemetry involves using the OpenTelemetry SDK to instrument your Lambda function, which then exports data to an OpenTelemetry Collector.

The collector sends this telemetry data to monitoring platforms like AWS X-Ray, CloudWatch, or any custom backend.

Step-by-Step Guide to Instrumenting AWS Lambda with OpenTelemetry

1. Set Up Your AWS Environment

Before starting, ensure you have an AWS account with the necessary permissions. Set up an IAM role to grant your Lambda function access to AWS services like CloudWatch and X-Ray.

Here’s the IAM policy that grants access for Lambda to send trace data to AWS X-Ray:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "xray:PutTraceSegments",
            "xray:PutTelemetryRecords"
          ],
          "Resource": "*"
        }
      ]
    }

2. Install the OpenTelemetry Lambda Layer

The OpenTelemetry Lambda Layer simplifies the process by automatically instrumenting your Lambda function. Here’s how you can add it:

- Go to the Lambda console and open your Lambda function.

- In the “Layers” section, click “Add a layer.”

- Choose “Specify an ARN” and enter the ARN of the OpenTelemetry Lambda layer that matches your Region and runtime (for example, an AWS Distro for OpenTelemetry layer).

- Add the latest version of the layer to your function.

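This layer will automatically send telemetry data to your configured backend (e.g., X-Ray, CloudWatch). For the auto-instrumentation wrapper to run, the layer typically also needs the AWS_LAMBDA_EXEC_WRAPPER environment variable (for example, /opt/otel-handler for Node.js); check the layer's documentation for the exact value.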

3. Configure the OpenTelemetry Exporter

Configure your Lambda function’s environment variables to export telemetry data to your desired backend. For example, to configure AWS X-Ray:

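- Set OTEL_EXPORTER_XRAY_ENABLED to true.

- Set OTEL_EXPORTER_XRAY_ENDPOINT to the appropriate X-Ray endpoint (e.g., xray.us-west-2.amazonaws.com).

- Attach the AWSXRayDaemonWriteAccess policy to your Lambda’s IAM role.

Exact variable names can differ between layer distributions and versions, so confirm them against the documentation for the layer you installed.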
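A misconfigured environment variable usually fails silently, so it can help to validate the settings once at startup. The sketch below reads the two exporter variables named above and rejects an enabled exporter that has no endpoint. The function readXRayExporterConfig and its return type are hypothetical helpers, not part of any OpenTelemetry package.

```typescript
// Hypothetical typed view of the exporter settings described above.
interface XRayExporterConfig {
  enabled: boolean;
  endpoint: string;
}

// Reads the settings from an environment map such as process.env.
function readXRayExporterConfig(
  env: Record<string, string | undefined>,
): XRayExporterConfig {
  const enabled = (env['OTEL_EXPORTER_XRAY_ENABLED'] ?? '').toLowerCase() === 'true';
  const endpoint = (env['OTEL_EXPORTER_XRAY_ENDPOINT'] ?? '').trim();
  if (enabled && endpoint === '') {
    // Fail fast instead of silently dropping traces later.
    throw new Error('OTEL_EXPORTER_XRAY_ENDPOINT must be set when the X-Ray exporter is enabled');
  }
  return { enabled, endpoint };
}
```

Calling readXRayExporterConfig(process.env) at module load surfaces a missing endpoint in the first invocation's logs rather than as missing traces.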

4. Lambda Function Implementation

    import { Context } from 'aws-lambda';
    // Alias the OpenTelemetry context so it does not clash with the Lambda context parameter
    import { trace, context as otelContext, SpanStatusCode } from '@opentelemetry/api';

    const tracer = trace.getTracer('lambda-example');

    export const handler = async (event: any, lambdaContext: Context): Promise<any> => {
      // Parent span covering the whole invocation
      const parentSpan = tracer.startSpan('lambda_execution');
      try {
        parentSpan.setAttribute('aws.lambda.invocation_id', lambdaContext.awsRequestId);
        // Make the parent span active so the child span is linked to it
        const result = await otelContext.with(trace.setSpan(otelContext.active(), parentSpan), async () => {
          const childSpan = tracer.startSpan('process_event');
          try {
            const response = await processEvent(event);
            childSpan.setStatus({ code: SpanStatusCode.OK });
            return response;
          } catch (error) {
            // A caught value is not guaranteed to be an Error instance
            const message = error instanceof Error ? error.message : String(error);
            childSpan.setStatus({ code: SpanStatusCode.ERROR, message });
            throw error;
          } finally {
            childSpan.end();
          }
        });
        return result;
      } finally {
        parentSpan.end();
      }
    };

    async function processEvent(event: any): Promise<any> {
      // Process your event here
    }

This code tracks the execution flow using a parent span and a child span for business logic. The OpenTelemetry context import is aliased to otelContext so that it does not collide with the Lambda context parameter.

5. Test and Monitor Your Instrumented Lambda

Once your Lambda function is instrumented with OpenTelemetry, monitor the results through your backend (e.g., AWS X-Ray, CloudWatch, Prometheus). You’ll be able to visualize traces and metrics, such as invocation count, duration, and error rate.

6. Troubleshoot and Optimize

Use the collected telemetry data to identify performance issues, such as high latency or frequent errors. OpenTelemetry’s tracing capabilities help you pinpoint the exact step where things go wrong.
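As a rough illustration of what pinpointing a step means, the sketch below takes a list of finished child spans and finds the slowest one and the earliest failed one. The StepSpan shape and both function names are assumptions made for this example; tracing backends give you the same answer through their trace views.

```typescript
// Hypothetical, simplified shape of a finished child span.
interface StepSpan {
  name: string;
  startMs: number;
  endMs: number;
  failed: boolean;
}

// Returns the step with the longest duration, or undefined for an empty list.
function slowestStep(steps: StepSpan[]): StepSpan | undefined {
  let slowest: StepSpan | undefined;
  for (const step of steps) {
    if (
      slowest === undefined ||
      step.endMs - step.startMs > slowest.endMs - slowest.startMs
    ) {
      slowest = step;
    }
  }
  return slowest;
}

// Returns the failed step that started earliest, which is often the one
// closest to the root cause.
function firstFailedStep(steps: StepSpan[]): StepSpan | undefined {
  const failed = steps.filter((s) => s.failed);
  failed.sort((a, b) => a.startMs - b.startMs);
  return failed[0];
}
```

Pass only the child spans of a trace here; the root span always covers the full invocation and would otherwise always be the slowest.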

How to Load the OpenTelemetry SDK for Custom Instrumentation in AWS Lambda

While the OpenTelemetry Lambda Layer offers automatic instrumentation for your AWS Lambda functions, you might want more granular control over the telemetry data.

In such cases, you can manually load and configure the OpenTelemetry SDK. This approach allows you to create custom spans, define specific attributes, and export telemetry data to your preferred backend.

Step 1: Install OpenTelemetry SDK and Dependencies

First, you'll need to install the OpenTelemetry SDK packages and the necessary exporters. OpenTelemetry offers various packages to help you collect and export trace data.

To begin, add the required dependencies to your project using npm:

    npm install @opentelemetry/api @opentelemetry/sdk-trace-base @opentelemetry/sdk-trace-node @opentelemetry/exporter-trace-otlp-http

This installs the following:

- @opentelemetry/api: The core API for creating and managing traces.

- @opentelemetry/sdk-trace-base: The base SDK that handles trace data.

- @opentelemetry/sdk-trace-node: The Node.js SDK for server-side trace management.

- @opentelemetry/exporter-trace-otlp-http: An exporter that sends traces over OTLP/HTTP to a backend such as the OpenTelemetry Collector or another OTLP-compatible system.

Step 2: Initialize OpenTelemetry SDK

With the dependencies installed, you can initialize the OpenTelemetry SDK within your Lambda function. Below is an example of how to set up the OpenTelemetry SDK, create a custom tracer, and configure an OTLP exporter to send trace data to a backend.

Here’s the code to initialize OpenTelemetry:

    import { NodeTracerProvider } from '@opentelemetry/sdk-trace-node';
    import { SimpleSpanProcessor } from '@opentelemetry/sdk-trace-base';
    import { OTLPTraceExporter } from '@opentelemetry/exporter-trace-otlp-http';

    export function setupOpenTelemetry() {
      // Initialize the OpenTelemetry tracer provider
      const provider = new NodeTracerProvider();

      // Create the OTLP exporter to send traces to your backend (e.g., OpenTelemetry Collector).
      // OTLP over HTTP conventionally uses port 4318 and the /v1/traces path; 4317 is the gRPC port.
      const exporter = new OTLPTraceExporter({
        url: 'http://your-collector-url:4318/v1/traces', // Replace with your collector's endpoint
      });

      // Add the exporter to the provider with a simple span processor.
      // Note: addSpanProcessor is the SDK 1.x API; SDK 2.x takes spanProcessors in the constructor.
      provider.addSpanProcessor(new SimpleSpanProcessor(exporter));

      // Register the provider as the global tracer provider
      provider.register();
    }

Step 3: Custom Instrumentation in Your Lambda Function

Now that the OpenTelemetry SDK is set up, you can add custom instrumentation directly within your Lambda handler function. This will enable you to create spans for specific tasks or business logic you want to monitor.

Here’s an example of how to create custom spans in your Lambda handler:

    import { Context } from 'aws-lambda';
    // Alias the OpenTelemetry context so it does not clash with the Lambda context parameter
    import { trace, context as otelContext, SpanStatusCode } from '@opentelemetry/api';

    // Call setupOpenTelemetry() once at module load, before the first invocation
    const tracer = trace.getTracer('lambda-custom-example');

    export const handler = async (event: any, lambdaContext: Context): Promise<any> => {
      // Start a parent span for the Lambda function execution
      const parentSpan = tracer.startSpan('lambda_execution');
      try {
        // Add relevant attributes to the parent span
        parentSpan.setAttribute('aws.lambda.invocation_id', lambdaContext.awsRequestId);

        // Example of custom business logic wrapped in a child span
        const result = await otelContext.with(trace.setSpan(otelContext.active(), parentSpan), async () => {
          const childSpan = tracer.startSpan('process_event');
          try {
            const response = await processEvent(event);
            childSpan.setStatus({ code: SpanStatusCode.OK });
            return response;
          } catch (error) {
            // A caught value is not guaranteed to be an Error instance
            const message = error instanceof Error ? error.message : String(error);
            childSpan.setStatus({ code: SpanStatusCode.ERROR, message });
            throw error;
          } finally {
            childSpan.end();
          }
        });
        return result;
      } finally {
        parentSpan.end();
      }
    };

    async function processEvent(event: any): Promise<any> {
      // Your custom business logic here
    }

In this code:

- The parent span tracks the overall execution of the Lambda function.

- A child span is created to track a specific part of the business logic, such as processing the event.

- Attributes are added to the spans to provide context, such as the aws.lambda.invocation_id for unique identification.

Benefits of Custom Instrumentation

By loading the OpenTelemetry SDK directly and adding custom instrumentation, you can:

- Gain deeper visibility into your Lambda functions by tracking specific parts of your business logic.

- Add custom attributes to spans that are relevant to your use case, like tracking request IDs, user identifiers, or function-specific metrics.

- Send trace data to any backend that accepts OTLP, either directly or through the OpenTelemetry Collector.

Advanced Configurations and Best Practices

Local Testing Setup

Before deploying to AWS, you can test your Lambda function locally using the following setup:

    import { NodeTracerProvider } from '@opentelemetry/sdk-trace-node';
    import { SimpleSpanProcessor, ConsoleSpanExporter } from '@opentelemetry/sdk-trace-base';

    export function setupLocalTesting() {
      const provider = new NodeTracerProvider();
      // Print every finished span to stdout instead of sending it over the network
      const exporter = new ConsoleSpanExporter();
      provider.addSpanProcessor(new SimpleSpanProcessor(exporter));
      provider.register();
      return provider;
    }

This configuration exports trace data to the console for easy debugging.

Custom Sampling Strategy

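    import { ParentBasedSampler, TraceIdRatioBasedSampler } from '@opentelemetry/sdk-trace-base';

    const sampler = new ParentBasedSampler({
      // Sample 10% of traces that start in this function
      root: new TraceIdRatioBasedSampler(0.1),
      // Always continue traces whose remote parent was sampled
      remoteParentSampled: new TraceIdRatioBasedSampler(1),
    });
    // Pass the sampler when creating the provider: new NodeTracerProvider({ sampler })

This setup samples 10% of new (root) traces and always samples spans whose remote parent was sampled, so sampled traces stay complete across services.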
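To build intuition for how such a sampler reaches its decision, here is a simplified, dependency-free sketch. It is an illustration of the idea and not the SDK's exact algorithm: shouldSample, traceIdToUnitInterval, and ParentInfo are hypothetical names. The key property is that the decision is derived from the trace ID, so every service that sees the same trace ID with the same ratio decides the same way.

```typescript
// Hypothetical view of the parent span's sampling flag.
interface ParentInfo {
  sampled: boolean;
}

// Maps the first 8 hex characters of a trace ID to a number in [0, 1).
function traceIdToUnitInterval(traceId: string): number {
  return parseInt(traceId.slice(0, 8), 16) / 0x100000000;
}

// Parent-based decision: follow the parent when there is one,
// otherwise apply the ratio to the trace ID.
function shouldSample(traceId: string, ratio: number, parent?: ParentInfo): boolean {
  if (parent !== undefined) {
    return parent.sampled;
  }
  return traceIdToUnitInterval(traceId) < ratio;
}
```

Because the root decision depends only on the trace ID, repeated calls for the same trace never disagree, which keeps sampled traces whole.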

Error Handling Patterns

Here’s how to associate errors with spans:

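    import { Span } from '@opentelemetry/api';

    // Hypothetical error type for expected, business-level failures
    class CustomBusinessError extends Error {}

    function handleError(error: Error, span: Span) {
      span.setAttribute('error.type', error.name);
      span.setAttribute('error.message', error.message);

      if (error instanceof CustomBusinessError) {
        // Expected failure: record the category and skip the stack trace
        span.setAttribute('error.category', 'business_logic');
        return;
      }

      span.setAttribute('error.stack', error.stack || 'No stack trace available');
      span.setAttribute('error.category', 'system');
    }

Cost Considerations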

Running OpenTelemetry in production can incur costs. To optimize:

- Implement intelligent sampling to reduce the volume of traces.

- Use compression for trace data to reduce network transfer costs.

- Set appropriate retention periods for trace data.

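OpenTelemetry itself is free and open source; the costs come from the backend that stores and serves your telemetry. Illustrative figures, which vary by provider and Region, might include: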

- Trace Storage: ~$0.50 per GB

- API Calls: ~$0.30 per million traces

- Network Transfer: ~$0.09 per GB
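To see how sampling changes the bill, you can plug the illustrative rates above into a small estimate. This is a back-of-the-envelope sketch: the traffic numbers, the average trace size, and the names TraceVolume, Rates, and estimateMonthlyCost are all assumptions for the example, and real pricing should come from your provider.

```typescript
// Hypothetical description of monthly trace volume.
interface TraceVolume {
  invocationsPerMonth: number;
  sampleRatio: number; // fraction of invocations that produce a stored trace
  avgTraceBytes: number;
}

interface Rates {
  storagePerGb: number;
  perMillionTraces: number;
  transferPerGb: number;
}

// Illustrative rates taken from the list above, in US dollars.
const ILLUSTRATIVE_RATES: Rates = {
  storagePerGb: 0.5,
  perMillionTraces: 0.3,
  transferPerGb: 0.09,
};

// Estimates the monthly cost in dollars for the given volume and rates.
function estimateMonthlyCost(volume: TraceVolume, rates: Rates = ILLUSTRATIVE_RATES): number {
  const traces = volume.invocationsPerMonth * volume.sampleRatio;
  const gigabytes = (traces * volume.avgTraceBytes) / 1e9;
  const storage = gigabytes * rates.storagePerGb;
  const apiCalls = (traces / 1e6) * rates.perMillionTraces;
  const transfer = gigabytes * rates.transferPerGb;
  return storage + apiCalls + transfer;
}
```

For example, 100 million invocations per month at 10% sampling and 2,000 bytes per trace works out to 10 million traces and 20 GB, or roughly $14.80: about $10.00 for storage, $3.00 for API calls, and $1.80 for transfer. Sampling 100% of the same traffic would cost about ten times as much.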

Common Pitfalls

Be aware of these pitfalls when using OpenTelemetry with AWS Lambda:

- Memory Leaks: Ensure spans are properly ended to avoid memory leaks.

- Cold Start Impact: Minimize instrumentation in the global scope to reduce overhead.

- Missing Context: Ensure context is properly propagated, especially across asynchronous boundaries.
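One way to guard against the first pitfall is to centralize span cleanup in a small helper, so no code path can forget to end a span. The sketch below is a hypothetical helper written against a minimal EndableSpan interface rather than the OpenTelemetry Span type, so it runs without any dependencies; an OpenTelemetry span also satisfies this interface because it exposes end().

```typescript
// Minimal shape needed for cleanup; an OpenTelemetry Span has an end() method too.
interface EndableSpan {
  end(): void;
}

// Runs the work and always ends the span, whether the work resolves or throws.
async function withSpan<T>(span: EndableSpan, work: () => Promise<T>): Promise<T> {
  try {
    return await work();
  } finally {
    span.end();
  }
}
```

Because the finally block runs on both success and failure, the span is ended exactly once per call and errors still propagate to the caller.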

Conclusion

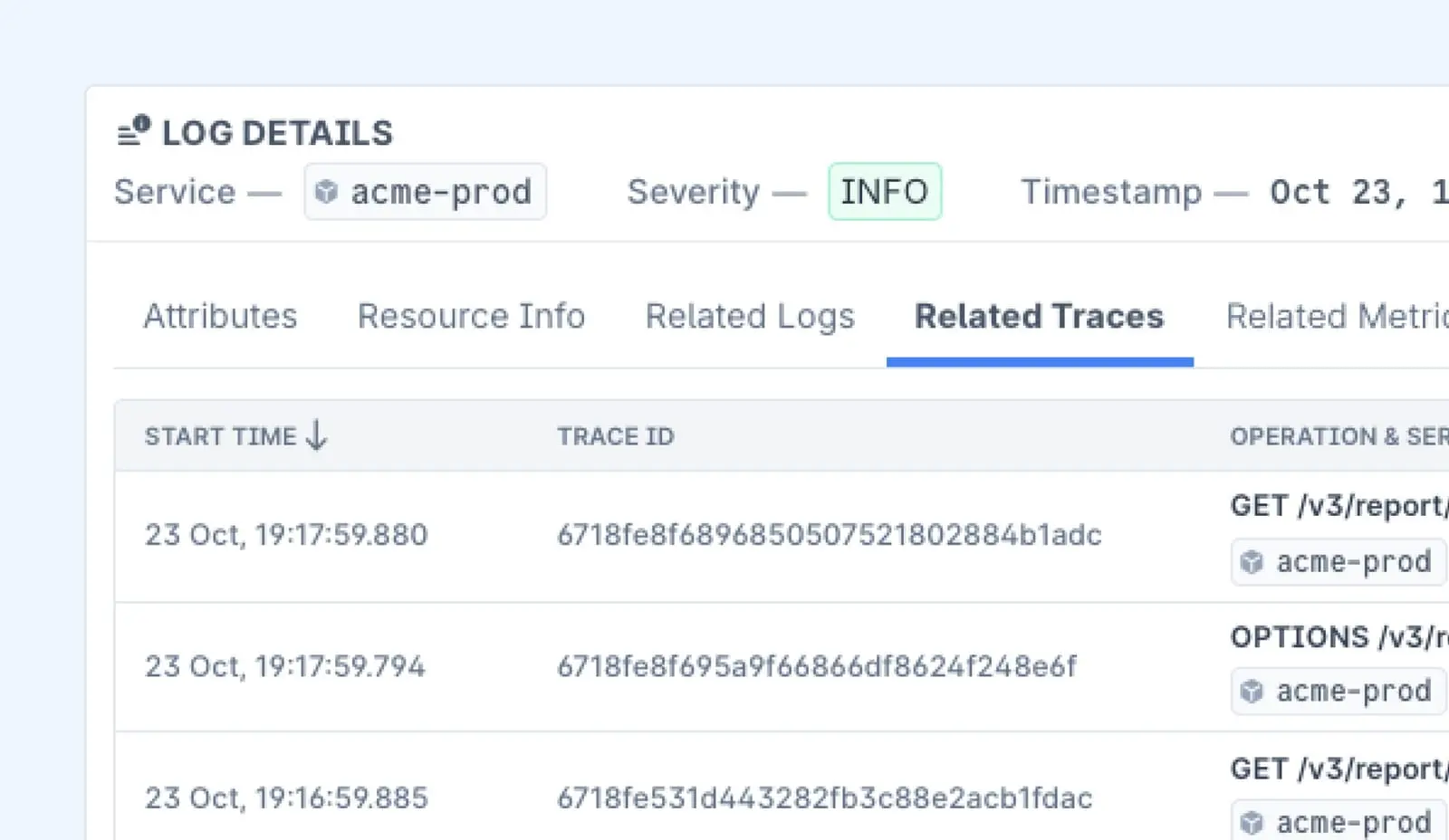

Instrumenting AWS Lambda functions with OpenTelemetry provides valuable insights into the performance and health of your serverless applications. With OpenTelemetry, you're not just collecting data – you're unlocking actionable insights to improve performance and reliability.

If you're seeking a managed observability solution, Last9 is fully compatible with OpenTelemetry and Prometheus. We bring logs, metrics, and traces into one platform, helping you reduce monitoring costs by 50-60% while enabling deeper visibility into your data.

Schedule a demo to learn more or try it for free!

FAQs

What is OpenTelemetry, and why should I use it with AWS Lambda?

OpenTelemetry is an open-source framework designed to standardize the collection of telemetry data, including traces, metrics, and logs. When used with AWS Lambda, it enables you to monitor and analyze your serverless applications by providing insights into function performance, request tracing, and error tracking. This improves observability, helping you identify bottlenecks, optimize performance, and troubleshoot issues effectively.

How does OpenTelemetry work with AWS Lambda?

OpenTelemetry integrates with AWS Lambda by instrumenting the Lambda function code. It collects telemetry data (like traces and logs) that are sent to an OpenTelemetry Collector. From there, the data can be exported to various monitoring backends, such as AWS CloudWatch, X-Ray, or a custom solution. This gives you a unified view of your serverless application’s performance and behavior.

Do I need to modify my Lambda function code to use OpenTelemetry?

Not necessarily. The OpenTelemetry Lambda Layer can instrument your function automatically without code changes. You only need to modify your code when you want custom instrumentation, which involves initializing a tracer, creating spans for your own operations, and exporting the telemetry data. In that case, make sure the necessary libraries and configurations are in place to capture the relevant data.

What are the main benefits of using OpenTelemetry in AWS Lambda?

The main benefits include:

- Improved observability: Monitor Lambda execution times, error rates, and request flows across distributed systems.

- Traceability: Capture request traces to understand how requests flow through different Lambda functions and other services.

- Error monitoring: Easily identify and troubleshoot errors with detailed logs and trace data.

- Custom sampling: Control the volume of trace data collected to balance performance and cost.

Can I use OpenTelemetry for both traces and logs in AWS Lambda?

Yes, OpenTelemetry can be used to collect both traces and logs in AWS Lambda. Traces allow you to track the flow of requests across services, while logs provide detailed event records, such as error messages and function outputs. Together, they provide a complete picture of your Lambda functions’ performance.

How do I set up OpenTelemetry with AWS Lambda?

To set up OpenTelemetry with AWS Lambda:

- Configure the necessary IAM permissions for X-Ray or other monitoring services.

- Add the OpenTelemetry Lambda Layer, or install the OpenTelemetry SDK in your Lambda function if you need custom instrumentation.

- Instrument your Lambda function code to create spans for different operations (e.g., function execution, event processing).

- Set up an OpenTelemetry Collector to export data to CloudWatch, X-Ray, or your custom monitoring solution.

What are the best practices for error handling with OpenTelemetry in AWS Lambda?

For effective error handling:

- Use spans to capture error details such as the error type, message, and stack trace.

- Set error status on spans to mark them as ERROR.

- For business-related errors, avoid including detailed stack traces, but capture error category information.

- Always ensure spans are closed in the finally block to avoid memory leaks.

How can I optimize costs when using OpenTelemetry in AWS Lambda?

To optimize costs:

- Implement intelligent sampling to collect only a subset of traces.

- Use compression for trace data to reduce the volume of data transferred.

- Set appropriate retention periods to ensure you're not storing trace data longer than needed.

- Consider managed services like AWS X-Ray if you prefer not to run your own trace storage and query infrastructure.

What are the common pitfalls when using OpenTelemetry in AWS Lambda?

- Memory leaks: Forgetting to end spans can cause memory leaks. Always end spans in the finally block.

- Cold start impact: Lambda cold starts can add overhead. Minimize global code execution to reduce initialization time.

- Missing context: Ensure that the telemetry context is propagated correctly, especially when using asynchronous operations in Lambda functions.

Can I test OpenTelemetry locally before deploying to AWS Lambda?

Yes, you can test OpenTelemetry locally by setting up a local tracing environment using a ConsoleSpanExporter to output trace data to the console. This allows you to debug and validate your instrumentation before deploying to AWS Lambda.

Does OpenTelemetry support all AWS Lambda runtimes?

OpenTelemetry supports the most common AWS Lambda runtimes, including Node.js, Python, Java, Go, and others. You just need to install the SDK or layer that matches the runtime you're using.

How does OpenTelemetry impact Lambda function performance?

OpenTelemetry adds some overhead due to the additional work involved in tracing and logging. However, you can minimize this overhead by using efficient sampling strategies and reducing the complexity of your instrumentation code. In most cases, the benefits of improved observability outweigh the minor performance impact.

Can I use OpenTelemetry with other AWS services besides Lambda?

Yes, OpenTelemetry is designed to work across many cloud services, not just Lambda. It can be integrated with services like AWS API Gateway, SQS, DynamoDB, and more, enabling you to trace requests across your entire AWS ecosystem.