Databases are at the heart of so many systems, but let’s face it, they can also be the source of headaches, especially when traffic surges. Optimizing them isn’t some mystical art; it’s a practical way to keep things running smoothly and avoid frustrating slowdowns.

Over the years, I’ve learned that with the right tools and strategies, you can turn a sluggish database into a high-performance engine. Let’s explore some tried-and-true techniques to help you keep up with even the busiest workloads.

8 Core Database Optimization Strategies

1. Indexing: Don’t Just Add, Optimize

When it comes to optimizing databases, indexing is where many developers start, and for good reason.

Indexes are crucial for speeding up read operations by creating a data structure that allows the database engine to quickly locate data.

But here's the catch: just throwing indexes on everything will eventually lead to performance problems. Too many indexes can slow down write operations and consume more disk space.

Optimizing Indexes:

- Choose Columns Wisely: Focus on columns that are frequently used in WHERE, JOIN, and ORDER BY clauses. These are the real performance killers when left unindexed.

- Composite Indexes: If you often query multiple columns together, a composite index can significantly boost performance. For example, if you’re frequently filtering on date and user_id, a single index on both columns is usually far more efficient than two separate indexes. Column order matters: put the column you filter by equality (here, user_id) first and the range column (date) second.

- Remove Unused Indexes: Regularly audit your indexes. Over time, some indexes may become obsolete, consuming unnecessary resources. Keep things lean by removing any indexes that aren’t contributing to query performance.
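To make the composite-index idea concrete, here is a minimal sketch. It assumes SQLite (from Python's standard library) as a stand-in for your production database, and the `events` table, its columns, and the index name are made up for illustration. It builds one index on `(user_id, date)` and asks the planner how it would run a query that filters on both columns.

```python
import sqlite3

# In-memory SQLite stands in for the production database (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, date TEXT, payload TEXT)"
)
conn.executemany(
    "INSERT INTO events (user_id, date, payload) VALUES (?, ?, ?)",
    [(i % 100, f"2024-01-{(i % 28) + 1:02d}", "x") for i in range(5000)],
)

# One composite index covering both filter columns; the equality column goes first.
conn.execute("CREATE INDEX idx_events_user_date ON events (user_id, date)")


def query_plan(connection, sql, params=()):
    """Return the human-readable detail column of EXPLAIN QUERY PLAN."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


plan = query_plan(
    conn,
    "SELECT payload FROM events WHERE user_id = ? AND date >= ?",
    (42, "2024-01-15"),
)
print(plan)
```

The printed plan should name `idx_events_user_date`. The exact wording differs between SQLite versions, and other engines have their own plan formats, so treat the output as illustrative.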

2. Query Optimization: Efficiency Is Key

You can have the fastest hardware and most powerful indexing strategies, but if your queries are inefficient, your database will still struggle. Query optimization isn’t just about making things run faster; it's about making them run smarter.

Query Optimization Tips:

- Avoid SELECT *: Fetching all columns may seem like a shortcut, but it transfers data you never use and can prevent the database from answering a query from an index alone. Select only the columns you need.

- EXPLAIN Command: Use the EXPLAIN command to see how your database executes queries. This can help you spot issues like full table scans, which could be avoided with proper indexing or query adjustments.

- Optimize Joins: Ensure that the columns used for joins are indexed. A JOIN on an unindexed column is a recipe for disaster, especially with large tables.

- Use Subqueries Sparingly: While subqueries can be convenient, some of them (correlated subqueries in particular) lead to inefficient execution plans. Where a plan looks expensive, try rewriting the subquery as a join or a common table expression (CTE) and compare the two plans before committing to either.
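Here is a small sketch of the EXPLAIN workflow described above. It again assumes SQLite as a stand-in (its command is `EXPLAIN QUERY PLAN`), and the `orders` table and the `has_full_scan` helper are hypothetical names for this example. The helper flags any plan step that scans a table, and the demo checks the same query before and after adding an index.

```python
import sqlite3


def has_full_scan(connection, sql, params=()):
    """True if any step of the plan scans a table instead of searching an index."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return any(row[3].startswith("SCAN") for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 50, float(i)) for i in range(1000)],
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"

scan_before = has_full_scan(conn, query, (7,))   # no index yet: table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
scan_after = has_full_scan(conn, query, (7,))    # index available: index search

print(scan_before, scan_after)
```

A check like this can run in a test suite so that a query that quietly regresses to a full scan gets noticed early.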

3. Sharding: Scaling Horizontally

As traffic grows, a single server eventually runs out of headroom, and horizontal scaling through database sharding often becomes necessary. Sharding is one of the most effective ways to handle large amounts of data without overwhelming a single server. Instead of putting all your data in one database, you split it across multiple databases or nodes.

Best Practices for Sharding:

- Choosing the Right Shard Key: The shard key is a critical decision. If you choose a key whose values aren’t evenly distributed (e.g., a region column when most of your users live in one region), you’ll end up with uneven data distribution, causing some nodes to bear the brunt of the load.

- Data Distribution: Make sure to distribute the data evenly across shards. A shard that holds too much data will become a bottleneck, so aim for balance across your servers.

- Avoid Cross-Shard Joins: Cross-shard joins are expensive because they require data from multiple shards, which can lead to network overhead. Try to design your schema and queries to minimize these operations.
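One common way to get an even spread is to hash the shard key. The sketch below is an illustration under assumptions, not a production router: `shard_for` and `SHARD_COUNT` are hypothetical names, and plain modulo hashing is used for simplicity even though it forces most keys to move when the shard count changes.

```python
import hashlib
from collections import Counter


def shard_for(key, shard_count):
    """Map a shard key to a shard number using a stable hash.

    hashlib is used instead of the built-in hash() because hash() of a
    string changes between Python processes.
    """
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


SHARD_COUNT = 4  # hypothetical cluster size

# Route 10,000 sequential user IDs and count how many land on each shard.
distribution = Counter(shard_for(user_id, SHARD_COUNT) for user_id in range(10_000))
print(sorted(distribution.items()))
```

Each shard should receive roughly a quarter of the keys. Running the same count against your real key values is a cheap way to spot a skewed shard key before it reaches production.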

4. Caching: Boost Performance and Reduce Load

When you cache data, you're essentially putting frequently accessed data in a memory store (like Redis or Memcached) to avoid hitting the database repeatedly. This can dramatically reduce the load on your database and improve response times.

Effective Caching Strategies:

- Cache Query Results: If certain queries are run frequently (like product info on an e-commerce site), cache the results for a set period of time to prevent repetitive database hits.

- Cache Full Pages: For high-traffic pages that don’t change often, consider caching entire page outputs to minimize database queries altogether.

- Cache Invalidation: Implement cache invalidation strategies to ensure that your cache doesn’t serve outdated data. For example, cache expiration policies or event-based invalidation when data changes.
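The query-result caching and invalidation ideas above can be sketched in a few lines. This is a minimal in-process version that assumes a plain dictionary in place of Redis or Memcached; `TTLCache` and `load_product` are hypothetical names for this example.

```python
import time


class TTLCache:
    """Tiny in-process cache with expiry; a stand-in for Redis or Memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        entry = self._store.get(key)
        if entry is not None and entry[0] > self.clock():
            return entry[1]                      # cache hit
        value = loader(key)                      # miss or expired: query the database
        self._store[key] = (self.clock() + self.ttl, value)
        return value

    def invalidate(self, key):
        """Event-based invalidation: call this when the underlying row changes."""
        self._store.pop(key, None)


db_hits = []


def load_product(product_id):
    db_hits.append(product_id)                   # pretend this is a slow SQL query
    return {"id": product_id, "name": f"product-{product_id}"}


cache = TTLCache(ttl_seconds=60)
cache.get_or_load(1, load_product)               # miss: hits the database
cache.get_or_load(1, load_product)               # hit: served from memory
cache.invalidate(1)                              # e.g. right after an UPDATE
cache.get_or_load(1, load_product)               # miss again: reloaded
print(len(db_hits))
```

Three lookups cost two database hits here. The injectable `clock` is a design choice that makes expiry easy to test without sleeping.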

5. Database Configuration Tuning: Customization for Your Needs

Most databases come with default settings, but those are often geared towards general use cases, not high-traffic systems. Tuning your database configuration can help tailor it to your specific workload and traffic patterns.

Key Configuration Adjustments:

- Buffer Pool Size: The buffer pool is where your database stores frequently accessed data. Increasing the buffer pool size allows for more data to be cached in memory, which can reduce disk reads and improve query performance.

- Connection Pooling: Database connection overhead can be a huge bottleneck. Use connection pooling to reuse existing connections, instead of creating a new one for every query.

- Transaction Isolation Levels: Different workloads require different isolation levels. Lower isolation levels can improve performance by allowing more concurrency, but they come at the cost of potential consistency issues. Evaluate your needs carefully.
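To show what connection pooling does under the hood, here is a deliberately small sketch. In practice you would normally use the pool that ships with your driver or framework; `ConnectionPool` below is a hypothetical class, and SQLite connections stand in for real database connections.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool: connections are opened once and handed out repeatedly."""

    def __init__(self, size, database=":memory:"):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # Note: each ":memory:" connection is its own separate database.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout=5.0):
        conn = self._idle.get(timeout=timeout)   # waits if the pool is exhausted
        try:
            yield conn
        finally:
            self._idle.put(conn)                 # return to the pool, do not close


pool = ConnectionPool(size=2)
seen = set()
for _ in range(10):
    with pool.connection() as conn:
        conn.execute("SELECT 1").fetchone()
        seen.add(id(conn))

print(len(seen))
```

Ten queries are served by only two underlying connections, which is the whole point: the cost of opening a connection is paid once per pool slot rather than once per query.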

6. Routine Maintenance: Automate the Boring Stuff

Keeping your database optimized requires regular maintenance. From rebuilding fragmented indexes to updating statistics, these tasks can take up a lot of your time. Thankfully, you can automate most of them.

Maintenance Automation:

- Index Rebuilding: Over time, indexes can become fragmented, which slows down read performance. Set up a routine to rebuild or reorganize indexes periodically.

- Update Database Statistics: The query planner relies on up-to-date statistics to generate efficient query execution plans. Automate the updating of statistics to ensure that the planner has the best data to work with.

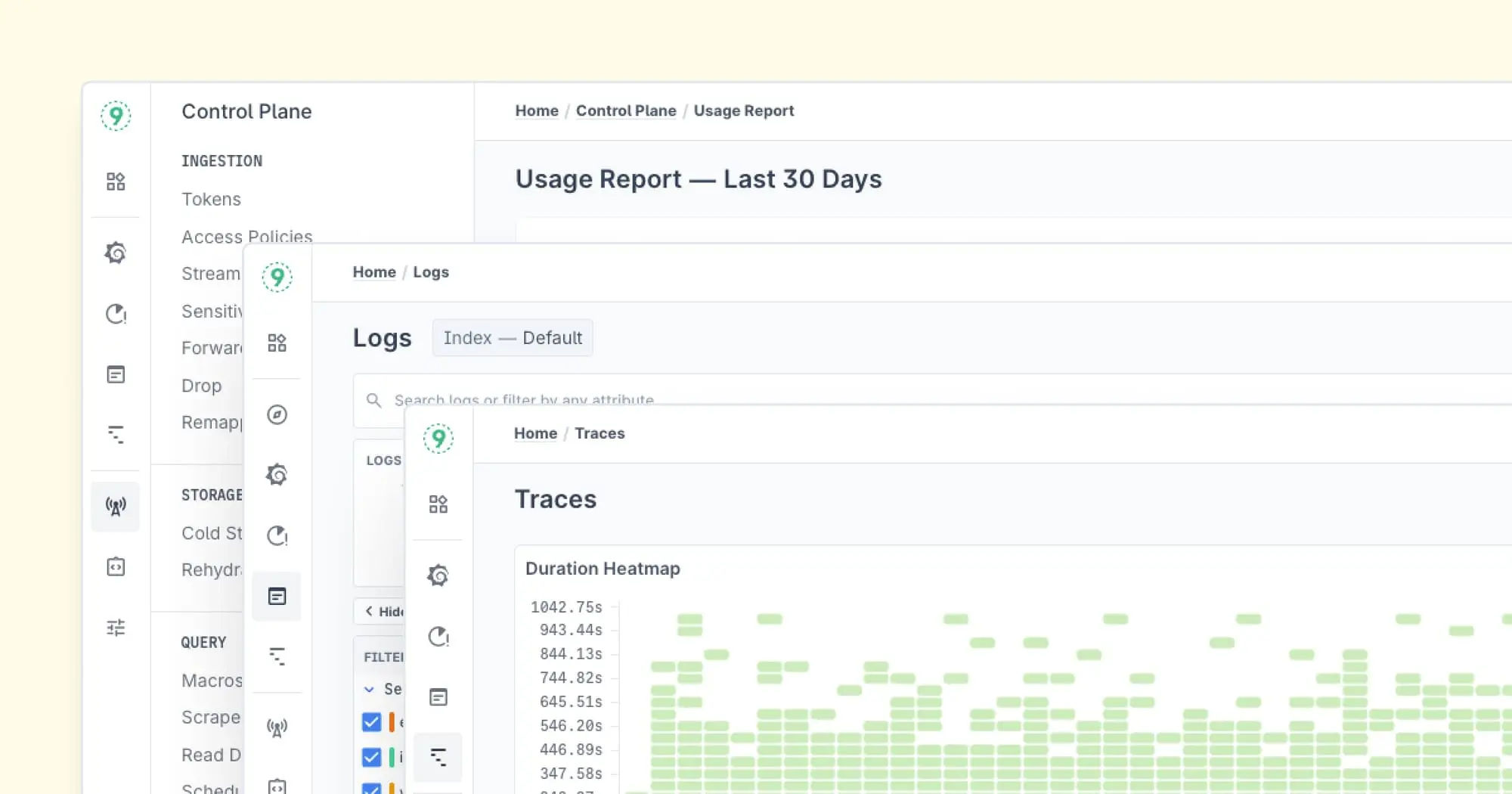

- Health Monitoring: Use tools like Prometheus, Grafana, or OpenTelemetry to continuously monitor the health of your database. These tools can alert you to issues before they escalate into serious problems.
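A maintenance job can be as simple as a function you run from whatever scheduler you already use. The sketch below assumes SQLite, where `REINDEX` rebuilds indexes and `ANALYZE` refreshes planner statistics. Other engines have their own equivalents, so check your database's documentation for the exact commands. The table and index names are illustrative.

```python
import sqlite3


def run_maintenance(connection):
    """Rebuild indexes and refresh the statistics used by the query planner."""
    connection.execute("REINDEX")
    connection.execute("ANALYZE")
    connection.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
conn.executemany(
    "INSERT INTO orders (customer_id) VALUES (?)",
    [(i % 10,) for i in range(500)],
)
conn.commit()

run_maintenance(conn)

# SQLite stores the gathered statistics in the sqlite_stat1 table.
stats = conn.execute("SELECT tbl, idx, stat FROM sqlite_stat1").fetchall()
print(stats)
```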

7. Database Scaling: Prepare for Growth

Scaling your database is inevitable, especially if you're dealing with high traffic. There are two primary ways to scale: vertical scaling (more power to a single server) and horizontal scaling (adding more servers).

Scaling Strategies:

- Vertical Scaling: This is useful for small to medium-sized databases. Simply adding more CPU, RAM, and storage to a single server can help handle increased traffic and data.

- Horizontal Scaling: For larger systems, horizontal scaling is essential. This involves distributing data across multiple servers, improving both performance and availability. This is where sharding comes into play.

- Read Replicas: For read-heavy applications, you can use read replicas to offload read traffic from the primary database. This ensures that the main database isn’t overwhelmed by too many read requests.
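Read replicas only help if the application actually sends reads to them. Here is a minimal routing sketch; `ReplicaRouter` and the server names are hypothetical, and the "starts with SELECT" check is a simplification that a real router would need to refine (for example, reads that must see a write you just made should still go to the primary, because replicas can lag).

```python
import itertools


class ReplicaRouter:
    """Send reads to replicas in round-robin order and everything else to the primary."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def target_for(self, sql):
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReplicaRouter("primary", ["replica-1", "replica-2"])
targets = [
    router.target_for(statement)
    for statement in (
        "SELECT name FROM products WHERE id = 1",
        "UPDATE products SET price = 10 WHERE id = 1",
        "SELECT id FROM orders WHERE customer_id = 7",
        "SELECT 1",
    )
]
print(targets)
```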

8. Backups and Disaster Recovery: Plan for the Worst

All the optimization in the world won’t help if your data is lost or corrupted. Regular backups and a solid disaster recovery plan are essential components of a solid database strategy.

Backup Best Practices:

- Automated Backups: Set up automated backups to ensure you never miss a critical backup window.

- Backup Testing: Regularly test your recovery process to ensure that your backups are valid and that you can restore your data quickly in case of a failure.

- Off-Site Storage: Store backups off-site or in a different region to protect against localized disasters.
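Backup testing is the step most often skipped, so here is a small sketch of what "test your recovery process" can look like. It assumes SQLite and its online backup API (`Connection.backup`, available since Python 3.7); the `customers` table and the `backup_and_verify` helper are made up for this example. The same shape applies to other engines: take the backup, restore it somewhere separate, and check it.

```python
import sqlite3


def backup_and_verify(source):
    """Copy the database, then check the copy's integrity and row count."""
    target = sqlite3.connect(":memory:")         # a scratch location for the restore
    source.backup(target)
    integrity = target.execute("PRAGMA integrity_check").fetchone()[0]
    source_rows = source.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    restored_rows = target.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    return target, integrity == "ok" and source_rows == restored_rows


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany("INSERT INTO customers (name) VALUES (?)", [("a",), ("b",), ("c",)])
source.commit()

restored, ok = backup_and_verify(source)
print(ok)
```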

Performance Testing and Benchmarking for Databases

Performance testing isn’t about randomly firing off queries and timing them. It’s about understanding how your database behaves under different conditions, and that requires a systematic approach. Let’s break it down:

Establishing Performance Baselines

Before you can optimize, you need a baseline. Think of it as a performance "snapshot" of your database at its best (or at least, its average). Here’s how to capture it:

- Measure during typical workloads: Make sure you're testing real, everyday usage.

- Document peak usage: Because, let’s face it, everyone has a crazy busy time.

- Record resource utilization: Track how your database uses CPU, memory, and I/O.

- Capture query plans: Especially for the big hitters (those queries you just can’t avoid).

Key metrics to include:

- Queries per second (average and peak)

- Response times for crucial queries

- Resource usage (CPU, memory, I/O)

- Cache hit ratios

- Connection pool utilization
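Several of these metrics can be computed from nothing more than a list of query timings. The following sketch shows one way to do it; `summarize_baseline` and `cache_hit_ratio` are hypothetical helpers, and the nearest-rank method for the 95th percentile is one of several reasonable definitions.

```python
import statistics


def summarize_baseline(latencies_ms, window_seconds):
    """Summarize query timings collected over a measurement window."""
    ordered = sorted(latencies_ms)
    # Nearest-rank 95th percentile, computed with integer math: ceil(0.95 * n).
    rank = (95 * len(ordered) + 99) // 100
    return {
        "queries_per_second": len(ordered) / window_seconds,
        "avg_ms": statistics.fmean(ordered),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
    }


def cache_hit_ratio(hits, misses):
    """Fraction of lookups served from cache; 0.0 when there were no lookups."""
    total = hits + misses
    return hits / total if total else 0.0


# Example: 100 queries taking 1..100 ms, observed over a 10-second window.
baseline = summarize_baseline(list(range(1, 101)), window_seconds=10)
print(baseline)
print(cache_hit_ratio(hits=900, misses=100))
```

Storing the resulting dictionary alongside the date and workload description gives you something concrete to compare against after each tuning change.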

Load Testing Strategy

You want to stress-test your database, but not in an unrealistic way. The goal is to simulate real-world scenarios rather than just throwing queries at it. Focus on what your database is actually going to face in production:

- Daily usage patterns: Think about your average day. When do users typically log in, and what types of queries are they running? Make sure your test accounts for peak traffic during regular hours.

- Seasonal peaks: Some databases face major traffic spikes at certain times of the year (like holidays, Black Friday, or product launches). You’ll want to test for these extreme load conditions to ensure the system can handle it without collapsing.

- Data growth projections: How will your database handle an increase in data volume over time? Make sure to simulate data growth to test how performance holds up when your database scales.

- Concurrent user behavior: Multiple users performing different actions at the same time can strain a database. Consider different scenarios like multiple users making purchases, updating records, or querying complex data sets simultaneously.

- Mixed workload scenarios: In reality, databases often need to juggle different types of operations at once, such as:

  - Product searches

  - Cart updates

  - Order processing

  - Inventory checks

Simulating this "mixed bag" of database activity can give you a more realistic understanding of performance under heavy load.
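A mixed workload is easy to generate once you decide on the proportions. The sketch below is only a starting point: the operation names and weights in `WORKLOAD_MIX` are placeholders that you would replace with ratios measured from your own production traffic, and a real load test would execute a query for each operation instead of just counting them.

```python
import random
from collections import Counter

# Hypothetical operation mix (relative weights); replace with measured ratios.
WORKLOAD_MIX = {
    "product_search": 60,
    "cart_update": 20,
    "order_processing": 10,
    "inventory_check": 10,
}


def generate_workload(total_operations, seed=0):
    """Return a reproducible, randomly interleaved list of operation names."""
    rng = random.Random(seed)                    # seeded so test runs are repeatable
    names = list(WORKLOAD_MIX)
    weights = list(WORKLOAD_MIX.values())
    return rng.choices(names, weights=weights, k=total_operations)


operations = generate_workload(10_000)
print(Counter(operations).most_common())
```

Seeding the generator is a deliberate choice: when a load test exposes a problem, you can replay exactly the same sequence after the fix.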

Schema Design Optimization

Schema design isn’t just some afterthought – it’s foundational to performance. The right decisions upfront can save you from a world of pain later. Here are some tips to keep in mind:

Normalization Trade-offs

Normalization is great for reducing data redundancy, but sometimes performance takes a hit. Consider this real-world example:

- In a content management system (CMS), storing article tags in a separate table (normalized) requires joins every time you fetch article data. But if tags don’t change often and are needed alongside articles, denormalization might be the way to go.

Example of normalized schema:

```sql
-- Normalized (requires join)
SELECT a.*, GROUP_CONCAT(t.tag_name)
FROM articles a
JOIN article_tags at ON a.id = at.article_id
JOIN tags t ON at.tag_id = t.id
GROUP BY a.id;
```

Example of denormalized schema:

```sql
-- Denormalized (faster reads)
SELECT * FROM articles WHERE JSON_CONTAINS(tags, '"technology"');
```

Temporal Data Management

Dealing with time-sensitive data? It’s tricky. Here’s how you can tackle it:

- Partition by time ranges: For logging systems or time-series data.

- Rolling window tables: Keep your data fresh.

- Archival strategies: Know when and how to archive older data.

- Point-in-time recovery: Because stuff happens, and you’ll want to recover.

Example of partitioning by time for logs:

```sql
CREATE TABLE logs (
    -- MySQL only accepts UNIX_TIMESTAMP() as a partitioning function on TIMESTAMP columns
    timestamp TIMESTAMP NOT NULL,
    level VARCHAR(10),
    message TEXT
) PARTITION BY RANGE (UNIX_TIMESTAMP(timestamp)) (
    PARTITION p_2023_01 VALUES LESS THAN (UNIX_TIMESTAMP('2023-02-01 00:00:00')),
    PARTITION p_2023_02 VALUES LESS THAN (UNIX_TIMESTAMP('2023-03-01 00:00:00'))
);
```
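Writing partition clauses by hand gets tedious and error-prone once you need one per month. As a sketch, a small helper can generate them in the same naming style as the logs example; `monthly_partitions` is a hypothetical function, and its output is plain text that you would paste into (or template into) your own DDL.

```python
from datetime import date


def monthly_partitions(first_year, first_month, count):
    """Build one PARTITION clause per month, each bounded by the next month's start."""
    clauses = []
    year, month = first_year, first_month
    for _ in range(count):
        next_year, next_month = (year + 1, 1) if month == 12 else (year, month + 1)
        upper_bound = date(next_year, next_month, 1).isoformat()
        clauses.append(
            f"PARTITION p_{year}_{month:02d} VALUES LESS THAN "
            f"(UNIX_TIMESTAMP('{upper_bound} 00:00:00'))"
        )
        year, month = next_year, next_month
    return clauses


# Three months starting in November 2023, crossing the year boundary.
print(",\n".join(monthly_partitions(2023, 11, 3)))
```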

Cloud-Specific Optimizations

Cloud databases are a bit like a buffet – there are lots of options, but picking the right one can get a little tricky.

Here are some tips for optimizing your database when it’s running in the cloud:

Multi-AZ Considerations

Running your database across multiple availability zones (AZs)? Great! Just make sure to keep an eye on:

- Replication Lag: You don’t want your data to get out of sync.

- Implement lag monitoring with alerts.

- Set automatic failover thresholds for high availability.

- Optimize cross-zone latency for better performance.
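The monitoring and threshold points above boil down to a small decision rule. Here is one possible sketch; `evaluate_lag` is a hypothetical helper and the default thresholds (5 seconds to alert, 30 seconds sustained over three samples to recommend failover) are illustrative numbers, not recommendations. Managed cloud databases usually expose replication lag as a metric you can feed into logic like this.

```python
def evaluate_lag(samples_seconds, warn_after=5.0, failover_after=30.0, sustained=3):
    """Classify replication lag from a list of recent samples (oldest first)."""
    recent = samples_seconds[-sustained:]
    # Only recommend failover when lag stays high, so one spike does not trigger it.
    if len(recent) == sustained and all(lag >= failover_after for lag in recent):
        return "failover"
    if recent and recent[-1] >= warn_after:
        return "alert"
    return "ok"


print(evaluate_lag([0.2, 0.4, 0.3]))
print(evaluate_lag([1.0, 2.0, 8.0]))
print(evaluate_lag([40.0, 45.0, 50.0]))
```

Requiring several consecutive bad samples before failing over is a design choice that trades a slightly slower reaction for fewer unnecessary failovers.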

Storage Configuration

Different types of storage fit different workloads:

- General Purpose SSD: Great for most use cases.

- Provisioned IOPS: For high-performance, I/O-heavy workloads.

- Magnetic Storage: For data that’s rarely accessed but that you still need to keep.

Cost Optimization Strategies

Cloud databases can rack up a bill if you're not careful. Here are a few ways to keep costs in check:

- Instance Right-sizing: Adjust the instance size based on actual usage.

- Auto-scaling: Use it when traffic’s unpredictable.

- Reserved instances: Great for steady workloads.

Storage Management

- Data lifecycle policies: Clean up older data automatically.

- Appropriate storage tiers: Use faster storage for frequently accessed data and cheaper options for infrequent access.

- Compression: Compress data when it makes sense to save storage costs.
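A data lifecycle policy can start out as a scheduled cleanup job. The sketch below assumes SQLite as a stand-in and a hypothetical `audit_log` table with an ISO-formatted `created_at` column. It deletes expired rows in small batches so that a large purge does not hold one long-running transaction.

```python
import sqlite3


def purge_expired(connection, cutoff_iso, batch_size=1000):
    """Delete rows older than the cutoff in batches; return how many were removed."""
    removed = 0
    while True:
        cursor = connection.execute(
            "DELETE FROM audit_log WHERE id IN ("
            "  SELECT id FROM audit_log WHERE created_at < ? LIMIT ?)",
            (cutoff_iso, batch_size),
        )
        connection.commit()                      # keep each batch's transaction short
        if cursor.rowcount == 0:
            return removed
        removed += cursor.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE audit_log (id INTEGER PRIMARY KEY, created_at TEXT)")
conn.executemany(
    "INSERT INTO audit_log (created_at) VALUES (?)",
    [("2022-06-01",)] * 2500 + [("2024-06-01",)] * 500,
)
conn.commit()

removed = purge_expired(conn, "2023-01-01")
remaining = conn.execute("SELECT COUNT(*) FROM audit_log").fetchone()[0]
print(removed, remaining)
```

If the old rows still have value, the same loop can copy each batch to cheaper storage before deleting it.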

Service Mesh Integration

If your database is running within a service mesh, things get a little more complicated. But there are plenty of opportunities to fine-tune performance:

Traffic Management

Service mesh proxies (like Envoy) can add overhead, but you can optimize their impact:

- Connection Pool Management: Ensure pool sizes match your usage.

- Circuit Breakers: Prevent a broken database from bringing everything down.

- Request Routing: Define retry policies, timeouts, and load-balancing strategies.
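If you have not worked with circuit breakers before, this application-level sketch shows the behavior a mesh proxy gives you at the network layer. `CircuitBreaker`, `CircuitOpenError`, and the thresholds are hypothetical and simplified: after a set number of consecutive failures the breaker rejects calls immediately, then allows a trial call once a cool-down has passed.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("database circuit is open")
            self.opened_at = None                # half-open: allow one trial call
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()    # open (or re-open) the circuit
            raise
        self.failures = 0                        # success closes the circuit
        return result


now = [0.0]                                      # fake clock so the demo runs instantly
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])


def failing_query():
    raise ConnectionError("database unavailable")


def attempt(operation):
    try:
        return breaker.call(operation)
    except CircuitOpenError:
        return "rejected"
    except ConnectionError:
        return "failed"


outcomes = [attempt(failing_query), attempt(failing_query), attempt(lambda: "row")]
now[0] = 11.0                                    # cool-down has elapsed
outcomes.append(attempt(lambda: "row"))
print(outcomes)
```

The third call is rejected without touching the database at all, which is what protects a struggling database from a pile-up of retries.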

Here’s an example of how you might configure traffic management for database calls with Envoy:

```yaml
clusters:
- name: primary_db
  connect_timeout: 0.25s
  type: STRICT_DNS
  lb_policy: ROUND_ROBIN
  # Endpoint definitions (load_assignment) omitted for brevity
  circuit_breakers:
    thresholds:
    - max_connections: 1024
      max_pending_requests: 1024
      max_requests: 1024
```

Conclusion:

Database optimization is not a one-time task; it’s a continuous process. The more you monitor and adjust, the better your database will perform, even during periods of high traffic.

From indexing and query optimization to scaling and caching, each technique plays a role in keeping things running smoothly. It may take time and a bit of trial and error, but the results are well worth the effort.