In the microservices and containerization space, Kubernetes is the go-to platform for managing and orchestrating applications at scale. However, Kubernetes introduces complexity in monitoring, especially as applications grow and become distributed.

But what exactly is observability in the context of Kubernetes? Observability refers to the ability to measure and understand the internal states of a system based on external outputs such as metrics, logs, and traces.

With Kubernetes, observability helps DevOps teams monitor the health of clusters, identify service failures, and make data-driven decisions to optimize performance.

This post explores the key elements of Kubernetes observability and provides insights on the best practices and tools to implement it effectively in your environment.

What is Kubernetes Observability?

At its core, Kubernetes observability involves tracking the health and performance of your Kubernetes clusters through three kinds of data: metrics, logs, and traces.

- Metrics provide quantitative data, offering insights into things like CPU and memory usage, request latency, and error rates. They are essential for understanding the performance of containers and services in real-time.

- Logs give you access to detailed records about events and activities in your Kubernetes clusters, often generated by the applications running in the containers. These logs help you track individual events and pinpoint the exact cause of issues.

- Traces are a relatively new concept, allowing you to track how requests move across the services in a distributed system. Tracing lets you visualize the path a request takes, helping you identify where delays and failures occur.
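To make the three signal types concrete, here is a minimal sketch of one request producing all three. The in-memory `request_count`, `log_lines`, and `spans` containers and the `handle_request` function are hypothetical stand-ins for real metrics, logging, and tracing backends; the point is only that a shared trace ID lets you join a log line to its span.

```python
import json
import time
import uuid
from collections import Counter

# Hypothetical in-memory stand-ins for a metrics backend, a log stream, and a trace store.
request_count = Counter()
log_lines = []
spans = []


def handle_request(service: str, route: str, status: int, duration_s: float) -> str:
    """Record one request as a metric, a log line, and a span sharing a trace ID."""
    trace_id = uuid.uuid4().hex

    # Metric: an aggregate count keyed by a small, bounded set of labels.
    request_count[(service, route, status)] += 1

    # Log: a detailed, structured record of this single event.
    log_lines.append(json.dumps({
        "ts": time.time(), "service": service, "route": route,
        "status": status, "trace_id": trace_id,
    }))

    # Trace: a timed span that can be joined to the log line through trace_id.
    spans.append({"trace_id": trace_id, "name": f"{service} {route}",
                  "duration_s": duration_s})
    return trace_id
```

The metric answers "how many and how often", the log line answers "what exactly happened", and the span answers "where did the time go".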

Unlike traditional monitoring, where tools primarily alert you to failures or performance degradation, observability tools enable you to answer deeper questions:

- What’s happening right now?

- Why is it happening?

With this knowledge, teams can proactively manage their Kubernetes clusters, reduce downtime, and improve the overall user experience.

Why Kubernetes Observability Matters

High Availability and Performance

As your organization scales and adopts a microservices architecture, maintaining high availability becomes increasingly challenging.

Without proper observability, it's difficult to pinpoint why a service is underperforming, especially when multiple microservices are involved.

Collecting and analyzing metrics, logs, and traces lets teams see how each service is performing and keep it resilient and available even under load.

Troubleshooting Complex Issues

Kubernetes is dynamic: containers are spun up and down, pods are scheduled across different nodes, and services interact in unexpected ways. This makes troubleshooting difficult, especially when issues arise intermittently or across multiple services.

Observability allows you to track failures in real-time, understand the root cause, and prevent them from becoming recurring problems. With proper logging and tracing, teams can identify whether issues are due to infrastructure failures, application bugs, or configuration problems.

Optimizing Resource Usage and Scaling

Kubernetes allows for dynamic scaling based on demand, but without observability, it's hard to know whether the scaling is happening effectively.

Monitoring the right metrics, such as CPU usage, memory consumption, and pod utilization, helps teams spot underused resources, fine-tune scaling policies, and cut down on wasted infrastructure costs.

Moreover, observability helps predict resource demands and prevent over-provisioning, ensuring a cost-effective solution while maintaining system performance.
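As a rough illustration of spotting over-provisioned workloads, the sketch below compares observed CPU usage with the CPU request of each pod. The `PodUsage` type, the pod names, the numbers, and the 30% threshold are all assumptions made for the example; in practice the inputs would come from your metrics backend.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    cpu_request_millicores: int
    cpu_used_millicores: int


def overprovisioned(pods, threshold=0.3):
    """Return names of pods using less than `threshold` of their CPU request."""
    flagged = []
    for pod in pods:
        if pod.cpu_request_millicores <= 0:
            continue  # no request set, so there is no utilization ratio to compute
        utilization = pod.cpu_used_millicores / pod.cpu_request_millicores
        if utilization < threshold:
            flagged.append(pod.name)
    return flagged


# Illustrative data only.
pods = [
    PodUsage("api-7d9f", cpu_request_millicores=500, cpu_used_millicores=60),
    PodUsage("worker-5c2a", cpu_request_millicores=250, cpu_used_millicores=200),
]
print(overprovisioned(pods))
```

A single snapshot can mislead, so a real check would look at usage over a longer window (for example, a high percentile over several days) before lowering any request.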

Key Kubernetes Observability Concepts

Kubernetes Metrics Server

The Metrics Server is a critical component for gathering resource usage metrics such as CPU and memory usage from nodes and pods.

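It exposes the data through the Kubernetes Metrics API (metrics.k8s.io), which is consumed by the Horizontal Pod Autoscaler, kubectl top, and the Kubernetes dashboard. Monitoring systems such as Prometheus usually do not read from the Metrics Server; they scrape the kubelet and other targets directly.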
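Responses from the Metrics Server report usage as Kubernetes quantity strings such as `250m` or `64Mi`. The sketch below converts the common suffixes to plain numbers and totals the usage of one `PodMetrics` object. The `parse_quantity` and `pod_usage` helpers are hypothetical, the sample values are made up, and only a subset of the quantity grammar is handled.

```python
from decimal import Decimal

# Multipliers for the quantity suffixes that commonly appear in Metrics API
# responses (a subset of the full Kubernetes quantity grammar).
_SUFFIXES = {
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
}


def parse_quantity(value: str) -> Decimal:
    """Convert a quantity string such as '250m' or '64Mi' to a plain number."""
    # Try longer suffixes first so two-character suffixes take precedence.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if value.endswith(suffix):
            return Decimal(value[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(value)


def pod_usage(pod_metrics: dict) -> dict:
    """Sum CPU (cores) and memory (bytes) across the containers of a PodMetrics object."""
    containers = pod_metrics["containers"]
    cpu = sum(parse_quantity(c["usage"]["cpu"]) for c in containers)
    memory = sum(parse_quantity(c["usage"]["memory"]) for c in containers)
    return {"cpu_cores": float(cpu), "memory_bytes": int(memory)}


# Shaped like a PodMetrics object from metrics.k8s.io/v1beta1; values are illustrative.
sample = {
    "kind": "PodMetrics",
    "apiVersion": "metrics.k8s.io/v1beta1",
    "metadata": {"name": "nginx", "namespace": "default"},
    "containers": [
        {"name": "nginx", "usage": {"cpu": "250m", "memory": "64Mi"}},
        {"name": "exporter", "usage": {"cpu": "5000000n", "memory": "8192Ki"}},
    ],
}
print(pod_usage(sample))
```

In a live cluster the same object can be fetched with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/<namespace>/pods/<pod>`.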

Kubernetes API Server

The API Server is the central management point for Kubernetes. It's responsible for validating and processing API requests, and its performance can directly impact the responsiveness and stability of the entire system.

Monitoring the API Server is crucial for diagnosing issues related to control plane performance.
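One simple control plane health signal is the share of API requests that end in a 5xx response. The sketch below computes it from Prometheus text-format output, using the `apiserver_request_total` counter and its `code` label. The parser is deliberately minimal (it assumes label values contain no `}` characters), and the sample lines are made up.

```python
import re

_SAMPLE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)'
)
_LABEL = re.compile(r'(\w+)="([^"]*)"')


def error_ratio(metrics_text: str) -> float:
    """Share of apiserver_request_total counts whose HTTP code is 5xx."""
    total = errors = 0.0
    for line in metrics_text.splitlines():
        match = _SAMPLE.match(line)
        if not match or match["name"] != "apiserver_request_total":
            continue  # skips comments, blank lines, and other metrics
        labels = dict(_LABEL.findall(match["labels"]))
        value = float(match["value"])
        total += value
        if labels.get("code", "").startswith("5"):
            errors += value
    return errors / total if total else 0.0


# Illustrative scrape output, not taken from a real cluster.
sample = '''
# TYPE apiserver_request_total counter
apiserver_request_total{code="200",resource="pods",verb="GET"} 950
apiserver_request_total{code="201",resource="pods",verb="POST"} 30
apiserver_request_total{code="503",resource="pods",verb="GET"} 20
'''
print(f"{error_ratio(sample):.2%}")
```

Because counters are cumulative, this ratio covers the whole lifetime of the process. For alerting you would normally compare rates over a recent window in your monitoring system instead.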

Pod Lifecycle Events

Pods, the smallest deployable units in Kubernetes, have a lifecycle whose phases are Pending, Running, Succeeded, Failed, and Unknown.

Observability tools should track pod lifecycle events to detect issues like pod crashes, restarts, or misconfigurations that could affect application availability.
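As a sketch of what such a check might look like, the function below inspects a Pod object (parsed from JSON, for example from `kubectl get pod -o json`) and reports common trouble signs. The `pod_problems` name, the restart threshold, and the sample pod are assumptions for illustration; the field names follow the Pod status schema.

```python
def pod_problems(pod: dict, max_restarts: int = 3) -> list:
    """List human-readable problems found in a Pod object (a parsed JSON dict)."""
    status = pod.get("status", {})
    problems = []

    # A pod-level phase of Failed or Unknown is worth surfacing on its own.
    if status.get("phase") in ("Failed", "Unknown"):
        problems.append(f"phase is {status['phase']}")

    for cs in status.get("containerStatuses", []):
        # Containers stuck waiting usually carry a machine-readable reason.
        waiting = cs.get("state", {}).get("waiting")
        bad_reasons = ("CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull")
        if waiting and waiting.get("reason") in bad_reasons:
            problems.append(f"{cs['name']}: {waiting['reason']}")
        if cs.get("restartCount", 0) > max_restarts:
            problems.append(f"{cs['name']}: restarted {cs['restartCount']} times")
    return problems


# Illustrative pod, trimmed to the fields the function reads.
pod = {
    "metadata": {"name": "api"},
    "status": {
        "phase": "Running",
        "containerStatuses": [
            {"name": "app", "restartCount": 7,
             "state": {"waiting": {"reason": "CrashLoopBackOff"}}},
        ],
    },
}
print(pod_problems(pod))
```

Note that a crash-looping pod often still reports the Running phase, which is why the container statuses are checked separately.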

Kubernetes Network Observability

Monitoring the network between pods is just as important as monitoring the nodes themselves. Network issues can lead to degraded application performance, latency, or service outages.

Network policies in Kubernetes, along with observability tools like Cilium and Last9, can help track communication and identify issues in the networking layer.

Kubernetes Events

Events in Kubernetes provide valuable insight into what’s happening in the cluster, from pods being scheduled to nodes being marked as unhealthy.

These events can serve as a valuable source of information when troubleshooting cluster issues or identifying performance degradation.
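When troubleshooting, it often helps to group Warning events by reason to see which problem dominates. The sketch below works on event objects parsed from JSON (for example, the `items` of `kubectl get events -A -o json`). The `warning_summary` helper and the sample events are hypothetical.

```python
from collections import Counter


def warning_summary(events: list) -> list:
    """Count Warning events per (reason, involved object kind), most frequent first."""
    counts = Counter()
    for ev in events:
        if ev.get("type") != "Warning":
            continue
        kind = ev.get("involvedObject", {}).get("kind", "Unknown")
        # Repeated events may be collapsed into one object with a count field.
        counts[(ev.get("reason", "Unknown"), kind)] += ev.get("count") or 1
    return counts.most_common()


# Illustrative events, trimmed to the fields the function reads.
events = [
    {"type": "Warning", "reason": "FailedScheduling",
     "involvedObject": {"kind": "Pod"}, "count": 4},
    {"type": "Normal", "reason": "Scheduled", "involvedObject": {"kind": "Pod"}},
    {"type": "Warning", "reason": "NodeNotReady", "involvedObject": {"kind": "Node"}},
    {"type": "Warning", "reason": "FailedScheduling",
     "involvedObject": {"kind": "Pod"}, "count": 2},
]
for (reason, kind), count in warning_summary(events):
    print(f"{count:>3}  {kind:<5} {reason}")
```

Events are only retained by the cluster for a limited time, so exporting them to longer-term storage is worth considering if you rely on them for post-incident analysis.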

Understanding Kubernetes Observability Components

Core Kubernetes Metrics Pipeline

The Kubernetes metrics pipeline is the foundation of cluster observability:

    ┌───────────────────┐      ┌────────────────┐      ┌────────────────┐
    │ kubelet           │      │ metrics-server │      │ HorizontalPod  │
    │ /metrics/cadvisor ├─────►│                ├─────►│ Autoscaler     │
    └───────────────────┘      └────────────────┘      └────────────────┘

The diagram shows the core Kubernetes metrics pipeline, the basic structure for monitoring resource usage in a cluster:

- Kubelet: The kubelet, running on each node, collects resource metrics (like CPU, memory, and network usage) from the containers through its embedded cAdvisor and exposes them over HTTP endpoints such as /metrics/cadvisor.

- Metrics Server: The Metrics Server scrapes the kubelet on every node and aggregates the results, making this data available to other components in the Kubernetes ecosystem, such as the Horizontal Pod Autoscaler.

- Horizontal Pod Autoscaler (HPA): The HPA automatically scales the number of pods in a deployment based on resource usage metrics (e.g., CPU or memory). This ensures that the application can handle changing loads without manual intervention.

This pipeline ensures that Kubernetes clusters are continuously monitored and scaled based on real-time resource usage.
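The scaling decision at the end of this pipeline follows the formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric value / target metric value), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). A minimal sketch of that calculation follows; the function name and example numbers are illustrative, and the real controller also applies min/max replica bounds, stabilization windows, and handling for pods that are not ready.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """Core HPA calculation: scale proportionally to the metric ratio."""
    ratio = current_value / target_value
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_value=90, target_value=60))
# 4 replicas averaging 63% against a 60% target: within tolerance, no change.
print(desired_replicas(4, current_value=63, target_value=60))
```

The tolerance band is what keeps the autoscaler from adding and removing pods on every small fluctuation in the metric.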

Kubernetes Resource Metrics

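The manifest below sets CPU and memory requests and limits, which are the baseline that usage metrics are compared against, and adds annotations that ask Prometheus to scrape the pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      annotations:
        # Honored only if your Prometheus scrape config relabels on these annotations.
        prometheus.io/scrape: "true"
        # 9113 is the default port of nginx-prometheus-exporter; this assumes an exporter listens there.
        prometheus.io/port: "9113"
    spec:
      containers:
        - name: nginx
          image: nginx
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"

Setting Up Kubernetes Observability Stack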

1. Prometheus Operator Installation

Once the Prometheus Operator and its custom resource definitions are installed in the cluster, a Prometheus custom resource like the following deploys a Prometheus instance. It selects every ServiceMonitor labeled team: frontend:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      name: prometheus
      namespace: monitoring
    spec:
      serviceAccountName: prometheus
      serviceMonitorSelector:
        matchLabels:
          team: frontend
      resources:
        requests:
          memory: 400Mi
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000

2. Kubernetes Service Monitors

Monitor custom application services with this YAML configuration:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: app-monitor
      namespace: monitoring
      labels:
        team: frontend
    spec:
      selector:
        matchLabels:
          app: my-app
      endpoints:
        - port: metrics
          interval: 15s
          path: /metrics
      namespaceSelector:
        matchNames:
          - production

3. Kubernetes Events Monitoring

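A Python example for real-time cluster event tracking. It counts events by namespace, reason, and type, and exposes the counter for Prometheus to scrape (port 8000 is an arbitrary choice):

    from kubernetes import client, config, watch
    from prometheus_client import Counter, start_http_server

    k8s_events = Counter('kubernetes_events_total',
                         'Kubernetes Events',
                         ['namespace', 'reason', 'type'])

    def watch_k8s_events():
        v1 = client.CoreV1Api()
        w = watch.Watch()
        # Each streamed item is a dict; item['object'] holds the event object.
        for event in w.stream(v1.list_event_for_all_namespaces):
            obj = event['object']
            k8s_events.labels(
                namespace=obj.involved_object.namespace or '',
                reason=obj.reason or '',
                type=obj.type or ''
            ).inc()

    if __name__ == '__main__':
        config.load_incluster_config()  # use config.load_kube_config() outside a cluster
        start_http_server(8000)         # serve the counter on :8000/metrics
        watch_k8s_events()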

Implementing Pod-Level Observability

Custom Metrics API

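The APIService below registers the custom metrics API with the Kubernetes aggregation layer. It assumes a metrics adapter (for example, prometheus-adapter) is already running behind the custom-metrics-apiserver service in the monitoring namespace:

    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      name: v1beta1.custom.metrics.k8s.io
    spec:
      service:
        name: custom-metrics-apiserver
        namespace: monitoring
      group: custom.metrics.k8s.io
      version: v1beta1
      # Skips TLS verification of the adapter; prefer caBundle in production.
      insecureSkipTLSVerify: true
      groupPriorityMinimum: 100
      versionPriority: 100

Pod Metrics Collection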

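Go implementation for monitoring pod startup times. The function body is a minimal sketch: it lists pods once and records the time from creation to the latest Ready transition, which approximates startup time. Note that the pod_name label can grow to high cardinality in busy clusters:

    package main

    import (
    	"context"
    	"log"

    	"github.com/prometheus/client_golang/prometheus"
    	"github.com/prometheus/client_golang/prometheus/promauto"
    	corev1 "k8s.io/api/core/v1"
    	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
    	"k8s.io/client-go/kubernetes"
    )

    var podStartupLatency = promauto.NewHistogramVec(
    	prometheus.HistogramOpts{
    		Name:    "pod_startup_latency_seconds",
    		Help:    "Time taken for pod to become ready",
    		Buckets: []float64{1, 5, 10, 30, 60, 120, 300, 600},
    	},
    	[]string{"namespace", "pod_name"},
    )

    // monitorPodStartup observes, for each Ready pod, the time from creation to
    // its latest Ready transition (an approximation of startup time).
    func monitorPodStartup(clientset *kubernetes.Clientset) {
    	pods, err := clientset.CoreV1().Pods(metav1.NamespaceAll).List(context.Background(), metav1.ListOptions{})
    	if err != nil {
    		log.Printf("listing pods: %v", err)
    		return
    	}
    	for _, pod := range pods.Items {
    		for _, cond := range pod.Status.Conditions {
    			if cond.Type == corev1.PodReady && cond.Status == corev1.ConditionTrue {
    				latency := cond.LastTransitionTime.Sub(pod.CreationTimestamp.Time)
    				podStartupLatency.WithLabelValues(pod.Namespace, pod.Name).Observe(latency.Seconds())
    			}
    		}
    	}
    }

Advanced Kubernetes Observability Patterns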

Multi-Cluster Monitoring

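Configure multi-cluster monitoring with Prometheus and a Thanos sidecar. Each cluster's Prometheus carries a unique external label (here, cluster: us-west1) so that a global query layer can tell the clusters apart:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      name: prometheus-fed
    spec:
      replicas: 2
      externalLabels:
        cluster: us-west1
      thanos:
        # image replaces the deprecated baseImage field
        image: quay.io/thanos/thanos:v0.24.0
        version: v0.24.0
      serviceMonitorSelector:
        matchLabels:
          federation: enabled

Network Observability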

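Track pod communication with a Cilium policy. Keep in mind that a CiliumNetworkPolicy also enforces what it describes: once applied, egress from the selected pods is limited to the listed destinations and ports. The resulting flows, whether forwarded or dropped, can then be inspected with Hubble, Cilium's observability layer:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: network-observability
    spec:
      endpointSelector:
        matchLabels:
          app: monitored-app
      egress:
        - toEndpoints:
            - matchLabels:
                app: destination
          toPorts:
            - ports:
                - port: "80"
                  protocol: TCP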
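Once flow data is available, a common first question is which pod-to-pod paths are dropping traffic. The sketch below computes a drop rate per source and destination pair. The flat record shape (`source`, `destination`, `verdict`) is a simplified, hypothetical format rather than the actual export schema of any tool; the `FORWARDED` and `DROPPED` verdict names mirror the ones Hubble reports.

```python
from collections import defaultdict


def drop_rates(flows: list) -> dict:
    """Return the fraction of dropped flows per (source, destination) pair."""
    totals = defaultdict(int)
    dropped = defaultdict(int)
    for flow in flows:
        pair = (flow["source"], flow["destination"])
        totals[pair] += 1
        if flow["verdict"] == "DROPPED":
            dropped[pair] += 1
    return {pair: dropped[pair] / totals[pair] for pair in totals}


# Illustrative flow records in a simplified, hypothetical shape.
flows = [
    {"source": "monitored-app", "destination": "destination", "verdict": "FORWARDED"},
    {"source": "monitored-app", "destination": "destination", "verdict": "FORWARDED"},
    {"source": "monitored-app", "destination": "destination", "verdict": "FORWARDED"},
    {"source": "monitored-app", "destination": "destination", "verdict": "DROPPED"},
    {"source": "monitored-app", "destination": "payments", "verdict": "DROPPED"},
]
for (src, dst), rate in drop_rates(flows).items():
    print(f"{src} -> {dst}: {rate:.0%} dropped")
```

A pair with a 100% drop rate usually points to a policy that does not allow the traffic at all, while a partial drop rate is more likely to indicate port-level rules or intermittent failures.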

Cost Optimization

- Metric Retention Policies:

      retention: 30d
      retentionSize: 500GB
      tsdb:
        outOfOrderTimeWindow: 10m

- Label Management:

      metric_relabel_configs:
        - source_labels: [__name__]
          regex: 'container_.*'
          action: drop
        - source_labels: [kubernetes_namespace]
          regex: '^(kube-system|monitoring)$'
          action: keep

Conclusion

Implementing observability in Kubernetes requires careful planning due to the platform’s unique nature. Start with the basics of metrics collection, and as you scale, introduce more advanced monitoring techniques, always keeping scalability in mind.

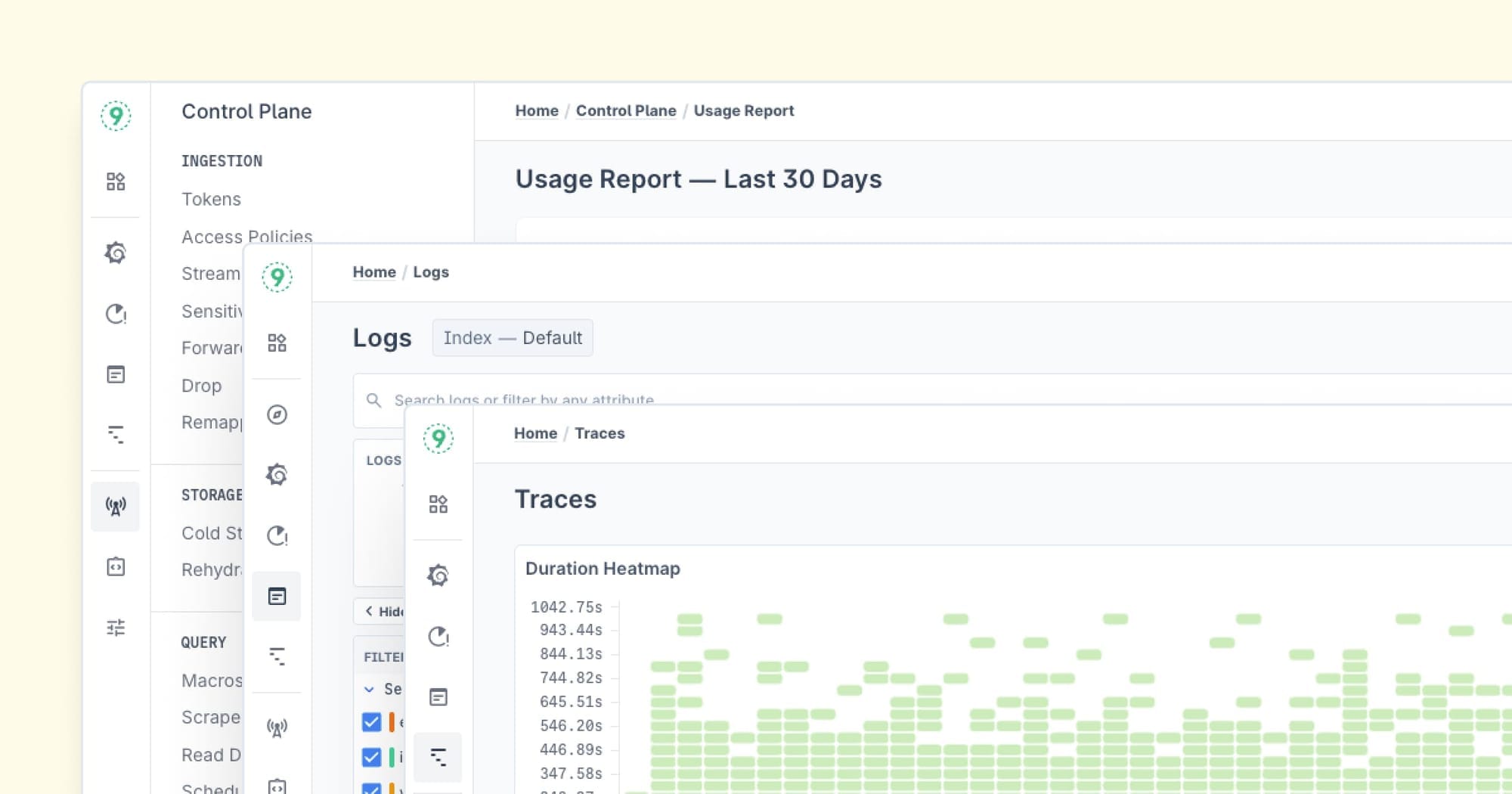

If you’re looking for a solution that consolidates metrics, logs, and traces, Last9 offers a unified approach.

At Last9, we focus on making telemetry data accessible and manageable. Our platform combines all your telemetry (metrics, traces, and logs) so you can monitor and troubleshoot your Kubernetes environment in one place.

Schedule a demo with us or try it for free!

FAQs

- What is Kubernetes observability?

  Kubernetes observability is the ability to track and understand the health and performance of applications running in Kubernetes using metrics, logs, and traces.

- Why is observability important for Kubernetes?

  It helps identify, troubleshoot, and optimize issues in dynamic, distributed environments, providing insights into microservices interactions and performance bottlenecks.

- What’s the difference between monitoring and observability?

  Monitoring is reactive and focuses on data collection, while observability is proactive and helps uncover why issues are happening by analyzing metrics, logs, and traces.

- How can Kubernetes observability improve troubleshooting?

  It provides deep insights into system behavior, helping to quickly pinpoint issues, reducing downtime, and speeding up root cause analysis.

- Can I use Kubernetes without third-party observability tools?

  Yes, but third-party tools like Prometheus and Grafana offer better scalability, visualization, and deeper insights than Kubernetes' built-in tools.

- How does observability help optimize Kubernetes costs?

  By providing insights into resource usage, observability helps identify overprovisioned resources and optimize scaling, leading to cost savings.

- What challenges come with Kubernetes observability?

  Key challenges include managing high cardinality data, dealing with complex configurations, and filtering through large amounts of data to find actionable insights.