Aug 24th, ‘22/6 min read

How to restart Kubernetes Pods with kubectl

This question keeps popping up, so we decided to write a simple reckoner on how to restart a Kubernetes Pod with kubectl.

In Kubernetes, Pods are the smallest deployable units of computing that you can create and manage. A Pod represents a single instance of a running process in a cluster and contains one or more containers that share the same storage and network resources. Pods are relatively ephemeral (temporary) rather than durable components, and, like containers, they have a defined lifecycle.

The lifecycle of a Pod starts in the Pending phase and moves to Running once at least one of its primary containers starts successfully. From there, it moves to either the Succeeded or the Failed phase, depending on whether any container in the Pod terminated in failure. While a Pod goes through this lifecycle, there are several scenarios where you might want to restart it to keep your cluster in its desired state.

This article discusses five scenarios where you might want to restart a Kubernetes Pod and walks you through four methods for restarting Pods with kubectl.

Five scenarios where you might want to restart a Pod

There are several reasons why you might want to restart a Pod. Here are five of them:

- Container Out of Memory (OOM) error: An Out of Memory error is one of the most common reasons for restarting a Pod. It happens when a container’s memory limits aren’t configured appropriately or the application behaves unpredictably. For example, suppose you set a 600Mi memory limit for a container, and it tries to allocate more memory than that. In that case, the container is terminated with an OOMKilled (Out of Memory) status. When this happens, you must restart your Pod after rightsizing the resource limits.

- The Pod is stuck in a terminating state: A Pod can be said to be stuck in a terminating state when all of its containers have terminated, but the Pod itself is never removed and keeps showing as Terminating. This usually happens when the Pod is on a Node that’s unexpectedly taken out of service, and the kube-scheduler and controller-manager can’t clean up all the Pods on that Node.

- A newly pushed container image: If you previously set the PodSpec imagePullPolicy to Always, restarting a Pod is an easy way to upgrade it to a newly pushed container image.

- Updated configurations and secrets: Restarting picks up changes to ConfigMaps and Secrets, especially those consumed as environment variables, which a container reads only when it starts.

- Corrupted internal state: The application running in the Pod has an internal state that gets corrupted and needs to be cleared.
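For the OOM scenario, it helps to confirm the cause before restarting and then rightsize the limit in the Deployment itself. The sketch below wraps both steps in two hypothetical shell functions; last_termination_reasons, raise_memory_limit, and any limit value you pass (for example 768Mi) are illustrations, not part of kubectl. The underlying kubectl get -o jsonpath and kubectl set resources commands are standard.

```shell
# last_termination_reasons: hypothetical helper that prints, for each container
# in a Pod, its name and the reason it last terminated (e.g. OOMKilled).
last_termination_reasons() {
  local pod="$1"
  kubectl get pod "$pod" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.lastState.terminated.reason}{"\n"}{end}'
}

# raise_memory_limit: hypothetical helper that sets a new memory limit in a
# Deployment's Pod template. Without --containers, kubectl applies it to every
# container in the template.
raise_memory_limit() {
  local deploy="$1" limit="$2"
  kubectl set resources deployment "$deploy" --limits=memory="$limit"
}
```

If last_termination_reasons <pod_name> prints OOMKilled next to a container name, you are in the first scenario. Because kubectl set resources changes the Pod template, the Deployment rolls out new Pods with the new limit as part of that change.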

You’ve seen some scenarios where you might want to restart a Pod. Next, you will learn how to restart Pods with kubectl.

Restarting Kubernetes pods with kubectl

By design, kubectl doesn’t have a direct command for restarting Pods, the way Docker provides docker restart <container_id> for containers. Because of this, to restart Pods with kubectl, you have to use one of the following methods:

- Restarting Kubernetes Pods by changing the number of replicas with the kubectl scale command

- Zero-downtime restarts with the kubectl rollout restart command

- Automatic restarts by updating the Pod’s environment variable

- Restarting Pods by deleting them

Prerequisites

Before you learn how to use each of the above methods, ensure you have the following prerequisites:

- A Kubernetes cluster. The demo in this article was done using minikube, a single-node Kubernetes cluster.

- The kubectl command-line tool, configured to communicate with the cluster.

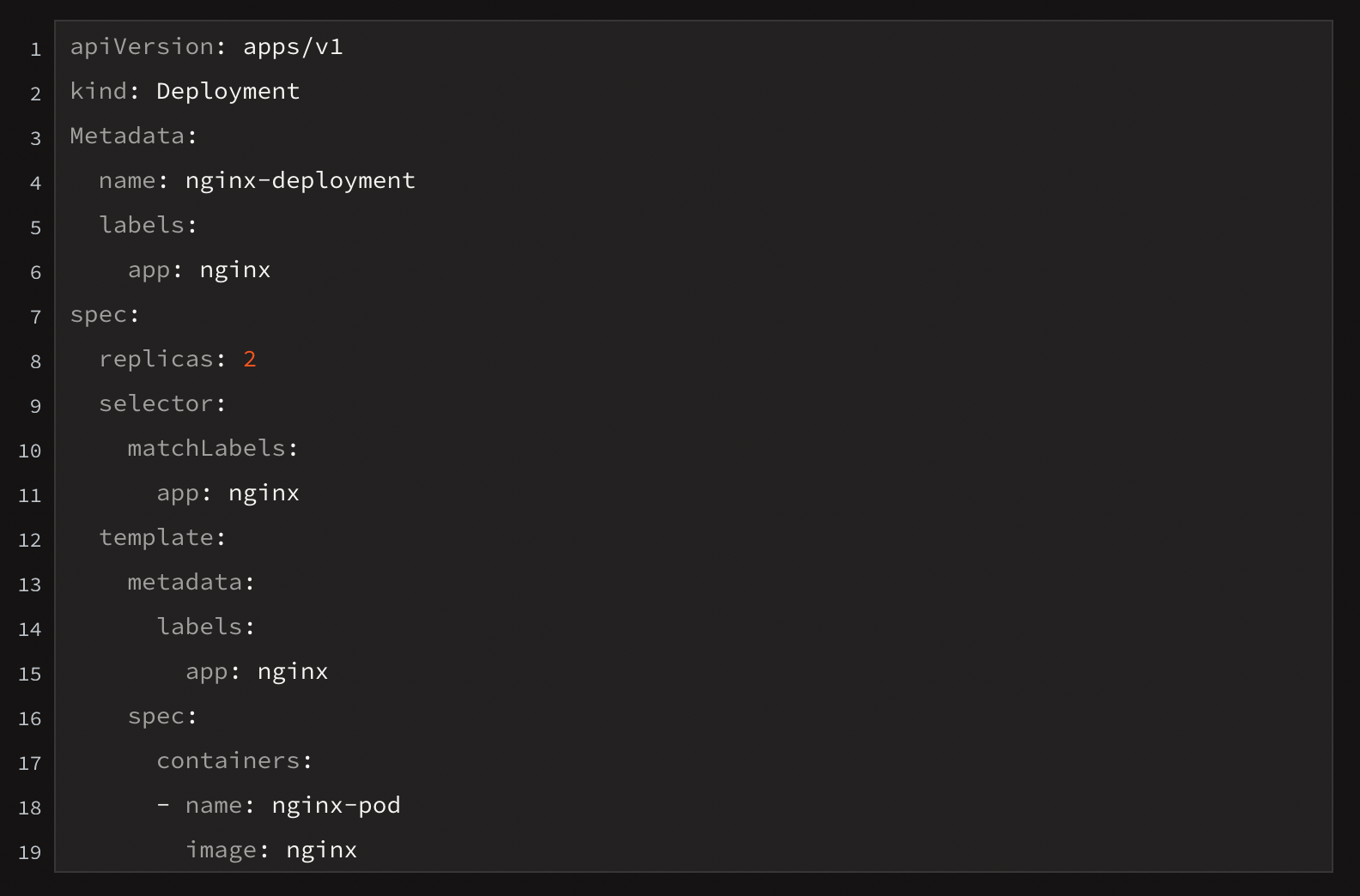

For demo purposes, create an nginx-deployment.yaml file in any directory, with replicas set to 2, using the following YAML configuration:

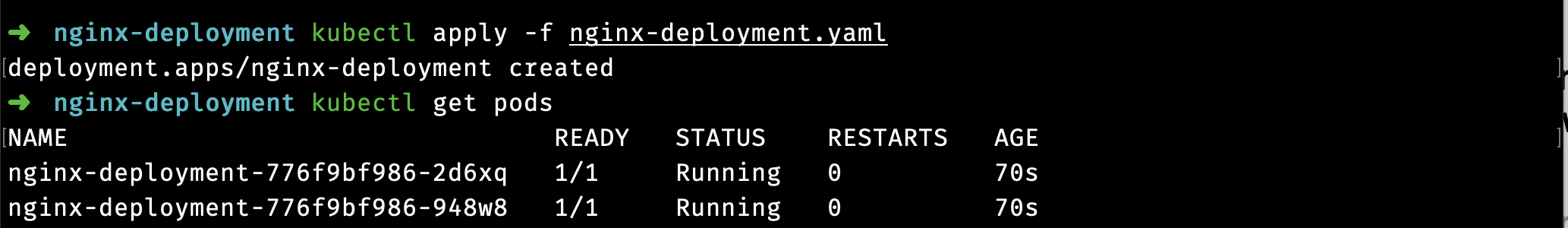
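Since the manifest is only shown as a screenshot, here is a minimal sketch that writes an equivalent file from the shell. It assumes the stock nginx image with the nginx:1.14.2 tag used in the Kubernetes documentation’s Deployment example, plus an app: nginx label; the image tag and labels in the screenshot may differ.

```shell
# Write a minimal nginx Deployment manifest with 2 replicas.
# Assumption: the image tag (nginx:1.14.2) and the app: nginx label are
# illustrative and may not match the manifest in the screenshot.
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
EOF
```

The selector’s matchLabels must match the Pod template’s labels, otherwise the API server rejects the Deployment.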

In your terminal, change to the directory where you saved the deployment file, and run:

$ kubectl apply -f nginx-deployment.yaml

The command above creates the nginx-deployment Deployment with two Pods. To verify the number of Pods, run the kubectl get pods command.
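If you’d rather wait until both Pods are available before moving on, this sketch wraps kubectl apply with kubectl rollout status, which blocks until the Deployment finishes rolling out. The function name deploy_nginx and the 120s timeout are illustrative choices, and the app=nginx selector assumes your manifest uses that label.

```shell
# deploy_nginx: hypothetical helper (not part of kubectl) that applies the
# manifest and waits for the Deployment's Pods to become available.
deploy_nginx() {
  local manifest="${1:-nginx-deployment.yaml}"
  kubectl apply -f "$manifest" || return
  # Blocks until the rollout completes or the timeout expires.
  kubectl rollout status deployment/nginx-deployment --timeout=120s || return
  # Assumes the Pods carry the app=nginx label from the manifest.
  kubectl get pods -l app=nginx
}
```

Run it as deploy_nginx, or pass a different manifest path as the first argument.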

Now you have the Pods of the Nginx deployment running. Next, you will use each of the methods outlined earlier to restart the Pods.

Restarting Kubernetes Pods by changing the number of replicas

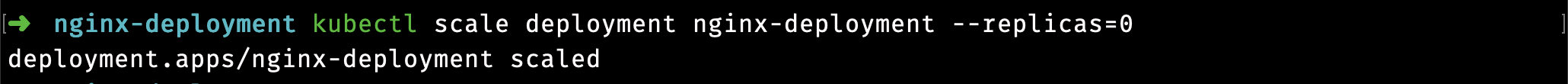

In this method of restarting Kubernetes Pods, you scale the Deployment’s replicas down to zero, which stops and terminates all the Pods. Then you scale back up to the desired count, which creates new Pods.

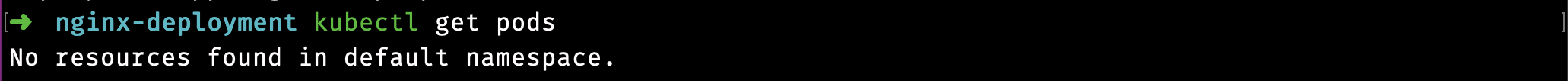

To scale down the Nginx deployment replicas you created, run the following kubectl scale command:

$ kubectl scale deployment nginx-deployment --replicas=0

The command above prints output indicating that the deployment has been scaled, as shown in the image below.

To confirm that the Pods were stopped and terminated, run kubectl get pods. Once the Pods finish terminating, you should get the “No resources found in default namespace.” message.

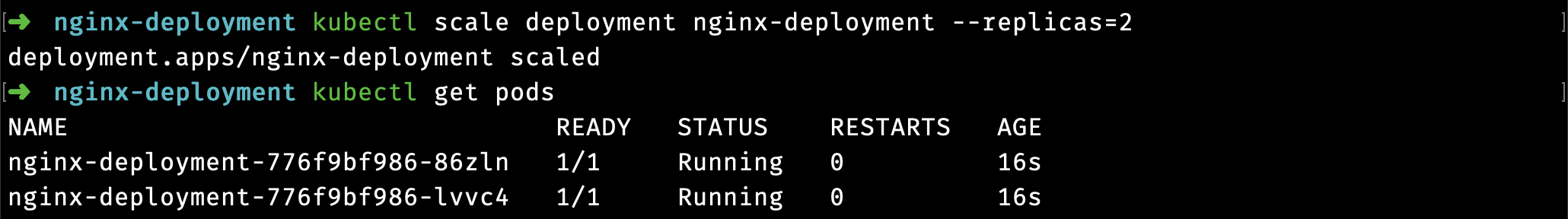

To scale up the replicas, run the same kubectl scale command, but this time with --replicas=2:

$ kubectl scale deployment nginx-deployment --replicas=2

After running the above command, verify the number of Pods running with:

$ kubectl get pods

And you should see each Pod back up and running after restarting, as in the image below.
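The scale-down and scale-up steps can be combined into one script. This sketch, a hypothetical restart_by_scaling function rather than a kubectl command, reads the Deployment’s current replica count instead of hardcoding 2, waits until the old Pods are gone (the same check you did by hand with kubectl get pods) so the scale-up can’t race the scale-down, and then scales back up. The app=nginx default selector and the roughly two-minute wait are assumptions.

```shell
# restart_by_scaling: hypothetical helper (not a kubectl command) that restarts
# a Deployment's Pods by scaling to zero and back. Expect downtime while the
# replica count is 0.
# Usage: restart_by_scaling <deployment> [label-selector]
restart_by_scaling() {
  local deploy="$1"
  local selector="${2:-app=nginx}"  # assumption: Pods carry the app=nginx label
  local replicas tries=0

  # Remember the current replica count instead of hardcoding it.
  replicas="$(kubectl get deployment "$deploy" -o jsonpath='{.spec.replicas}')" || return
  kubectl scale deployment "$deploy" --replicas=0 || return

  # Wait until the old Pods are gone, giving up after about two minutes.
  while [ -n "$(kubectl get pods -l "$selector" -o name 2>/dev/null)" ]; do
    tries=$((tries + 1))
    if [ "$tries" -ge 60 ]; then
      echo "timed out waiting for Pods to terminate" >&2
      return 1
    fi
    sleep 2
  done

  kubectl scale deployment "$deploy" --replicas="$replicas" || return
  # Block until the new Pods are available.
  kubectl rollout status deployment/"$deploy"
}
```

For the demo, run restart_by_scaling nginx-deployment.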

Zero-downtime restarts with rollout restart

In the previous method, you scaled the number of replicas down to zero to restart the Pods, which caused an outage while no Pods were running. To restart without an outage, use the kubectl rollout restart command, which replaces the Pods gradually according to the Deployment’s rolling update strategy, so some Pods remain available throughout.

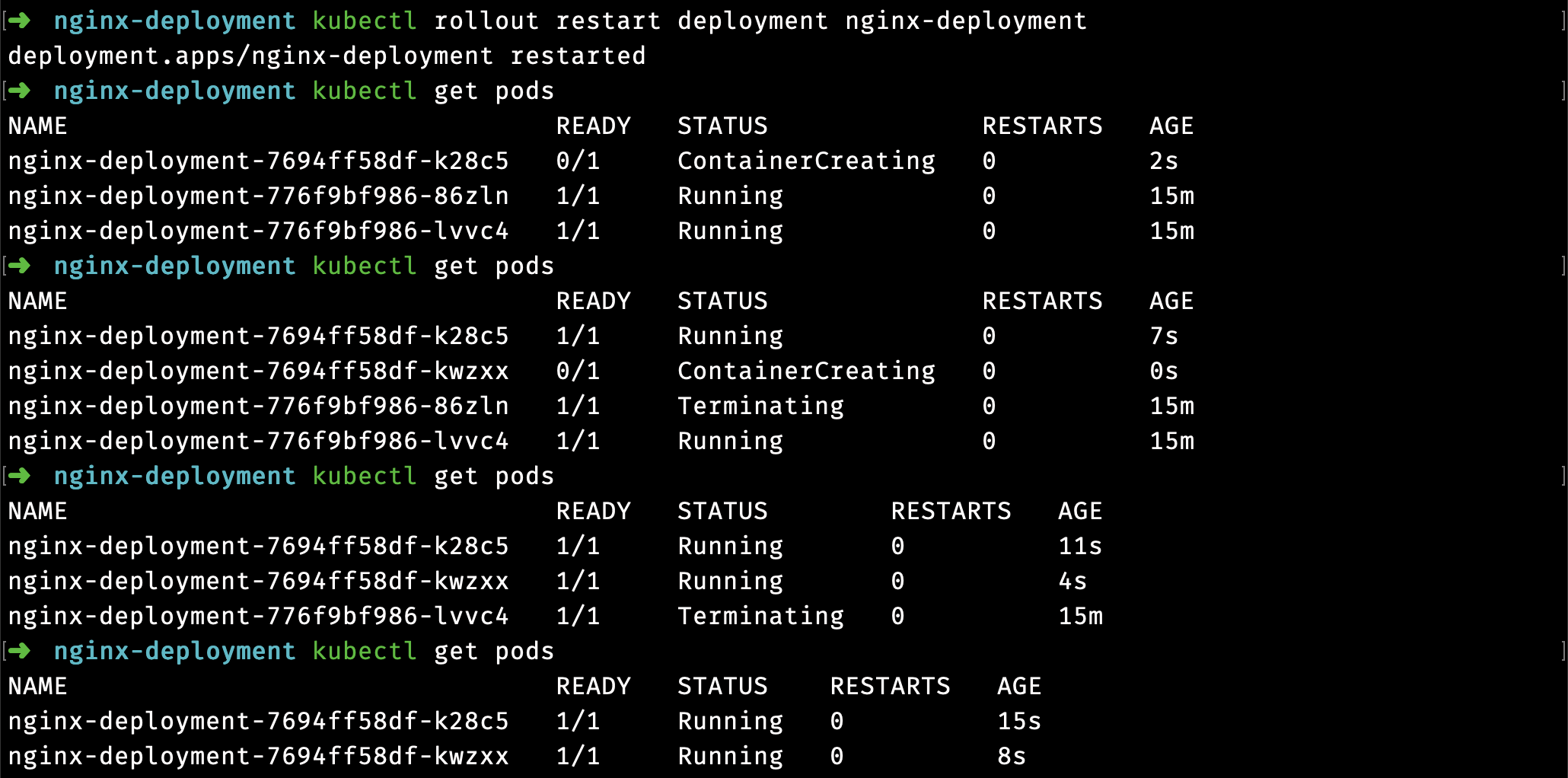

To use rollout restart on your Nginx deployment, run:

$ kubectl rollout restart deployment nginx-deployment

Now to view the Pods restarting, run:

$ kubectl get pods

Notice in the image below that Kubernetes creates a new Pod and terminates each of the previous ones only after its replacement reaches Running status. Because old Pods keep serving until new ones are up, this restart method avoids downtime.
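Under the hood, kubectl rollout restart stamps a kubectl.kubernetes.io/restartedAt annotation on the Deployment’s Pod template, which triggers an ordinary rolling update. Instead of polling kubectl get pods, you can let kubectl rollout status wait for that update to finish. The helper name restart_by_rollout and the 120s timeout below are hypothetical.

```shell
# restart_by_rollout: hypothetical helper that triggers a rolling restart and
# blocks until the replacement Pods are available (or the timeout expires).
restart_by_rollout() {
  local deploy="$1"
  kubectl rollout restart deployment "$deploy" || return
  kubectl rollout status deployment/"$deploy" --timeout=120s
}
```

Run it as restart_by_rollout nginx-deployment. Because rollout status exits with a non-zero status when the timeout expires, the helper also works as a step in a script.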

Automatic restarts by updating the Pod’s environment variable

So far, you’ve learned two ways of restarting Pods in Kubernetes: changing the number of replicas and using rollout restart. Both methods work, but with each of them you restart the Pods explicitly.

With this method, updating an environment variable in the Deployment’s Pod template automatically triggers a rollout that restarts the Pods.

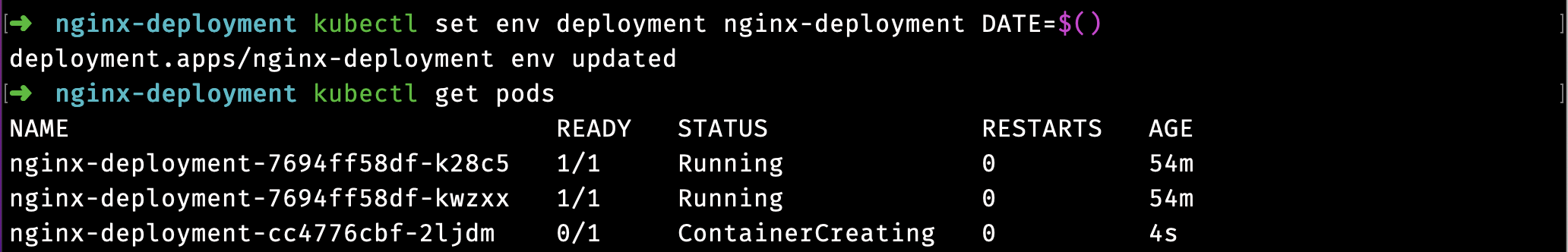

To update the environment variables of the Pods in your Nginx deployment, run the following:

$ kubectl set env deployment nginx-deployment DATE=$()

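The command above adds a DATE environment variable with an empty value to the Pods ($() is an empty command substitution, so the shell passes DATE= to kubectl). Run kubectl get pods to see the Pods restarting, similar to the rollout restart method. Keep in mind that running the exact same command again won’t restart the Pods, because the Pod template doesn’t change; to restart again, set DATE to a different value.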

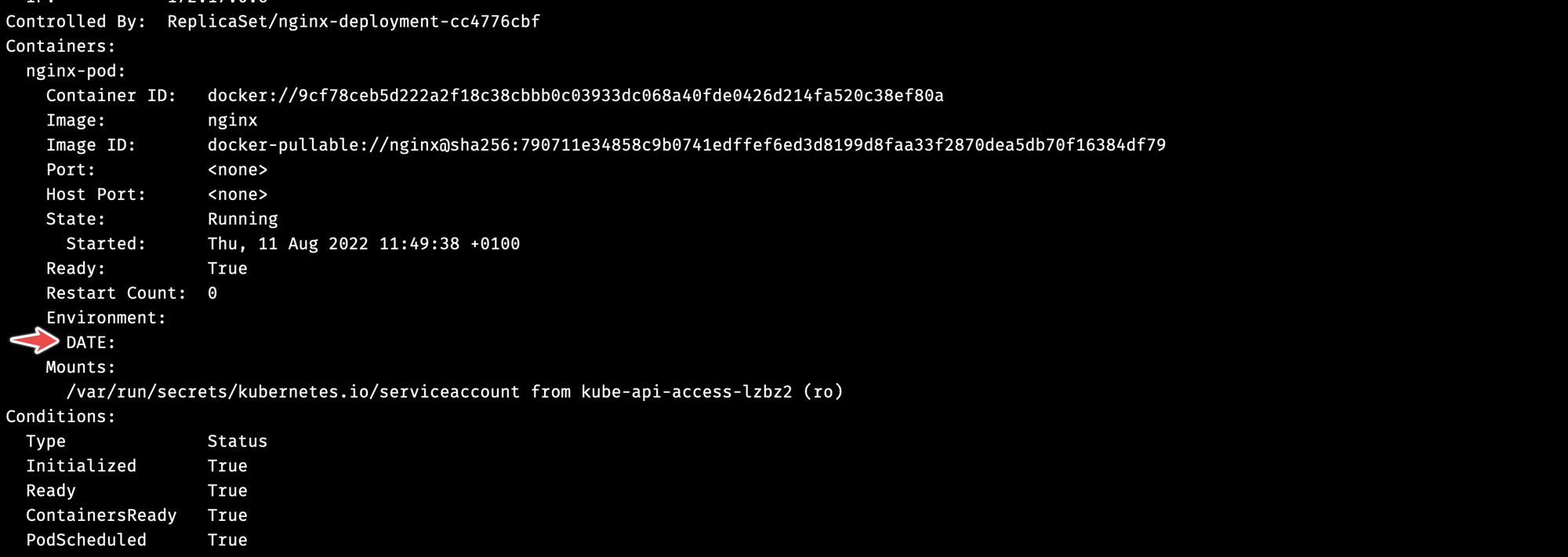

You can verify that each Pod’s DATE environment variable is empty with the kubectl describe command:

$ kubectl describe pod <pod_name>

After running the above command, you should see the DATE variable with an empty value, like in the image below.
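Because a Deployment only rolls out new Pods when its Pod template changes, reusing the same value (such as the empty DATE=$() above) won’t restart the Pods a second time. A common workaround is to set the variable to something that changes on every run, such as the current Unix timestamp. The helper name restart_by_env is hypothetical, and DATE is simply the variable name from this article.

```shell
# restart_by_env: hypothetical helper that forces a new rollout by setting the
# DATE environment variable to the current Unix timestamp. Because the value
# differs on each call (at most once per second), the Pod template changes and
# the Deployment rolls out new Pods.
restart_by_env() {
  local deploy="$1"
  kubectl set env deployment "$deploy" DATE="$(date +%s)" || return
  kubectl rollout status deployment/"$deploy"
}
```

You can list the variables currently set on the Deployment with kubectl set env deployment nginx-deployment --list.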

Restarting Pods by deleting them

Because Kubernetes is declarative, when you delete a Pod that’s part of a ReplicaSet or Deployment, a replacement is created automatically: the ReplicaSet notices that the number of Pods has dropped below its target replica count and creates a new one.

To delete a Pod, use the following command:

$ kubectl delete pod <pod_name>

Though this method works quickly, it’s not recommended unless you have a failed or misbehaving Pod or set of Pods. For regular restarts, such as picking up updated configurations, it’s better to use the kubectl scale or kubectl rollout commands designed for that use case.

To delete all failed Pods with this restart technique, use this command:

$ kubectl delete pods --field-selector=status.phase=Failed
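Two variations on this method can be handy. The first deletes every Pod matching a label selector at once, which, unlike rollout restart, can briefly leave the Deployment with no ready Pods. The second force-deletes a Pod stuck in the Terminating state described in the scenarios earlier. Both function names are hypothetical, and the app=nginx selector in the usage below assumes the label from your manifest.

```shell
# delete_pods_by_label: hypothetical helper that deletes every Pod matching a
# label selector; the owning ReplicaSet then creates replacements.
delete_pods_by_label() {
  local selector="$1"
  kubectl delete pods -l "$selector"
}

# force_delete_pod: hypothetical helper for a Pod stuck in Terminating.
# --grace-period=0 --force removes the Pod object immediately, without waiting
# for the kubelet to confirm its containers stopped, so use it with care.
force_delete_pod() {
  local pod="$1"
  kubectl delete pod "$pod" --grace-period=0 --force
}
```

For example, delete_pods_by_label app=nginx restarts both demo Pods, and force_delete_pod <pod_name> clears a single stuck Pod.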

Cleaning up

Clean up the entire setup by deleting the deployment with the command below:

$ kubectl delete deployment nginx-deployment
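Alternatively, you can delete by manifest, which removes every object defined in nginx-deployment.yaml (here, just the Deployment). This sketch, a hypothetical cleanup_demo function, does that and then removes the local file.

```shell
# cleanup_demo: hypothetical helper that deletes every object defined in the
# manifest and then removes the manifest file itself.
cleanup_demo() {
  local manifest="${1:-nginx-deployment.yaml}"
  kubectl delete -f "$manifest" || return
  rm -f -- "$manifest"
}
```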

Conclusion

This article discussed five scenarios where you might want to restart Kubernetes Pods and walked you through four methods for doing so with kubectl. There’s much more to kubectl; to learn more, check out the kubectl commands reference.
